Real-world data sets often contain anomalies, or outlier data points. Anomalies may be caused by data corruption or by experimental or human error. Because anomalies can degrade a model’s performance, training a robust data science model usually means finding and handling them first. That’s what anomaly detection does.

Anomaly Detection Algorithm Techniques to Know

- Isolation forest

- Local outlier factor

- Robust covariance

- One-class support vector machine (SVM)

- One-class SVM with stochastic gradient descent (SGD)

- K-means clustering

- Long short-term memory

- Angle-based outlier detection

In this article, we will discuss eight anomaly detection algorithms and compare the performance of five of them on a random sample of data.

What Is Anomaly Detection?

Anomalies are data points that stand out from the other data points in the data set and don’t conform to the normal behavior in the data. These observations deviate from the data set’s normal behavioral patterns.

Anomaly detection is a data processing technique, most often unsupervised, that detects anomalies within a data set. Anomalies can be broadly classified into three categories:

- Outliers: Short or small anomalous patterns that appear in a non-systematic way in data collection.

- Change in events: Systematic or sudden change from the previous normal behavior.

- Drifts: Slow, unidirectional long-term change in the data.

Anomaly detection is very useful for detecting fraudulent transactions, for disease detection and for handling case studies with high class imbalance. Anomaly detection techniques can also be used to build more robust data science models.

How Does Anomaly Detection Work?

Simple statistical techniques such as the mean, median and quantiles can be used to detect anomalous values of a single feature (univariate anomalies) in the data set. Various data visualization and exploratory data analysis techniques can also be used to detect anomalies.
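As a minimal sketch of the quantile approach, the snippet below flags values that fall more than 1.5 interquartile ranges outside the middle 50 percent of the data. The function name `iqr_outliers`, the multiplier `k=1.5` and the sample readings are illustrative assumptions, not something prescribed by the article.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical sensor readings with one obviously odd value.
readings = [10, 12, 11, 13, 12, 11, 10, 12, 95]
print(iqr_outliers(readings))  # prints [95]
```

Quantiles are less affected by extreme values than the mean and standard deviation, which is why this rule stays usable even when the outlier itself is very large.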

Anomaly Detection Algorithms to Know

Here are some unsupervised machine learning algorithms for detecting anomalies. A later section compares the performance of several of them on random sample data sets.

1. Isolation Forest

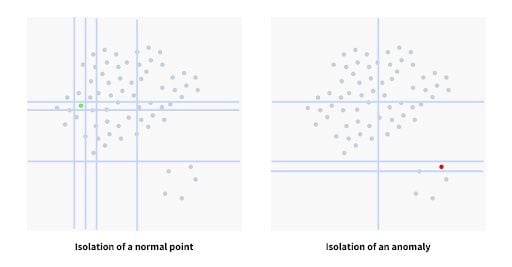

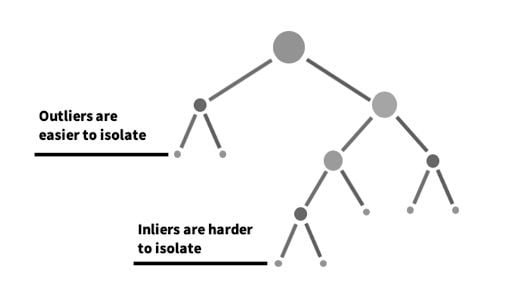

Isolation forest is an unsupervised anomaly detection algorithm that uses an ensemble of randomly built decision trees, similar in spirit to a random forest, to detect outliers in the data set. Each tree repeatedly splits the data on a randomly chosen feature and split value until each observation is isolated from the others.

Usually, the anomalies lie away from the cluster of data points, so it’s easier to isolate the anomalies from the regular data points.

In the images above, the regular data points require a comparatively larger number of partitions than an anomaly data point.

An anomaly score, based on the average number of splits needed to isolate a point, is computed for every data point. Any point whose anomaly score is greater than the threshold value can be considered an anomaly.
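The idea can be tried out with scikit-learn’s `IsolationForest`. This is a sketch on synthetic data: the cluster parameters, the three planted outliers and the `contamination` value are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Synthetic data (assumed for illustration): one tight cluster plus 3 far-away points.
rng = np.random.RandomState(42)
inliers = rng.normal(loc=0.0, scale=0.5, size=(200, 2))
planted = np.array([[4.0, 4.0], [-4.0, 3.5], [3.5, -4.0]])
X = np.vstack([inliers, planted])

# contamination is the assumed share of anomalies; it sets the score threshold.
model = IsolationForest(n_estimators=100, contamination=0.02, random_state=42)
labels = model.fit_predict(X)    # +1 = inlier, -1 = anomaly
scores = model.score_samples(X)  # scikit-learn convention: LOWER means more abnormal

print("labels of planted points:", labels[-3:])
print("number of points flagged:", int((labels == -1).sum()))
```

Note that scikit-learn reports the negative of the anomaly score described above, so in this library the anomalies are the points with the lowest values of `score_samples`.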

2. Local Outlier Factor

Local outlier factor is another anomaly detection technique that considers the density of data points to decide whether a point is an anomaly or not. The local outlier factor computes an anomaly score that measures how isolated the point is with respect to its surrounding neighborhood. It compares the local density around a point with the local densities around its nearest neighbors, so a point whose density is much lower than that of its neighbors gets a high anomaly score.
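A short sketch with scikit-learn’s `LocalOutlierFactor` shows the density comparison in practice. The synthetic data, `n_neighbors=20` and `contamination=0.02` are assumptions made for the example.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

# Synthetic data (assumed for illustration): one dense cluster plus 3 isolated points.
rng = np.random.RandomState(42)
inliers = rng.normal(loc=0.0, scale=0.5, size=(200, 2))
planted = np.array([[4.0, 4.0], [-4.0, 3.5], [3.5, -4.0]])
X = np.vstack([inliers, planted])

lof = LocalOutlierFactor(n_neighbors=20, contamination=0.02)
labels = lof.fit_predict(X)  # +1 = inlier, -1 = anomaly

# negative_outlier_factor_ stores -LOF; flip the sign to get the usual LOF value.
# Values near 1 mean "as dense as the neighbors"; much larger values mean isolated.
lof_values = -lof.negative_outlier_factor_

print("LOF of planted points:", np.round(lof_values[-3:], 2))
print("labels of planted points:", labels[-3:])
```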

3. Robust Covariance

For independent Gaussian features, simple statistical techniques can be employed to detect anomalies in the data set. For a Gaussian or normal distribution, the data points lying more than three standard deviations from the mean can be considered anomalies.
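The three-standard-deviation rule for a single feature can be sketched in a few lines of NumPy. The synthetic measurements and the planted value of 120 are assumptions for illustration.

```python
import numpy as np

# Synthetic feature (assumed): 500 roughly Gaussian values plus one extreme reading.
rng = np.random.RandomState(0)
x = np.append(rng.normal(loc=50.0, scale=5.0, size=500), 120.0)

# z-score: how many standard deviations each value lies from the mean.
z = (x - x.mean()) / x.std()
is_anomaly = np.abs(z) > 3

print("planted value flagged:", bool(is_anomaly[-1]))
print("number of values flagged:", int(is_anomaly.sum()))
```

Because the mean and standard deviation are themselves pulled by extreme values, this rule works best when anomalies are rare relative to the sample size.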

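If every feature in a data set is Gaussian, then the statistical approach can be generalized by defining a hyper-ellipsoid that covers most of the regular data points. The shape of the ellipsoid comes from a robust estimate of the data’s covariance, one that is not easily distorted by the outliers themselves. The data points that lie far outside the ellipsoid can be considered anomalies.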
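One way to try this is scikit-learn’s `EllipticEnvelope`, which fits a robust covariance estimate and flags points by their Mahalanobis distance. The correlated synthetic data, the two planted points and `contamination=0.01` below are assumptions for the sketch.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope

# Synthetic data (assumed): two correlated Gaussian features plus 2 points
# that sit against the direction of the correlation.
rng = np.random.RandomState(0)
cov = [[1.0, 0.8], [0.8, 1.0]]
inliers = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=300)
planted = np.array([[3.0, -3.0], [-3.5, 3.5]])
X = np.vstack([inliers, planted])

envelope = EllipticEnvelope(contamination=0.01, random_state=0)
labels = envelope.fit_predict(X)  # +1 = inlier, -1 = anomaly

# Squared Mahalanobis distance to the robust center: large values lie
# far outside the fitted ellipse.
d2 = envelope.mahalanobis(X)

print("labels of planted points:", labels[-2:])
print("squared distances of planted points:", np.round(d2[-2:], 1))
```

The planted points are not extreme on either feature alone, which is the case where an ellipse that follows the correlation helps more than per-feature thresholds.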

4. One-Class Support Vector Machine (SVM)

A regular support vector machine algorithm tries to find a hyperplane that best separates two classes of data points. In a one-class SVM, the training data is treated as a single class, and the task is to learn a boundary that encloses the cluster of regular data points and separates it from the anomalies.
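A brief sketch with `sklearn.svm.OneClassSVM`: the model is fitted on data assumed to be mostly regular and then asked about two new points. The training data, `nu=0.05` and the two query points are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.svm import OneClassSVM

# Training data (assumed): a single cluster of mostly regular points.
rng = np.random.RandomState(42)
X_train = rng.normal(loc=0.0, scale=0.5, size=(200, 2))

# nu is an upper bound on the fraction of training points treated as outliers.
ocsvm = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05)
ocsvm.fit(X_train)

# One point near the center of the cluster, one far away from it.
X_new = np.array([[0.1, -0.2], [4.0, 4.0]])
pred = ocsvm.predict(X_new)  # +1 = inside the learned boundary, -1 = outside

print(pred)
```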

5. One-Class SVM With Stochastic Gradient Descent (SGD)

This approach solves the linear one-class SVM using stochastic gradient descent. The implementation is meant to be used with a kernel approximation technique to obtain results similar to sklearn.svm.OneClassSVM, which uses a Gaussian kernel by default.

6. K-Means Clustering Algorithm

A k-means clustering algorithm organizes data points into k clusters by assigning each point to the cluster whose center is nearest. Points in the same cluster are therefore similar to one another under the chosen distance measure. Because every point is assigned to some cluster, outliers are identified as the data points that lie unusually far from their nearest cluster center.
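A minimal sketch of distance-based flagging with scikit-learn’s `KMeans` follows. The two synthetic clusters, the two stray points and the 99th-percentile threshold are assumptions for illustration; in practice the threshold is a judgment call.

```python
import numpy as np
from sklearn.cluster import KMeans

# Synthetic data (assumed): two tight clusters plus 2 stray points.
rng = np.random.RandomState(0)
cluster_a = rng.normal(loc=[0.0, 0.0], scale=0.3, size=(100, 2))
cluster_b = rng.normal(loc=[5.0, 5.0], scale=0.3, size=(100, 2))
strays = np.array([[2.5, 2.5], [0.0, 5.0]])
X = np.vstack([cluster_a, cluster_b, strays])

kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)

# transform() gives the distance from each point to every cluster center;
# the minimum is the distance to the point's own (nearest) center.
dist_to_center = kmeans.transform(X).min(axis=1)

# Flag the points whose distance exceeds the 99th percentile (assumed cutoff).
threshold = np.percentile(dist_to_center, 99)
is_outlier = dist_to_center > threshold

print("stray points flagged:", is_outlier[-2:])
```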

7. Long Short-Term Memory (LSTM)

Long short-term memory is a type of recurrent neural network that can retain information from earlier steps in a sequence. This enhances its ability to spot patterns in data that follow a sequential order. When analyzing time series, an LSTM can learn trends and normal behavioral patterns and label any data points that deviate strongly from these patterns as outliers.

8. Angle-Based Outlier Detection (ABOD)

Angle-based outlier detection identifies unusual data by measuring the angles between data points. For each point, the algorithm measures the angles formed between that point and every pair of other points, then calculates the variance of those angles. A point inside a cluster sees its neighbors in all directions, so inliers have higher variance values, while a point far from the cluster sees all other points within a narrow range of directions, so outliers have lower ones. Once a threshold is established, researchers can pinpoint outliers based on whether their variance falls below this threshold.
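The variance-of-angles idea can be sketched directly in NumPy. This is a simplified, naive version: it uses the distance-weighted angle term from the ABOD literature but an unweighted variance, and it loops over every pair, so it only suits small data sets. The function name `abod_scores` and the synthetic data are assumptions for illustration.

```python
import itertools

import numpy as np


def abod_scores(X):
    """Variance of distance-weighted angle terms per point (naive, O(n^3)).

    Lower scores suggest outliers. Assumes no duplicate points.
    """
    n = len(X)
    scores = np.empty(n)
    for i in range(n):
        # Vectors from point i to every other point.
        vectors = np.delete(X, i, axis=0) - X[i]
        terms = [
            np.dot(a, b) / (np.dot(a, a) * np.dot(b, b))
            for a, b in itertools.combinations(vectors, 2)
        ]
        scores[i] = np.var(terms)
    return scores


# Synthetic data (assumed): 30 clustered points plus one far-away point.
rng = np.random.RandomState(1)
X = np.vstack([rng.normal(0.0, 1.0, size=(30, 2)), [[6.0, 6.0]]])

scores = abod_scores(X)
print("index with the lowest score:", int(np.argmin(scores)))
```

Dividing by the squared lengths makes distant pairs contribute less, which further lowers the score of a point that is far from everything else.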

Which Anomaly Detection Algorithm Should You Use?

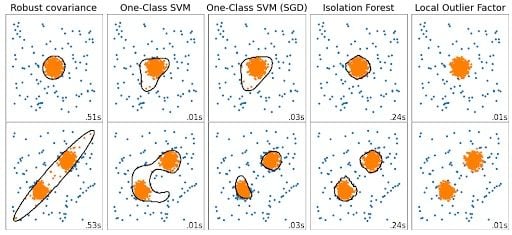

Five anomaly detection algorithms are trained on two sample data sets, shown in row 1 and row 2.

One-class SVM tends to overfit a bit, whereas the other algorithms perform well with the sample data set.

Advantages of Using an Anomaly Detection Algorithm

Anomaly detection algorithms are very useful for fraud detection or disease detection case studies where the distribution of the target class is highly imbalanced. Anomaly detection algorithms can also help improve the performance of the model by removing anomalies from the training sample.

Apart from the above-discussed machine learning algorithms, data scientists can always employ advanced statistical techniques to handle anomalies.

Frequently Asked Questions

What is anomaly detection?

Anomaly detection is the practice of analyzing a data set to identify data points that don’t follow general trends or normal behavior in the data. Removing anomalies that stem from errors can improve the quality and accuracy of the data set.

What are the three types of anomaly detection?

The three types of anomaly detection are unsupervised, semi-supervised and supervised.

What are examples of anomaly detection?

Common real-life examples of anomaly detection include algorithms that help financial institutions detect fraudulent transactions and alert medical professionals when diseases are identified in patients. Anomaly detection algorithms may also be used in factory settings to support predictive maintenance for equipment.