Machine learning models are categorized as either supervised or unsupervised. If it’s a supervised model, it’s then sub-categorized as a regression or classification model.

9 Machine Learning Models to Know

- Linear Regression

- Decision tree

- Random forest

- Neural network

- Logistic regression

- Support vector machine

- Naive Bayes

- Clustering

- Principal component analysis

We’ll go over what these terms mean and the corresponding models that fall into each category.

Supervised Machine Learning Models Explained

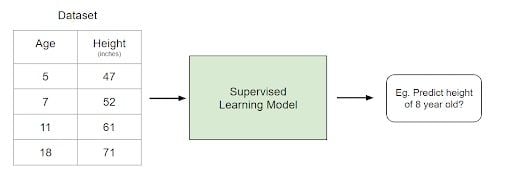

Supervised learning involves learning a function that maps an input to an output based on example input-output pairs.

For example, if I had a data set with two variables, age (input) and height (output), I could implement a supervised learning model to predict the height of a person based on their age.

Within supervised learning, there are two sub-categories: regression and classification.

Regression Models for Machine Learning

In regression models, the output is continuous. Below are some of the most common types of regression models.

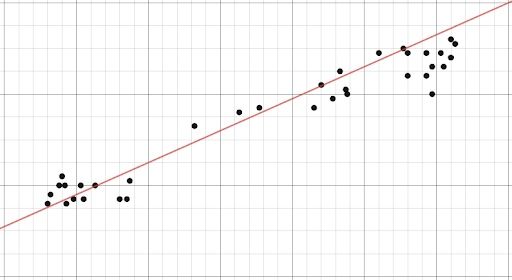

Linear Regression

The idea of linear regression is simply finding a line that best fits the data. Extensions of linear regression include multiple linear regression, or finding a plane of best fit, and polynomial regression, or finding a curve of best fit.

Decision Tree

Decision trees are a popular model, used in operations research, strategic planning and machine learning. Each square in a decision tree is called a node, and the more nodes you have, the more accurate your decision tree will generally be. The last nodes of the decision tree, where a decision is made, are called the leaves of the tree. Decision trees are intuitive and easy to build but fall short when it comes to accuracy.

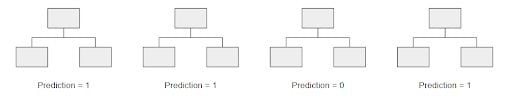

Random Forest

Random forests are an ensemble learning technique that builds off of decision trees. Random forests involve creating multiple decision trees using bootstrapped data sets of the original data and randomly selecting a subset of variables at each step of the decision tree. The model then selects the mode of all of the predictions of each decision tree. What’s the point of this? By relying on a “majority wins” model, it reduces the risk of error from an individual tree.

For example, if we created one decision tree, the third one, it would predict 0. But if we relied on the mode of all four decision trees, the predicted value would be 1. This is the power of random forests.

Neural Network

A neural network is a network of mathematical equations. It takes one or more input variables, and by going through a network of equations, results in one or more output variables. You can also say that a neural network takes in a vector of inputs and returns a vector of outputs, but I won’t get into matrices in this article.

The blue circles represent the input layer, the black circles represent the hidden layers, and the green circles represent the output layer. Each node in the hidden layers represents both a linear function and an activation function that the nodes in the previous layer go through, ultimately leading to an output in the green circles.

Machine Learning Classification Models

In classification models, the output is discrete. Below are some of the most common types of classification models.

Logistic Regression

Logistic regression is similar to linear regression but is used to model the probability of a finite number of outcomes, typically two. There are a number of reasons why logistic regression is used over linear regression when modeling probabilities of outcomes. In essence, a logistic equation is created in such a way that the output values can only be between 0 and 1 .

Support Vector Machine

A support vector machine is a supervised classification technique that can actually get pretty complicated but is pretty intuitive at the most fundamental level.

Let’s assume that there are two classes of data. A support vector machine will find a hyperplane or a boundary between the two classes of data that maximizes the margin between the two classes. There are many planes that can separate the two classes, but only one plane can maximize the margin or distance between the classes.

Naive Bayes

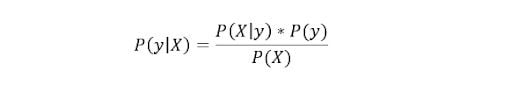

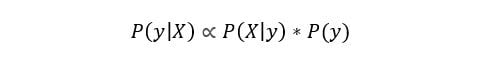

Naive Bayes is another popular classifier used in data science. The idea behind it is driven by Bayes Theorem:

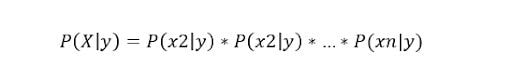

This equation is used to answer the following question: “What is the probability of y (my output variable) given X?” And because of the naive assumption that variables are independent given the class, you can say that:

By removing the denominator, we can then say that P(y|X) is proportional to the right-hand side.

Therefore, the goal is to find the class y with the maximum proportional probability.

Decision Tree, Random Forest, Neural Network

These models follow the same logic as previously explained. The only difference is that that output is discrete rather than continuous.

Unsupervised Learning Machine Learning Models

Unlike supervised learning, unsupervised learning is used to draw inferences and find patterns from input data without references to labeled outcomes. Two main methods used in unsupervised learning include clustering and dimensionality reduction.

Clustering

Clustering is an unsupervised technique that involves the grouping, or clustering, of data points. It’s frequently used for customer segmentation, fraud detection and document classification.

Common clustering techniques include k-means clustering, hierarchical clustering, mean shift clustering, and density-based clustering. While each technique has a different method in finding clusters, they all aim to achieve the same thing.

Dimensionality Reduction

Dimensionality reduction is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. In simpler terms, it’s the process of reducing the dimension of your feature set, i.e. reducing the number of features. Most dimensionality reduction techniques can be categorized as either feature elimination or feature extraction.

A popular method of dimensionality reduction is called principal component analysis.

Principal Component Analysis (PCA)

In the simplest sense, PCA involves projecting higher dimensional data, such as three dimensions, to a smaller space, like two dimensions. This results in a lower dimension of data, two dimensions instead of three, while keeping all original variables in the model.