Supervised learning is an essential part of machine learning. Classification techniques are used when the variable to be predicted is categorical. A common example of a classification problem is trying to classify an Iris flower among its three different species.

4 Steps to Build a Logistic Classifier

- Import the required libraries.

- Clean the data set.

- Analyze the data set via feature engineering.

- Prepare the model.

Logistic regression is a classification technique borrowed by machine learning from the field of statistics. It is a statistical method for analyzing a data set in which one or more independent variables determine an outcome. The intention behind using logistic regression is to find the best-fitting model to describe the relationship between the dependent variable and the independent variables.

In this article, we’ll first look at the theory behind logistic regression, and then we will build our first classification model.

Why Linear Regression Can’t Be Used as a Classifier

Consider a scenario in which we need to classify whether a particular type of cancer is malignant or not. Because linear regression returns a continuous value, using it for this problem means setting a threshold on that value to separate the two classes.

Now suppose the actual class is malignant but the predicted continuous value is 0.4. With a threshold of 0.5, the data point will be classified as not malignant, which may lead to serious consequences. Linear regression is therefore poorly suited to classification: its output is unbounded and continuous, so it cannot be read as a probability.

The threshold is the parameter that converts a predicted probability into a class label. A value above the threshold indicates one class, while a value below it indicates the other.
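As a rough illustration of how a threshold turns probabilities into labels, here is a minimal sketch. The probabilities are made up for the example, the helper name to_label is hypothetical, and treating a value exactly equal to the threshold as the positive class is an assumption.

```python
def to_label(probability, threshold=0.5):
    """Return 1 (positive class) if probability reaches the threshold, else 0."""
    # Assumption: a value exactly equal to the threshold counts as positive.
    return 1 if probability >= threshold else 0

# Hypothetical predicted probabilities of malignancy for four tumours.
probabilities = [0.10, 0.40, 0.55, 0.90]

labels_default = [to_label(p) for p in probabilities]
labels_cautious = [to_label(p, threshold=0.3) for p in probabilities]

print(labels_default)   # [0, 0, 1, 1]
print(labels_cautious)  # [0, 1, 1, 1]
```

Lowering the threshold to 0.3 flags the 0.40 case as positive, which is the kind of trade-off that matters when a missed malignant case is costly.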

Linear regression is also highly sensitive to imbalanced data, because it fits the line by minimizing the error, that is, the distance between the line and the actual values. Linear regression is therefore better kept for regression problems, which brings logistic regression into the picture.

What Is a Logistic Classifier?

Logistic regression is a classification technique used in machine learning. It uses a logistic function to model the dependent variable. In its basic form the dependent variable is dichotomous, that is, there can be only two possible classes. For example, the cancer is either malignant or not. As a result, this technique is used when dealing with binary data.

3 Types of Logistic Regression

Though generally used for predicting binary target variables, logistic regression can be extended and divided into three types:

- Binomial: This is used when the target variable can have only two possible types, such as predicting mail as spam or not.

- Multinomial: This is used when the target variable has three or more possible types that have no quantitative ordering, such as predicting which disease a patient has.

- Ordinal: This is used when the target variables have ordered categories, such as web series ratings from one to five.

Logistic regression uses the sigmoid function to map the predicted values to probabilities. This function maps any real value to a value between zero and one. It has a positive derivative at every point and exactly one inflection point, at zero.
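To make the mapping concrete, here is a small sketch of the sigmoid function using only the standard library. The function name and the sample inputs are chosen for illustration; the two-branch form is just one common way to avoid overflow for large inputs.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real z to a value between 0 and 1."""
    # Two branches so that exp() is only ever called on a non-positive number.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)

# Large negative inputs approach 0, large positive inputs approach 1,
# and the curve passes through 0.5 at z = 0 (its inflection point).
for z in (-10, -1, 0, 1, 10):
    print(z, round(sigmoid(z), 4))
```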

What Is a Cost Function for a Logistic Classifier?

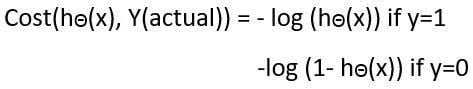

A cost function is a mathematical formula used to quantify the error between the predicted values and the expected values. Put simply, a cost function is a measure of how wrong the model is in terms of its ability to estimate the relationship between x and y. The value returned by the cost function is referred to as cost, loss or error. For logistic regression, the cost function is given by the equation:
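For reference, the cost function usually paired with logistic regression is the binary cross-entropy, or log loss. The notation here is an assumption rather than the article's own: m is the number of samples, y^(i) is the actual label (0 or 1) of sample i, and h_θ(x^(i)) is the predicted probability, obtained by applying the sigmoid function σ to the linear combination θᵀx^(i).

$$
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[\, y^{(i)} \log h_\theta\!\left(x^{(i)}\right) + \left(1 - y^{(i)}\right) \log\!\left(1 - h_\theta\!\left(x^{(i)}\right)\right) \right],
\qquad h_\theta(x) = \sigma\!\left(\theta^{\top} x\right)
$$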

The negative sign is there because training aims to maximize the likelihood of the observed labels, and we do this by minimizing a loss function. Minimizing the negative log-likelihood is equivalent to maximizing the likelihood, assuming that the samples are independent and identically distributed.
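The following sketch computes the standard binary cross-entropy for two made-up sets of predictions, to show that better predictions give a lower cost. The labels, probabilities and the clipping constant eps are illustrative assumptions.

```python
import math

def log_loss(y_true, y_prob, eps=1e-15):
    """Average binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip p away from 0 and 1 so that log() is always defined.
        p = min(max(p, eps), 1 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

y_true = [1, 0, 1, 0]               # hypothetical actual labels
good_probs = [0.9, 0.1, 0.8, 0.2]   # predictions leaning the right way
bad_probs = [0.4, 0.6, 0.3, 0.7]    # predictions leaning the wrong way

print(log_loss(y_true, good_probs))
print(log_loss(y_true, bad_probs))
```

The first call returns a much smaller value than the second, since the cost grows as the predicted probability moves away from the actual label.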

Now that you have an idea of what logistic regression is, let’s build our own logistic classifier.

4 Steps to Building a Logistic Classifier

Step 1: Import the Required Libraries

Our first step is to import the libraries required to build our model. The imports don’t all have to happen in one place; they can be added as they are needed. To get started, we will import the Pandas and NumPy libraries.

Once these libraries have been imported, our next step is to fetch the data set and load it into our notebook. The data set we’ll be using in this example is about heart disease.

#Import the Libraries and read the data into a Pandas DataFrame

import pandas as pd

import numpy as np

df = pd.read_csv("framingham_heart_disease.csv")

df.head()

The Pandas library is used for accessing the data. The read_csv function reads data in .csv format into a Pandas DataFrame.

The head() function displays the first few records of the DataFrame. By default it displays the first five records, but it can display any number of records if the desired value is passed as an argument, for example df.head(10).

Step 2: Clean the Data Set

Data cleansing, or data cleaning, is the process of detecting and correcting corrupt or inaccurate records in a table or database. It identifies incomplete, incorrect or irrelevant parts of the data and then replaces, modifies or deletes that dirty data.

The missing values in the data set can be detected using the isnull function. Records with missing values are either deleted or filled with an approximation such as the mean value of the column. However, filling in an approximation may introduce variance and/or bias in the model.

The bias error is an error from erroneous assumptions in the learning algorithm. High bias can cause an algorithm to miss the relevant relations between features and target outputs and thus result in underfitting.

Variance, on the other hand, is an error caused by the model’s sensitivity to small fluctuations in the training set. High variance can cause an algorithm to model these fluctuations in the training data, resulting in overfitting.

#Handling missing data

#Boolean Series marking the rows where cigsPerDay is missing

series = pd.isnull(df['cigsPerDay'])

In this example, we also note that certain features of the data set, such as education, don’t play a part in deciding the output. Such features should be dropped before building the model.

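#Dropping unwanted columns and filling in missing values

import math

data = df.drop(['currentSmoker','education'], axis = 'columns')

#Fill missing cigsPerDay values with the column mean, rounded down

cig = data['cigsPerDay'].mean()

integer_value = math.floor(cig)

data['cigsPerDay'] = data['cigsPerDay'].fillna(integer_value)

#Drop any rows that still contain missing values

data.dropna( axis = 0, inplace = True)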

Features like cigsPerDay already indicate whether the person is a smoker, so the currentSmoker column is redundant.

Step 3: Analyze the Data Set

Now that our data set is clean and free of irregularities, the next step will be to create two separate DataFrames for people with high risk of heart disease and for those with lower risk of heart disease.

This step can also be referred to as feature engineering. Another important step is visualizing the data, as it greatly helps in determining which features to select that may yield optimum results.

#Analyzing The Dataset

Heart_Attack = data[data.TenYearCHD == 1]

No_Heart_Attack = data[data.TenYearCHD == 0]

final = data.drop(['diaBP','BMI','heartRate'], axis = 'columns')

Another important step in feature engineering is to scale the data, because logistic regression, particularly when regularized as in scikit-learn’s default, is sensitive to the scale of its features. If the data is not scaled, the model might treat a value of 3,000 (grams) as greater than a value of 5 (kilograms), which may result in erroneous predictions.

In this example I have also dropped the diaBP, BMI and heartRate columns, as they have similar values for both values of TenYearCHD.
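The scaling idea can be sketched with the standard library alone. The snippet below standardizes each feature to zero mean and unit standard deviation; the feature names and values are hypothetical, not taken from the data set. In practice, scikit-learn's StandardScaler performs the same transformation.

```python
import statistics

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # would be 0 for a constant column
    return [(v - mean) / std for v in values]

# Hypothetical features measured on very different scales.
age = [39, 46, 48, 61, 43]
cholesterol = [195, 250, 245, 225, 285]

age_scaled = standardize(age)
cholesterol_scaled = standardize(cholesterol)

print([round(v, 2) for v in age_scaled])
print([round(v, 2) for v in cholesterol_scaled])
```

After standardizing, both features are expressed in standard deviations from their own mean, so neither dominates simply because of its units.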

Step 4: Prepare the Model

Now that we have cleaned the data and selected the effective features, we can fit the model on our training data. To do this, we first need to split the features (X) and the target (y) into training and testing data with a given random state, so that the output remains the same every time the program is executed. In this example, the random state is 99.

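#Preparing the model

from sklearn.model_selection import train_test_split

#Assumed here: the features are every column of final except the target, TenYearCHD

X = final.drop('TenYearCHD', axis = 'columns')

y = final['TenYearCHD']

X_train, X_test, y_train, y_test = train_test_split(X,y, test_size = 0.20, random_state = 99)

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

model.fit(X_train, y_train)

#Mean accuracy on the held-out test data

model.score(X_test,y_test)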

As shown above, we import LogisticRegression from sklearn.linear_model and fit the classifier on the training data. The model’s performance can then be evaluated with its score method, which returns the accuracy on the test data.

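In this example, the model is found to be 85.6 percent accurate. A confusion matrix is also a good technique for summarizing the performance of a prediction model. For a binary classifier there are two possible predicted classes, positive and negative, and the matrix counts how often each prediction matched or missed the actual class.

The confusion matrix can then be used to derive several important metrics, including accuracy, precision, recall and F score. A related measure, the ROC score, is computed from the predicted probabilities across thresholds rather than from a single confusion matrix.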
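As a minimal sketch of how these metrics follow from the confusion matrix, the snippet below counts the four cells by hand for a small set of made-up labels and predictions. The helper name confusion_counts is hypothetical; scikit-learn provides equivalent functions in sklearn.metrics.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn

# Hypothetical actual labels and model predictions.
y_true = [1, 0, 0, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
precision = tp / (tp + fp)   # of the predicted positives, how many were right
recall = tp / (tp + fn)      # of the actual positives, how many were found
f1 = 2 * precision * recall / (precision + recall)

print(tp, tn, fp, fn)        # 2 4 1 1
print(accuracy)              # 0.75
```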

Advantages of Logistic Classifier

Logistic regression is one of the most efficient techniques for solving classification problems. Some of the advantages of using logistic regression include:

- Logistic regression is easy to implement and interpret, and very efficient to train. It’s also very fast at classifying unknown records.

- It performs well when the data set is linearly separable.

- Its model coefficients can be interpreted as indicators of feature importance.
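One way to read the coefficients is as changes in log odds: exponentiating a coefficient gives the factor by which the odds are multiplied for a one-unit increase in that feature. The coefficient values below are invented for illustration; with scikit-learn, the fitted values would come from the model's coef_ attribute.

```python
import math

# Hypothetical fitted coefficients (change in log odds per unit increase).
coefficients = {"age": 0.06, "cigsPerDay": 0.02, "male": 0.50}

# exp(coefficient) is the odds ratio for a one-unit increase in the feature.
odds_ratios = {name: math.exp(coef) for name, coef in coefficients.items()}

for name, ratio in sorted(odds_ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: odds multiplied by {ratio:.3f} per unit increase")
```

Coefficients are only directly comparable across features when those features are on a similar scale.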

Disadvantages of Logistic Classifier

Though widely used, logistic regression also comes with some limitations:

- It constructs linear decision boundaries, so it cannot capture non-linear relationships unless the features are transformed first.

- Its major limitation is the assumption that the independent variables are linearly related to the log odds of the dependent variable.

- More powerful and compact algorithms such as neural networks can easily outperform this algorithm.

With that, we have reached the end of this article. I hope this has helped you gain a solid understanding of what logistic regression is and when to use it in your machine learning journey.