In our last article, we discussed the build solution: fine-tuning large language models. Now, we’ll cover the buy solution.

Prompt tuning refines LLM outputs by changing the prompts rather than the model. It lets developers quickly apply general-purpose models to new and specific tasks with flexibility, speed and cost-efficiency, without fine-tuning the full model.

Let’s dive into the significance of prompt tuning and its various approaches, including hard prompting and soft prompting.

What Are the Types of Soft Prompting?

- Prompt tuning

- Prefix tuning

- P-tuning

- Multi-task prompt tuning

- Programmatic tuning

What Is Hard Prompting?

Hard prompting, the most straightforward approach to prompt tuning, means creating task-specific templates that wrap around the user’s prompt.

This applies best to task-oriented, repeatable jobs, and engineers use it to quickly adapt language models to specific tasks.

Hard prompting distinguishes between system prompts, which instruct the LLM how to behave and apply across multiple user prompts, and user prompts, which focus on the specific task. Each new user prompt easily replaces the previous one, while system prompts are more persistent.
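As a minimal sketch of the system/user split, assuming the widely used chat format of role/content messages rather than any particular provider’s API, a hard-prompting layer can be little more than a dictionary of task templates. `TEMPLATES` and `build_messages` are hypothetical names chosen for illustration.

```python
# Hypothetical task templates: each wraps the user's text in fixed, human-readable instructions.
SYSTEM_PROMPT = "You are a helpful AI assistant. Answer concisely."

TEMPLATES = {
    "summarize": "Summarize the following text in one sentence:\n\n{text}",
    "translate_fr": "Translate the following text into French:\n\n{text}",
}


def build_messages(task: str, user_text: str) -> list[dict[str, str]]:
    """Wrap a user prompt in a task template, keeping the system prompt fixed."""
    template = TEMPLATES[task]  # raises KeyError for an unknown task
    return [
        # The system prompt stays the same across every request...
        {"role": "system", "content": SYSTEM_PROMPT},
        # ...while the user message changes with each task and input.
        {"role": "user", "content": template.format(text=user_text)},
    ]


if __name__ == "__main__":
    for message in build_messages("summarize", "Prompt tuning adapts LLMs to new tasks."):
        print(f"{message['role']}: {message['content']}")
```

Because the templates are plain strings, they are easy to read and edit, which is exactly the strength (and, through trial and error, the weakness) of hard prompting.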

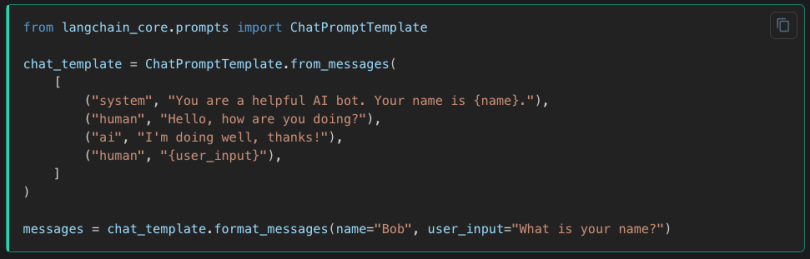

The example below from LangChain tells the system that it is a helpful AI and what to do with the user prompt, or input.

LangChain provides a comprehensive set of tools and modules for building and deploying language model-based applications, including powerful features such as chains and agents.

A popular example of a hard prompting chain is retrieval-augmented generation (RAG), which combines pre-trained language models with external knowledge retrieval to generate more informed and relevant responses. The prompt template instructs the model to respond to the user prompt given the context retrieved from an external database.

RAG aims both to reduce hallucinations by providing context and to introduce specific data without retraining the model.
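The RAG prompt itself is still a hard prompt: a fixed template filled with retrieved text. The sketch below assumes a toy in-memory document list and a crude keyword-overlap score in place of a real vector database and embedding search; `DOCUMENTS`, `retrieve` and `build_rag_prompt` are hypothetical.

```python
import re

# Toy in-memory "knowledge base"; a real RAG system would query a vector database.
DOCUMENTS = [
    "Prefix tuning prepends trainable vectors to every transformer layer.",
    "Prompt tuning adds trainable embeddings only at the input layer.",
    "DSPy compiles declarative signatures into optimized prompts.",
]

RAG_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is insufficient, say you don't know.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)


def tokenize(text: str) -> set[str]:
    """Lowercased word set, used for a crude keyword-overlap score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(DOCUMENTS, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, k: int = 1) -> str:
    """Fill the hard-prompt template with the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    return RAG_TEMPLATE.format(context=context, question=question)


if __name__ == "__main__":
    print(build_rag_prompt("Which method adds embeddings only at the input layer?"))
```

Swapping the retriever for a real embedding search changes only `retrieve`; the template, and therefore the hard prompt, stays the same.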

Hard prompting has drawbacks, such as instability and a lack of fine-grained control over the model’s behavior. Finding the right prompt requires trial and error, the results depend on user judgment, and instability can compound across the steps of a pipeline.

Enter soft prompting.

What Is Soft Prompting?

Soft prompting fine-tunes token embeddings customized to a specific task, rather than the entire model. A token embedding is a numerical vector representation of a token, in which similar words lie closer together. Unlike hard prompts, soft prompts are not human-readable.

Soft prompting trains these task-specific tokens while leaving the model weights, the learned parameters that convey the importance of the input features, unchanged. This makes training more efficient at the cost of generalization across tasks, and is particularly useful when a single task needs a single round of training.
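To make the distinction concrete, here is a toy sketch in plain Python with no deep-learning framework. A frozen lookup table stands in for the model’s vocabulary embeddings, and a small matrix of free “virtual token” vectors is prepended to the embedded input. The vocabulary, dimensions and names such as `soft_prompt` are hypothetical.

```python
import random

random.seed(0)
EMBED_DIM = 4
NUM_VIRTUAL_TOKENS = 3

# Frozen: the pretrained model's vocabulary embeddings (toy values).
VOCAB = ["the", "movie", "was", "great", "terrible"]
frozen_embeddings = {
    word: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for word in VOCAB
}

# Trainable: soft prompt vectors. They are free parameters, not rows of the
# vocabulary table, so in general they do not correspond to any real word.
soft_prompt = [
    [random.uniform(-0.5, 0.5) for _ in range(EMBED_DIM)]
    for _ in range(NUM_VIRTUAL_TOKENS)
]


def embed_with_soft_prompt(words: list[str]) -> list[list[float]]:
    """Look up frozen embeddings for the input and prepend the soft prompt."""
    token_vectors = [frozen_embeddings[w] for w in words]
    return soft_prompt + token_vectors


sequence = embed_with_soft_prompt(["the", "movie", "was", "great"])
trainable = NUM_VIRTUAL_TOKENS * EMBED_DIM
frozen = len(VOCAB) * EMBED_DIM  # a real LLM also freezes all its other weights
print(f"sequence length: {len(sequence)}")  # 3 virtual + 4 real tokens = 7
print(f"trainable params: {trainable}, frozen embedding params: {frozen}")
```

Only the 12 numbers in `soft_prompt` would change during training; everything the model learned in pre-training stays as it was.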

Hugging Face, a popular platform for natural language processing tasks, provides excellent documentation on soft prompting.

To get started with soft prompting, you’ll need to tune your embeddings with techniques such as parameter-efficient fine-tuning (PEFT), a family of methods that update only a small number of parameters, avoiding large computation and time costs.
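Libraries such as Hugging Face’s `peft` handle training with automatic differentiation. The pure-Python sketch below instead hand-computes gradients for a hypothetical toy linear scorer, so the key property is visible: gradient descent updates only the soft prompt, while the input embeddings and model weights stay frozen.

```python
import random

random.seed(0)
DIM, NUM_VIRTUAL_TOKENS = 4, 2
LEARNING_RATE = 0.5
TARGET = 1.0  # desired score for this (hypothetical) task

# Frozen parts of a toy "model": embedded input tokens and an output weight vector.
input_tokens = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(3)]
frozen_w = [random.uniform(-1, 1) for _ in range(DIM)]
SEQ_LEN = NUM_VIRTUAL_TOKENS + len(input_tokens)

# Trainable part: only the soft prompt vectors, initialized to zero.
soft_prompt = [[0.0] * DIM for _ in range(NUM_VIRTUAL_TOKENS)]


def score(prompt: list[list[float]]) -> float:
    """Model output: frozen_w dotted with the mean of (soft prompt + input) vectors."""
    sequence = prompt + input_tokens
    mean = [sum(vec[j] for vec in sequence) / SEQ_LEN for j in range(DIM)]
    return sum(w * m for w, m in zip(frozen_w, mean))


def loss(prompt: list[list[float]]) -> float:
    """Squared error between the model's score and the target."""
    return (score(prompt) - TARGET) ** 2


initial_loss = loss(soft_prompt)
for _ in range(200):
    error = score(soft_prompt) - TARGET
    # d(loss)/d(prompt[i][j]) = 2 * error * frozen_w[j] / SEQ_LEN for every prompt vector i.
    for vec in soft_prompt:
        for j in range(DIM):
            vec[j] -= LEARNING_RATE * 2 * error * frozen_w[j] / SEQ_LEN
    # frozen_w and input_tokens are never updated.
final_loss = loss(soft_prompt)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

In a real model the same idea holds with millions or billions of frozen weights: the optimizer is simply handed the prompt parameters and nothing else.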

Types of Soft Prompting

Prompt Tuning

Prompt tuning trains a small set of prompt token embeddings that are added only at the model’s input layer.

It was originally developed for text classification tasks on T5 models. Because it trains only these input embeddings, it keeps training time and cost low, but it may struggle with smaller models with fewer than 1 billion parameters.

Prompt tuning in this narrow sense is not the same as prompt engineering, which involves writing a new text template.

Prefix Tuning

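Prefix tuning is a soft prompting technique that prepends trainable vectors to every transformer layer instead of only to the input embeddings. It was designed for generation with causal decoder models such as GPT-2, and the original paper also applied it to the encoder-decoder model BART. Because training the prefix directly is unstable, prefix tuning reparameterizes it as a smaller matrix passed through a feed-forward network; only the resulting prefix needs to be kept after training.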

Prefix tuning is best suited for generation tasks. Because it adds trainable vectors throughout the model, it has more parameters to train than prompt tuning, but it can adapt better for smaller models.
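The difference in trainable parameters is simple arithmetic. The sketch below assumes the common setup in which each layer receives one key prefix and one value prefix per virtual token, and counts only the parameters kept after the reparameterization network is discarded. The model size (12 layers, hidden size 768, 20 virtual tokens) is a hypothetical example.

```python
def prompt_tuning_params(num_virtual_tokens: int, hidden_size: int) -> int:
    """Prompt tuning: one trainable vector per virtual token, at the input only."""
    return num_virtual_tokens * hidden_size


def prefix_tuning_params(num_virtual_tokens: int, hidden_size: int, num_layers: int) -> int:
    """Prefix tuning: a key prefix and a value prefix per virtual token in every layer."""
    return num_layers * 2 * num_virtual_tokens * hidden_size


# Hypothetical model: 12 layers, hidden size 768, 20 virtual tokens.
print(prompt_tuning_params(20, 768))      # 15360
print(prefix_tuning_params(20, 768, 12))  # 368640
```

Prefix tuning’s count grows with depth (here 24 times larger), which is the extra capacity that helps it on smaller models and the extra cost it pays in training.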

P-Tuning

P-tuning is a variant of soft prompting in which task-specific token embeddings can be inserted anywhere in the text, not just at the beginning. Its follow-up, P-tuning v2, adds prompts to every layer of the model, similar to prefix tuning, which lets deep layers directly affect model predictions and helps the method scale across model sizes.
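The “anywhere in the text” idea can be sketched as a template in which trainable pseudo tokens are interleaved with ordinary words. In the sketch below, the `[P]` marker, the template and the random stand-in embeddings are hypothetical, and the prompt encoder that P-tuning places in front of the pseudo-token embeddings is omitted for brevity.

```python
import random

random.seed(1)
DIM = 3
PSEUDO = "[P]"  # hypothetical marker for a trainable pseudo token

# Pseudo tokens may sit anywhere in the template, not only at the start.
TEMPLATE = [PSEUDO, "the", "capital", "of", "{x}", PSEUDO, PSEUDO, "is", "[MASK]"]
num_pseudo = TEMPLATE.count(PSEUDO)

# Trainable pseudo-token embeddings, one per [P] slot.
pseudo_embeddings = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(num_pseudo)]

_frozen_cache: dict[str, list[float]] = {}


def frozen_embedding(word: str) -> list[float]:
    """Stand-in for the model's frozen vocabulary lookup (random but fixed per word)."""
    if word not in _frozen_cache:
        _frozen_cache[word] = [random.uniform(-1, 1) for _ in range(DIM)]
    return _frozen_cache[word]


def build_input(x: str) -> tuple[list[str], list[list[float]]]:
    """Fill the template and interleave frozen word embeddings with pseudo tokens."""
    tokens, vectors, next_pseudo = [], [], 0
    for slot in TEMPLATE:
        if slot == PSEUDO:
            tokens.append(f"[P{next_pseudo}]")
            vectors.append(pseudo_embeddings[next_pseudo])
            next_pseudo += 1
        else:
            word = x if slot == "{x}" else slot
            tokens.append(word)
            vectors.append(frozen_embedding(word))
    return tokens, vectors


tokens, vectors = build_input("France")
print(" ".join(tokens))  # [P0] the capital of France [P1] [P2] is [MASK]
```

Only `pseudo_embeddings` would be trained; the position of each pseudo token is fixed by the template.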

Unlike prefix tuning, which targets generation, P-tuning was designed for natural language understanding (NLU) tasks and works with both encoder models such as BERT and decoder models such as GPT. NLU is a subset of NLP focused on understanding the meaning of sentences for tasks like classification, as opposed to generation tasks like completion or summarization.

Additionally, P-tuning passes the prompt embeddings through a reparameterization layer (a prompt encoder) intended to improve training stability and performance. This layer can be either a feed-forward network or a long short-term memory (LSTM) network, and which one performs better depends on the task and data set.

Instead of a verbalizer, which maps label words to class labels as in both prompt tuning and prefix tuning, the deep variant of this method (P-tuning v2) uses a randomly initialized classification head on top of the tokens.

This enables sequence labeling tasks such as named entity recognition, which assigns predefined categories to words in a sentence, and extractive question answering.
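A verbalizer scores a label word at a single position, whereas a per-token classification head can assign a label to every token, which is what sequence labeling needs. The sketch below applies such a head to stand-in hidden states; in P-tuning v2 the head is trained alongside the prompts, but here it is untrained, so its predictions are arbitrary. The tag set, sizes and hidden states are hypothetical.

```python
import random

random.seed(2)
HIDDEN = 4
LABELS = ["O", "B-PER", "B-LOC"]  # hypothetical NER tag set

# Randomly initialized classification head: one weight row and one bias per label.
head_weights = [[random.gauss(0, 0.02) for _ in range(HIDDEN)] for _ in LABELS]
head_bias = [0.0 for _ in LABELS]


def classify_tokens(hidden_states: list[list[float]]) -> list[str]:
    """Apply the head to every token's hidden state and return one label per token."""
    predictions = []
    for h in hidden_states:
        logits = [
            sum(w * x for w, x in zip(row, h)) + b
            for row, b in zip(head_weights, head_bias)
        ]
        best = max(range(len(LABELS)), key=lambda k: logits[k])
        predictions.append(LABELS[best])
    return predictions


# Stand-in final-layer hidden states for a three-token sentence such as "Ada visited Paris".
hidden_states = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(3)]
print(classify_tokens(hidden_states))  # one label per token (arbitrary until trained)
```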

Multi-Task Prompt Tuning

Multi-task prompt tuning is an advanced technique that splits training into two parts.

The first part, source training, involves training over multiple tasks, while the second part, target adaptation, involves fine-tuning the embeddings to a specific task.

To accomplish this, multi-task prompt tuning combines task-specific prompts into a single prompt matrix shared across tasks. This is similar to multi-loss training.
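One published formulation builds each task’s prompt as the element-wise (Hadamard) product of the shared prompt matrix and a low-rank task-specific matrix. The sketch below assumes a rank-one task matrix formed as the outer product of two vectors `u` and `v`; the sizes and values are hypothetical.

```python
import random

random.seed(3)
NUM_TOKENS, DIM = 4, 3  # hypothetical prompt length and embedding size

# Shared prompt matrix P (NUM_TOKENS x DIM), learned across all source tasks.
shared_prompt = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_TOKENS)]


def task_prompt(u: list[float], v: list[float]) -> list[list[float]]:
    """Task prompt = P * (u v^T) element-wise: P scaled by a rank-one task matrix."""
    if len(u) != NUM_TOKENS or len(v) != DIM:
        raise ValueError("u needs NUM_TOKENS entries and v needs DIM entries")
    return [
        [shared_prompt[i][j] * u[i] * v[j] for j in range(DIM)]
        for i in range(NUM_TOKENS)
    ]


# Each task stores only NUM_TOKENS + DIM numbers (u and v) instead of a full prompt.
sentiment_u, sentiment_v = [1.0] * NUM_TOKENS, [1.0, 0.5, 2.0]
prompt = task_prompt(sentiment_u, sentiment_v)
print(len(prompt), len(prompt[0]))  # 4 3
```

The shared matrix carries knowledge common to all tasks, while the cheap `u` and `v` vectors specialize it during target adaptation.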

Programmatic (Oobleck) Prompting

DSPy is a novel approach that modularizes prompt tuning, making it more akin to software engineering. It aims to simplify the process of prompt tuning and make it more accessible to developers.

It sits between the hard and soft prompt frameworks. DSPy provides a Python-based framework for defining signatures (e.g., context, question -> answer) and optimizes a human-readable prompt for the task.
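In DSPy itself, a signature string can be passed to a module such as `dspy.Predict("context, question -> answer")`. The plain-Python sketch below does not use DSPy; it only illustrates the underlying idea that a declarative signature can be rendered into a readable prompt whose instruction text an optimizer could then tune. `parse_signature` and `render_prompt` are hypothetical helpers.

```python
def parse_signature(signature: str) -> tuple[list[str], list[str]]:
    """Split 'context, question -> answer' into input and output field names."""
    inputs, outputs = signature.split("->")
    return (
        [field.strip() for field in inputs.split(",")],
        [field.strip() for field in outputs.split(",")],
    )


def render_prompt(signature: str, instructions: str, **values: str) -> str:
    """Render a human-readable prompt; an optimizer would tune `instructions`."""
    input_fields, output_fields = parse_signature(signature)
    lines = [instructions, ""]
    lines += [f"{name.capitalize()}: {values[name]}" for name in input_fields]
    lines += [f"{name.capitalize()}:" for name in output_fields]
    return "\n".join(lines)


print(render_prompt(
    "context, question -> answer",
    "Answer the question using the context.",
    context="Soft prompts are not human-readable.",
    question="Are soft prompts human-readable?",
))
```

Because the program declares only the fields, the wording of the prompt becomes something to optimize rather than something to hand-write.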

These generated prompts can lead to chains of thought that wouldn’t initially be apparent, reducing the reliance on trial and error and making prompting more systematic.

DSPy simplifies multi-hop retrievals, which are tasks with multiple steps where the output from one step becomes the input to the next step. It also generates examples for k-shot learning, and is designed to integrate into a large language model pipeline.
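DSPy’s optimizers, such as `BootstrapFewShot`, generate k-shot demonstrations by running the program on training examples and keeping the outputs that pass a user-supplied metric. The sketch below imitates that idea in plain Python; it is not DSPy’s implementation, and `toy_program`, `bootstrap_demos` and the arithmetic data are hypothetical.

```python
from collections.abc import Callable

TRAINSET = [
    {"question": "What is 2 + 2?", "answer": "4"},
    {"question": "What is 3 + 5?", "answer": "8"},
    {"question": "What is 7 + 6?", "answer": "13"},
    {"question": "What is 9 + 9?", "answer": "18"},
]


def toy_program(question: str) -> str:
    """Hypothetical stand-in for an LM call: right on small sums, off by one otherwise."""
    a, b = (int(n) for n in question.rstrip("?").split()[-3::2])
    total = a + b
    return str(total) if total < 10 else str(total - 1)  # simulated mistake


def exact_match(example: dict[str, str], prediction: str) -> bool:
    return prediction == example["answer"]


def bootstrap_demos(
    trainset: list[dict[str, str]],
    program: Callable[[str], str],
    metric: Callable[[dict[str, str], str], bool],
    k: int,
) -> list[dict[str, str]]:
    """Keep up to k examples where the program's own output passes the metric."""
    demos = []
    for example in trainset:
        prediction = program(example["question"])
        if metric(example, prediction):
            demos.append({"question": example["question"], "answer": prediction})
        if len(demos) == k:
            break
    return demos


def few_shot_prompt(demos: list[dict[str, str]], question: str) -> str:
    """Prepend the bootstrapped demonstrations to a new question."""
    shots = "\n\n".join(f"Question: {d['question']}\nAnswer: {d['answer']}" for d in demos)
    return f"{shots}\n\nQuestion: {question}\nAnswer:"


demos = bootstrap_demos(TRAINSET, toy_program, exact_match, k=3)
print(few_shot_prompt(demos, "What is 4 + 1?"))
```

The two failing examples are filtered out, so only demonstrations the program actually gets right end up in the prompt.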

Expect changes, as DSPy’s codebase is under active development.

Use Prompt Tuning to Enhance Your AI Solutions

Hard prompting offers a quick and readable approach but may suffer from instability, while soft prompting provides a more robust and repeatable solution, albeit with a black-box nature and the need for per-task training.

As prompt tuning continues to evolve, it raises interesting questions about the skills and expertise required for prompt engineering: How do you interview a prompt engineer? What are the key competencies and best practices in this field?

Prompt tuning is an exciting and rapidly evolving area of AI research and development. By understanding the various approaches and techniques available, developers and researchers can harness the power of language models to create more flexible, efficient and cost-effective AI solutions.