BERT (short for Bidirectional Encoder Representations from Transformers) is a language representation model developed by Google researchers in 2018 and described in the 2019 paper “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” The method is useful for tasks such as question answering, natural language inference, and commonsense inference. As the paper reports, BERT outperformed previous natural language processing (NLP) methods when fine-tuned on several natural language understanding (NLU) benchmarks. These include the General Language Understanding Evaluation (GLUE) benchmark, the Multi-Genre Natural Language Inference (MultiNLI) data set, and the Stanford Question Answering Dataset (SQuAD), among others.

What makes BERT distinctive is that it builds bidirectional context into word representations. What exactly does this mean? Language representations have typically come from context-free models or from unidirectional (left-to-right) language models. BERT was the first approach to pre-train deeply bidirectional contextual representations, so that each word’s representation depends on the text both before and after it. To understand this, let’s consider three types of text representation:

1. Context-Free

A context-free model, such as word2vec or GloVe, would give the word “pitcher” the same representation in the sentences “He was a baseball pitcher” and “He was thirsty, so he asked for the pitcher of water.” Although the context makes clear that “pitcher” means different things in these two sentences, context-free models cannot give it different representations.

2. Unidirectional Context

A unidirectional contextual model, such as OpenAI GPT, would represent each word using the words that came before it, going from left to right. For example, “pitcher” in “He was a baseball pitcher” is represented using “He was a baseball.” Unlike context-free models, unidirectional models provide some context for word representations. Despite this, unidirectional models are limited because words can only be represented with preceding text. This limitation motivates the need for bidirectional language models that fully capture the contextual meaning of words.

3. Bidirectional Context

While unidirectional models provide some context, sometimes we need context in both directions to fully understand the meaning of a word. For example, consider the sentence “He knew the pitcher of water was on the table.” Models like OpenAI GPT would represent the word “pitcher” with “He knew the.” BERT, being bidirectional, would represent “pitcher” with both “He knew the” and “of water was on the table.” By using both the preceding and the following text, BERT can represent the meaning of words more accurately. You can read more about BERT’s ability to capture bidirectional context in the Google AI blog post on BERT.
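To make the three cases concrete, here is a toy Python sketch (not BERT itself) of which surrounding words each kind of model conditions on when it represents a target word. The function name `visible_context` and the mode labels are made up for illustration; real models work on subword tokens and learned attention rather than literal word lists.

```python
def visible_context(tokens, target_index, mode):
    """Return (left_context, right_context): the words a model of the given
    kind may use when it builds a representation for tokens[target_index]."""
    left = list(tokens[:target_index])
    right = list(tokens[target_index + 1:])
    if mode == "context_free":    # e.g. word2vec/GloVe: one fixed vector, no context
        return [], []
    if mode == "left_to_right":   # e.g. OpenAI GPT: preceding words only
        return left, []
    if mode == "bidirectional":   # e.g. BERT: preceding and following words
        return left, right
    raise ValueError(f"unknown mode: {mode}")


sentence = "He knew the pitcher of water was on the table".split()
i = sentence.index("pitcher")
for mode in ("context_free", "left_to_right", "bidirectional"):
    left, right = visible_context(sentence, i, mode)
    print(f"{mode:>14}: left={' '.join(left)!r} right={' '.join(right)!r}")
```

Only the bidirectional case sees “of water,” which is the part of the sentence that tells us this pitcher is a container rather than a baseball player.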

With this understanding, how might the model be used in practice? A prominent application is Google Search, which uses BERT to improve search results. In a Google blog post, the authors describe some key improvements to search results after adopting BERT. One example they give is the query “Can you get medicine for someone pharmacy.” Before BERT, the results suggested resources for getting a prescription filled. After BERT, the results reflected the intended meaning of the query, picking up prescription medicine for another person rather than prescriptions in general, and showed pages with relevant content.

Now let’s consider another interesting application of BERT: finding fraudulent job listings. In recent decades, corporations have adopted a variety of cloud-based solutions for posting job ads, such as applicant tracking systems (ATS). Although these tools make hiring more efficient, they also make it easy for scammers to create and advertise fraudulent postings. In particular, scammers have become adept at crafting convincing ads for blue-collar and secretarial jobs. Further, through applicant tracking systems like Workable, scammers can effortlessly collect thousands of resumes using fraudulent posts as bait.

Employment scams have two main aims. The first is collecting contact information such as email addresses, ZIP codes, phone numbers, and mailing addresses, which can then be sold to marketers and cold callers. The more malicious aim is identity theft. The fake posts usually direct users from the ATS to third-party sites, where they are put through a series of phony interview activities. Eventually, users are asked for highly sensitive information such as Social Security numbers and bank account details, which can be used for money laundering. Fortunately, BERT can help us address this problem.

Recently, the University of the Aegean published the Employment Scam Aegean Dataset to bring the employment scam problem to light. The data contains about 18,000 job advertisements, each labeled as either “fraudulent” or “genuine,” so identifying fake posts is naturally a binary classification task. We will use BERT to represent the words in each posting using bidirectional context and then classify the posting. The expectation is that words in “fraudulent” postings are used in contextually different ways from words in “genuine” postings, and that capturing these bidirectional differences should improve classification compared to unidirectional and context-free models. Before beginning, thanks to Dima Shulga, whose post "BERT to the Rescue" inspired my work here.

1. Import Packages

First, let’s import some necessary packages:

import pandas as pd

import numpy as np

import torch.nn as nn

from pytorch_pretrained_bert import BertTokenizer, BertModel

import torch

from keras.preprocessing.sequence import pad_sequences

from sklearn.metrics import classification_report

2. Data Exploration

Next, let’s read the data into a data frame and print the first five rows. We also set the pandas option display.max_columns to None so that every column is shown instead of being truncated:

pd.set_option('display.max_columns', None)

df = pd.read_csv("fake_job_postings.csv")

print(df.head())

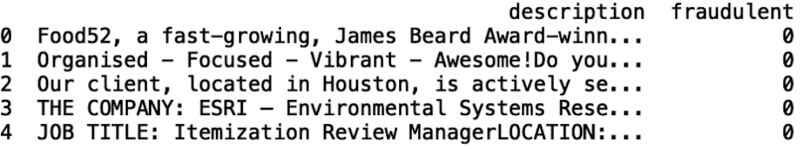

For simplicity, let’s look at the “description” and “fraudulent” columns:

df = df[['description', 'fraudulent']]

print(df.head())

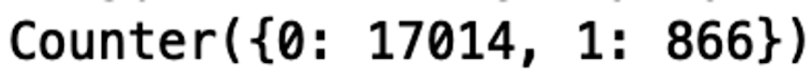

The target for our classification model is the “fraudulent” column. To see which values it takes and how they are distributed, we can use Counter from the collections module:

from collections import Counter

print(Counter(df['fraudulent'].values))

A value of 0 corresponds to a genuine job posting and 1 to a fraudulent one. The data is highly imbalanced: there are far more genuine postings (about 17,000) than fraudulent ones (866).
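A quick calculation shows why this imbalance matters: a trivial classifier that always answers “genuine” would already score high accuracy while catching no scams. This sketch uses the rounded counts from the text (about 17,000 and 866), not the exact data set counts.

```python
from collections import Counter

# Approximate class counts quoted above: 0 = genuine, 1 = fraudulent
counts = Counter({0: 17_000, 1: 866})
total = sum(counts.values())

fraud_share = counts[1] / total        # fraction of postings that are fraudulent
majority_baseline = counts[0] / total  # accuracy of always predicting "genuine"

print(f"fraudulent share: {fraud_share:.1%}")               # fraudulent share: 4.8%
print(f"always-'genuine' accuracy: {majority_baseline:.1%}")  # always-'genuine' accuracy: 95.2%
```

This is why balancing the classes, and reporting per-class precision and recall rather than accuracy alone, is worthwhile here.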

Before proceeding, let’s drop NaN values:

df.dropna(inplace = True)

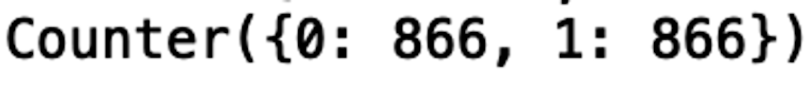

Next, we want to balance the data set so that it has equal numbers of “fraudulent” and “not fraudulent” postings, and randomly shuffle the rows.
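The code for this step is not shown in the post. One minimal way to do it with pandas, assuming we downsample the majority class to the size of the minority class and use a fixed random seed, is sketched below. The function name `balance_and_shuffle` and the seed are illustrative choices, not necessarily what produced the results here.

```python
import pandas as pd


def balance_and_shuffle(df, label_col="fraudulent", seed=0):
    """Downsample every class to the size of the smallest class, then shuffle.

    Hypothetical helper: the seed and the downsampling strategy are assumptions.
    """
    minority_size = int(df[label_col].value_counts().min())
    # Sample the same number of rows from each class, without replacement
    parts = [
        group.sample(n=minority_size, random_state=seed)
        for _, group in df.groupby(label_col)
    ]
    balanced = pd.concat(parts)
    # frac=1 returns all rows in random order; reset_index discards the old row labels
    return balanced.sample(frac=1, random_state=seed).reset_index(drop=True)


# Usage on a tiny made-up frame: 5 genuine rows and 2 fraudulent rows
toy = pd.DataFrame({
    "description": [f"post {k}" for k in range(7)],
    "fraudulent": [0, 0, 0, 0, 0, 1, 1],
})
balanced = balance_and_shuffle(toy)
print(balanced["fraudulent"].value_counts().to_dict())
```

With the fraudulent postings as the minority class, the balanced frame holds twice as many rows as there are fraudulent postings, which is what splitting it into a first half and a last half relies on. Downsampling discards most genuine postings; weighting the classes in the loss is a common alternative.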

Next, we want to format the data so that it can be used as input to our BERT model. First, we split the balanced, shuffled data into training and testing sets:

train_data = df.head(866)
test_data = df.tail(866)

We generate a list of dictionaries with “description” and “fraudulent” keys. zip pairs each description with its own label; a nested loop over the two columns would instead pair every description with every label:

# One dictionary per posting: zip walks the two columns in lockstep
train_data = [{'description': description, 'fraudulent': fraudulent} for description, fraudulent in zip(train_data['description'], train_data['fraudulent'])]
test_data = [{'description': description, 'fraudulent': fraudulent} for description, fraudulent in zip(test_data['description'], test_data['fraudulent'])]

Generate a list of tuples from the list of dictionaries:

# Unzip into one tuple of texts and one tuple of labels
train_texts, train_labels = list(zip(*map(lambda d: (d['description'], d['fraudulent']), train_data)))
test_texts, test_labels = list(zip(*map(lambda d: (d['description'], d['fraudulent']), test_data)))

Generate tokens and token ids:

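# Load the WordPiece tokenizer that matches the pre-trained bert-base-uncased weights
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased', do_lower_case=True)
# Prepend [CLS] and keep at most 511 word pieces, so each sequence has at most 512 tokens
train_tokens = list(map(lambda t: ['[CLS]'] + tokenizer.tokenize(t)[:511], train_texts))
test_tokens = list(map(lambda t: ['[CLS]'] + tokenizer.tokenize(t)[:511], test_texts))
# Map word pieces to vocabulary ids
train_tokens_ids = list(map(tokenizer.convert_tokens_to_ids, train_tokens))
test_tokens_ids = list(map(tokenizer.convert_tokens_to_ids, test_tokens))
# Pad every sequence at the end with id 0 so that all sequences are exactly 512 ids long
train_tokens_ids = pad_sequences(train_tokens_ids, maxlen=512, truncating="post", padding="post", dtype="int")
test_tokens_ids = pad_sequences(test_tokens_ids, maxlen=512, truncating="post", padding="post", dtype="int")

Notice that we truncate each input to 512 tokens (the [CLS] token plus at most 511 WordPiece tokens), not 512 characters, because 512 tokens is the maximum sequence length BERT can handle.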
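The truncation, padding, and attention-mask steps can be mimicked in plain Python to check what the model receives. This sketch assumes the bert-base-uncased ids for [PAD] (0) and [CLS] (101); the word-piece ids in the usage lines are made up, and `to_fixed_length`, `attention_mask`, and `encode` are hypothetical helpers, not part of Keras or pytorch_pretrained_bert.

```python
PAD_ID = 0    # id of [PAD] in the bert-base-uncased vocabulary
CLS_ID = 101  # id of [CLS] in the bert-base-uncased vocabulary


def to_fixed_length(token_ids, max_len):
    """Mimic pad_sequences(..., maxlen=max_len, truncating="post", padding="post")."""
    ids = list(token_ids)[:max_len]               # truncating="post": drop ids past max_len
    return ids + [PAD_ID] * (max_len - len(ids))  # padding="post": append [PAD] ids


def attention_mask(padded_ids):
    """1.0 for real tokens, 0.0 for padding (same as float(i > 0) for non-negative ids)."""
    return [float(i != PAD_ID) for i in padded_ids]


def encode(word_piece_ids, max_len):
    """Prepend [CLS], keep at most max_len - 1 word pieces, pad, and build the mask."""
    ids = [CLS_ID] + list(word_piece_ids)[: max_len - 1]
    padded = to_fixed_length(ids, max_len)
    return padded, attention_mask(padded)


# Usage with a toy max_len of 6 instead of BERT's 512
short_ids, short_mask = encode([7, 8, 9], max_len=6)
print(short_ids)   # [101, 7, 8, 9, 0, 0]
print(short_mask)  # [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
long_ids, long_mask = encode(range(1, 20), max_len=6)
print(long_ids)    # [101, 1, 2, 3, 4, 5]
```

Because the word pieces are already cut to 511 before padding, the truncation branch of pad_sequences never fires in this pipeline; it only pads.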

Finally, generate a boolean array based on the value of “fraudulent” for our testing and training sets:

train_y = np.array(train_labels) == 1

test_y = np.array(test_labels) == 1

3. Model Building

We create our BERT classifier, which has an initialization method that sets up the layers and a forward method that returns, for each input sequence, the probability that the posting is fraudulent:

class BertBinaryClassifier(nn.Module):
    def __init__(self, dropout=0.1):
        super(BertBinaryClassifier, self).__init__()
        # Pre-trained BERT encoder (12 layers, hidden size 768)
        self.bert = BertModel.from_pretrained('bert-base-uncased')
        self.dropout = nn.Dropout(dropout)
        # Map the 768-dimensional pooled [CLS] representation to a single score
        self.linear = nn.Linear(768, 1)
        self.sigmoid = nn.Sigmoid()

    def forward(self, tokens, masks=None):
        # pooled_output summarizes the whole sequence via the [CLS] token
        _, pooled_output = self.bert(tokens, attention_mask=masks, output_all_encoded_layers=False)
        dropout_output = self.dropout(pooled_output)
        linear_output = self.linear(dropout_output)
        # Squash the score into a probability in (0, 1)
        proba = self.sigmoid(linear_output)
        return proba

Next, we generate training and testing masks:

# 1.0 marks a real token and 0.0 marks padding (id 0), so attention ignores padded positions
train_masks = [[float(i > 0) for i in ii] for ii in train_tokens_ids]
test_masks = [[float(i > 0) for i in ii] for ii in test_tokens_ids]
train_masks_tensor = torch.tensor(train_masks)
test_masks_tensor = torch.tensor(test_masks)

Generate token tensors for training and testing:

train_tokens_tensor = torch.tensor(train_tokens_ids)
# Reshape labels to float tensors of shape (N, 1) to match the classifier's output for BCELoss
train_y_tensor = torch.tensor(train_y.reshape(-1, 1)).float()
test_tokens_tensor = torch.tensor(test_tokens_ids)
test_y_tensor = torch.tensor(test_y.reshape(-1, 1)).float()

Finally, we prepare our data loaders:

BATCH_SIZE = 1

train_dataset = torch.utils.data.TensorDataset(train_tokens_tensor, train_masks_tensor, train_y_tensor)

train_sampler = torch.utils.data.RandomSampler(train_dataset)

train_dataloader = torch.utils.data.DataLoader(train_dataset, sampler=train_sampler, batch_size=BATCH_SIZE)

test_dataset = torch.utils.data.TensorDataset(test_tokens_tensor, test_masks_tensor, test_y_tensor)

test_sampler = torch.utils.data.SequentialSampler(test_dataset)

test_dataloader = torch.utils.data.DataLoader(test_dataset, sampler=test_sampler, batch_size=BATCH_SIZE)

4. Fine Tuning

We use the Adam optimizer to minimize the binary cross-entropy loss, and we train with a batch size of 1 for one epoch:

BATCH_SIZE = 1
EPOCHS = 1
bert_clf = BertBinaryClassifier()
optimizer = torch.optim.Adam(bert_clf.parameters(), lr=3e-6)
# BCELoss expects probabilities, which is what the sigmoid in forward() produces
loss_func = nn.BCELoss()

for epoch_num in range(EPOCHS):
    bert_clf.train()  # enable dropout during training
    train_loss = 0
    for step_num, batch_data in enumerate(train_dataloader):
        token_ids, masks, labels = tuple(t for t in batch_data)
        probas = bert_clf(token_ids, masks)
        batch_loss = loss_func(probas, labels)
        train_loss += batch_loss.item()
        bert_clf.zero_grad()
        batch_loss.backward()
        optimizer.step()
    # Report the mean loss over all steps of this epoch
    print('Epoch: ', epoch_num + 1)
    print("{0}/{1} loss: {2} ".format(step_num + 1, len(train_dataloader), train_loss / (step_num + 1)))
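For reference, the model and objective used in this training loop can be written out. Writing h for BERT’s 768-dimensional pooled [CLS] output after dropout, and w and b for the weights and bias of the linear layer, the classifier outputs a probability p, and nn.BCELoss scores it against the label y (1 for fraudulent, 0 for genuine). With a batch size of 1, each batch loss is just this single term:

```latex
p = \sigma\!\left(\mathbf{w}^{\top}\mathbf{h} + b\right)
  = \frac{1}{1 + e^{-(\mathbf{w}^{\top}\mathbf{h} + b)}},
\qquad \mathbf{w} \in \mathbb{R}^{768},\; b \in \mathbb{R}

\mathcal{L}(p, y) = -\bigl[\, y \log p + (1 - y)\log(1 - p) \,\bigr],
\qquad y \in \{0, 1\}
```

For a fraudulent posting (y = 1), predicting p = 0.9 costs -ln(0.9) ≈ 0.105, while predicting p = 0.1 costs -ln(0.1) ≈ 2.303, so confident mistakes are penalized heavily. The printed loss is the mean of these terms over the training steps.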

And we evaluate our model:

bert_clf.eval()  # disable dropout for evaluation
bert_predicted = []
all_logits = []
with torch.no_grad():
    for step_num, batch_data in enumerate(test_dataloader):
        token_ids, masks, labels = tuple(t for t in batch_data)
        # The model returns sigmoid probabilities, so these "logits" are really probabilities
        logits = bert_clf(token_ids, masks)
        loss_func = nn.BCELoss()
        loss = loss_func(logits, labels)
        numpy_logits = logits.cpu().detach().numpy()
        # Predict "fraudulent" when the probability exceeds 0.5
        bert_predicted += list(numpy_logits[:, 0] > 0.5)
        all_logits += list(numpy_logits[:, 0])
print(classification_report(test_y, bert_predicted))

This prints a classification report with the precision, recall, F1 score, and support for each class, which together describe how well the model separates fraudulent from genuine postings. This post is only a primer on detecting employment scams with BERT. Further fine-tuning, for example training for more epochs or adjusting hyperparameters such as the learning rate, could likely improve performance and uncover more fraudulent listings. Although we did not benchmark context-free or unidirectional models here, BERT’s bidirectional representations let it use the full context of a listing’s description when judging its legitimacy, which can help protect job seekers from exploitation by bad actors. This is just one way of putting machine learning to work on real-world problems, but it’s a good example of how useful BERT can be.
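classification_report produces a text summary rather than a confusion matrix; sklearn.metrics.confusion_matrix returns the matrix itself. To show how the per-class numbers relate to that matrix, here is a dependency-free sketch for the positive (fraudulent) class using made-up labels. The helper names are hypothetical, and the (tn, fp, fn, tp) order is chosen to match what confusion_matrix(y_true, y_pred).ravel() returns for 0/1 labels.

```python
def binary_confusion(y_true, y_pred):
    """Return (tn, fp, fn, tp) counts for boolean or 0/1 labels."""
    tn = fp = fn = tp = 0
    for t, p in zip(y_true, y_pred):
        t, p = bool(t), bool(p)
        if t and p:
            tp += 1          # fraudulent, flagged
        elif t:
            fn += 1          # fraudulent, missed
        elif p:
            fp += 1          # genuine, wrongly flagged
        else:
            tn += 1          # genuine, correctly passed
    return tn, fp, fn, tp


def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for the positive (fraudulent) class."""
    tn, fp, fn, tp = binary_confusion(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of flagged posts that are fraudulent
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of fraudulent posts that are flagged
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up labels for illustration: 1 = fraudulent, 0 = genuine
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
print(binary_confusion(y_true, y_pred))     # (3, 1, 1, 3)
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```

For a scam detector, recall on the fraudulent class is often the number to watch, since a missed scam is usually costlier than a genuine posting sent for manual review.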