Retrieval augmented generation (RAG) is the process of optimizing large language model (LLM) outputs by having the system retrieve information from outside of its training data. The technique pairs an LLM with search algorithms that query external data from knowledge bases, databases and web pages, with the goal of producing more accurate and up-to-date outputs. RAG can be used to tailor the output of a large language model to a specific business problem.

What Is Retrieval Augmented Generation (RAG)?

RAG (retrieval augmented generation) is a technique used to customize the outputs of an LLM (large language model) for a specific domain without altering the underlying model itself.

RAG: What Is Retrieval Augmented Generation?

Retrieval augmented generation, or RAG, is a way of making language models better at answering domain-specific queries by augmenting the user’s query with relevant information obtained from a corpus. It doesn’t make any attempt to change the model it’s using. It’s like hiring an adult who is already trained and already has skills: all you need to do is hand them a manual and tell them to learn it.

How Does Retrieval Augmented Generation (RAG) Work?

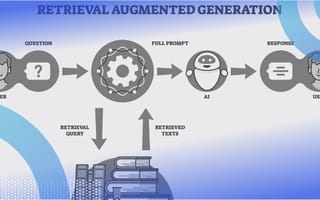

Whenever someone asks the LLM something, a separate system first finds relevant information in the corpus and feeds it to the LLM. This is usually done with some type of semantic search that finds documents, or subsections of documents, that seem related to the query.

There are all kinds of clever search techniques to make this work better, but the general idea is “find the relevant information.” This is the retrieval step of RAG, and it happens before the LLM is invoked.
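The retrieval step can be illustrated without any external libraries. The sketch below is only a toy: it assumes a tiny in-memory corpus and uses word-count cosine similarity as a stand-in for real semantic search, which would typically rely on embeddings. The function names (`tokenize`, `cosine_similarity`, `retrieve`) and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    # Highest-scoring documents first; drop documents with no overlap at all.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


# Hypothetical product-documentation snippets.
docs = [
    "To reset your password, open Settings and choose Security.",
    "Invoices are emailed on the first day of each month.",
    "You can export reports as CSV from the Reports page.",
]
results = retrieve("How do I reset my password?", docs, top_k=1)
print(results)
```

A production system would swap the word-count vectors for embeddings and the list scan for a vector index, but the shape of the step stays the same: score the corpus against the query and keep the best matches.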

Next, the LLM is asked a question that includes all the relevant information retrieved in the previous step. The prompt might read:

“The user asked: <insert user question here>.

Please answer the question using the information below:”

Usually, the goal of RAG is to restrict the LLM’s answer to what the retrieved information contains, so the prompt might instead say: “Please answer the question using the information below and nothing else you know.”
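Assembling that prompt is plain string formatting. The following is a minimal sketch, assuming the retrieved passages arrive as a list of strings; the `build_prompt` helper and its `strict` flag are hypothetical names used only for illustration.

```python
def build_prompt(question: str, passages: list[str], strict: bool = True) -> str:
    """Combine the user's question with retrieved passages into one prompt."""
    instruction = "Please answer the question using the information below"
    if strict:
        # Ask the model to stay within the retrieved material.
        instruction += " and nothing else you know"
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"The user asked: {question}\n\n{instruction}:\n{context}"


prompt = build_prompt(
    "How do I reset my password?",
    ["To reset your password, open Settings and choose Security."],
)
print(prompt)
```

The resulting string is what gets sent to the LLM in place of the bare user question.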

Why Is Retrieval Augmented Generation (RAG) Important?

LLMs are at the core of chatbots, generative AI and natural language processing (NLP) tools. Users often look to these technologies for answers to questions, summarizations or text creation, making it critical for LLM knowledge and outputs to be accurate. However, LLMs can be trained on static or inaccurate data, making for unpredictable, false or out-of-date responses.

Using RAG helps to remedy this issue, as it enables an LLM to retrieve more context and relevant data from a predetermined knowledge source before producing a response. This can make for more informed and accurate LLM responses and gives developers more control over generated outputs, which boosts reliability and user trust. RAG also eliminates the need to retrain a model, because newly retrieved data is supplied to the pre-trained LLM in the prompt rather than through training.

Benefits of RAG

Here are some specific situations where RAG is the best tool. It is usually all you need.

RAG Adapts to New Information on the Fly

Just shove the new information into your prompt and go. This is important for us: Our customers’ product documentation is always changing and we wouldn’t have time to re-fine-tune a model every time it changes.

RAG Can Be Better at Restricting Answers to Specific Questions

Even a fine-tuned model that was trained only on correct information can still make stuff up. It’s usually more effective to just tell a model, “Don’t mention anything that isn’t in the information below.”

With RAG, You Can Get Started Immediately

Fine-tuning requires time. Training requires even more time. RAG requires zero training and zero ahead-of-time collection of training data.

RAG Allows You to Handle Data Silo Situations

Sometimes, data is siloed across an organization. For example, Department A might not be able to use data from Department B. RAG avoids this problem because company-specific data never enters the model’s training set; it is only retrieved at query time.
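Because the data stays outside the model, a silo boundary can be enforced before retrieval ever runs. The sketch below shows one possible approach, which the text above does not prescribe: it assumes each document carries a department tag and that the caller's permitted departments are known. The `Document` class, `visible_documents` helper and sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    department: str  # which silo the document belongs to
    text: str


def visible_documents(
    corpus: list[Document], allowed_departments: set[str]
) -> list[Document]:
    """Keep only documents from departments the caller may read."""
    return [doc for doc in corpus if doc.department in allowed_departments]


corpus = [
    Document("A", "Department A onboarding checklist."),
    Document("B", "Department B salary bands."),
    Document("A", "Department A release calendar."),
]

# A user in Department A only ever searches Department A's documents,
# so Department B's data can never reach the prompt.
searchable = visible_documents(corpus, allowed_departments={"A"})
print([doc.text for doc in searchable])
```

Retrieval then runs over `searchable` instead of the full corpus, and the same underlying LLM can serve both departments.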

RAG Can Easily Adapt to Improvements in Foundational Models

Large players like OpenAI and Google spew out improvements in foundational models all the time. Imagine you fine-tune GPT-3, and then GPT-4 comes out. Now you have to fine-tune again. With RAG, you can just swap in the latest model and see how it performs relative to the last one. We do this all the time, comparing how new models perform in our pipeline relative to what we currently have in production.
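Swapping models is easy when the pipeline treats the model as an interchangeable function. Here is a minimal sketch of that idea, assuming a model is any callable that maps a prompt string to an answer string. The stub models and retriever are hypothetical placeholders for real API clients and a real search step.

```python
from typing import Callable

# A "model" is any function from prompt text to answer text.
Model = Callable[[str], str]
Retriever = Callable[[str], list[str]]


def answer_with_rag(question: str, retrieve: Retriever, model: Model) -> str:
    """Retrieve context, build the prompt, and call whichever model was passed in."""
    context = "\n".join(retrieve(question))
    prompt = (
        f"The user asked: {question}\n\n"
        f"Please answer the question using the information below:\n{context}"
    )
    return model(prompt)


def compare_models(
    questions: list[str], retrieve: Retriever, models: dict[str, Model]
) -> dict[str, list[str]]:
    """Run the same questions and retrieval through every candidate model."""
    return {
        name: [answer_with_rag(q, retrieve, model) for q in questions]
        for name, model in models.items()
    }


# Hypothetical stand-ins; real ones would call a search index and an LLM API.
def fake_retrieve(question: str) -> list[str]:
    return ["Reports can be exported as CSV from the Reports page."]


def current_model(prompt: str) -> str:
    return "current: " + prompt.splitlines()[-1]


def candidate_model(prompt: str) -> str:
    return "candidate: " + prompt.splitlines()[-1]


results = compare_models(
    ["How do I export a report?"],
    fake_retrieve,
    {"current": current_model, "candidate": candidate_model},
)
print(results)
```

Nothing in the retrieval or prompt code changes between runs; only the entry in the `models` dictionary does.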

You can, of course, pair RAG with either foundation model training or fine-tuning. For example, we’re considering training our own model that is specifically designed to help users use software. We could then use that model instead of a generic LLM (like GPT-4) in our RAG system.

I recommend only doing that if raw RAG doesn’t give you the performance you want, or if you have enough scale that improving performance by a few percentage points is worth the training cost.

Challenges of RAG

You already know that I think RAG is usually the right answer. So let’s approach this question a bit differently and explore reasons you shouldn’t always use RAG.

RAG Can Be Slower Than Raw LLMs

RAG is typically slower than a raw or fine-tuned LLM because it involves a semantic retrieval step before the LLM call. This is usually fine for consumer applications, but there are tons of scenarios where mega speed is important. For example, let’s say you’re using an LLM to make a decision that blocks your API from returning. In that scenario, a 100ms retrieval step might not be acceptable.
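Whether the retrieval overhead matters for a given application can be checked by timing each stage separately. The sketch below assumes stub functions that simulate latency with `time.sleep`; the names `timed`, `fake_retrieve` and `fake_llm` are hypothetical, and the sleep durations are arbitrary rather than measured values.

```python
import time


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms


def fake_retrieve(question: str) -> list[str]:
    time.sleep(0.02)  # simulated search latency (arbitrary)
    return ["Example passage."]


def fake_llm(prompt: str) -> str:
    time.sleep(0.05)  # simulated generation latency (arbitrary)
    return "Example answer."


def answer_with_timings(question: str) -> tuple[str, dict[str, float]]:
    """Run both RAG stages and record how long each one took."""
    passages, retrieval_ms = timed(fake_retrieve, question)
    prompt = question + "\n\n" + "\n".join(passages)
    answer, generation_ms = timed(fake_llm, prompt)
    return answer, {"retrieval_ms": retrieval_ms, "generation_ms": generation_ms}


answer, timings = answer_with_timings("How do I export a report?")
print(answer, timings)
```

With real components in place of the stubs, the `retrieval_ms` figure shows how much of the latency budget the retrieval step consumes.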

Models are slow to train and fine-tune, but once trained/fine-tuned, they are fast to run. It goes without saying that big models are typically slower to run than small models.

RAG May Not Be Effective for Domain-Specific Outputs

In scenarios where you need to generate extremely specific, high-dimensional outputs, it’s unlikely a general-purpose LLM will be good enough for you, although you should always try first before going down the path of training your own model.

For example, I’m pretty sure Suno, an incredibly cool tool for creating music from natural language prompts, has trained its own model or at least done a hefty amount of fine-tuning.

Another example is Adept’s Fuyu model, which was built specifically for AI agents to navigate user interfaces. General-purpose large language models are particularly bad at this. We tried the basic version, just throwing a whole webpage DOM at GPT-4 to see if it could use the user interface. It barely worked.

Differences Between Retrieval Augmented Generation (RAG) and Semantic Search

Although they are related concepts, retrieval augmented generation and semantic search have different purposes:

- RAG retrieves relevant external information from a data source and passes it to an LLM to provide more accurate responses for users.

- Semantic search applies semantics to understand the meaning of search queries and returns relevant data based on context, as opposed to keyword search, which returns results only when a keyword matches.

They also work differently:

- RAG operates on search algorithms (to gather new data) and language generation capabilities (to produce outputs that use the new data).

- Semantic search operates on semantic algorithms (to interpret query context) and indexing systems (to manage and store data).

Combining semantic search and RAG can enhance LLM outputs, especially when retrieving data for complex questions or from large-scale knowledge bases.

Frequently Asked Questions

What is retrieval augmented generation?

Retrieval augmented generation (RAG) is a technique used to optimize large language model (LLM) outputs by retrieving additional information outside of the LLM’s training data. External data can be queried from specific knowledge bases, databases and web pages to provide more context to LLMs, which helps generate more accurate and up-to-date responses.

What are the best uses for RAG?

Use RAG when you need language output that is constrained to some area or knowledge base, when you want some degree of control over what the system outputs, when you don’t have time or resources to train or fine-tune a model, and when you want to take advantage of changes in foundation models like those behind ChatGPT.

What are some alternatives to RAG?

One is model training, which is creating your own model from scratch. Model training involves gathering a big training set and running it through an optimization procedure to get a set of weights that represent the model.

Another is fine-tuning, which is taking a model that has been trained and adapting it to your own use case by training it further.

What is the difference between fine-tuning and retrieval augmented generation?

Fine-tuning an LLM involves training a pre-trained model on a targeted dataset in order to improve performance for specific tasks. Retrieval augmented generation doesn’t require training; it uses search algorithms to retrieve external data and adds it to the prompt of a pre-trained LLM for improved outputs.

What is the difference between RAG and LLM?

RAG is an optimization technique that can be applied to improve LLM outputs. LLMs are machine learning models designed to understand and generate human language.