In machine learning, building a well-performing model involves finding a good set of parameters, a suitable training data set and an effective learning algorithm. Meta learning techniques help find these elements over multiple learning episodes; in other words, by learning to solve a set of tasks instead of only one task at a time.

What Is Meta Learning?

Meta learning is a machine learning technique that enables models to adapt to new tasks using prior experience from a variety of training tasks.

This way, the meta learning model gets better at solving a new and unseen task. This method is also referred to as the learning-to-learn approach.

How Does Meta Learning Work?

To understand how meta learning works in simple terms, let’s consider this example. Suppose we have a robot that can pick up objects and place them where they belong. However, the robot has not been trained to pick up an object it has never seen before. In a traditional supervised learning paradigm, we need to gather a large dataset of images of the new object with its location coordinates and train the robot for each new object.

With meta learning, however, we can train the robot to learn how to quickly adapt to new objects by leveraging its previous experience with other objects. The robot learns to identify shared features across previously seen objects, enabling it to generalize to novel ones with minimal examples. In the training setup for meta learning, we use a few-shot learning approach.

The meta learning process for training the robot involves the following steps:

- Collect a dataset of objects with various shapes and textures, and for each object, specify the correct location to place it.

- Split the dataset into training and testing sets.

- Divide the training dataset into K-shot tasks, where each task consists of N objects with K examples of each and their corresponding locations.

For each K-shot task, perform the following steps:

- Select N objects at random from the training set, together with K examples of each and their corresponding locations.

- Train the model to predict the correct location for each object in the task based on its features, using gradient descent or another optimization algorithm.

- Update the model parameters using the loss calculated for the current task.

After training on all the K-shot tasks, the model should have learned to adapt quickly to new tasks by leveraging the experience gained from previous ones. Finally, evaluate the model on the test set, which consists of objects and locations that were not seen during training.

If the model has trained well, it will achieve good results on these unseen tasks. This is one variation of the meta learning algorithm; there are other types as well.
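The task-construction part of this procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the toy dataset, the `sample_task` helper and the parameter names `n_objects`, `k_shot` and `n_query` are hypothetical and do not come from any particular library. The split is done by object type, so the test objects are genuinely unseen.

```python
import random

def sample_task(dataset, n_objects, k_shot, n_query, rng):
    """Build one few-shot task from a dict: object name -> list of (features, location).

    Returns a support set (k_shot examples per object, used for training on the
    task) and a query set (n_query examples per object, used to compute the loss).
    """
    chosen = rng.sample(sorted(dataset), n_objects)
    support, query = [], []
    for name in chosen:
        examples = rng.sample(dataset[name], k_shot + n_query)
        support.extend(examples[:k_shot])
        query.extend(examples[k_shot:])
    return support, query

rng = random.Random(0)

# Toy stand-in for the robot data: 10 object types, 20 examples each.
# Each example pairs a 2-D feature vector with a target (x, y) placement location.
dataset = {
    f"object_{i}": [
        ([rng.gauss(i, 1.0), rng.gauss(-i, 1.0)], (float(i), float(2 * i)))
        for _ in range(20)
    ]
    for i in range(10)
}

# Split by object type so that test objects are never seen during training.
names = sorted(dataset)
train_set = {name: dataset[name] for name in names[:7]}
test_set = {name: dataset[name] for name in names[7:]}

support, query = sample_task(train_set, n_objects=3, k_shot=2, n_query=4, rng=rng)
print(len(support), len(query))  # 6 12
```

In a full training loop, a new task would be sampled at every iteration, the model would be trained on the support set, and the loss on the query set would drive the parameter update.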

Approaches to Meta Learning

There are three significant approaches to meta learning: metric-based learning, model-based learning and optimization-based learning.

Metric-Based Meta Learning

Metric-based meta learning is commonly used for tasks such as image similarity detection, signature detection and facial recognition. This approach focuses on learning a distance metric, which is a function that measures the similarity or dissimilarity between pairs of data points.

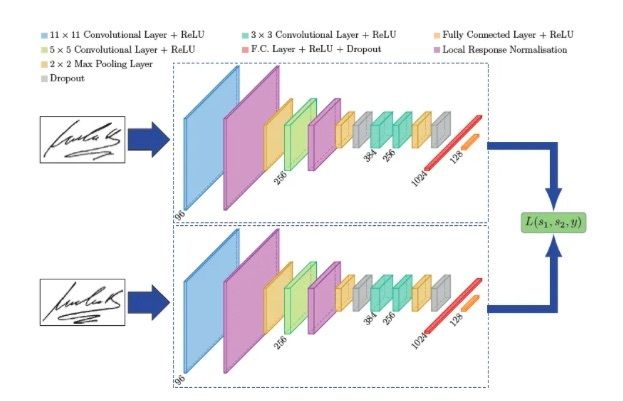

Metric learning can be used to learn a good representation space for the data. This is done by training the meta learning algorithm on different sets of tasks, each with a small amount of training data. The image above is an example of metric-based meta learning from the paper SigNet: Convolutional Siamese Network for Writer Independent Offline Signature Verification. It depicts a Siamese network that uses metric-based meta learning to distinguish genuine signatures from forged ones.

The step-by-step working detail of metric learning is as follows:

- Prepare the data to perform similarity learning. It could be a pair of images, audio files or any other type of data.

- Initialize the parameters of the distance metric with random values.

- Use the distance metric to compute the pairwise similarity for all pairs of data points.

- Compute a loss function that measures how well the computed similarities match the target similarity matrix.

- Compute the gradient of the loss function with respect to the parameters of the distance metric.

- Update the distance metric using the gradients computed from the above step.

Steps 3 to 6 (computing the similarities, the loss and the gradients, then updating the metric) are repeated until the distance metric converges or for a fixed number of iterations.
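The loop above can be illustrated with a deliberately small sketch. It assumes a weighted squared Euclidean distance with one learnable weight per feature and a contrastive loss with a margin; both are choices made for this example rather than the method of the SigNet paper, which trains a convolutional Siamese network. The toy data, the function names and the hyperparameter values are likewise hypothetical.

```python
import random

def distance(w, a, b):
    """Weighted squared Euclidean distance; w holds one learnable weight per feature."""
    return sum(wi * (ai - bi) ** 2 for wi, ai, bi in zip(w, a, b))

def contrastive_step(w, pairs, margin, lr):
    """One gradient-descent update of w on the contrastive loss; returns (w, mean loss)."""
    grad = [0.0] * len(w)
    loss = 0.0
    for a, b, similar in pairs:
        d = distance(w, a, b)
        sq = [(ai - bi) ** 2 for ai, bi in zip(a, b)]
        if similar:              # pull similar pairs together: loss = d
            loss += d
            grad = [g + s for g, s in zip(grad, sq)]
        elif d < margin:         # push dissimilar pairs apart: loss = margin - d
            loss += margin - d
            grad = [g - s for g, s in zip(grad, sq)]
    n = len(pairs)
    # Clip at zero so the weighted distance stays non-negative.
    w = [max(0.0, wi - lr * g / n) for wi, g in zip(w, grad)]
    return w, loss / n

rng = random.Random(0)

# Toy data: feature 0 separates the two classes, feature 1 is pure noise.
points = [([rng.gauss(4.0 * label, 0.2), rng.gauss(0.0, 2.0)], label)
          for label in (0, 1) for _ in range(10)]

# All unordered pairs, labeled similar when both points share a class.
pairs = [(xa, xb, la == lb)
         for i, (xa, la) in enumerate(points)
         for (xb, lb) in points[i + 1:]]

w = [rng.random(), rng.random()]     # random initialization of the metric
for step in range(500):              # fixed number of iterations
    w, loss = contrastive_step(w, pairs, margin=1.0, lr=0.05)

print("learned weights:", w)
```

After training, the weight on the informative feature should dominate the weight on the noisy one, which is what it means for the metric to have learned which differences matter.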

Model-Based Meta Learning

In this approach, the meta learner learns the model’s parameters or architecture in such a way that the model can be adapted quickly to new tasks with a small amount of training data.

The step-by-step working detail of model-based meta learning is:

- Prepare the data for different tasks and initialize the model’s parameters with random values.

- Train a model for a set of tasks.

- Given a new task, use the learned model to generate a prior distribution over the task parameters. The prior distribution represents what the model expects the task parameters to be, based on what it learned from the different training tasks.

- Sample a small amount of data from the new task.

- Combine the prior with the sampled data to compute a posterior distribution over the task parameters.

- Use the posterior distribution to update the model’s parameters, which adapts the model to the new task.

- Use this model to perform inference for the new task.
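One simple way to read these steps is as a hierarchical Bayesian model. The sketch below makes strong simplifying assumptions that are not in the original text: every task is a one-parameter linear model `y = a * x` with Gaussian noise of known variance, the prior over the slope `a` is a Gaussian summarizing the slopes fitted on the training tasks, and the posterior is the standard conjugate Gaussian update. Practical model-based meta learners typically use neural networks instead; all names here are hypothetical.

```python
import random
import statistics

def make_task(slope, n, noise_std, rng):
    """Generate n noisy samples from the task y = slope * x."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [slope * x + rng.gauss(0.0, noise_std) for x in xs]
    return xs, ys

def fit_slope(xs, ys):
    """Least-squares slope for y = a * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def posterior(prior_mean, prior_var, xs, ys, noise_var):
    """Conjugate Gaussian update for the slope given a few (x, y) samples."""
    precision = 1.0 / prior_var + sum(x * x for x in xs) / noise_var
    weighted = prior_mean / prior_var + sum(x * y for x, y in zip(xs, ys)) / noise_var
    return weighted / precision, 1.0 / precision

rng = random.Random(0)
noise_std = 0.1

# Meta-training: fit each training task, then summarize the fitted slopes as a prior.
train_slopes = [
    fit_slope(*make_task(rng.gauss(2.0, 0.5), 50, noise_std, rng))
    for _ in range(30)
]
prior_mean = statistics.mean(train_slopes)
prior_var = statistics.variance(train_slopes)

# New task with true slope 3.0: only five labeled samples are available.
xs, ys = make_task(3.0, 5, noise_std, rng)
post_mean, post_var = posterior(prior_mean, prior_var, xs, ys, noise_std ** 2)

# Inference on the new task uses the posterior mean as the adapted parameter.
def predict(x):
    return post_mean * x

print("prior mean:", prior_mean, "posterior mean:", post_mean)
```

The posterior variance is always smaller than the prior variance here, which reflects the gain in certainty from the few samples of the new task.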

Model-based meta learning is commonly used in reinforcement learning (RL), where an agent must adapt to new environments and keep making decisions as conditions change.

Optimization-Based Meta Learning

This family of meta learning algorithms learns a set of initialization parameters of the model that can be quickly adapted to new tasks.

The steps of optimization-based meta learning are:

- Prepare the data for the different sets of tasks and initialize the model’s parameters with random values.

- For each training task, start from the current initialization parameters and perform a small number of gradient steps on the task to obtain adapted parameters. These adapted parameters are used to perform the task.

- Compute the loss on the task using the adapted parameters.

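- Compute the gradients of the loss with respect to the adapted parameters. This is the first-order form of the update; some methods instead differentiate through the adaptation steps back to the initialization parameters.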

- Update the initialization parameters using the gradients from the above step such that the initialization parameters are better suited for quick adaptation to new tasks.

- After training, the model can handle a new task by starting from the learned initialization parameters and performing a small number of gradient steps on that task to obtain adapted parameters, which are then used to perform the task.

Optimization-based meta learning is used in many areas of machine learning to learn how to optimize neural network weights, algorithm hyperparameters and other parameters.
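The steps above can be sketched as a first-order variant of this idea, in which the gradient computed at the adapted parameters is applied directly to the initialization. The sketch assumes a toy family of linear regression tasks and a two-parameter model `y = w * x + b`; the task family, function names, learning rates and step counts are all illustrative choices, not values from the original text.

```python
import random

def mse_grad(theta, data):
    """Mean squared error and its gradient for the linear model y = w * x + b."""
    w, b = theta
    n = len(data)
    loss = gw = gb = 0.0
    for x, y in data:
        err = w * x + b - y
        loss += err * err / n
        gw += 2.0 * err * x / n
        gb += 2.0 * err / n
    return loss, [gw, gb]

def adapt(theta, support, inner_lr, steps):
    """Inner loop: a few gradient steps on one task, starting from the shared initialization."""
    for _ in range(steps):
        _, g = mse_grad(theta, support)
        theta = [t - inner_lr * gi for t, gi in zip(theta, g)]
    return theta

def sample_task(rng, n):
    """Hypothetical task family: lines with slope near 3 and intercept near -1."""
    a, b = rng.gauss(3.0, 0.3), rng.gauss(-1.0, 0.3)
    return [(x, a * x + b) for x in (rng.uniform(-1.0, 1.0) for _ in range(n))]

rng = random.Random(0)
theta = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]   # random initialization

for _ in range(2000):
    task = sample_task(rng, 10)
    support, query = task[:5], task[5:]
    adapted = adapt(theta, support, inner_lr=0.1, steps=3)
    _, g = mse_grad(adapted, query)                  # gradient at the adapted parameters
    theta = [t - 0.01 * gi for t, gi in zip(theta, g)]   # first-order meta-update

# Meta-test: adapt to a new task with a few gradient steps from the learned initialization.
new_task = sample_task(rng, 10)
loss_before, _ = mse_grad(theta, new_task[:5])
loss_after, _ = mse_grad(adapt(theta, new_task[:5], 0.1, 3), new_task[:5])
print("initialization:", theta)
print("loss before and after adaptation:", loss_before, loss_after)
```

The learned initialization should settle near the center of the task family, so that three gradient steps are enough to fit any individual task reasonably well.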

Benefits of Meta Learning

Meta learning has several benefits, among them:

- Faster adaptation to new tasks. Meta learning allows a model to learn how to quickly adapt to new tasks with a small amount of training data. This is particularly useful in scenarios where the distribution of tasks is non-stationary and new tasks are constantly introduced.

- Data efficiency. Meta learning can lead to efficient usage of data by allowing a model to learn from a small number of examples. This is because meta learning algorithms learn to identify the underlying structure of a set of tasks, which can be used to generalize to new tasks with fewer training examples.

- Improved generalization. Meta learning can improve the generalization performance by learning to extract useful features or representations from a set of tasks. By learning to extract features that are relevant across a wide range of tasks, a meta-learner can generalize better to new tasks.

- Automatic model selection. Meta learning can be used to automatically select the best model architecture and hyperparameters for a given task. This can save time and effort in the model selection process, particularly in scenarios where the optimal model architecture and hyperparameters are unknown.

- Domain adaptation. Meta learning can be used to learn how to adapt to different domains by learning a set of domain-agnostic representations that transfer across multiple domains.

Applications of Meta Learning

Meta learning has a wide range of applications across different domains. Major applications include:

- Few-shot learning. Meta learning is often used for few-shot learning tasks, where the goal is to learn a model that can quickly adapt to new tasks with a small amount of training data. Few-shot learning is useful in scenarios where new tasks are introduced frequently, and it is not practical to collect large amounts of labeled data for each task.

- Computer vision. Meta learning has been applied to computer vision tasks such as object detection, image classification and semantic segmentation. Here, meta learning can be used to learn models that can quickly adapt to new datasets with a small number of training examples.

- Natural language processing. Meta learning has been used in NLP applications, such as language modeling, sentiment analysis and other similar tasks. In these areas, it is used for cross-domain and cross-lingual generalization.

- Recommendation systems. Meta learning can be used to learn recommendation models for individual users based on their past interactions with a system, which helps deliver personalized recommendations.

- Robotics. Meta learning has been used in many robotics applications such as robot manipulation, grasping and navigation. In these applications, meta learning can be used to learn models that can be quickly adapted to new environments with a small number of training examples.

Most of the time, deep learning requires a large amount of annotated training data, which is costly and time-consuming to collect. Meta learning helps tackle this problem by enabling efficient training with less data, and it can be applied in various domains of machine learning.

Frequently Asked Questions

What is meta learning in machine learning?

Meta learning is a machine learning technique that enables models to quickly adapt to new and unseen tasks by leveraging experience from previous tasks.

How does meta learning differ from traditional machine learning?

Traditional models are trained for specific tasks with large datasets. Meta learning trains models to generalize across tasks, allowing fast adaptation using limited data, often through few-shot learning.

What are the main approaches to meta learning?

There are three primary approaches: metric-based, model-based and optimization-based meta learning.