Word2vec is a technique and family of machine learning model architectures used in natural language processing (NLP) to create vectors that represent words. These vectors aim to capture the semantic relationships between words, where words close together in the vector space indicate they have similar contexts. Word2vec models are shallow, two-layer neural networks trained to reconstruct the linguistic contexts of words.

Why Use Word2Vec for NLP?

Using a neural network with only a couple of layers, word2vec learns relationships between words and embeds them in a lower-dimensional vector space. To do this, it trains each word against the words that neighbor it in the input corpus, which captures some of the meaning carried by the sequence of words.

This article explores quick and easy ways to generate word embeddings with word2vec using the Gensim library in Python.

What Is Word2Vec?

Developed by a team of researchers at Google, word2vec attempts to solve two of the issues with the bag-of-words approach:

- High-dimensional vectors (one dimension per word in the vocabulary)

- Words assumed to be completely independent of each other
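To see why bag-of-words style vectors treat words as independent, here is a small NumPy sketch using one-hot vectors (the four-word vocabulary is made up purely for illustration). Each word gets one dimension per vocabulary entry, so the vectors grow with the vocabulary, and any two different words have a cosine similarity of zero, whether they are related or not.

```python
import numpy as np

# Toy vocabulary, chosen only for illustration
vocab = ["king", "queen", "apple", "pie"]

# One-hot encoding: one dimension per vocabulary word
one_hot = {word: np.eye(len(vocab))[i] for i, word in enumerate(vocab)}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Every vector is as long as the vocabulary...
print(one_hot["king"])                             # [1. 0. 0. 0.]
# ...and related and unrelated words look equally dissimilar
print(cosine(one_hot["king"], one_hot["queen"]))   # 0.0
print(cosine(one_hot["king"], one_hot["apple"]))   # 0.0
```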

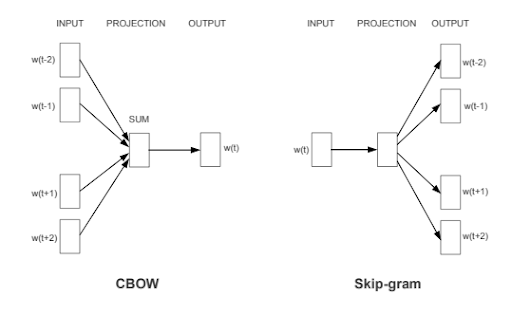

To address these issues, the researchers devised two novel approaches:

- Continuous bag of words (CBoW)

- Skip-gram
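The two approaches differ in what they predict: CBoW uses the surrounding context words to predict the center word, while skip-gram uses the center word to predict each of its neighbors. The plain-Python sketch below only builds the training examples each approach would learn from (the window size of 2 is an arbitrary choice); it does not train a network.

```python
def context_window(tokens, i, window):
    """Return the words within `window` positions of tokens[i], excluding it."""
    start, end = max(0, i - window), min(len(tokens), i + window + 1)
    return [tokens[j] for j in range(start, end) if j != i]

def skipgram_pairs(tokens, window=2):
    """Skip-gram: one (center word, context word) pair per neighbor."""
    return [(tokens[i], ctx)
            for i in range(len(tokens))
            for ctx in context_window(tokens, i, window)]

def cbow_examples(tokens, window=2):
    """CBoW: one (all context words, center word) example per position."""
    return [(context_window(tokens, i, window), tokens[i])
            for i in range(len(tokens))]

sentence = ["i", "like", "apple", "pie", "for", "dessert"]
print(skipgram_pairs(sentence)[:3])
# [('i', 'like'), ('i', 'apple'), ('like', 'i')]
print(cbow_examples(sentence)[2])
# (['i', 'like', 'pie', 'for'], 'apple')
```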

Both approaches produce a vector space in which words used in similar contexts map to word vectors that lie close together. That means that if two word vectors are close together, those words should have similar meanings based on their contexts in the corpus.

For example, using cosine similarity to analyze the vectors their model produced, the researchers were able to solve analogies such as king minus man plus woman = ? The output vector most closely matched queen:

king - man + woman ≈ queen
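The arithmetic itself is easy to reproduce. The sketch below uses hand-made three-dimensional toy vectors (not real word2vec output; the dimensions loosely stand for "royalty," "maleness" and "fruit"), adds and subtracts them, and then returns the remaining word whose vector has the highest cosine similarity to the result, excluding the input words.

```python
import numpy as np

# Hand-made toy vectors for illustration only (not trained embeddings)
vectors = {
    "king":  np.array([0.9,  0.8, 0.1]),
    "queen": np.array([0.9, -0.8, 0.1]),
    "man":   np.array([0.1,  0.8, 0.0]),
    "woman": np.array([0.1, -0.8, 0.0]),
    "apple": np.array([0.0,  0.0, 1.0]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(positive, negative, vectors):
    """Return the word closest (by cosine) to sum(positive) - sum(negative)."""
    target = sum(vectors[w] for w in positive) - sum(vectors[w] for w in negative)
    candidates = [w for w in vectors if w not in positive + negative]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy(["king", "woman"], ["man"], vectors))  # queen
```

On a trained Gensim model, most_similar(positive=['king', 'woman'], negative=['man']) follows the same idea, working on unit-normalized vectors.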

If this seems confusing, don’t worry. Applying and exploring word2vec is simple and will make more sense as I go through examples!

How to Install Gensim Word2Vec Library in Python

The Python library Gensim, built primarily for topic modeling, makes it easy to apply word2vec as well as several other algorithms. Gensim is free, and you can install it using pip or conda:

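pip install --upgrade gensim

or

conda install -c conda-forge gensim

You can find the data and all of the code in my GitHub. This is the same repo as the spam email data set I used in my last article. The code below uses the Gensim 4 API (for example, vector_size instead of the older size parameter).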

Import the Dependencies

from gensim.models import Word2Vec, FastText
import pandas as pd
import re
from sklearn.decomposition import PCA
from matplotlib import pyplot as plt
import plotly.graph_objects as go
import numpy as np
import warnings

warnings.filterwarnings('ignore')

df = pd.read_csv('emails.csv')

I start by loading the libraries and reading the .csv file using Pandas.

Word2Vec Example in Python (With Matplotlib)

Before playing with the email data, I want to explore word2vec with a simple example using a small vocabulary of a few sentences:

sentences = [['i', 'like', 'apple', 'pie', 'for', 'dessert'],
             ['i', 'dont', 'drive', 'fast', 'cars'],
             ['data', 'science', 'is', 'fun'],
             ['chocolate', 'is', 'my', 'favorite'],
             ['my', 'favorite', 'movie', 'is', 'predator']]

Generate Word Embeddings

You can see the sentences have been tokenized since I want to generate embeddings at the word level, not by sentence. Run the sentences through the word2vec model.

# train word2vec model
w2v = Word2Vec(sentences, min_count=1, vector_size=5)
print(w2v)
# Word2Vec<vocab=19, vector_size=5, alpha=0.025>

Notice when constructing the model, I pass in min_count = 1 and vector_size = 5. That means the model includes every word that occurs at least once and generates a vector with a fixed length of five for each word.

When printed, the model displays the number of unique vocabulary words, the vector size and the initial learning rate (alpha, default 0.025).
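The min_count threshold is applied when the vocabulary is built: words that occur fewer than min_count times in the corpus are dropped. A rough plain-Python equivalent of that filtering step (my own sketch, not Gensim's code) shows why min_count = 1 keeps all 19 words in the toy corpus, while min_count = 2 would keep only four.

```python
from collections import Counter

sentences = [['i', 'like', 'apple', 'pie', 'for', 'dessert'],
             ['i', 'dont', 'drive', 'fast', 'cars'],
             ['data', 'science', 'is', 'fun'],
             ['chocolate', 'is', 'my', 'favorite'],
             ['my', 'favorite', 'movie', 'is', 'predator']]

def build_vocab(sentences, min_count):
    """Keep only words that appear at least `min_count` times."""
    counts = Counter(word for sentence in sentences for word in sentence)
    return {word for word, n in counts.items() if n >= min_count}

print(len(build_vocab(sentences, min_count=1)))     # 19
print(sorted(build_vocab(sentences, min_count=2)))  # ['favorite', 'i', 'is', 'my']
```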

# access vector for one word
print(w2v.wv['chocolate'])
# example output (values vary between runs):
# [-0.04609262 -0.04943436 -0.08968851 -0.08428907 0.01970964]

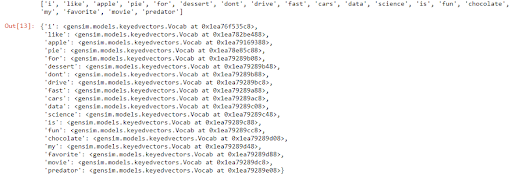

# list the vocabulary words
words = list(w2v.wv.index_to_key)
print(words)

# or show the dictionary that maps each word to its index
w2v.wv.key_to_index

Notice that it’s possible to access the embedding for one word at a time. Also note that you can review the vocabulary in a couple of different ways: w2v.wv.index_to_key gives a list of the words, and w2v.wv.key_to_index gives a dictionary mapping each word to its index.

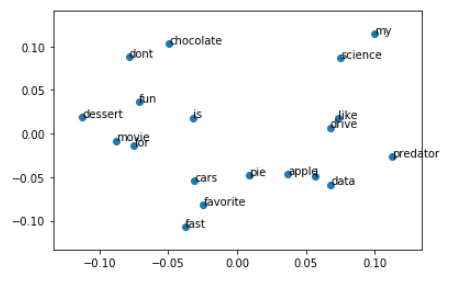

Visualize Word Embeddings

Now that you’ve created the word embeddings using word2vec, you can visualize them by projecting the vectors into a lower-dimensional space. I am using scikit-learn’s principal component analysis (PCA) to reduce the word vectors to two dimensions, and then I’m using Matplotlib to plot the results.

# one row per vocabulary word, in the same order as index_to_key
X = w2v.wv.vectors
pca = PCA(n_components=2)
result = pca.fit_transform(X)

# create a scatter plot of the projection
plt.scatter(result[:, 0], result[:, 1])
words = list(w2v.wv.index_to_key)
for i, word in enumerate(words):
    plt.annotate(word, xy=(result[i, 0], result[i, 1]))
plt.show()

Fortunately, the corpus is tiny so it is easy to visualize; however, it’s hard to decipher any meaning from the plotted points since the model had so little information from which to learn.
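For intuition about what PCA(n_components=2) does to the word vectors, here is a minimal NumPy sketch of the same idea: center the data, find the principal directions with a singular value decomposition, and project onto the top two. The random 19 × 5 matrix is only a stand-in for the real word vectors. Up to the sign of each axis, the coordinates should agree with scikit-learn's, which also centers the data and uses an SVD.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their top two principal components."""
    X_centered = X - X.mean(axis=0)   # PCA works on mean-centered data
    # Rows of Vt are the principal directions, ordered by explained variance
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:2].T      # coordinates in the 2D space

rng = np.random.default_rng(0)
X = rng.normal(size=(19, 5))  # stand-in for 19 five-dimensional word vectors
coords = pca_2d(X)
print(coords.shape)  # (19, 2)
```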

Word2Vec Example in Python (With Plotly)

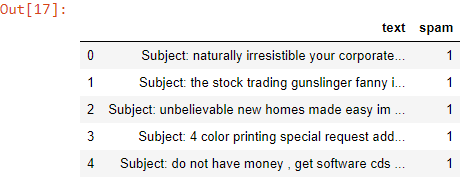

Now that I’ve walked through a simple example, it’s time to apply those skills to a larger data set. Inspect the email data by calling the dataframe head().

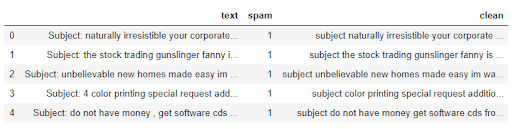

df.head()

Cleaning the Data

Notice the text has not been pre-processed at all! With a simple loop and a few regular expressions, it’s easy to convert the text to lowercase and strip out punctuation, tags, digits and special characters.

clean_txt = []
for text in df['text']:
    desc = text.lower()
    # remove tags such as <br>
    desc = re.sub(r'</?.*?>', ' ', desc)
    # remove punctuation, digits and special characters
    desc = re.sub(r'[^a-z]', ' ', desc)
    # collapse repeated whitespace
    desc = re.sub(r'\s+', ' ', desc).strip()
    clean_txt.append(desc)

df['clean'] = clean_txt
df.head()

Notice the clean column has been added to the dataframe and the text has been cleaned of punctuation and upper case.

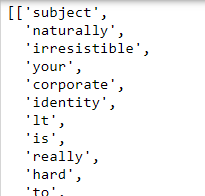
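Wrapping the cleanup in a function makes it easy to check on a single string before running it over the whole dataframe. This sketch applies the same kinds of steps as the loop above (lowercasing, removing tags, non-letters and extra whitespace); the function name and the sample email text are made up for illustration.

```python
import re

def clean_text(text):
    """Lowercase text and strip tags, punctuation, digits and extra spaces."""
    text = text.lower()
    text = re.sub(r"</?.*?>", " ", text)      # remove tags such as <br>
    text = re.sub(r"[^a-z]", " ", text)       # keep letters only
    return re.sub(r"\s+", " ", text).strip()  # collapse whitespace

sample = "Subject: Re: Meeting at 10AM!<br>Call me @ 555-1234."
print(clean_text(sample))  # subject re meeting at am call me
```

With the email data, df['text'].apply(clean_text) would build the cleaned column in one line.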

Creating a Corpus and Vectors

Since I want word embeddings, I need to tokenize the text. Using a for loop, I go through the dataframe and tokenize each clean row. After creating the corpus, I generate the word vectors by passing the corpus through word2vec.

corpus = []
for col in df.clean:
    # split on whitespace; split() also drops empty tokens
    word_list = col.split()
    corpus.append(word_list)

# show first value
corpus[0:1]

# generate vectors from corpus
model = Word2Vec(corpus, min_count=1, vector_size=56)

Notice the data has been tokenized and is ready to be vectorized!

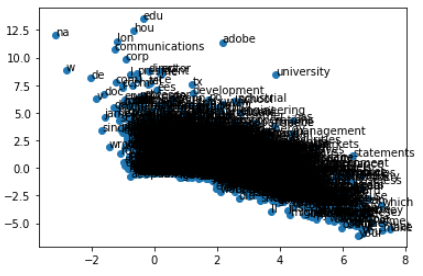

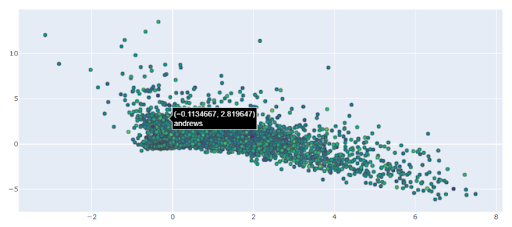

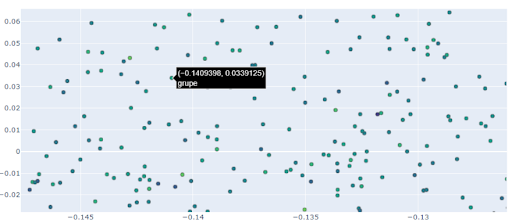

Visualizing Email Word Vectors

The corpus for the email data is much larger than the first example. Because there are so many words, plotting them with Matplotlib the way I did before doesn’t work well.

Good luck reading that! It’s time to use a different tool. Instead of Matplotlib, I’m going to use Plotly to generate an interactive visualization we can zoom in on. That will make it easier to explore the data points.

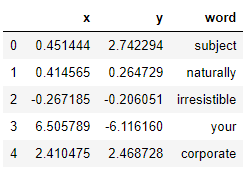

I use the PCA technique, then put the results and words into a dataframe. This will make it easier to graph and annotate in Plotly.

# pass the embeddings to PCA
X = model.wv.vectors
pca = PCA(n_components=2)
result = pca.fit_transform(X)

# create df from the pca results
pca_df = pd.DataFrame(result, columns=['x', 'y'])

# add the words for the hover effect (same order as the rows of X)
pca_df['word'] = model.wv.index_to_key
pca_df.head()

Notice I add the word column to the dataframe so the word displays when hovering over the point on the graph.

Next, construct a scatter plot using Plotly Scattergl to get the best performance on large data sets. Refer to the documentation for more information about the different scatter plot options.

N = len(pca_df)  # one random color value per point
fig = go.Figure(data=go.Scattergl(
    x=pca_df['x'],
    y=pca_df['y'],
    mode='markers',
    marker=dict(
        color=np.random.randn(N),
        colorscale='Viridis',
        line_width=1
    ),
    text=pca_df['word'],
    textposition='bottom center'
))
fig.show()

Notice I use NumPy to generate random numbers for the graph colors. This makes the graph a bit more visually appealing! I also set the text to the word column of the dataframe, so the word appears when hovering over the data point.

Plotly is great since it generates interactive graphs and it allows me to zoom in on the graph and inspect points more closely.

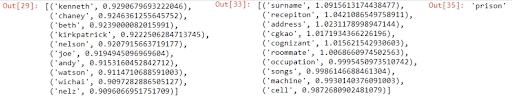

Analyzing and Predicting Using Word Embeddings

Beyond visualizing the word embeddings, it’s possible to explore them with some code. Additionally, the word vectors can be saved as a text file for use in future modeling. Review the Gensim documentation for the complete list of features.

# explore embeddings using cosine similarity
model.wv.most_similar('eric')
model.wv.most_similar_cosmul(positive=['phone', 'number'], negative=['call'])
model.wv.doesnt_match("phone number prison cell".split())

# save embeddings
filename = 'email_embd.txt'
model.wv.save_word2vec_format(filename, binary=False)

Gensim uses cosine similarity to find the most similar words.

It’s also possible to evaluate analogies and find the word that’s least similar or doesn’t match with the other words.
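Roughly speaking, doesnt_match averages the unit-normalized vectors of the given words and returns the word least similar to that average. Here is a NumPy sketch of that idea; the vectors are made-up toy values, not vectors from the email model.

```python
import numpy as np

# Made-up toy vectors for illustration only
vectors = {
    "phone":  np.array([1.0, 0.9, 0.0]),
    "number": np.array([0.9, 1.0, 0.1]),
    "cell":   np.array([0.8, 0.8, 0.3]),
    "prison": np.array([0.0, 0.1, 1.0]),
}

def doesnt_match(words, vectors):
    """Return the word whose vector is least similar to the mean vector."""
    unit = np.array([vectors[w] / np.linalg.norm(vectors[w]) for w in words])
    mean = unit.mean(axis=0)
    mean /= np.linalg.norm(mean)
    scores = unit @ mean  # cosine similarity of each word to the mean
    return words[int(np.argmin(scores))]

print(doesnt_match("phone number prison cell".split(), vectors))  # prison
```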

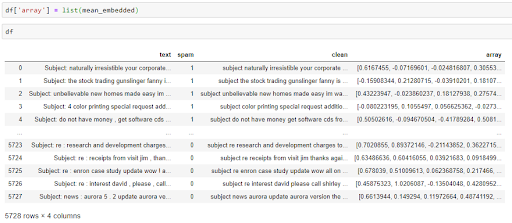

Using Word Embeddings in Predictive Modeling

You can also use these vectors in predictive modeling. To convert a document of multiple words into a single vector using the trained model, a common approach is to look up the word2vec vector of every word in the document and then take their mean.

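# MeanEmbeddingVectorizer is not part of Gensim; it is a helper class from the
# Kaggle notebook referenced below and must be defined before this runs
mean_embedding_vectorizer = MeanEmbeddingVectorizer(model)
mean_embedded = mean_embedding_vectorizer.fit_transform(df['clean'])

To learn more about using the word2vec embeddings in predictive modeling, check out this Kaggle notebook.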
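If you don’t have that notebook’s class at hand, the averaging step can be sketched as a small function. This is a hypothetical stand-in, not the notebook’s code: it assumes the documents are already tokenized and that the word vectors behave like a dict (Gensim’s model.wv supports both word in model.wv and model.wv[word]). The function name and the zero-vector fallback for documents with no known words are my own choices.

```python
import numpy as np

def mean_document_vectors(docs, word_vectors, dim):
    """Average the vectors of known words in each tokenized document.

    Documents with no in-vocabulary words get a zero vector.
    """
    rows = []
    for tokens in docs:
        known = [word_vectors[w] for w in tokens if w in word_vectors]
        rows.append(np.mean(known, axis=0) if known else np.zeros(dim))
    return np.vstack(rows)

# Toy 2-D vectors standing in for model.wv (illustration only)
toy_wv = {"free": np.array([1.0, 0.0]), "money": np.array([0.0, 1.0])}
docs = [["free", "money", "now"], ["hello"]]
features = mean_document_vectors(docs, toy_wv, dim=2)
print(features.shape)  # (2, 2)
```

With the email model, a call like mean_document_vectors(corpus, model.wv, model.wv.vector_size) would give one row of features per email.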

Using the approaches introduced with word2vec, it’s practical to train embeddings for very large vocabularies and use them as features in machine learning tasks. Natural language processing is a complex field, but there are many libraries and tools for Python that make it easy to get started.

Frequently Asked Questions

What is the difference between TF-IDF and Word2Vec?

Both TF-IDF and word2vec are used in natural language processing (NLP) to help computers understand relationships between words, though they have different approaches.

TF-IDF (Term Frequency-Inverse Document Frequency) is a statistical measure that evaluates the importance of a word in a document in relation to a collection of documents (corpus). Word2vec is an NLP technique that creates vectors in a vector space as representations of words, where vectors that are close together represent words that have similar contexts. TF-IDF is effective for keyword extraction and text classification, while word2vec is effective for capturing semantic relationships in text.

What is the difference between word embedding and Word2Vec?

In natural language processing, word embedding is a representation of a word (typically as a vector), while word2vec is a specific family of machine learning model architectures used to produce word embeddings.

What is an example of Word2Vec?

In word2vec, word vectors that are close together in the vector space represent words that appear in similar contexts. For example, given the words “king,” “queen,” “man” and “woman,” the vector obtained by computing king - man + woman would likely be closest to the vector of “queen.” In other words, king - man + woman ≈ queen.

What are the problems with Word2Vec?

Problems with the word2vec technique include:

- Unable to process unknown or out-of-vocabulary words

- Treats each word as an independent vector, even if multiple words share sub-words and morphological similarities

- Maps words with multiple meanings (such as “bank”) to a single vector

- Less effective with small data sets
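FastText, which is imported at the start of this article but not used, targets the first two problems by also representing each word as a bag of character n-grams, so a word that never appeared in training can still get a vector built from its pieces. The sketch below shows only the n-gram extraction step, with < and > marking word boundaries; it uses n = 3 alone for readability, whereas FastText uses a range of lengths (3 to 6 by default).

```python
def char_ngrams(word, n_min=3, n_max=3):
    """Character n-grams of a word, with < and > marking its boundaries."""
    padded = f"<{word}>"
    return [padded[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(padded) - n + 1)]

print(char_ngrams("where"))  # ['<wh', 'whe', 'her', 'ere', 're>']
```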