Artificial intelligence, machine learning and deep learning are trending tech terms that we hear everywhere these days, but there are major misconceptions about what these words actually mean. Many companies claim to incorporate some kind of AI in their applications or services, but what does that mean in practice?

What Is AI, Machine Learning and Deep Learning?

- Artificial intelligence (AI): a program that can sense, reason, act and adapt.

- Machine learning: algorithms whose performance improves as they are exposed to more data over time.

- Deep learning: subset of machine learning in which multilayered neural networks learn from vast amounts of data.

Artificial Intelligence vs. Machine Learning vs. Deep Learning

First, let’s go over the basics of what AI, machine learning and deep learning are and how they relate to each other:

- Artificial intelligence (AI): the development of machines capable of performing tasks that typically require human intelligence.

- Machine learning: a branch of AI where machines are built to learn and adapt to new tasks without explicit programming.

- Deep learning: a branch of machine learning where machines use multilayered neural networks to simulate complex decision-making.

Now, let’s dive deeper into each of these concepts to better understand them and how they are applied in the real world.

What Is Artificial Intelligence (AI)?

Artificial intelligence, or AI, describes a machine mimicking cognitive functions that humans associate with the human mind, such as learning and problem solving. At its simplest, AI can be a programmed rule that tells the machine to behave in a specific way in certain situations. In other words, artificial intelligence can be nothing more than a program of several if-else statements.

An if-else statement is a simple rule programmed by a human. Consider a robot moving on a road. A programmed rule for that robot could be:

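```python
# A hand-written rule: the robot follows it but never learns from experience.
if something_is_in_the_way:
    stop_moving()
else:
    continue_moving()
```

Because even a handful of hand-written rules like this counts as AI, it's more worthwhile, when we talk about artificial intelligence, to consider two more specific subfields of AI: machine learning and deep learning.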

What Is Machine Learning?

We can think of machine learning as a series of algorithms that analyze data, learn from it and make informed decisions based on those learned insights.
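To contrast this with the robot's hand-written if-else rule above, here is a minimal sketch of a program that learns its rule from data instead. It assumes, hypothetically, that the robot's sensor reports the distance to the nearest obstacle in meters and that a human has labeled some readings as "stop" or "keep moving". Rather than a programmer hard-coding the stopping distance, the program picks the threshold that misclassifies the fewest labeled examples (a one-feature decision stump). The function names and sample readings are made up for illustration.

```python
from typing import List, Tuple

# Hypothetical training example: (distance to obstacle in meters, should the robot stop?)
Example = Tuple[float, bool]


def learn_stop_threshold(examples: List[Example]) -> float:
    """Return the distance threshold that misclassifies the fewest examples.

    The learned rule is: stop if distance <= threshold.
    Candidate thresholds are the observed distances themselves.
    """
    best_threshold = examples[0][0]
    best_errors = len(examples) + 1
    for candidate, _ in examples:
        # Count how many labeled examples this candidate threshold gets wrong.
        errors = sum(
            (distance <= candidate) != label
            for distance, label in examples
        )
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


def should_stop(distance: float, threshold: float) -> bool:
    # Same shape as the hand-written if-else rule,
    # but the threshold came from data rather than from a programmer.
    return distance <= threshold


# Made-up sensor readings labeled by a human operator.
training_data = [(0.2, True), (0.5, True), (0.9, True), (1.5, False), (2.0, False), (3.1, False)]
threshold = learn_stop_threshold(training_data)
print(threshold)                    # 0.9
print(should_stop(0.7, threshold))  # True
print(should_stop(2.5, threshold))  # False
```

Given different labeled readings, the same code would learn a different threshold without anyone rewriting the rule, which is the practical difference between a programmed rule and a learned one.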

Machine learning is a relatively old field and incorporates methods and algorithms that have been around for decades, some of them since the 1960s. These classic algorithms include the Naïve Bayes classifier and support vector machines, both of which are often used for data classification. In addition to classification, there are clustering algorithms such as k-means and hierarchical (tree-based) clustering. To reduce the dimensionality of data and gain more insight into its nature, machine learning uses methods such as principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE).
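As an illustration of one of these classic methods, here is a minimal sketch of k-means clustering using Lloyd's algorithm: it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. It is written in plain Python on 2-D points for readability. Seeding the centroids with the first k points is a simplifying assumption of this sketch, and the sample points are made up.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def k_means(points: List[Point], k: int, iterations: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's algorithm for k-means clustering on 2-D points.

    Assumption of this sketch: the first k points are the initial centroids.
    """
    centroids = list(points[:k])
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append((
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                ))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return centroids, labels


# Two made-up groups of points, one near (1, 1) and one near (8.5, 8.5).
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 1.5), (9.0, 8.0)]
centroids, labels = k_means(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

Note that nothing tells the algorithm which points belong together; it discovers the two groups from the data alone, which is what distinguishes clustering from the labeled classification tasks mentioned above.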

What Is Deep Learning?

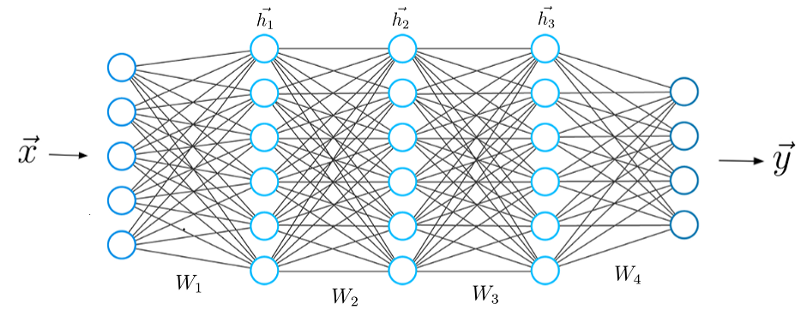

Deep learning is a subfield of artificial intelligence based on artificial neural networks.

Since deep learning algorithms also require data in order to learn and solve problems, we can also call it a subfield of machine learning. The terms machine learning and deep learning are often treated as synonymous. However, these systems have different capabilities.

Unlike classical machine learning algorithms, deep learning uses a multilayered structure of algorithms called a neural network.
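To show what "multilayered" means in practice, here is a minimal sketch of a forward pass through a tiny two-layer neural network with a ReLU hidden layer, in plain Python. The weights are set by hand, using a well-known textbook construction that makes the network compute XOR (a function that no single linear layer can represent). In a real deep learning system there would be many more layers and neurons, and the weights would be learned from data, typically by backpropagation and gradient descent; the names below are illustrative only.

```python
from typing import Callable, List, Optional

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    # Rectified linear unit: passes positive values, zeroes out negatives.
    return max(0.0, x)


def dense(inputs: Vector, weights: Matrix, biases: Vector,
          activation: Optional[Callable[[float], float]] = None) -> Vector:
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


# Hand-picked (not learned) weights that make the network compute XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def xor_network(x1: float, x2: float) -> float:
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, activation=relu)  # layer 1
    (output,) = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)                  # layer 2
    return output


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```

Each layer transforms the output of the previous one, and it is this stacking of nonlinear layers that lets deep networks represent patterns a single layer cannot.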

Deep learning is especially effective at learning patterns in data and making predictions, making it useful for tasks like natural language processing (NLP), image recognition, speech recognition, machine translation and more.

Advantages of AI vs. Machine Learning vs. Deep Learning

AI Advantages

Artificial intelligence (AI) can enable better decision-making and reduce human error, and it is used for a variety of automated tasks across virtually every industry, from malware detection in IT security to weather forecasting to stockbrokers looking for optimal trades.

Machine Learning Advantages

Machine learning can offer greater accuracy and predictive power than hand-coded, rule-based AI systems. It also provides classical algorithms for various kinds of tasks, such as clustering, regression and classification.
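As a minimal sketch of one of these classical algorithms, here is ordinary least-squares linear regression for a single input variable. It "learns" a slope and an intercept from example data using the closed-form solution (slope = covariance of x and y divided by the variance of x) and then uses them to predict new values. The function name and data points are made up for illustration.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (one input variable)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel, so plain sums suffice.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # made-up data lying exactly on y = 2x + 1
slope, intercept = fit_line(xs, ys)
print(slope, intercept)        # 2.0 1.0
print(slope * 5.0 + intercept)  # prediction for x = 5: 11.0
```

With real, noisy data the fitted line will not pass through every point; least squares chooses the line that minimizes the sum of squared prediction errors.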

Deep Learning Advantages

Deep learning models use artificial neural networks, don't require manual feature extraction and can increase their accuracy when given more training data. These aspects allow deep learning models to solve complex, hierarchical tasks that classical machine learning models have more difficulty solving, like generative AI, NLP and computer vision tasks.

Many of the most visible recent advances in AI are due to deep learning. Self-driving cars, chatbots and AI assistants like Alexa and Siri rely heavily on it, and it has greatly improved machine translation services such as Google Translate and the recommendation systems that suggest movies and TV series on platforms like Netflix.

Frequently Asked Questions

What is artificial intelligence (AI)?

Artificial intelligence (AI) describes a machine's ability to mimic human cognitive functions, such as learning, reasoning and problem solving.

What is machine learning?

Machine learning is a branch of AI that uses a series of algorithms to analyze and learn from data, and make informed decisions from the learned insights. It is often used to automate tasks, forecast future trends and make user recommendations.

Are machine learning and AI the same thing?

No, machine learning and artificial intelligence are not the same thing, though they are closely related. AI is a field that involves creating systems capable of performing tasks that typically require human intelligence, while machine learning is a subfield of AI that focuses specifically on building algorithms that allow systems to learn from data without explicit programming.