In computer science, Monte Carlo tree search (MCTS) is a heuristic search algorithm for decision processes, most notably those employed in software that plays board games. It’s a probabilistic and search algorithm that combines classic tree search implementations alongside machine learning principles of reinforcement learning.

Monte Carlo Tree Search Definition

Monte Carlo tree search is a heuristic search algorithm that relies on intelligent tree search to make decisions. It’s most often used to perform game simulations, but it can also be utilized in cybersecurity, robotics and text generation.

Before we dive into the Monte Carlo tree search algorithm, we need to understand a few basics. So, let’s discuss a few terms first that will help us understand the problem space.

What Is Monte Carlo Tree Search?

Monte Carlo tree search is a method that relies on intelligent tree search that balances exploration and exploitation. It performs random sampling in the form of simulations and stores the statistics of actions to make more educated choices in each subsequent iteration.

Monte Carlo tree search only searches a few layers deep into the tree and prioritizes which parts of the tree to explore. It then simulates the outcome rather than exhaustively expanding the search space. In doing so, it limits how many evaluations it has to make.

The individual evaluation relies on the playout/simulation in which the algorithm effectively plays the game from a given starting point all the way to the leaf state by making completely random decisions, and then it records the results which is then used to update all the nodes in the random path all the way to the root. When it completes the simulation, it then selects the state that has the best rollout score.

To better understand how it works, you need to understand combinatorial games and game trees.

What Is a Combinatorial Game?

Monte Carlo tree search is used in combinatorial games. These are sequential games with perfect information. The preconditions for a combinatorial game include:

- It must be a two-player game

- It must be a sequential game in which players take turns to play their move.

- It must have a finite set of well-defined moves.

Examples of a combinatorial game include, chess, checkers, Go and tic-tac-toe.

What Is a Game Tree?

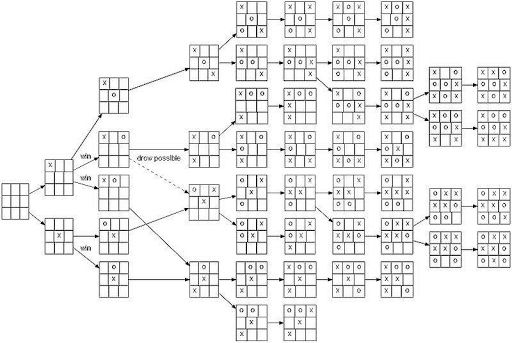

A game tree is a graph representing all possible game states within a combinatorial game. A game tree is a directed graph whose nodes represent a particular state of the game, and the edges represent possible next states from the given state. The leaf states of the tree represent either a win, lose or tie for the game.

This can be used to measure the complexity of a game, as it represents all the possible ways a game can pan out.

For example, following is the game tree of Tic-Tac-Toe:

If we want to replace one player with a computer program (AI), we need to pick the move that leads to the winning leaf node path.

There are various algorithms we can use to solve this problem, including:

- Minimax.

- Minimax with alpha, beta pruning.

- A* search.

These algorithms traverse the complete game tree (in various fashions) to pick the optimal move. But what if the game tree size is very large?

When the game has a high branching factor it’s not always computationally possible to traverse the entire game tree to pick the best move. This is when Monte Carlo tree search is very effective. Monte Carlo tree search (MCTS) utilizes a heuristic search algorithm method to solve the game tree.

How Monte Carlo Tree Search Works

The Monte Carlo tree search algorithm has four phases. We assign state values and number of interactions for each node.

1. Selection

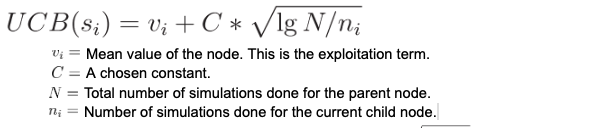

In this phase, the algorithm uses the following formula to calculate the state value of all the possible next moves and pick the one which gives the maximum value.

The first term vi is the exploitation term. The second term, which is the square-root of lg N/ni is the exploration term. The algorithm considers both nodes, one with a high state value, and one that is relatively unexplored, to make a selection. This contact defines the weightage between exploitation and exploration.

At the beginning when no node is explored, it makes a random selection because there is no data available to make a more educated selection.

When a node is unexplored, i.e. when n1=0, the second term becomes ∞ and thus obtains a maximum possible value and automatically becomes a candidate for selection. Thus, the equation makes sure all children get selected at least once.

2. Expansion

In this phase, we expand from one node and start looking one level deeper. The node we expanded from becomes the parent (current state), and its children become the possible next moves.

3. Simulation

In this phase, we simulate the game from the selected child node in phase one and continue the game by making random choices until we reach an end state, i.e. a win, lose or draw. Let’s assign following values to these results/outcomes:

Win = +1Loose = -1Draw = 0

4. Backpropagation

In this phase, we backpropagate and update the result we found in the simulation phase to all the nodes in the random path we traversed and up till the root node. This sets the value v(i) which is then used in the selection phase of the formula.

Advantages of the Monte Carlo Tree Search

There are several advantages to using a Monte Carlo tree search, including:

- Domain agnostic.

- The ability to halt it at any time.

- Asymmetric tree growth.

1. Domain Agnostic

Monte Carlo tree search doesn’t require any strategic or tactical knowledge about the given domain to make reasonable decisions. The algorithm can function effectively with no knowledge of a game, apart from its legal moves and end conditions. This means that a single Monte Carlo tree search implementation can be reused for a number of games with little modification.

However, in more complex games, such as those with high branching factor or real-time ones, as well as in various practical domains, like transportation, scheduling or security, an efficient Monte Carlo tree search application often requires a problem-dependent modification or integration with other techniques.

2. Anytime Algorithm

The algorithm can be halted at any time to return the current best estimate. The search tree built thus far may be discarded or preserved for future reusability.

3. Asymmetric

Monte Carlo tree search performs asymmetric tree growth that adapts to the topology of the search space. The algorithm visits more interesting nodes more often and focuses its search time in more relevant parts of the tree.

This makes the Monte Carlo tree search suitable for games with large branching factors, such as 19x19 Go. Such large combinatorial spaces typically cause problems for standard depth- or breadth-based search methods, but the adaptive nature of Monte Carlo tree search means that it will eventually find optimal moves and focus its search effort there.

Disadvantages of Monte Carlo Tree Search

There are a few disadvantages to Monte Carlo tree search, including:

1. Memory Requirement

As the tree growth becomes rapid after a few iterations, it requires a huge amount of memory.

2. Reliability

There is a reliability issue with Monte Carlo tree search. In certain scenarios, there might be a single branch or path that might lead to loss against the opposition when implemented for those turn-based games. This is mainly due to the vast amount of combinations and each of the nodes might not be visited enough number of times to understand its result or outcome in the long run.

3. Iterations

Monte Carlo tree search algorithm needs a huge number of iterations to be able to effectively decide the most efficient path. So, there is a bit of a speed issue there.

Monte Carlo Tree Search Applications

Monte Carlo tree search has various use cases, including:

1. Game simulation

It’s used in two-player board games like tic-tac-toe, Chess and Go.

2. Security

Malware is one of the biggest threats in IT security, with millions of malicious applications released every year at an ever growing rate. For this reason, automated techniques based on machine learning are fundamental tools for helping security experts in analyzing and classifying dangerous software. Active malware analysis is one that focuses on acquiring knowledge about dangerous software by executing actions that trigger a response in the malware. It uses Monte Carlo tree search.

3. Robotics

Mobile robots hold great promise in reducing the need for humans to perform jobs such as vacuuming, seeding, harvesting, painting, search and rescue and inspection. In practice, these tasks must often be done without an exact map of the area and could be completed more quickly through the use of multiple robots working together. Many multi-robots on-line coverage path planning algorithms have been developed and Monte Carlo tree search planner is now being used because of its efficient completion time.

4. Text Generation

Monte Carlo Tree search is used in simulation-based natural language generation that accounts for both building a correct syntactic structure and reflecting the given situational information as input for the generated sentence. The Monte Carlo tree search for this nontrivial search problem in simulation, uses context-free grammar rules as search operators.

Monte Carlo Tree Search Example

To run through an example, we need a simple game from which we can draw a game tree. So let’s invent a hypothetical game.

- The Game: Pair

- Number of players: 2

- Number of Boxes in the board: 4

To win, a player has to play two adjacent boxes.

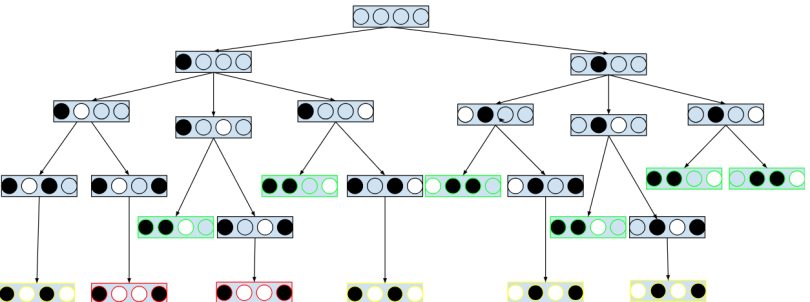

- Green leaves are the winning positions, i.e +1 (Total 6 Nodes).

- Yellow leaves are a draw, i.e 0 (Total 4 Nodes)

- Red leaves are losing positions, i.e -1 (Total 2 Nodes).

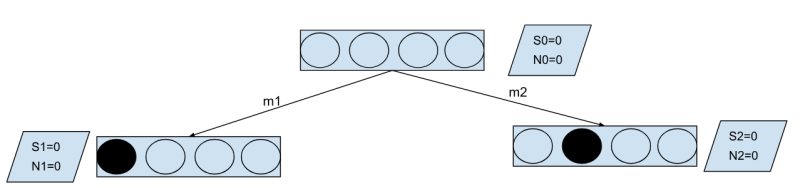

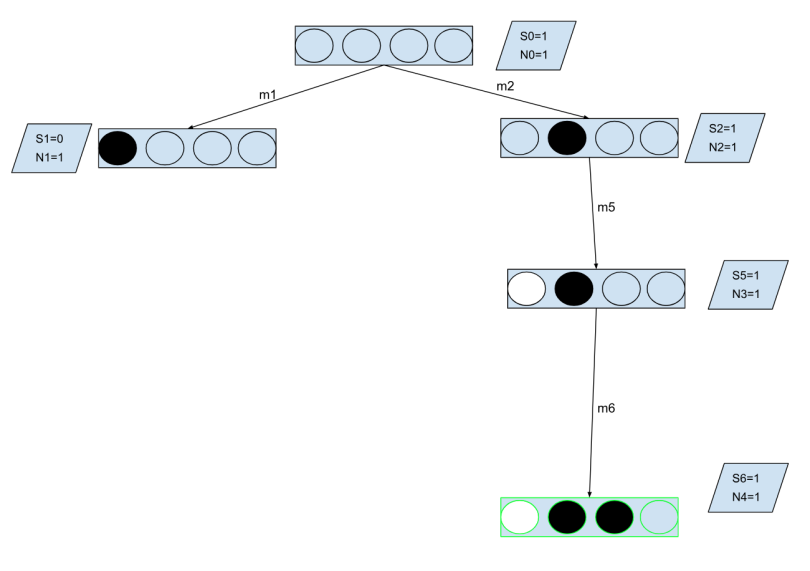

Iteration 1

1. Selection, Expansion

S0is the initial state from which we need to pick the next move betweenm1andm2, which will result in either stateS1orS2.- Here we calculate the upper confidence bound (UCB) value for both

S1andS2, since both are leaf node the value will be infinity for both and hence we randomly pickS1and simulateS1.

2. Simulation S1

- Since

S1is leaf node and not visited, we simulated the game with random moves till the leaf node. - For simplicity we only simulate once and make

m3andm4moves which results in a draw. - As per the game

Draw = 0, we assignS4=0and increase the count of visitN4=1.

3. Backpropagation

- Now we backpropagate this result all the way to the root.

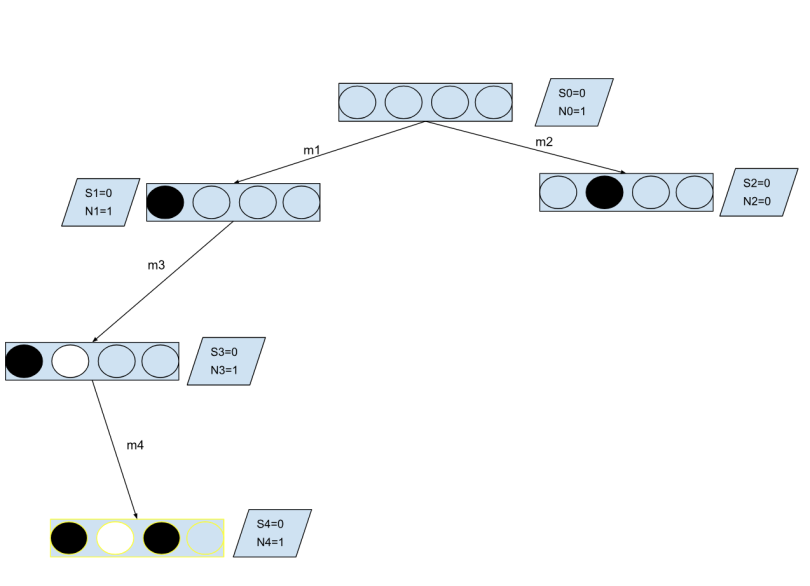

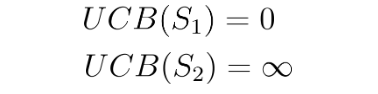

Iteration 2

1. Selection

- Now, we again need to calculate the UCB values for

S1andS2. After one interaction:

Since N is 0 for S2, we pick S2 this time.

2. Simulation S1

- Since

S1is a leaf node and wasn’t visited, we simulated the game with random moves until we reach the leaf node. - For simplicity, we’ll only simulate once and make

m5and m6 moves, which results in a win. - As per the game

Win = 1, we assignS6=1and increase the count of visitN4=1.

3. Backpropagation

- Now, we backpropagate this result all the way to the root.

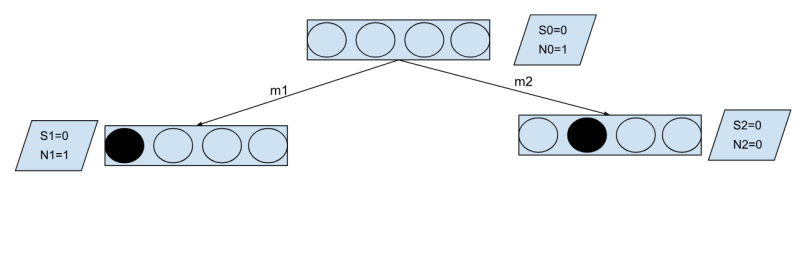

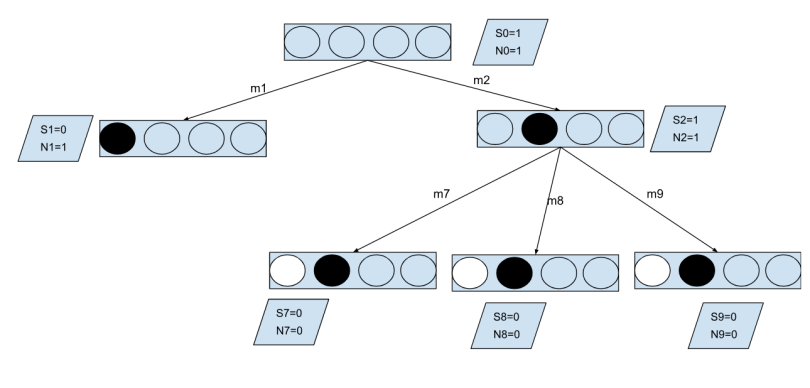

Iteration 3

1. Selection

- Now, we again need to calculate the UCB values for

S1andS2. After the second interaction:

As N = 1 both and for S2UCB = 1, which is higher. So, we pick S2 again this time.

Iteration 4

- Now,

S2has already been visited and we again select it. So now, we expandS2. S2will become the parent, and we’ll run the selection on its children.

- Here, we calculate the UCB Value for

S7,S8andS9. Since all are leaf nodes, the value will be infinity. So we will randomly pickS7and SimulateS7.

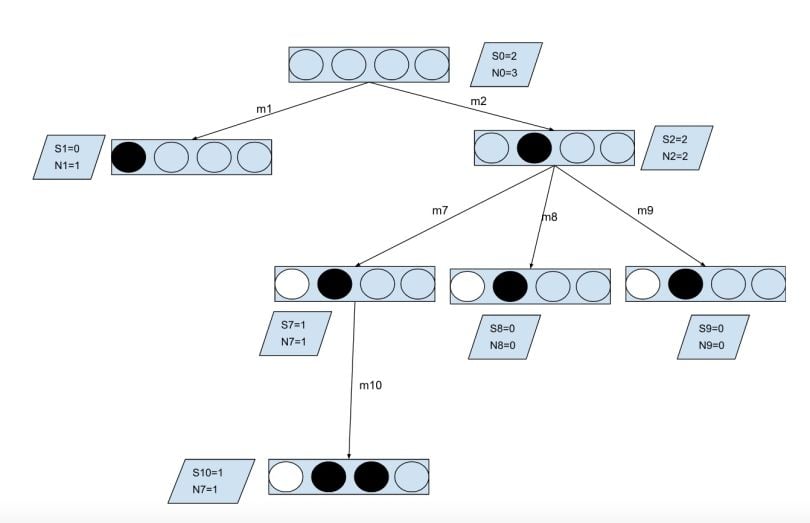

2. Simulation S7

- Since

S7is a leaf node and wasn’t visited, we simulated the game with random moves until we reached the leaf node. - For simplicity, we only simulate once and make

m10moves, which results in a win. - As per the game,

Win = 1, so, we assignS10=1and increase the count of visitN4=1.

Backpropagation

- Now, we backpropagate this result all the way to the root.

Monte Carlo Tree Search Example Results

Because this is an anytime algorithm, if we stop it here, UCB value for S2 > S1, m2 will be a favorable move. So the algorithm chooses move M2 (second place) over move M1 (corner place) because it was part of more iterations and had a higher score.

The more the algorithm plays the game, the more data it gathers about the different possible moves and makes better decisions.