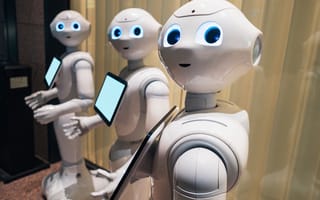

Embodied AI refers to artificial intelligence systems that can engage with their surrounding environment through a body, such as a robot or virtual avatar. In contrast, disembodied AI exists purely as algorithms or software running on computer servers — think chatbots like ChatGPT or virtual assistants like Amazon’s Alexa, which interact with users through text and voice but have no physical form.

Embodied AI Definition

Embodied AI, also known as embodied intelligence, is AI that can engage with its surroundings through a physical system. It gathers real-time insights from its movements and interactions with its environment to improve its decision-making over time.

Rather than relying on data people provide to describe the world, embodied AI interacts directly with its environment, gaining immediate, first-hand insights that it can use to inform its subsequent decisions and actions. This technology links perception and cognition to the physical world, opening up new applications for artificial intelligence in industries ranging from healthcare to manufacturing.

What Is Embodied AI?

Embodied AI systems are physical systems powered by artificial intelligence that can directly engage with their surroundings. They use devices like sensors and motors to collect data about their movements and environments, then apply machine learning, computer vision and natural language processing to extract insights from that data. From there, they can adjust their decisions and actions over time.

In this sense, embodied AI loosely mirrors how humans and animals learn: through the interactions they have with their environment. This approach is applied in a number of technologies, including autonomous vehicles, drones and avatars in virtual reality (VR) and augmented reality (AR) experiences.

Key Components of Embodied AI

To better understand how embodied AI learns from its environment, it helps to break down the technology into four main components:

- Perception: Embodied AI uses devices like sensors, microphones and cameras to perceive its surroundings, gathering visual data and other sensory information to learn about its environment.

- Motor control: Physical systems outfitted with AI must learn how to move through different settings and interact with individual objects. Because every movement depends on what the system senses, and every movement changes what it senses next, perception and motion are closely connected in embodied AI.

- Reasoning and planning: Through trial and error, embodied AI learns which decisions lead to a desired outcome. It then becomes better at making decisions in real time, allowing it to stay on track toward a specific goal.

- Learning in context: Embodied AI uses techniques like reinforcement learning, imitation learning and self-supervised learning to learn from experiences and interactions with its environment, so it can excel within particular contexts.
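The trial-and-error learning described under reasoning and planning, and the reinforcement learning named under learning in context, can be illustrated with tabular Q-learning. This is a minimal toy sketch, not how any particular robot is trained: it assumes a hypothetical corridor of six cells in which the agent starts at one end, can step left or right, earns a reward of 1 for reaching the far end and pays a small cost for every other step. The corridor, reward values, hyperparameters and function names are all illustrative assumptions.

```python
import random

N_CELLS = 6          # hypothetical corridor length; cell 5 is the goal
ACTIONS = (-1, +1)   # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # illustrative learning rate, discount and exploration rate

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_CELLS - 1)  # walls at both ends keep the agent in bounds
    if nxt == N_CELLS - 1:
        return nxt, 1.0, True      # reached the goal
    return nxt, -0.01, False       # small cost per step rewards shorter routes

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    # Q-table: estimated long-term value of taking each action in each cell
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability EPSILON, otherwise act on current estimates
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best next value
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = nxt
    return q

def greedy_policy(q):
    """Best-known action for every non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_CELLS - 1)]

if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy should choose to move right (+1) in every non-goal cell, because repeated attempts have taught the agent that this reaches the goal fastest. Real embodied systems replace the lookup table with neural networks and the corridor with sensor data, but the explore, act, observe and update loop is the same idea.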

Applications of Embodied AI

Embodied AI offers opportunities to build more intelligent physical systems in industries like logistics, healthcare, automotive, extended reality and education.

Logistics

Autonomous mobile robots use AI to determine optimal routes, identify hazards and transport packages in warehouses and factories. AI-powered delivery robots and drones can also calculate the best path to reach their destination on time while avoiding obstacles like pedestrians and birds.
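Route planning of this kind is often done by searching a map of free and blocked space. The sketch below uses breadth-first search on a small grid, which finds a shortest route when every move costs the same. Production robots more commonly use planners such as A* on richer maps; the grid layout, symbols and the `shortest_path` function here are hypothetical.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid where '#' cells are blocked.
    Returns the list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # each visited cell maps to the cell it was reached from
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back through the recorded parents to rebuild the route
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                frontier.append((nr, nc))
    return None  # every route is blocked

# Hypothetical warehouse floor: '.' is free space, '#' is a shelf or detected hazard
WAREHOUSE = [
    "....#",
    ".##.#",
    ".....",
]

if __name__ == "__main__":
    print(shortest_path(WAREHOUSE, (0, 0), (2, 4)))
```

Here the search returns a seven-cell route around the shelves. If a newly detected hazard blocks every route, the function returns None, which a robot could treat as a signal to wait or replan.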

Healthcare

In the medical field, surgical robots can use AI to help make precise incisions, apply the appropriate amount of pressure and perform careful movements during procedures. Embodied AI also lets robots and exoskeletons use sensor feedback to give patients proper support during physical rehabilitation.

Automotive

Self-driving cars are another form of embodied AI, as they gather data about their surroundings via sensors and analyze this data in real time. They can then alter route suggestions based on traffic updates, navigate various driving conditions and engage safety mechanisms when they detect obstacles.
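One simple way to decide when to engage a safety mechanism is a time-to-collision (TTC) check: divide the gap to the obstacle ahead by the speed at which that gap is closing, and brake if the result drops below a threshold. This is a minimal sketch assuming both vehicles hold a constant speed in the same lane; the 2-second threshold and the function names are illustrative assumptions, and real automated emergency braking systems combine many more signals.

```python
import math

def time_to_collision(distance_m, ego_speed_mps, obstacle_speed_mps):
    """Seconds until contact if both keep their current speeds in the same lane.
    Returns math.inf when the gap is not closing."""
    closing_speed = ego_speed_mps - obstacle_speed_mps
    if closing_speed <= 0:
        return math.inf
    return distance_m / closing_speed

def should_brake(distance_m, ego_speed_mps, obstacle_speed_mps, ttc_threshold_s=2.0):
    """Trigger braking when TTC falls below the threshold.
    ttc_threshold_s is an illustrative value, not a production calibration."""
    return time_to_collision(distance_m, ego_speed_mps, obstacle_speed_mps) < ttc_threshold_s
```

For example, a 25 m gap with the car at 20 m/s and the obstacle at 5 m/s closes at 15 m/s, giving a TTC of about 1.7 s, so `should_brake(25, 20, 5)` returns True under the 2-second threshold.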

Extended Reality

VR and AR employ AI avatars for use cases like physical rehabilitation and gaming. To ensure these avatars move naturally in virtual environments, humans train them through a technique known as “simulated embodiment,” in which a person wears a VR or AR headset and performs motions for an avatar to mimic. The avatars process these motions as if they were their own, enabling them to learn from experience.
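Learning motions from human demonstrations is closely related to the imitation learning listed among the key components of embodied AI. Its simplest form, behavior cloning, treats each recorded demonstration as a labeled example and fits a policy that maps what the agent observes to the action the human took. The sketch below assumes made-up demonstrations (three pose values in, two motion values out) and a linear policy fitted with NumPy least squares. It illustrates the general idea only and is not the method behind any specific simulated-embodiment system; the function names are hypothetical.

```python
import numpy as np

def fit_linear_policy(states, actions):
    """Behavior cloning with a linear policy: choose W and b to minimize
    the squared error between states @ W + b and the demonstrated actions."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])  # column of ones learns the bias b
    params, *_ = np.linalg.lstsq(X, actions, rcond=None)
    return params[:-1], params[-1]  # W: (n_state, n_action), b: (n_action,)

def act(W, b, state):
    """Imitate the demonstrator: predict the action for a newly observed state."""
    return state @ W + b

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Made-up demonstrations: 200 observed poses (3 values each) and the motion the
    # human performed next (2 values each). This "true" mapping is invented for illustration.
    true_W = np.array([[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]])
    states = rng.normal(size=(200, 3))
    actions = states @ true_W + 0.05 + rng.normal(scale=0.01, size=(200, 2))
    W, b = fit_linear_policy(states, actions)
    print(act(W, b, np.array([1.0, 0.0, -1.0])))
```

A linear policy keeps the example short; practical imitation learning typically uses neural networks and far richer observations, but the supervised "copy the demonstrator" objective is the same.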

Education

Social robots use AI to identify facial expressions and vocal cues, relying on the sensors, microphones and cameras they are equipped with. They’re also designed to respond to touch, making physical interactions feel intuitive to users. These traits make them well suited to working with children to support their socio-emotional growth.

Benefits of Embodied AI

Embodied AI provides several advantages over disembodied AI, primarily thanks to its ability to learn from and adapt to its environment.

Enhanced Problem-Solving

Embodied AI doesn’t just rely on existing rules to figure out how to address different situations. Because it can physically interact with its environment, embodied AI can gather new information to inform its decision-making process. It can then respond to unforeseen obstacles and develop solutions based on the most up-to-date data.

Improved Learning

The physical aspect of embodied AI enables it to directly interact with objects in its environment and compile immediate feedback, so it can learn from its experiences and adjust its actions and decisions accordingly. This gives embodied AI much more room to grow than if it solely relied on its training data to learn how to complete tasks.

Greater Adaptability

Collecting real-time data from physical interactions allows embodied AI to adapt to varying situations and respond quickly to changing real-world contexts. Because of this flexibility, embodied AI often doesn’t need to be reprogrammed for each new task, saving developers time and effort.

More Intuitive Interactions

Due to its ability to learn from experiences and pivot on the fly, embodied AI can make human-AI interactions feel more intuitive. Some embodied AI systems also take on physical forms like social robots, which are designed to communicate and understand expressions, so humans can more naturally live and work alongside AI.

Challenges of Embodied AI

Although embodied AI represents a major step forward for artificial intelligence, it still comes with some downsides.

High Costs

Several factors can influence the overall cost of embodied AI, including the complexity of its underlying models, the hardware it uses and the resources required to integrate artificial intelligence into a physical system. The price tag can rise even further when considering the costs of maintaining an embodied AI system — and potentially scaling it.

Simulation Limits

Embodied AI systems are commonly trained on synthetic data generated from digital twin simulations and world foundation models that simulate real-life scenarios. However, simulations don’t perfectly mirror actual conditions, so embodied AI trained mostly via simulation may struggle to adapt to real-world situations.

Safety Issues

While precautions are taken to prepare embodied AI before it is deployed in the real world, there’s no guarantee it will work as planned. Consider California regulators’ 2023 suspension of Cruise’s driverless permits after one of the company’s autonomous vehicles pinned and dragged a pedestrian. Questions remain about the reliability of embodied AI and whether consumers and businesses can trust the technology completely.

Ethical Concerns

Embodied AI also raises a range of ethical questions, since job losses, a widening digital divide and increased social isolation are all possible impacts. Some forms of embodied AI, like humanoid robots, can even recognize and express emotional cues to a certain degree, igniting debates about whether AI should have this capability at all.

The Future of Embodied AI

The future of embodied AI hints at wider adoption of the technology, thanks to several developments in the field.

Advances in Simulation Environments

Open-source simulation environments like iGibson and MuJoCo have allowed researchers to freely experiment with simulation tools for robotics and AI research. DeepMind acquired MuJoCo in 2021 and open-sourced it the following year. More recently, Google DeepMind partnered with Nvidia and Disney Research to create an open-source physics engine that works with platforms like MuJoCo to produce powerful robotic simulations.

Meta AI has also worked on robotic simulation since it introduced the Habitat platform in 2019. The simulator is now in its third version, offering more accurate simulations for studying how humans and robots could collaborate in home settings. This progress is part of Meta’s broader goal of eventually releasing humanoid robots.

Combining Large Language Models With Embodied AI

As large language models (LLMs) and other foundation models continue to make strides, there’s increasing buzz around integrating these models into physical systems. The idea is to build general-purpose embodied agents — physical systems that possess the intelligence to complete a variety of tasks instead of a limited set of tasks. These advanced agents would seamlessly combine perception and language skills with physical motion and manipulation.

While integrating LLMs into physical bodies presents several challenges, robotics companies have been inching toward this reality. One recent example is Sanctuary AI equipping its Phoenix robots with tactile sensors to better train them in dexterous manipulation, one of the physical skills general-purpose embodied agents will need, suggesting that such agents may be within reach.

Broader Societal Roles

Improvements in AI and robotics have already put embodied AI to work in new ways. For example, PAL Robotics’ humanoid robot ARI served as a receptionist at IT company Ayesa’s Barcelona office, welcoming visitors, giving tours and showcasing Ayesa’s products. And in a study by Singaporean and Australian researchers, a social robot introduced into nursing facilities was found to be a viable way to help address the ongoing shortage of human caregivers.

Embodied AI could also play an important role in space exploration. Automated systems already help maintain satellites in orbit. Looking ahead, embodied AI could help space robots make decisions on their own, a crucial skill for missions to Mars, where commands from Earth can take anywhere from about 3 to more than 20 minutes to arrive because of the distance between the two planets.

Frequently Asked Questions

What is embodied artificial intelligence?

Embodied artificial intelligence refers to AI integrated into physical systems that can directly engage with their surroundings. Gathering data with devices like sensors and cameras, embodied AI learns from its environment to improve its decision-making over time.

What is the difference between embodied AI and AI?

AI is a discipline that involves any machine performing tasks that typically require human-level intelligence. Embodied AI is a specific type of AI that has a physical form, allowing it to interact with the world around it. By learning from direct experiences and feedback, it can refine its decisions and actions over time.

What is an embodied robot?

Embodied robots are robots equipped with AI, giving them the intelligence to learn by interacting with their surroundings. They can perceive their environments and adjust their actions and decisions based on experience, and as a result they can work more collaboratively with humans and other machines.

What is an example of embodied AI?

Robotic vacuum cleaners are a common example of embodied AI. These robots use AI to map their surroundings and learn from their environments, planning efficient paths for cleaning a space.

Is Siri an embodied agent?

No, Siri is not an embodied agent. It isn’t integrated into a physical body, nor does it take on a virtual representation of a body.

What is the difference between an AI agent and embodied AI?

AI agents are software programs that can complete complex, multi-step tasks, autonomously deciding how to go about accomplishing a goal. Meanwhile, embodied AI is artificial intelligence that has been integrated into a physical body and can learn from its surroundings. Unlike embodied AI, AI agents don’t have a physical form and don’t gather direct physical feedback from their environments.