Robots, while having the ability to make our lives easier in so many different ways — like vacuuming floors or working alongside us in factories — also have the power to really creep us out.

This is due to a phenomenon known as the uncanny valley, which refers to the way we react negatively to humanoid figures that look and behave almost, but not quite, like humans. While this feeling is primarily associated with robots, it can also occur when video games and CGI-animated movies depict characters that look human in ways we find unsettling.

What Is the Uncanny Valley?

The uncanny valley is a term that describes the “eerie sensation” people feel when they encounter a robot or computer-generated character with human-like characteristics. The term was coined in 1970 by Masahiro Mori, a robotics professor at the Tokyo Institute of Technology.

The uncanny valley describes the sense of discomfort or unease we experience when we encounter a robot with certain human-like characteristics. These robots typically come close to meeting our expectations of what a human should look and act like, only to fall short somehow and descend into the uncanny valley, evoking a negative emotional response.

It’s not just robots that conjure such strange feelings: digital avatars and animated characters may make us uneasy, too.

Roboticist Masahiro Mori, who coined the term, believed the phenomenon to be a survival instinct: “Why were we equipped with this eerie sensation? Is it essential for human beings?” he wrote in an essay, which was published in the Japanese journal Energy more than 50 years ago. “I have not yet considered these questions deeply, but I have no doubt it is an integral part of our instinct for self-preservation.”

What Causes the Uncanny Valley Phenomenon?

It’s not like a Roomba is going to freak you out, nor will every robot with a human-like face. But a certain movement or gesture like a nod of a robotic head, a blink of a mechanical eye or the way silicone dimples an artificial cheek can quickly elicit a feeling of discomfort. And it really shouldn’t be surprising that these robots, which are able to mimic humans so well, stir these types of feelings — their resemblance to humans in appearance and actions is often remarkable. It’s really human perception that’s at the heart of the uncanny valley, not the robots or the technology behind them.

Though an exact cause is difficult to pin down, researchers believe they have identified the neural mechanisms that elicit these negative reactions. Writing in the Journal of Neuroscience, they describe these mechanisms as “based on nonlinear value-coding in ventromedial prefrontal cortex, a key component of the brain’s reward system.”

What does that mean, exactly? Basically, a robot is less likely to creep us out if it’s able to do something that’s ultimately useful.

“This is the first study to show individual differences in the strength of the uncanny valley effect, meaning that some individuals react overly and others less sensitively to human-like artificial agents,” Astrid Rosenthal-von der Pütten, one of the study’s authors, said in a University of Cambridge news report. “This means there is no one robot design that fits — or scares — all users. In my view, smart robot behavior is of great importance, because users will abandon robots that do not prove to be smart and useful.”

Is the Uncanny Valley Real?

While the uncanny valley is a widely accepted concept, there exists debate over its legitimacy, with one of the biggest issues being the lack of a consistent reaction that researchers can point to as a clear example of the uncanny valley.

In response to these doubts, researchers have tested for uncanny valley reactions in children. One experiment found the uncanny valley to be more prevalent in children older than nine while another discovered “typically developing” children display the reaction more than children with autism spectrum disorder.

These findings suggest the uncanny valley is a valid concept, but reinforce the idea that the types of reactions associated with it vary based on a number of factors.

Uncanny Valley Examples

Here are some examples of robots and digital characters that can fall into the uncanny valley.

Uncanny Valley in Robots

1. Actroid

Actroid robots, which are manufactured by the Japanese robotics company Kokoro Dreams, a subsidiary of Sanrio, operate autonomously and heighten human-robot interaction through “motion parameterization”: expressive gestures like pointing and waving that make humans feel like they’re being paid attention to, though often without a suitable bridge between expectation and reality. Though Actroids also blink and make breathing motions that could easily weird one out, they have been used to help adults with autism spectrum disorder develop nonverbal communication skills. A new line of Actroid robots is in development, while current models are available for rental.

2. Alter

Alter 3, which is powered by an artificial neural network and was developed jointly by researchers at Osaka University and the University of Tokyo, conducted an orchestra at the New National Theater in Tokyo in 2020 and has also performed in Germany and the United Arab Emirates. To make Alter 3 better at interacting with humans, researchers equipped the robot, which has a human-like face and robotic body, with cameras in both eyes and a vocalization system in its mouth.

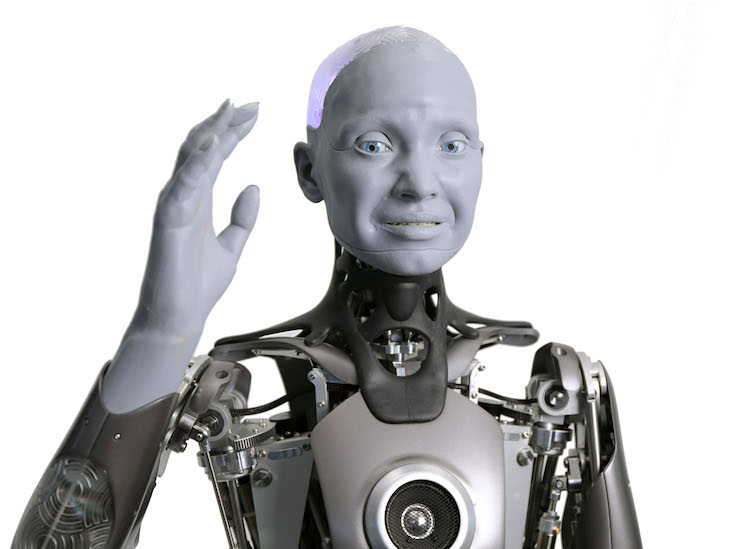

3. Ameca

Ameca, a humanoid robot from Engineered Arts, has a silicone face and is equipped with sensors that can track a person’s or object’s movement. It’s able to express astonishment and surprise, and can recognize faces and voices. Ameca also yawns and shrugs, and can discern emotions and even age. It can also shush you if you’re being too loud.

4. BigDog

It’s not just robots with human-like characteristics that evoke negative reactions; robots that resemble our four-legged friends also descend into the uncanny valley at times. BigDog, a canine-like, legged robot developed by Boston Dynamics, could cause some discomfort, especially when seen traipsing through the woods or trying to regain its balance after slipping on some ice. The fact that it had no head and seemed destined to become some sort of robotic pack animal also evoked a slight sense of pity. Now considered a “legacy robot” by Boston Dynamics, it was the first robot with legs to leave the company’s lab.

5. CB2

Designed to mimic the look and behavior of a gigantic two-year-old child, CB2, a child robot from Osaka University, was developed in 2006 and used by researchers to study robot learning and cognition, according to IEEE Spectrum. Gray and hairless, CB2 had cameras in its eyes and sensors on its skin, which resembled a futuristic space suit.

6. Diego-san

Developed by Hanson Robotics in 2013, Diego-san is another robotic child that likely left more than a few people who interacted with it feeling slightly unsettled. According to Hanson’s website, Diego-san, which has a full set of teeth seemingly baked into a mask-like half-face on top of a robotic body, was designed to learn much like a real child would. It has made a home for itself at the Machine Perception Lab of the University of California, San Diego, where researchers are using it to study artificial intelligence and human-robot interaction.

7. Erica

Erica, which has been described as a “pretentious method-acting humanoid robot,” is another source of human unease. Developed as a joint effort between Osaka University, Kyoto University and the Advanced Telecommunications Research Institute International, Erica was initially slated to work in broadcast news in Japan, but has also found a home in Hollywood, cast in a feature film that has yet to be released. A portrait of Erica, which is short for Erato Intelligent Conversational Android, won third place in London’s National Portrait Gallery competition.

8. Geminoid HI

Developed by Osaka University roboticist Hiroshi Ishiguro in 2006, Geminoid HI strongly resembles its creator and can even mimic Ishiguro’s voice and head movements. More recent Geminoid iterations that are just as unsettling also exist for research purposes. That research takes two approaches, one related to engineering and the other to cognitive features, with the aim to “realize an advanced robot close to humankind, and at the same time, the quest for the basis of human nature.”

9. Jules

Unveiled in 2006, Jules is another humanoid robot from Hanson Robotics. Dubbed a custom character robot that can be transformed into any human likeness, Jules is equipped with natural language processing, computer vision and facial recognition, all of which make the robot ideal for conversation. Today, Jules still resides at his original home, with researchers at the University of the West of England in Bristol.

10. Motormouth Robot KTR-2

Imagine a rubber mouth paired with a misshapen nose and you have the Motormouth Robot KTR-2. The sound that emanates from this robot, which was designed to imitate human speech, according to Gizmodo, is difficult to describe as anything other than buzzy. It uses an air pump for lungs and is equipped with its own set of metallic vocal cords and a tongue made of silicone.

11. Moya

DroidUp’s Moya is described as the world’s first “fully biomimetic embodied intelligent robot.” Designed to move with a high walking accuracy rate and respond to humans with realistic micro-expressions, Moya features a synthetic, bipedal body that maintains a human-like temperature between 32 and 36 degrees Celsius (90 and 97 degrees Fahrenheit) and utilizes cameras behind its eyes to track movement and interact. DroidUp intends for Moya to launch in late 2026 for approximately $173,000, targeting roles in healthcare, education and public service where a warm, lifelike presence can better connect with people.

12. Nadine

When it comes to the uncanny valley, few robots will transport you there more quickly than Nadine, a humanoid social robot developed by researchers from Nanyang Technological University in Singapore. Nadine, with its realistic skin, hair and facial features, has worked in customer service and led bingo games. Nadine is also able to recognize faces, speech, gestures and objects.

13. Saya

Saya, which was developed by researchers in Japan, was the first robot teacher. Initially developed as a receptionist, Saya could do little more than take attendance and ask students to be quiet when it was first introduced in a classroom. By then, it could express emotions like surprise, fear and anger and was controlled remotely by humans. “Robots that look human tend to be a big hit with young children and the elderly,” Hiroshi Kobayashi, Tokyo University of Science professor and Saya’s developer, told the Associated Press in 2009. “Children even start crying when they are scolded.”

14. Sophia

Sophia, an AI-powered humanoid robot from Hanson Robotics, is famous, having been featured on the cover of Cosmopolitan magazine and as a guest on The Tonight Show where she played rock-paper-scissors with host Jimmy Fallon. But what sets Sophia apart when it comes to the uncanny valley is her own self-awareness, seeing herself as the self-described “personification of our dreams for the future of AI.”

15. Telenoid R1

If you’re still feeling uneasy about Geminoid HI from Osaka University’s Hiroshi Ishiguro, this Telenoid R1 robot won’t do much to quell your discomfort. While this robot is much less lifelike — IEEE Spectrum described it as “an overgrown fetus” — it displays its few human-like characteristics, like a bald head and arm- and leg-less torso, in a way that really evokes the true sense of what it’s like to be stuck deep in the uncanny valley. Though its developers acknowledged how “eerie” it is at first, they believed humans would ultimately adapt to it.

Uncanny Valley in Media and Animation

16. Grand Moff Tarkin in Rogue One

The uncanny valley can be seen in movies, with one of the most controversial uses of CGI occurring in 2016’s Rogue One: A Star Wars Story. To bring the character Grand Moff Tarkin back into the Star Wars universe, filmmakers designed a digitally created version of the actor Peter Cushing, who passed away in 1994. For fans of the franchise, watching the CGI representation of a deceased actor could be “distracting” and lead to a jarring descent into the uncanny valley.

17. Motion-Captured Characters in The Polar Express

In The Polar Express, the uncanny valley effect is triggered by motion-capture technology that rendered characters with near-photorealistic skin textures, but lacked the subtle eye micro-movements found in living humans. This discrepancy resulted in a “dead eye” look, where the characters’ gazes appeared vacant and hollow despite their lifelike appearance. This visual mismatch caused many viewers to experience an instinctive sense of unease or revulsion, as their brains struggled to reconcile the human-like figures with their mechanical, non-human expressions.

18. MotionScan Technology in L.A. Noire

L.A. Noire is a detective video game that relies on human players being able to determine if characters are lying or telling the truth. To enhance the realism of individual interrogations, the game’s creators employed a technology called MotionScan, which uses a system of cameras to capture the movements and expressions of human actors. While this data contributed to more lifelike CGI characters, players noticed that characters’ expressions didn’t always match their voices or the context, leading to uncomfortable experiences at times.

19. The Na’vi in Avatar

James Cameron’s Avatar made a splash in 2009 because it employed CGI animation to depict the fictional Na’vi people in the world of Pandora. However, not everyone was a fan of blue lifeforms displaying human-like features. Besides the appearances of the Na’vi, their movements have been described as “just a little too fluid.” Together, these factors made the Na’vi appear less than real, potentially creating a bizarre experience for some viewers.

Frequently Asked Questions

What is the uncanny valley?

The uncanny valley is the sense of unease or discomfort triggered by observing robots, humanoid figures or animated CGI characters that appear almost (but not quite fully) human.

What is an example of the uncanny valley?

Examples of the uncanny valley include reactions to robots like Sophia and Ameca, which can feel unsettling when their synthetic skin and movement delays remind the brain they aren’t truly human. The “dead eyes” of characters in media like The Polar Express also illustrate the uncanny valley, where near-human CGI triggers unease because it lacks the subtle eye movements of living humans.

Why does the uncanny valley exist?

The uncanny valley phenomenon is thought to be an evolutionary survival instinct that triggers a “disgust” response to protect humans from potential threats, such as corpses or individuals with contagious diseases. Additionally, it stems from a cognitive conflict where human brains become confused and unsettled by an object that appears human, but behaves in a slightly “off” or non-human way.