Steve Jobs once claimed Apple didn’t do any market research. But that’s not true — at least not when David Fradin was working alongside him in the early 1980s.

“It’s kind of funny to me, because when I was at Apple, there were 50 people working in the corporate market-research department doing this: the observations and the interviews and the surveys,” Fradin said. “He was trying to throw a curve ball, in my opinion, to the competitors.”

The two had a complicated relationship. Fradin is now the president of Spice Catalyst and a professor of product management at WileyNXT. He has written eight books on product development and marketing and, over the past decade, led the training of roughly a third of Cisco’s product management team. Back then, he was a group product manager at Apple, charged with bringing the first hard disk drive on a PC to market and, later, marketing the release of the now-infamous Apple III.

Tips for the New Product Development Process

- Spend time on discovery.

- Only ship high-quality products.

- Collect usage data.

- Make sure your product is intuitive and won’t confuse users.

The latter project, in today’s parlance, was failing fast. The executive committee lost faith in its viability as a flagship venture, but they agreed to let Fradin manage it as an independent business unit. Meanwhile, Jobs was in a commensurate management role, but the project under his watch was more auspicious: developing the Macintosh.

We all know what happened.


The point here is not who won and who lost. Rather, it is that early-stage market research is crucial to product development, and that Jobs, if Fradin’s contention is correct, was slyly throwing competitors off the scent.

“So entrepreneurs picked up on that. ‘We don’t need to do market research, we’ll just go build this thing,’” he explained. “To the point in which a number of angel investors and venture capitalists here in Silicon Valley tell you, ‘Ready, fire, aim.’”

But that rush to ship new features and releases may be short-sighted.

“You can’t get to product features unless you do the preliminary work. Unfortunately, most companies don’t do that preliminary work. And that’s why I’ve estimated about 35 percent of all new products and services fail in the marketplace. Which, by the way, is about half a trillion to a trillion dollars lost worldwide,” Fradin said.

To avoid that outcome, what a product manager needs to do, he told me, is spend several months on the front end to clarify personas and determine the problems customers want to solve, or, as Harvard Business School professor Clayton Christensen described them, “jobs to be done.”

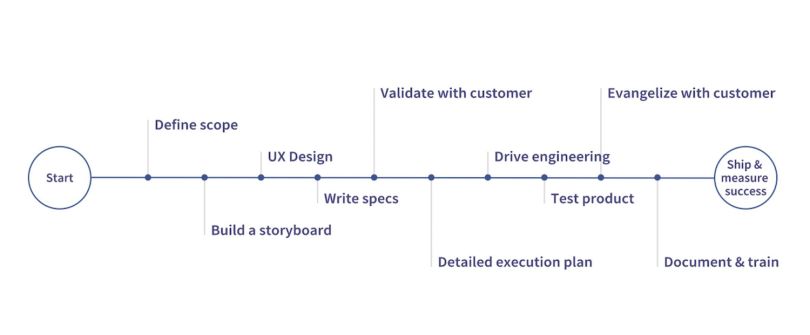

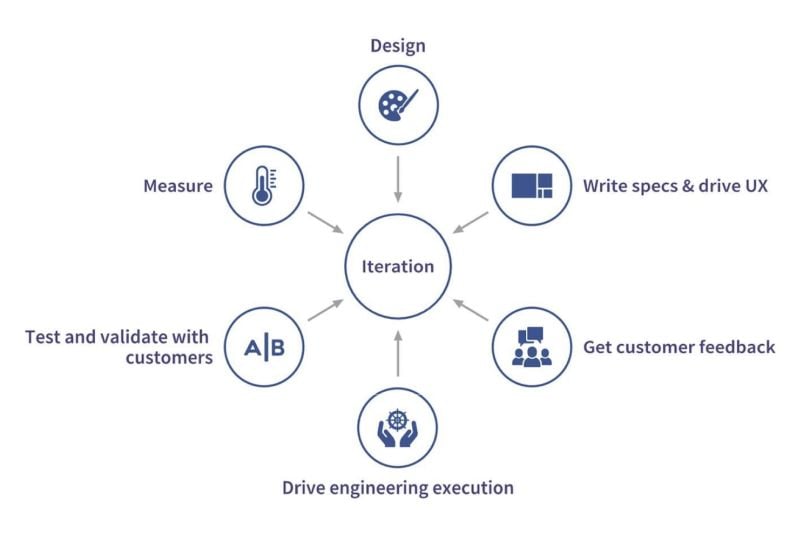

In a Spice Catalyst course for product managers called “Product Success Management Training,” Fradin separates the development process into three core phases: product planning, product development and product marketing. These proceed more or less chronologically, but there is no definitive end. They continue to cycle as the market evolves and users reveal new insights.

“It doesn’t just start at one point and end at another,” said Palak Kadakia, VP of product management at UiPath. “There’s constant, cyclical work that happens.”

Of course, product development approaches are as varied as the companies that employ them. Here are three methods reflecting different markets, business strategies and users.

User-Focused Development

UiPath is an automation platform that uses machine learning and bots to help companies expedite administrative tasks. Kadakia said the December 2020 release of UiPath Apps is a good window into the firm’s approach to product development. In brief, the service supports customer-service agents at call centers by surfacing data from multiple interfaces on a single screen.

Where do you begin?

Kadakia: For UiPath Apps, the goal was to make robotic process automation (RPA) more effective in contact centers. We began by identifying customer-service representatives as the personas we wanted to target. When you’re setting a vision, developing these personas is really important because you want to know who you’re solving problems for. What is the need? What is the opportunity? And are there already companies or other people doing this?

Understanding business goals is also important. If our mission at UiPath was to grow unattended automation, and we’re working on back-of-office automation to help contact centers become more effective, that’s not going to fly.

After you define the product roadmap, how do you execute it effectively?

Kadakia: You start to build design-UX mock-ups of what you want the idea to look like. In this case, “I want people to be able to put the data from 12 places in one single screen.”

Now, how do they build that single-page application? Well, we’re not a consulting service, so we need to develop an automated designer that allows people to drag and drop components to create it themselves. And how will we differentiate? By making that designer citizen-developer friendly. So anyone — a power user of Excel, for instance — can build a UI that can replace multiple apps they would have otherwise been using.


Next, we define all the capabilities this designer would need to have. That includes high-level requirements, such as whether this is going to live in the cloud, or something we will ship on premises. To determine the client-server architecture, the product team starts to work with engineering leadership to define the rough blueprint of what the end result of the product will look like.

From there, the challenge is selling it to your company’s leadership — small and large. That role never changes. You have to get buy-in from everyone because you’re going to be investing resources in this product. Once you have support for a go-to-market motion, you start building a team that can actually build the product.

How do you decide whether to build in-house or acquire smaller companies or the rights to an API?

Kadakia: There is a ton of value in acquisitions.

When we started planning UiPath Apps, we knew our customers needed a way to interface with up to 12 different applications. And RPA could help with that. But RPA didn’t have a presentation layer. We weighed the costs of building the user interface ourselves while, in parallel, looking at companies that were already doing that to see if we should acquire them. Any good product team, especially if you have the funding, should consider both options. We were fortunate to find a small company that gave us, in effect, a year head start on our product. We gained two things: a little bit of code to get us started, and an amazing couple of developers we wouldn’t have otherwise found.

Remember, you can acquire people, you can acquire technology, like an API, and you can acquire an entire business that already has a sales motion. You should evaluate all these, and pick the one that makes the most sense for your business goals and where you are in the product life cycle.

How did you define the scope of the release and schedule key tasks and milestones?

Kadakia: UiPath Apps took 13 months to develop and ship. You never want that timeline to extend to two to three years. Imagine wasting 30 people’s time over two years and then realizing you made a mistake.

A phased release can help accelerate the schedule. We begin with a private preview, or alpha release, in which customers can give feedback. Later, we have a beta release that’s available to everyone, but not yet in production. During this phase, we run tests, add features and eliminate bugs.

Once we’re confident it meets quality standards, we ship our GA [general availability] release and continue to iterate at a two-week cadence. Typically, we only have 30 percent of features developed when we ship the GA. So we start moving through the backlog. Along the way, the market may change, customer demands may change, our competition may change. We respond, in kind, with new features and ideas.

How do you evaluate results?

Kadakia: We instrument our product to gather telemetry [in-app data sent to external monitoring systems]. These instruments track events that occur both in the back-end code and front-end UI. They show you when users are loading pages, what API calls are being made, how frequently API calls are being made and so on. Then, you funnel that information into a data warehouse and analyze it. So, for example, we track active cloud service usage to see how many people are logging in and how frequently they are logging in. Then we track the depth of that usage: for instance, which types of controls visitors use in the app. That helps us define the product’s future direction.

These are tools you embed in your code, and they work at different levels. For example, Microsoft’s Azure Monitor collects insights from the code you’ve written. Snowflake is a data warehouse that lets you store and manage the data that’s coming in from telemetry. Then there are tools, like Tableau or Microsoft Power BI, that let you analyze that data.
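To make the idea concrete, here is a minimal sketch of the kind of analysis Kadakia describes: counting how many people log in, how often, and which controls they use. It is not UiPath's pipeline. The event tuples, the event names (`login`, `control_used`) and the `summarize` function are hypothetical, and a real system would read these events from a data warehouse rather than an in-memory list.

```python
from collections import Counter
from datetime import date

# Hypothetical telemetry events: (user_id, event_name, detail, day).
# In practice these rows would come from a warehouse query.
EVENTS = [
    ("u1", "login", None, date(2021, 3, 1)),
    ("u1", "control_used", "button", date(2021, 3, 1)),
    ("u2", "login", None, date(2021, 3, 1)),
    ("u1", "login", None, date(2021, 3, 2)),
    ("u2", "control_used", "table", date(2021, 3, 2)),
    ("u1", "control_used", "button", date(2021, 3, 2)),
]


def summarize(events):
    """Aggregate raw events into breadth (who logs in) and depth (what they use)."""
    logins_per_user = Counter()
    control_usage = Counter()
    for user_id, name, detail, _day in events:
        if name == "login":
            logins_per_user[user_id] += 1
        elif name == "control_used":
            control_usage[detail] += 1
    return {
        "active_users": len(logins_per_user),        # how many people log in
        "logins_per_user": dict(logins_per_user),    # how frequently they log in
        "control_usage": dict(control_usage),        # depth: which controls get used
    }


summary = summarize(EVENTS)
print(summary)
```

The same three numbers (active users, login frequency, control usage) could just as easily be computed in a dashboard tool; the point is only that each one maps to a simple aggregation over logged events.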


We measure the success of a new product in several ways. To assess engagement, we measure the number of users, the frequency and depth of usage from the same users over time and the percentage of customers who purchased the product and are using it.

To determine a product’s business value, we compare earned revenue to the cost of goods sold (COGS). We also tally the number of licenses sold and the number of organizations that have deployed the product, which is especially important for on-premises use. For some products like Robots, we track the number of automations run by Robots, the number of hours of automation run and so on.
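Two of the measures mentioned here reduce to simple ratios. The sketch below assumes one plausible reading of each: adoption as the share of purchasing customers who actively use the product, and business value as gross margin, which is one common way to compare revenue to COGS. The function names and sample figures are illustrative, not UiPath's.

```python
def adoption_rate(customers_purchased, customers_active):
    """Share of purchasing customers who are actually using the product."""
    if customers_purchased == 0:
        return 0.0
    return customers_active / customers_purchased


def gross_margin(revenue, cogs):
    """Fraction of revenue left after cost of goods sold (an assumed comparison)."""
    if revenue == 0:
        return 0.0
    return (revenue - cogs) / revenue


# Hypothetical figures for illustration only.
adoption = adoption_rate(customers_purchased=200, customers_active=150)
margin = gross_margin(revenue=1000.0, cogs=250.0)
print(f"adoption: {adoption:.0%}, gross margin: {margin:.0%}")
```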

What are your best product development tips?

Kadakia: The most important thing is that anything you ship must be high quality. Even if you only ship 5 percent of your product goal, if it’s high quality, customers will have confidence in your solution.

The second thing is to make sure you understand your user well. For example, a citizen developer has different needs than a pro developer, as we found with UiPath Apps.

The other important thing is being intentional about collecting usage data, because that helps you define your future roadmap. Having worked on so many different products over my career, the one thing that has stuck with me is this: Data speaks volumes. It will tell you much more than users will verbalize themselves.

Product-Led Development

With a fully distributed team, Frameable designs video-collaboration and productivity tools for teams working remotely. For the past year, it’s been iterating on the beta release of Subtask, a task-management tool being positioned to shake up a $5 billion industry. Vice President of Engineering Chris Becker credits market research and working code models as keys to development. Lacking the thousands of users needed to warrant A/B testing, the startup’s product team turned to forums like Reddit to solicit early feedback, leveraging social channels and paid advertising to grow the audience.

What did you discover from market research?

Becker: One thing we’ve noticed very clearly is that most task-management tools aren’t built for the people that are doing the work. They’re built for their managers. So it’s all about tracking and reporting, clicking estimates, spitting out reports. But we’ve found better ways to collaborate.

Folks use task-management tools because their companies force them to — that’s what they have to use. And so they end up looking for new tools when they switch jobs. This becomes an important time to engage them.

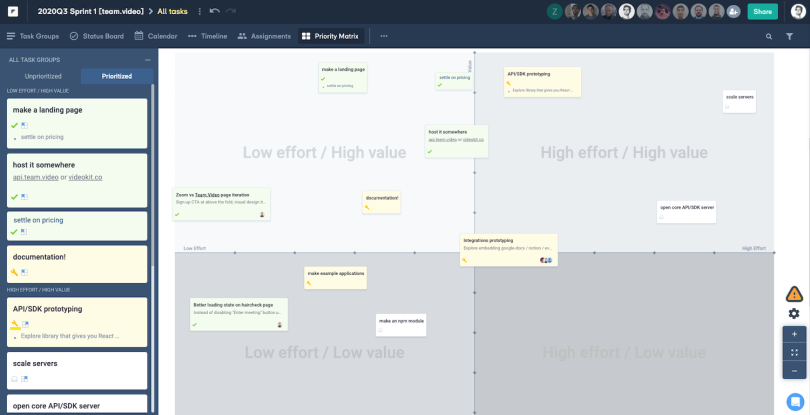

What tools helped you decide to prioritize Subtask for development?

Becker: One feature we used is called an Eisenhower Matrix. It’s a two-by-two, effort-to-value priority matrix, where one axis goes from low to high value, and the other axis goes from low effort to high effort. So in one quadrant you have your quick wins, and in another you have your high-stakes, high-reward releases. The other two quadrants represent less desirable initiatives. By laying out all your options visually, you get a clear idea of your focus.
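The two-by-two Becker describes can be sketched in a few lines. This is an illustration under stated assumptions, not Frameable's tooling: the 1-to-10 scales, the threshold of 5, the `quadrant` function and the sample initiatives are all hypothetical.

```python
def quadrant(value, effort, threshold=5):
    """Place an initiative in a value-versus-effort two-by-two.

    Scores are assumed to run from 1 to 10; anything at or above
    `threshold` counts as "high" on that axis.
    """
    high_value = value >= threshold
    high_effort = effort >= threshold
    if high_value and not high_effort:
        return "quick win"
    if high_value and high_effort:
        return "high stakes, high reward"
    if not high_effort:
        return "less desirable (low value, low effort)"
    return "less desirable (low value, high effort)"


# Hypothetical initiatives: name -> (value, effort).
initiatives = {
    "keyboard shortcuts": (7, 2),
    "multi-year timeline view": (9, 8),
    "custom emoji": (2, 1),
    "legacy importer": (3, 9),
}

placement = {name: quadrant(v, e) for name, (v, e) in initiatives.items()}
for name, label in placement.items():
    print(f"{name}: {label}")
```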

How did you test the product?

Becker: The best tests give users working software they can experiment with at their leisure, in their own environment, without you watching over their shoulders. That’s when we learn if users don’t use it at all, if they try it once or if they actually come back a week later. And if there are bugs, they’re going to tell you. When you get that stream of feedback, you’re on a good track.

How did you measure performance?

Becker: Like most sites, we track clicks to see what pages users load. We want to know how many people are going through the door, how many people are viewing a feature and how many are engaging with it. Then, we want to know if they’re coming back a week later. So there’s a series of gateways you want to measure.
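The "series of gateways" can be read as a funnel: each stage counts only the users who passed every stage before it. The sketch below assumes four hypothetical stage names and a small made-up set of users. It is one way to compute the numbers Becker mentions, not a description of Frameable's analytics.

```python
# Hypothetical gateway names, in the order a user would pass through them.
STAGES = ["visited", "viewed_feature", "engaged", "returned_after_week"]


def funnel(users):
    """Count users at each gateway. `users` maps user_id -> set of stages reached."""
    counts = []
    for i, stage in enumerate(STAGES):
        required = STAGES[: i + 1]
        # A user counts at this stage only if they passed every earlier gateway too.
        n = sum(1 for reached in users.values() if all(s in reached for s in required))
        counts.append((stage, n))
    return counts


def conversion(counts):
    """Step-to-step conversion rate for each stage after the first."""
    rates = {}
    for (_prev_stage, prev_n), (stage, n) in zip(counts, counts[1:]):
        rates[stage] = n / prev_n if prev_n else 0.0
    return rates


# Made-up sample data for illustration.
users = {
    "a": {"visited", "viewed_feature", "engaged", "returned_after_week"},
    "b": {"visited", "viewed_feature", "engaged"},
    "c": {"visited", "viewed_feature"},
    "d": {"visited"},
}

counts = funnel(users)
rates = conversion(counts)
print(counts)
print(rates)
```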


Engagement is always relative to how prominent a feature is in your application. If you have a big red button dead center on your landing page, that’s the thing people are going to click 90 percent of the time. If you have a feature that’s buried under three menus, then you might have 1 percent of your audience using that. So when we’re trying to figure out what features to build, we’re coming up with working hypotheses. Is this actually something a user must have, or not? And a lot of that comes down to user feedback.

What’s your best product development tip?

Becker: Often when people talk about a piece of software being intuitive, it really just means that it works the way other tools do. For example, with timelines, people are used to seeing a series of rows, arranged in a Gantt chart. It’s important to adhere to these common patterns, or users can get confused. During the beta release, we refined the timeline feature to mirror these patterns. We also made it visible at a larger scale for teams plotting multi-year projects.

Growth-Focused Product Development

Alon Bartur leads roughly half of Productboard’s product teams, which are distributed across the U.S. and Prague. He discussed a new feature on the product-management platform that tracks dependencies among product orders, a top-of-mind concern for the enterprise firms Productboard has its sights on as prospective clients.

Where did you begin?

Bartur: A key business objective for Productboard in 2021 is to move upmarket, selling the product-management software to larger, enterprise organizations. Many are highlighting the importance of dependency tracking because they have, maybe, 10 or 15 product teams working on different aspects of the same product. So they’re dealing with a level of complexity we don’t typically see from existing customers. That gave us the idea to focus on a dependency tracker.

Next came the discovery phase. We did this by identifying active customers on Productboard and scheduling phone calls with them.

We wanted to understand what customers mean when they talk about “dependencies.” For us, it means as teams are developing or making changes to a roadmap and changing the timeframe because something is slipping, they’re aware of who to talk to. Identifying and surfacing a shared understanding of that principle at the forefront was the outcome of discovery.

How do you prioritize features for development?

Bartur: One of the premises of Productboard is the product itself helps synthesize input and feedback from a bunch of different channels. So often, that will be existing customers, but it could also be information coming in from the sales team that’s engaging with prospects. It could be support tickets. It could be competitive research. These are the inputs you normally want to look at.

Data-driven prioritization tools help avoid some of what I’ve seen earlier in my career where you encounter recency bias. Your VP of sales will come in and say, “We just lost this really big deal, and they really need this feature.” They’re really starting to apply pressure and, even for the most disciplined product people, that’s hard to completely remove yourself from.


When you’re prioritizing, it’s important to segment out factors like who your sales prospects are, what size company they’re in, what domain they play in and the company’s maturity. You want to pay special attention to issues flagged by long-time customers and, interestingly, sales prospects you lose. Then you can extract what’s important for particular sets of people, rather than the aggregate.

How do you test features?

Bartur: We have this concept called the user impact score. It’s a summary of customer feedback, and it’s a weighted score that evaluates problem areas and needs for segmented groups. You can pull in Salesforce information and internal company data to help determine how features are performing for particular subsets. So, for example, if our prospects are enterprise companies with more than 1,000 people who have digital-first products and really care about X, Y and Z, we can determine how well a feature is performing for them.
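Productboard's actual formula is not given here, so the following is only a sketch of what a segment-weighted feedback score could look like. The segment weights, the 1-to-3 importance scale, the `impact_scores` function and the sample feedback are all assumptions made for illustration.

```python
from collections import defaultdict

# Assumed weights: feedback from target segments counts for more.
SEGMENT_WEIGHTS = {"enterprise": 3.0, "mid_market": 2.0, "small_business": 1.0}


def impact_scores(feedback, weights):
    """Sum importance ratings per feature, weighted by the customer's segment.

    `feedback` is a list of (feature, segment, importance) tuples,
    with importance on an assumed 1-3 scale.
    """
    scores = defaultdict(float)
    for feature, segment, importance in feedback:
        scores[feature] += weights.get(segment, 0.0) * importance
    return dict(scores)


# Made-up feedback items for illustration.
feedback = [
    ("dependency tracking", "enterprise", 3),
    ("dependency tracking", "mid_market", 2),
    ("dark mode", "small_business", 3),
    ("dark mode", "small_business", 2),
]

scores = impact_scores(feedback, SEGMENT_WEIGHTS)
print(scores)
```

Changing the weights to reflect a different target segment reorders the features without touching the underlying feedback, which is the property Bartur is describing: the same inputs, scored for a particular set of people rather than the aggregate.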

The benefit of that is it can ground the collaboration between the product team and the go-to-market functions, like sales, with a rigorous backdrop. If there’s a misalignment, it forces the conversation to happen at the right level.

Next, the team will go back to customers with Figma prototypes, or other lightweight ways of validating whether the solution captures edge cases and solves the problem it set out to. A good rule of thumb is to test the feature with five to 10 users. If you need more than that, you might be attacking too broad a group of use cases, or trying to solve too many things for too many people.

What’s your best product development tip?

Bartur: Spending time on discovery can show you that personas engaging with your product are different than what you imagined. By using frameworks like the RICE (reach, impact, confidence, effort) scoring model, you can prioritize releases based on these personas.
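RICE is commonly computed as reach times impact times confidence, divided by effort. The sketch below applies that formula to three hypothetical candidates. The names, numbers and units (users per quarter for reach, person-months for effort) are illustrative assumptions, not Productboard data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    reach: float       # e.g. users affected per quarter (assumed unit)
    impact: float      # relative impact per user
    confidence: float  # 0.0 to 1.0
    effort: float      # e.g. person-months (assumed unit), must be > 0

    def rice(self):
        """RICE score: (reach * impact * confidence) / effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical candidates for illustration.
candidates = [
    Candidate("dependency documentation", reach=500, impact=2, confidence=0.8, effort=4),
    Candidate("dependency alerts", reach=2000, impact=1, confidence=0.5, effort=2),
    Candidate("roadmap visualization", reach=100, impact=3, confidence=1.0, effort=1),
]

ranked = sorted(candidates, key=lambda c: c.rice(), reverse=True)
for c in ranked:
    print(f"{c.name}: {c.rice():.0f}")
```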

For us, the visualization of dependency paths on the product roadmap was a bigger lift than straightforward documentation, so the first version of our release doesn’t include the kind of visualizations we imagine for the future. We’re focusing on getting a viable solution out to the market. Later, we’ll try to get the solution to a lovable state, so it’s not just surfacing the information to get the job done, but actually doing it in a way that is delightful.