A handsome young man of means falls for a beautiful young woman of humble birth. Their families’ deep-seated class hostilities — plus maybe a staged death or bout of amnesia or two — threaten, but of course could never quell, their love. In the end, the two stage a spectacular wedding in a comeuppance to all those who stood athwart true love.

Formulaic? Maybe for the uninitiated. But for the millions who tune in to telenovelas — or Latin American soap operas — the archetypal nature of the melodrama is precisely the point.

It’s also part of why Lorena Mesa chose telenovelas as her source material for diving into natural language generation. Mesa, a data engineer at GitHub and chair of the Python Software Foundation, is in the midst of generating her own telenovela script. Or, more precisely, she built a neural network to generate some portion of a telenovela script, using existing telenovela scripts as the training corpus.

“I’ve always been really intrigued with the question of whether we can automate a creative process,” Mesa told Built In.

Telenovelas’ unabashed embrace of tried-and-true plots, character tropes and tidy resolutions lends itself well to text generation. That fixed nature also makes it much easier to recognize successful outputs. “It’s a lot easier to quantify than something like an indie art-house film,” she said.

“Also, it’s really fun to watch telenovelas. Let’s be real.”

Mesa’s project also doubles as a handy tutorial for anyone looking to get started with deep-learning text generation and NLG. She occasionally presents a talk about the project, titled “¡Escuincla babosa!: A Python Deep Learning Telenovela,” that demystifies the process. (“Escuincla babosa” translates roughly to “spoiled brat” and is a reference to an iconic scene from Mexican telenovela María la del Barrio.) In it, she walks through the steps of choosing the best-fit neural network architecture and deep learning framework, with examples of how to fit and train a model.

“When people think of machine learning, they imagine the machine is this giant, complex Skynet kind of machine, but in reality, it’s one tool in a larger toolset that learns relationships that exist intrinsically in the data you input.”

Like telenovelas, it’s all about relationships.

Text Generation Likes Predictability. So Do Telenovelas.

In her presentation, Mesa outlines the common arc of telenovelas. They are defined by:

- A fixed melodramatic plot (e.g. love lost, mothers and daughters fighting, long-lost relatives, love found).

- A finite beginning and end.

- A conclusion that ties up loose ends, generally with a happy element (e.g. big wedding).

Let’s take the first point, specifically the fixed nature. Telenovelas trade in melodrama and all its requisite heightened emotion and over-the-top villains, but they’re grounded by routine and (some) reality — especially compared to their American soap-opera counterparts. In fact, as Mesa notes in her talk, Jorge González argued in his paper “Understanding Telenovelas as a Cultural Front: A Complex Analysis of a Complex Reality” that creating believable worlds is central to the “relationship of fidelity” that telenovela audiences establish with the material.

That same predictability likely helps simplify the training of a text-generating neural network. The relationships and patterns are clearer, and thus (relatively) easier to learn: when the past is more predictable, it follows that the future will be as well. Contrast that with a soap opera from daytime American TV, where a character might, say, transform into a jaguar or zombie or try to freeze the planet.

“There’s discourse around what constitutes a telenovela,” Mesa said. It’s less subjective than other options, which makes it easier to measure performance, she added.

Having a finite beginning and end is also helpful. Generally speaking, the more data, the better. But for an entry-level project, too much can be overwhelming. While U.S. soap operas sometimes shuffle, zombie-like, for decades — General Hospital aims to soon begin production of its 58th(!) season — telenovelas are tidier affairs. They’re limited-run serials that tend to wrap up in under 200 episodes, and each episode is short.

“You’re working with things that are maybe up to 25 minutes in length — maybe sometimes an hour if it’s the more over-the-top examples,” Mesa said.

Choosing a Deep Learning Architecture

Predictability also drives the choice of whether to employ supervised learning, unsupervised learning or a hybrid of the two. Mesa used supervised learning, in which “you have an idea of ground truth.”

In supervised learning, it’s clear how the output will be labeled. “You know what the outcome of the machine learning is supposed to be,” she said.

Not so with unsupervised learning. Mesa invoked the classic examples of news clustering and genome sequencing — we don’t know the day’s major news stories beforehand, and there’s still much we don’t understand about human DNA.

But that’s the task itself. What about the class of neural network? Mesa considers a few different architectures:

- Feedforward: Information moves in only one direction, from input layer to output layer.

- Radial basis function: Includes a distance criterion.

- Recurrent neural network (RNN): Propagates data forward and also feeds it backward, from later processing stages to earlier ones; its connections form a directed graph that contains cycles.

There are several factors to consider, such as movement of information and how the neurons are connected. RNNs, Mesa points out, suit text generation “because of the model’s ability to process data in sequence” and because they allow data to move both forward and backward, so that each output value can be fed back in as the most recent input. Of course, it’s no secret that RNNs are a good match for text generation, as anyone who’s toyed with auto-Shakespeare knows, but the high-level decision process Mesa outlines is edifying for newcomers seeking to understand the why, not just the what.
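To make the difference between the architectures concrete, here is a minimal sketch of a single recurrent unit in plain Python. It is not Mesa’s model: the weights `W_IN` and `W_REC` are arbitrary illustrative values rather than learned ones, and the function names are hypothetical. The only point is that the hidden state computed at one step is fed back in at the next, so the order of the inputs changes the result, whereas a feedforward unit sees each input in isolation.

```python
import math

# Arbitrary illustrative weights (assumptions, not learned values).
W_IN = 0.8    # weight applied to the current input
W_REC = 0.5   # weight applied to the previous hidden state


def feedforward_unit(x):
    """A feedforward unit: the output depends only on the current input."""
    return math.tanh(W_IN * x)


def recurrent_unit(x, h_prev):
    """A recurrent unit: the output also depends on the previous hidden state."""
    return math.tanh(W_IN * x + W_REC * h_prev)


def run_recurrent(sequence):
    """Process a sequence in order, feeding each hidden state back in."""
    h = 0.0  # initial hidden state before any input is seen
    for x in sequence:
        h = recurrent_unit(x, h)
    return h
```

Calling `run_recurrent([1.0, 0.0])` and `run_recurrent([0.0, 1.0])` gives different values even though the two sequences contain the same numbers, which is the kind of order sensitivity that sequential text requires.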

Picking a Framework

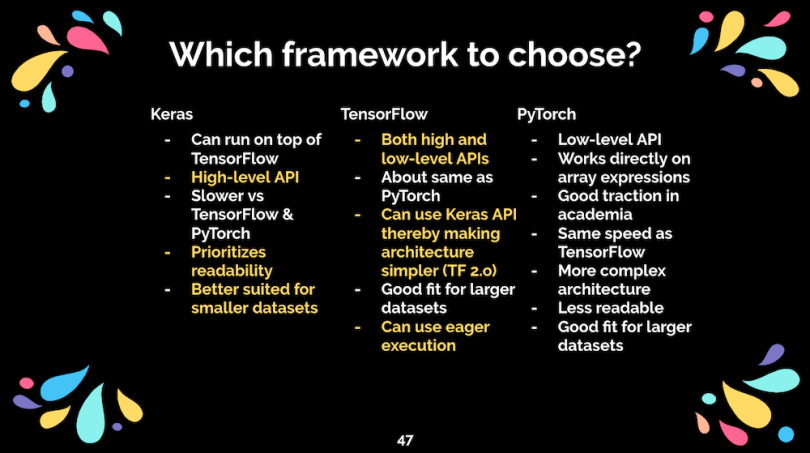

Less obvious is what Python framework to choose: Keras, TensorFlow or PyTorch? Three main points of consideration, Mesa notes in her presentation, are the level of technical expertise required to get started, ease of use and the nature of the project’s requirements — how fast does it need to work, and how large or small is the data set?

Mesa was a deep-learning beginner, looking for an option with a robust developer community and plenty of available resources. She also needed intuitive debugging, built-in bootstrapping tools and something easy to set up. As for the project itself, the data set, as mentioned, is small — she started with Queen of the South, Ugly Betty and Jane the Virgin, each five seasons or fewer, totaling less than 100 megabytes of text data. (Note: Mesa uses text, not video.)

As Mesa’s slide comparing the frameworks shows, both Keras and TensorFlow have pertinent selling points.

But TensorFlow, to an extent, has Keras baked in. Add its mix of high- and low-level APIs and the option of eager execution, which runs operations in the order you write them and so makes it easier to debug and iterate, and TensorFlow seems the better option here.

In her presentation, Mesa also drills down into how to then build the RNN: how to turn script text into input sequences, generate one-hot encodings (either for predicting characters or full words), and choose the number of training epochs to fit the model. It’s valuable information for when you’re ready to get your hands dirty.
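For a rough sense of what those first two steps involve, here is a small character-level sketch in plain Python. It is an illustration under stated assumptions, not the code from Mesa’s talk: the helper names, the window size of 4 and the sample string are all hypothetical, and a real project would more likely lean on a framework’s utilities. Each input is a fixed-size window of characters, and the label is the single character that follows it.

```python
def build_vocab(text):
    """Map each distinct character to an integer index."""
    chars = sorted(set(text))
    return {ch: i for i, ch in enumerate(chars)}


def make_sequences(text, window):
    """Slide a fixed-size window over the text.

    Returns (inputs, targets): each input is `window` characters long and
    each target is the single character that follows that input.
    """
    inputs, targets = [], []
    for start in range(len(text) - window):
        inputs.append(text[start:start + window])
        targets.append(text[start + window])
    return inputs, targets


def one_hot(index, size):
    """Return a list of zeros with a single 1 at position `index`."""
    vec = [0] * size
    vec[index] = 1
    return vec


def encode(sequence, vocab):
    """One-hot encode every character in a sequence."""
    return [one_hot(vocab[ch], len(vocab)) for ch in sequence]


# Hypothetical stand-in for a line of script text.
sample = "no. no. my god, no!"
vocab = build_vocab(sample)
inputs, targets = make_sequences(sample, window=4)

# x holds the one-hot encoded windows; y holds the next-character labels.
x = [encode(seq, vocab) for seq in inputs]
y = [one_hot(vocab[ch], len(vocab)) for ch in targets]
```

Predicting full words instead of characters would follow the same pattern, with the vocabulary built from tokens rather than single characters. The vocabulary then grows much larger, which is part of the trade-off between the two approaches.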

But in keeping with the telenovela spirit, let’s not unnecessarily delay the dramatic conclusion. Here are a few lines of dialogue generated by Mesa’s model.

Trained for 30 epochs (or complete passes through the training data) on text from 100 episodes of Jane the Virgin, the model produced a character who invoked, on brand, a seemingly immaculate conception: “is it a milagro [miracle]? was i drinking? no. no. my god, no! i wasn’t. what made you ask that i am still me.”

Thirty epochs through Ugly Betty generated: “the graphics department is very unhappy. do not pull or bite their hair ahem let’s show softer side.” As Mesa notes, Betty works in the fashion industry, so the reference to a graphics department isn’t far off the mark.

Using data from Queen of the South, which is about the unlikely rise of a narcotrafficking queen, the AI fittingly conjures the threat of violence — if through some less-than-perfect syntax: “do not threaten me. do you understand me? do you understand me? whoa, whoa, all right? hey, look, i.”

Mesa also combined text from all three series, which generated this pearl: “i’m proof that surprise happen. even if you do not believe me. why would you? i am a woman after all.”

That final line — “i am a woman after all” — is particularly eye-catching, Mesa notes in her presentation, given that telenovelas are driven by strong women in lead roles and extreme situations.

What’s Next?

Despite the grammar and construction hiccups, the grasp of theme and tone is evident. Still, Mesa hopes to extend the project much further. One big step would be to generate character interactions, rather than simply lines of dialogue.

“The way it acts now, I can manually choose which data I want to throw in, choose the number of epochs, and say, ‘Throw me out some output.’ That does not necessarily give me an interaction,” she told Built In.

Potential next steps include building an RNN per character or even a new model for each plot line — a lost-love model, for instance, or a long-lost relative model.

Dialogue is also only a small subset of what makes telenovelas tick — think heightened line deliveries and dramatic pauses. Mesa is currently exploring options to incorporate such nuance into the generated script, although none is particularly easy or straightforward.

You may also have noticed from the titles of the source material that the sample lines of dialogue above came from English-language remakes of telenovelas. Mesa is now steering the data toward Spanish-language originals. “Spanish is very expressive in ways that maybe English is not, although that may also be influenced by my first language being Spanish.”

She’s also decided to ramp up the data and has even called in reinforcements to help with transcription. “I’ve definitely made use of family members in Mexico City during COVID,” she said.

In fact, anyone who wishes to assist is welcome to add transcripts to Mesa’s repository.

In the meantime, Mesa recommends that anyone getting started with NLG also consider culling an archetypal pop-culture touchstone as a corpus. “A lot of the learnings come from actually pulling together that data set and kind of figuring out what works and what doesn’t.”

And again, the recognizable patterns will steer you correctly. “What does traditional romantic comedy look like? We already have an image in our head, so as we’re spot-checking a little bit, we can see whether the language that’s populated on the other side is actually indicative of our ground truth,” she said.

When the blowout wedding wraps up, you’ll know you’ve done well.