Complex problems don’t have simple solutions.

Improving social media is a complex problem. It’s also a pressing one: the attack on our Capitol on January 6th should serve as a red flag about the immense danger of a polluted information ecosystem. Democracy depends on a certain level of shared reality and a commitment to civility. Social media, for better or worse, is destined to play a role in our fate as a society. And while it’s cathartic to watch Congress grill Mark Zuckerberg, Jack Dorsey and Sundar Pichai, these interrogations do little to address how unprepared we are, as a society, for social media. We’re never going to fix social media by putting one person in the hot seat.

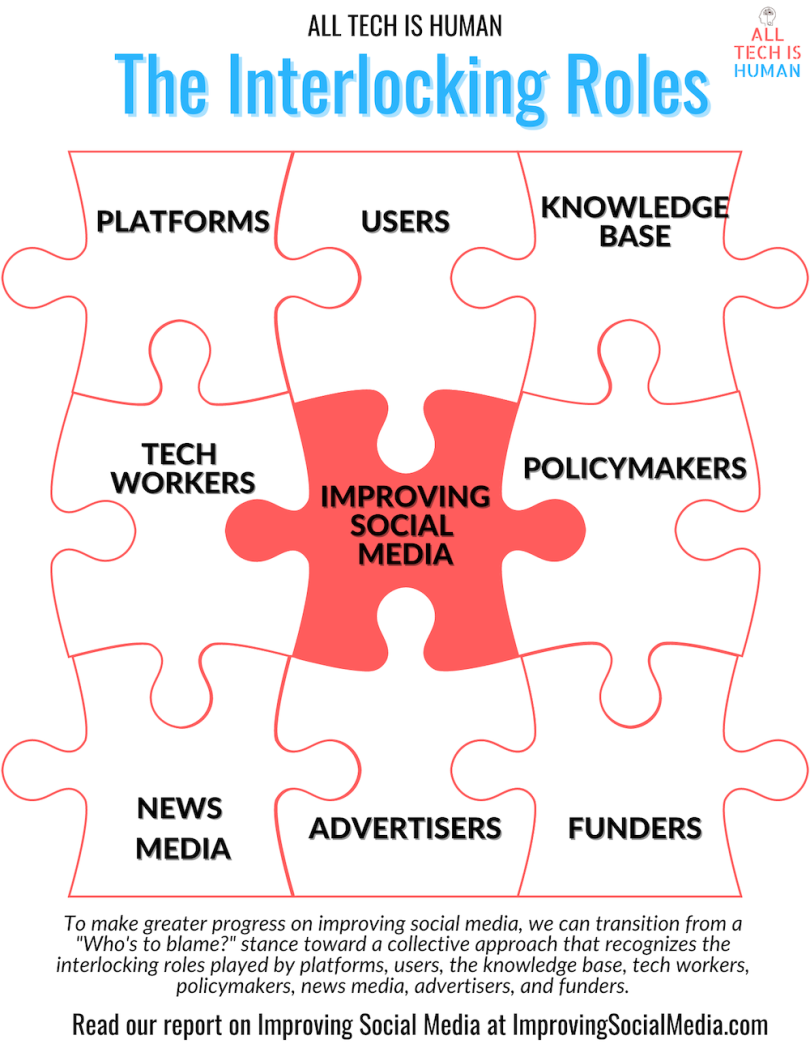

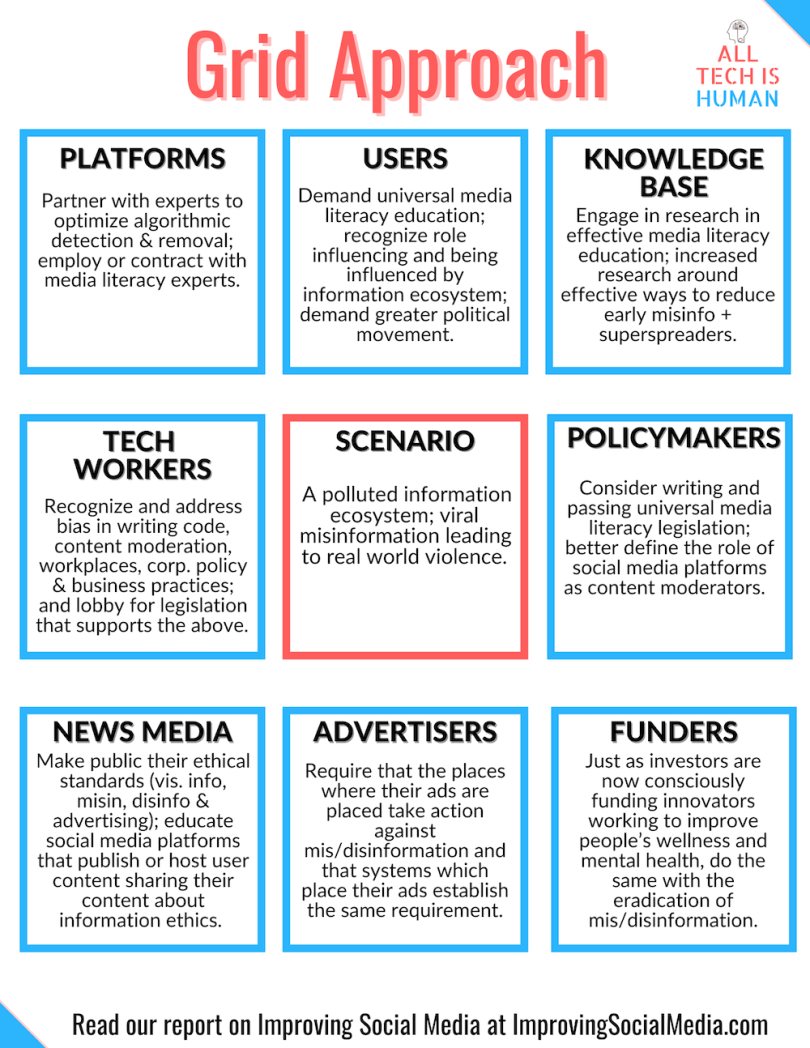

Ensuring that social media promotes and protects both individual civil liberties and the broader public interest will require far more co-creation than the current system allows. Any solution for improving social media will require a holistic, collective approach that considers the interlocking roles played by platforms, users, policymakers, tech workers, news media, advertisers, funders and the knowledge base that informs the entire ecosystem. Although each of these groups brings a vastly different perspective and skill set to the table, everyone has the same goal: creating a social media landscape that respects our shared values, limits harms and serves the interest of democracy.

Understanding the Ecosystem

Like all complex societal issues, improving social media requires understanding how various factors contribute to the overall problem. As such, the answer isn’t just a matter of creating more socially responsible tech companies, electing more informed and proactive policymakers, or producing better educated and more engaged citizens. It’s about all of these factors and more.

For example, if I asked you how we can reduce crime, a multitude of factors would come to mind. Any effective solution would involve changes to the criminal justice system, education and wealth distribution, all while balancing public safety with civil liberties. There is no simple fix.

Likewise, consider the question of how we can reduce automobile fatalities. Industry has a responsibility to make better products, and automakers have built safer vehicles by adding features like seatbelts and airbags and by improving crash testing. Similarly, we require drivers to learn safe driving practices and maintain a license. Government has a major role, too: our country has reduced automobile fatalities by creating and enforcing safer driving laws. Advocacy organizations also participate by putting pressure on industry and government.

So, when thinking about how to improve social media, we need to understand that any effective solution will require a similar multilateral coalition.

This approach is a paradigm shift, moving from merely looking for someone to blame to a view that appreciates how multiple interrelated stakeholders together shape the overall state of social media. That’s the approach my organization, All Tech Is Human, advocates in our new report, entitled Improving Social Media: The People, Organizations and Ideas for a Better Tech Future.

The report takes a broad view: It’s the work of 100 collaborators and features 42 interviews with a diverse range of people across civil society, government and industry. It also showcases more than 100 organizations actively working to improve social media. As the founder of All Tech Is Human, I operate at the intersection of a wide spectrum of perspectives and aim to channel their insights into actionable ways of improving our tech future.

I refer to our current approach to improving social media as being stuck in an “awareness stage,” wherein people recognize the problem but aren’t yet solutions-oriented. This phase is characterized by noticing and pointing out problems, ascribing causes and assigning blame. Of course, there’s plenty of blame to go around:

- Platforms have exploited our data and attention, with a business model that is good for tech companies but often bad for users.

- Policymakers have been asleep at the wheel, doing very little to legislate social media’s place in society. The most consequential law affecting social media right now, Section 230 of the Communications Decency Act of 1996, was written well before the advent of social media as we know it.

- Media outlets have generally stuck with an oversimplified tech-versus-reformers narrative that’s great for storytelling but terrible for educating the general public about the complexity of the problems and possible solutions.

Moving from the awareness stage to an actual improvement stage, in which real change can happen, requires us to consider the various interlocking roles and to create a framework that includes all of these voices in the problem-solving process.

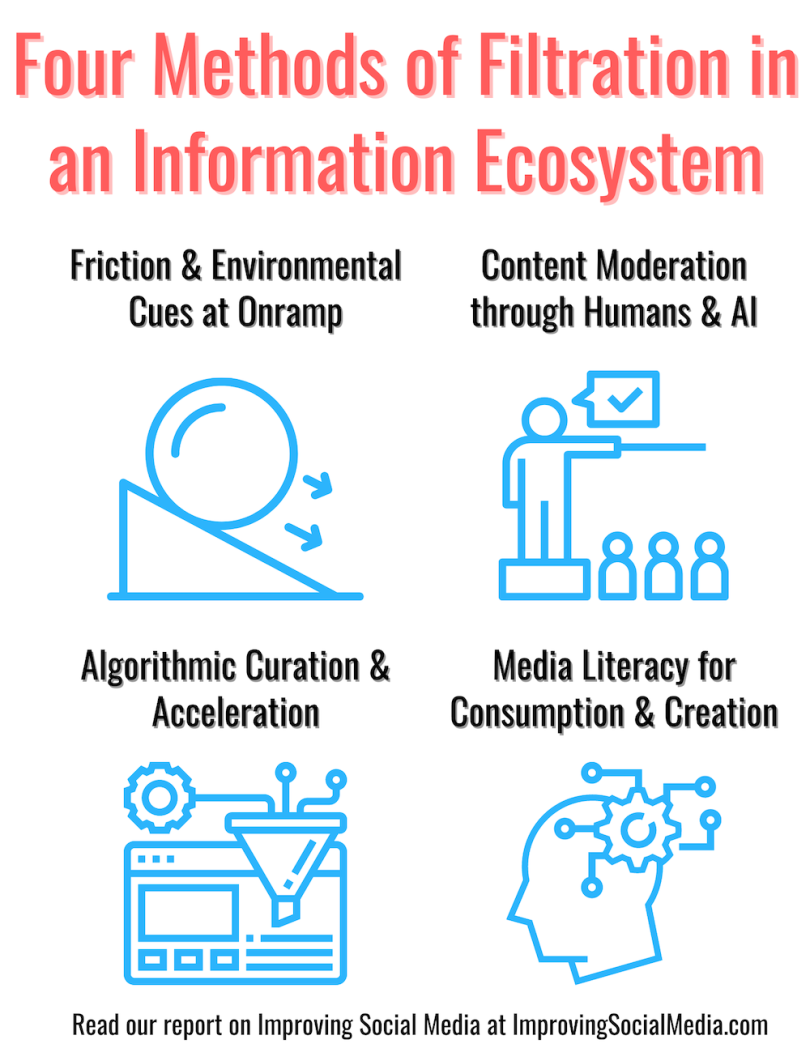

So, for example, in order to improve the information ecosystem and decrease the spread of misinformation, we need to consider:

- When is it appropriate for a platform to take down content?

- How does each social media environment alter human behavior through the way it controls how users comment and share?

- Are there sources of friction that can be added to the process of posting and sharing to reduce the spread of bad information?

- Can advertisers alter the actions of platforms by withholding resources that are central to ad-based models?

- How should we classify social media companies that are private businesses with their own First Amendment rights, even though many citizens relate to platforms in a quasi-governmental fashion?

Finding Human Solutions

The solution isn’t a technical fix. Instead, it’s a political process that involves a wide variety of stakeholders, each with vastly differing opinions about what an improved social media landscape would even look like. To create a better roadmap, we need a better understanding of where we should even be headed. But if I’ve learned one thing from my work, it’s that different groups often have fundamentally incompatible views about what would constitute an ideal social media ecosystem. And it’s hard to draw an effective map when one group wants to go to the beach and another to the mountains.

One of the first steps is finding common ground among these disparate groups. What can bring them together is a shared vision of a social media future that maximizes its ability to connect people and spread knowledge while reducing its harms at both the individual and societal level. Just as different stakeholders have a wide variety of specific ideas about how best to reduce crime or automobile fatalities, improving social media will depend on uniting a broad coalition of groups around the shared goal of protecting individuals and democracy writ large.

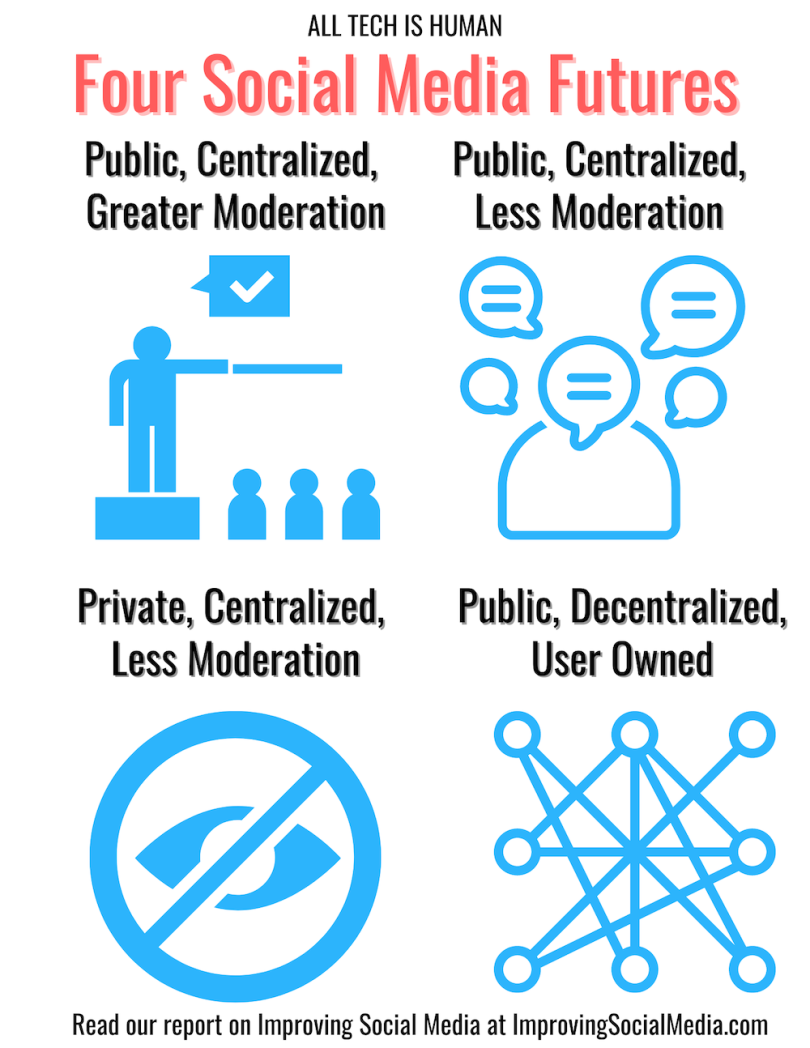

As with every political decision, every path forward involves inherent trade-offs. Increasing content moderation may decrease hate speech and misinformation, but doing so upsets people who believe it gives companies massive, unchecked power and moves us away from the web’s original dream of unfettered communication. More secure communication pleases privacy advocates, but it angers groups trying to monitor and reduce extremist behavior or abusive content. Some people advocate more decentralized social networks, but decentralization would put a much greater onus on each user and raise difficult questions about how adequate moderation would happen.

The Road Ahead

This difficulty mirrors the one we face in reducing crime or automobile fatalities. For example, we would likely have less crime if we instituted perpetual surveillance and draconian penalties for every infraction, regardless of severity. In reality, we balance law enforcement against our societal expectations regarding civil liberties, fairness and freedom of mind. Likewise, we’d have fewer automobile fatalities if every vehicle had a breathalyzer ignition switch and reported your driving patterns, but such a system would be onerous and invasive. It’s all a trade-off.

Similarly, every decision we make about social media is going to come with trade-offs and upset a sizable segment of the population. The path to a better social media world will be increasingly political, marked by all the trade-offs, disagreements and compromises we have become accustomed to with important issues like healthcare and public safety.

Right now, we need to move toward a more action-oriented process that proactively considers the impacts of social media and includes a diverse range of perspectives to develop a course of action. Social media is clearly altering our future. Currently, though, decisions that affect everyone are made with the input and insight of very few people. Any viable path to fixing our situation must be guided by a principle of co-creation. If you are impacted by social media, then you should have the ability to alter its development and deployment. That may sound far-fetched, but it’s how democracy works.