I used to work as a research fellow in academia, and I’ve noticed that academia lags behind industry in adopting the latest tools. I want to share the best basic tools for academic data scientists, but also for early-career data scientists and even non-programmers looking to bring data science techniques into their workflow.

Top 3 Data Science Tools

- IDEs: Jupyter Notebooks / PyCharm / Visual Studio Code

- Google Cloud Platform

- Anaconda

As a field, data science moves at a different speed than other areas. Machine learning constantly evolves, and libraries like PyTorch and TensorFlow keep improving. Research companies like OpenAI and DeepMind keep pushing the boundaries of what machine learning can do (e.g., DALL·E and CLIP). Foundationally, the skills required to be a data scientist remain the same: statistics, Python/R programming, SQL or NoSQL knowledge, PyTorch/TensorFlow and data visualization. However, the tools data scientists use constantly change.

1. IDEs: Jupyter Notebooks / PyCharm / Visual Studio Code

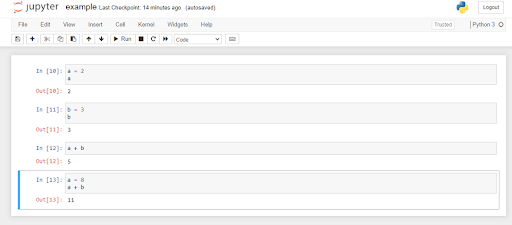

Using the right IDE (Integrated Development Environment) for developing your project is essential. Although these tools are well known among programmers and data science hobbyists, there are still many non-expert programmers who can benefit from this advice. Academia has been slow to adopt Jupyter Notebooks, yet academic research projects are some of the best settings for them: a notebook keeps code, results and explanation in one place, which makes it easier to hand knowledge over to colleagues.

In addition to Jupyter Notebooks, tools like PyCharm and Visual Studio Code are standard for Python development. PyCharm is one of the most popular Python IDEs (and my personal favorite). It’s compatible with Linux, macOS and Windows and comes with a plethora of modules, packages and tools to enhance the Python development experience. PyCharm also has great intelligent code features, such as code completion. Finally, both PyCharm and Visual Studio Code offer great integration with Git tools for version control.

2. Google Cloud Platform for Training and Transferring Machine Learning Models

There are plenty of options for machine learning as a service (MLaaS) to train models on the cloud, such as Amazon SageMaker, Microsoft Azure ML Studio, IBM Watson ML Model Builder and Google Cloud AutoML.

In terms of services provided by each one of these MLaaS suppliers, things constantly change. A few years ago, Microsoft Azure was the best since it offered services such as anomaly detection, recommendations and ranking, which Amazon, Google and IBM did not provide at the time. Things have changed.

Discovering which MLaaS provider is the very best is outside the scope of this article but in 2021 it’s easy to select a favorite based on user interface and user experience: AutoML from Google Cloud Platform (GCP).

Over the past few months I have been working on a bot for algorithmic trading. I started out on Amazon Web Services (AWS), but I ran into a few roadblocks that pushed me to try GCP. (I have used both GCP and AWS in the past and generally am in favor of using whichever system is most convenient in terms of price and ease of use.)

After using GCP, I recommend it because of the work they’ve done on their user interface to make it as intuitive as possible. You can jump on it without any tutorial. The functions are intuitive and everything takes less time to implement.

Another great feature to consider from Google Cloud Platform is Google Cloud Storage. It’s a great way to store your machine learning models somewhere reachable for any back-end service (or colleague) with whom you might need to share them. I realized how important Google Cloud Storage was when we needed to deploy a locally trained model. Google Cloud Storage offered a scalable solution and client libraries for many programming languages, such as Python, C# and Java, which made it easy for any other team member to use the model.
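As a rough sketch of what sharing a locally trained model through Google Cloud Storage could look like in Python: this assumes the `google-cloud-storage` client library is installed and credentials are already configured. The helper names (`get_bucket`, `upload_model`, `download_model`) and the bucket and object names in the comments are hypothetical, chosen only for illustration.

```python
from pathlib import Path


def get_bucket(bucket_name):
    """Return a Cloud Storage bucket handle (needs google-cloud-storage and credentials)."""
    # Imported lazily so the helpers below can be exercised without the library.
    from google.cloud import storage

    return storage.Client().bucket(bucket_name)


def upload_model(bucket, local_path, blob_name):
    """Upload a locally trained model file and return its gs:// URI."""
    blob = bucket.blob(blob_name)
    blob.upload_from_filename(str(local_path))
    return f"gs://{bucket.name}/{blob_name}"


def download_model(bucket, blob_name, local_path):
    """Download a stored model file so a service or colleague can load it."""
    local_path = Path(local_path)
    local_path.parent.mkdir(parents=True, exist_ok=True)
    bucket.blob(blob_name).download_to_filename(str(local_path))
    return local_path


# Example usage (hypothetical bucket and object names):
# bucket = get_bucket("my-team-models")
# upload_model(bucket, "model.pkl", "trading-bot/model.pkl")
# download_model(bucket, "trading-bot/model.pkl", "downloads/model.pkl")
```

Passing the bucket in as an argument keeps the upload and download helpers independent of how authentication is set up, which may differ between a laptop and a back-end service.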

3. Anaconda

Anaconda is a great solution for implementing virtual environments, which is particularly useful if you need to replicate someone else's code. This isn’t as good as using containers, but if you want to keep things simple then it is still a good step in the right direction.

As a data scientist, I always try to make a requirements.txt file that lists all the packages used in my code. At the same time, when I am about to run someone else’s code, I like to start with a clean slate. It only takes two lines of code to start a virtual environment with Anaconda and install all required packages from the requirements file. If, after doing that, I can’t get the code I’m working with to run, then it’s often someone else’s mistake and I don’t need to keep banging my head against the wall trying to figure out what’s gone wrong.
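One possible way to produce such a requirements file from Python is sketched below. It uses only the standard library (`importlib.metadata`, available from Python 3.8) to look up the versions installed in the current environment. The function names `pin_requirements` and `write_requirements` are hypothetical, and this is an illustration rather than the workflow I necessarily follow.

```python
from importlib.metadata import PackageNotFoundError, version


def pin_requirements(package_names):
    """Return (pinned_lines, missing) for the given package names.

    pinned_lines holds "name==version" strings for packages installed in the
    current environment; missing lists the names that could not be found.
    """
    pinned, missing = [], []
    for name in package_names:
        try:
            pinned.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            missing.append(name)
    return pinned, missing


def write_requirements(package_names, path="requirements.txt"):
    """Write the pinned lines to a requirements file and return the missing names."""
    pinned, missing = pin_requirements(package_names)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(pinned) + ("\n" if pinned else ""))
    return missing


# Example usage:
# missing = write_requirements(["numpy", "pandas"])
```

A file written this way can then be installed into a fresh environment with `pip install -r requirements.txt`.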

Before I started using Anaconda, I would often encounter all sorts of issues trying to use scripts that were developed with a specific version of packages like NumPy and Pandas. For example, I recently found a bug in NumPy, and the solution from the NumPy support team was to downgrade to a previous NumPy version (a temporary fix). Now imagine you want to use my code without installing the exact version of NumPy I used. It wouldn’t work. That’s why, when testing other people’s code, I always use Anaconda.
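To make this kind of version mismatch visible before running anything, a small check along the following lines could compare the pinned versions with what is actually installed. This is a minimal sketch using only the standard library; the function names are hypothetical, it only understands exact `name==version` pins, and it compares version strings literally.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_pins(lines):
    """Map package name -> pinned version for every "name==version" line."""
    pins = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, pinned = line.split("==", 1)
            pins[name.strip()] = pinned.strip()
    return pins


def find_mismatches(pins, installed_version=version):
    """Return {name: (pinned, installed)} where the environment differs from the pins.

    installed is None when the package is not installed at all.
    """
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed = installed_version(name)
        except PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


# Example usage:
# with open("requirements.txt", encoding="utf-8") as handle:
#     print(find_mismatches(parse_pins(handle)))
```

An empty result means every exactly pinned package matches the environment; anything else points to the packages worth reinstalling first.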

Don’t take my word for it. Dr. Soumaya Mauthoor compares Anaconda with pipenv for creating Python virtual environments. As you can see, there’s an advantage to implementing Anaconda.

Although many industry data scientists already make use of the tools I’ve outlined above, academic data scientists tend to lag behind the curve. Sometimes this comes down to funding, but you don’t need Google money to make use of Google services. For example, Google Cloud Platform offers a free tier option that’s a great solution for training and storing machine learning models. Anaconda, Jupyter Notebooks, PyCharm and Visual Studio Code are free or open-source tools to consider if you work in data science.

Ultimately, these tools can help any academic or novice data scientist optimize their workflow and become aligned with industry best practices.