The AWS API Gateway has a payload size limit of 10 MB. A compressed payload smaller than 10 MB is accepted by API Gateway. However, API Gateway automatically decompresses the payload, and if the uncompressed size exceeds 10 MB, it may reject the request with an “Error 413 Request entity too large”.
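To see why the uncompressed size is what counts, the Node.js sketch below gzips a highly repetitive 12 MiB body with the built-in `zlib` module and compares both sizes against the limit. The helper name `checkPayload` is hypothetical, and reading "10 MB" as 10 × 1024 × 1024 bytes is an assumption; the exact byte count API Gateway enforces may differ slightly.

```javascript
const zlib = require('zlib');

// Assumption: "10 MB" interpreted as 10 × 1024 × 1024 bytes
const API_GATEWAY_LIMIT_BYTES = 10 * 1024 * 1024;

// Hypothetical helper: compare the compressed and uncompressed sizes of a body
function checkPayload(rawBody) {
  const compressed = zlib.gzipSync(rawBody);
  return {
    uncompressedBytes: rawBody.length,
    compressedBytes: compressed.length,
    // The compressed request on the wire is under the limit...
    compressedFits: compressed.length <= API_GATEWAY_LIMIT_BYTES,
    // ...but this is the size that matters once API Gateway decompresses it
    uncompressedFits: rawBody.length <= API_GATEWAY_LIMIT_BYTES,
  };
}

// 12 MiB of repeated characters compresses to a tiny fraction of its size
const result = checkPayload(Buffer.alloc(12 * 1024 * 1024, 'a'));
console.log(result);
```

Here `compressedFits` is true while `uncompressedFits` is false: exactly the case where a small compressed request can still end in a 413.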

How to Bypass AWS API Gateway Limits With S3 Presigned URLs

- Fetch the presigned URL from S3 via an API Gateway to Lambda setup.

- Use the obtained URL to upload the file directly from the client.

To work around this limit, one recommendation is to use Amazon S3 for large file transfers: the API Gateway response returns an S3 presigned URL, and the client uploads the file directly to S3. The file itself never passes through API Gateway, so the 10 MB limit no longer applies. S3 objects can be as large as 5 TB, although a single PUT request (the approach used in this post) is limited to 5 GB; larger objects require multipart uploads.
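Choosing between a single presigned PUT and a multipart upload comes down to the file size. The sketch below encodes S3's documented limits (a single PUT of up to 5 GB; multipart uploads of up to 10,000 parts, each 5 MB to 5 GB except the last, for objects up to 5 TB), interpreting those units as binary (MiB/GiB/TiB). The function `planUpload` and its default 100 MiB part size are hypothetical choices for illustration, not an AWS API.

```javascript
// S3 limits, interpreted as binary units (assumption)
const MiB = 1024 * 1024;
const GiB = 1024 * MiB;
const MAX_SINGLE_PUT = 5 * GiB;
const MIN_PART_SIZE = 5 * MiB;
const MAX_PART_SIZE = 5 * GiB;
const MAX_PARTS = 10000;
const MAX_OBJECT_SIZE = 5 * 1024 * GiB; // 5 TiB

// Hypothetical helper: decide how a file of sizeBytes should be uploaded
function planUpload(sizeBytes, preferredPartSize = 100 * MiB) {
  if (!Number.isInteger(sizeBytes) || sizeBytes < 0) {
    throw new RangeError('size must be a non-negative integer');
  }
  if (sizeBytes > MAX_OBJECT_SIZE) {
    throw new RangeError('object exceeds the S3 maximum object size');
  }
  // Small enough for one presigned PUT, as in this post
  if (sizeBytes <= MAX_SINGLE_PUT) return { strategy: 'single-put' };

  // Grow the part size if needed so the file fits in at most 10,000 parts
  let partSize = Math.max(preferredPartSize, MIN_PART_SIZE, Math.ceil(sizeBytes / MAX_PARTS));
  partSize = Math.min(partSize, MAX_PART_SIZE);
  return { strategy: 'multipart', partSize, partCount: Math.ceil(sizeBytes / partSize) };
}

console.log(planUpload(50 * MiB));
console.log(planUpload(12 * GiB));
```

For example, `planUpload(12 * GiB)` returns a multipart plan of 123 parts of 100 MiB each; each part would then be uploaded with its own presigned `UploadPart` URL.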

In this blog post, we’ll explore how to upload large files to AWS S3 using presigned URLs.

Using an S3 Presigned URL With API Gateway to Upload a File

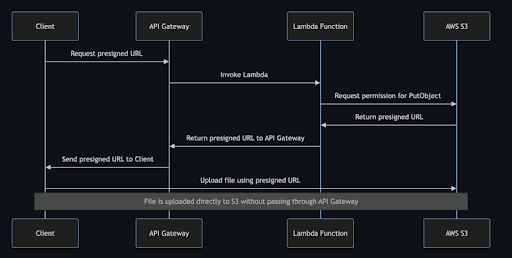

Originally, file uploads were handled through AWS API Gateway, which accepts payloads of at most 10 MB. To accommodate larger files, we implemented a solution using S3 presigned URLs, which lets the client upload files directly to S3 without the size limit imposed by API Gateway.

The process involves two main steps:

- Fetch the presigned URL from S3 via an API Gateway to Lambda setup.

- Use the obtained URL to upload the file directly from the client.

How to Generate an S3 Presigned URL for Large File Uploads Through AWS API Gateway

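First, let’s look at the Lambda function, which is responsible for generating the presigned URL. We’ll use Node.js with the AWS SDK for JavaScript v3. The Lambda’s execution role needs s3:PutObject permission on the bucket, because a presigned URL only grants the permissions of the credentials that signed it: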

const { S3Client, PutObjectCommand } = require('@aws-sdk/client-s3');
const { getSignedUrl } = require('@aws-sdk/s3-request-presigner');

// SDK v3 signs S3 requests with Signature Version 4 by default,
// so no signatureVersion option is needed (that was an SDK v2 setting)
const client = new S3Client({ region: 'eu-west-2' });

const corsHeaders = { 'Access-Control-Allow-Origin': '*' };

exports.handler = async (event) => {
  // Define the parameters for the S3 bucket and the object key
  const params = {
    Bucket: 'YOUR_BUCKET_NAME',
    Key: 'OBJECT_KEY'
  };

  const expires = 3600; // Expiration time for the signed URL (in seconds)

  try {
    const url = await getSignedUrl(client, new PutObjectCommand(params), {
      expiresIn: expires
    });

    // API Gateway's Lambda proxy integration expects statusCode, headers and body
    return {
      statusCode: 200,
      headers: corsHeaders,
      body: JSON.stringify({ url })
    };
  } catch (error) {
    return {
      statusCode: 500,
      headers: corsHeaders,
      body: JSON.stringify({ error: error.message })
    };
  }
};

Client-Side Upload Using React

Next, we’ll use this presigned URL on the client side to upload a file in React:

import axios from 'axios';

// Upload a file (e.g. from an <input type="file">) using a presigned URL
export async function upload(file) {
  const getUrlResponse = await getSignedUrl();
  const url = getUrlResponse.body;

  // Stop if no presigned URL could be obtained
  if (!url) return null;

  return uploadFile(url, file);
}

// Retrieve the presigned URL from the API Gateway endpoint backed by the Lambda
export async function getSignedUrl() {
  try {
    const response = await axios.get('API_GATEWAY_URL');
    return { body: response.data.url };
  } catch (err) {
    console.error('Failed to fetch the presigned URL', err);
    return { body: null };
  }
}

// PUT the file straight to S3; this request never passes through API Gateway
export async function uploadFile(url, file) {
  try {
    const response = await axios.put(url, file, {
      headers: { 'Content-Type': file.type }
    });
    return { body: response.data };
  } catch (err) {
    console.error('Upload to S3 failed', err);
    return { body: null };
  }
}
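A presigned URL only works within the `expiresIn` window set in the Lambda (3600 seconds above). If a client fetches the URL ahead of time rather than right before the upload, it can check the URL first. SigV4 presigned URLs carry the signing time in the `X-Amz-Date` query parameter (format `YYYYMMDDTHHMMSSZ`) and the lifetime in seconds in `X-Amz-Expires`, so the expiry can be computed without calling AWS. The helper names and the 30-second clock-skew margin are assumptions. The URL can also stop working earlier if the temporary credentials that signed it (such as the Lambda execution role's) expire first, so treat the result as an upper bound.

```javascript
// Hypothetical helper: compute when a SigV4 presigned URL expires (or null)
function presignedUrlExpiry(url) {
  const params = new URL(url).searchParams;
  const amzDate = params.get('X-Amz-Date'); // e.g. 20240101T120000Z
  const expiresParam = params.get('X-Amz-Expires'); // lifetime in seconds
  if (!amzDate || !expiresParam) return null; // not a SigV4 presigned URL

  const expiresIn = Number(expiresParam);
  const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
  if (!m || !Number.isFinite(expiresIn)) return null;

  // X-Amz-Date is in UTC; months are zero-based in Date.UTC
  const signedAt = Date.UTC(+m[1], +m[2] - 1, +m[3], +m[4], +m[5], +m[6]);
  return new Date(signedAt + expiresIn * 1000);
}

// Hypothetical helper: true if the URL is expired or about to expire
function isPresignedUrlExpired(url, now = new Date(), skewSeconds = 30) {
  const expiry = presignedUrlExpiry(url);
  // Treat unrecognized URLs as expired so the caller fetches a fresh one
  if (!expiry) return true;
  return now.getTime() + skewSeconds * 1000 >= expiry.getTime();
}
```

For instance, `upload()` could call `getSignedUrl()` again whenever `isPresignedUrlExpired(url)` returns true.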

CORS Configuration on S3

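One common issue during implementation is CORS errors: the browser sends the PUT request to the S3 bucket's domain, which is a different origin from your app, so the bucket itself must allow it. You can configure this in the bucket's Permissions tab, under Cross-origin resource sharing (CORS). The S3 console expects a JSON array of rules, and JSON does not allow comments:

[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["PUT"],
    "AllowedOrigins": ["https://localhost:3000"]
  }
]

"PUT" must be allowed for file uploads. Adjust "AllowedOrigins" to your client's URL; the scheme, host, and port must match exactly.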

Advantages of Using S3 Presigned URLs With API Gateway to Upload a File

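By using S3 presigned URLs, we can bypass the payload limit of AWS API Gateway for large file uploads: the file goes straight from the client application to S3, so API Gateway and Lambda only handle a small request for the URL. The approach is also secure: the presigned URL grants time-limited permission to upload to a single object key, the client never needs AWS credentials, and the upload travels over HTTPS.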

Frequently Asked Questions

What is the AWS API Gateway limit?

The AWS API Gateway payload size limit is 10 MB. API Gateway automatically decompresses compressed payloads, and if the uncompressed payload exceeds 10 MB, it may reject the request with an “Error 413 Request entity too large”.

How do you bypass the AWS API Gateway limit?

You can securely bypass the AWS API Gateway limit by using Amazon S3 presigned URLs. To do so, you must:

- Fetch the presigned URL from S3 via an API Gateway to Lambda setup.

- Use the obtained URL to upload the file directly from the client.