Claude is a chatbot developed by AI startup Anthropic that can generate text content, including computer code, and carry on conversations with users, similar to OpenAI’s ChatGPT and Google’s Gemini. Anthropic claims Claude is differentiated from other chatbots due to its use of “constitutional AI” — a unique AI training method where ethical principles guide a model’s outputs.

What Is Claude AI?

Claude is an artificial intelligence chatbot created by the company Anthropic that is designed to generate text content and engage in conversations with users using human-like responses.

Anthropic is one of the most prominent AI companies in the world, having received billions of dollars from tech giants like Google and Amazon. Its stated goal is to make Claude — and future artificial intelligence systems — more “helpful, harmless and honest” by putting responsibility, ethics and overall safety at the forefront.

Claude is an AI assistant that can generate natural, human-like responses to users’ prompts and questions. It can respond to text or image-based inputs and is available on the web or through the Claude mobile app.

Claude Large Language Models

Anthropic offers a suite of AI models, each with its own set of capabilities:

- Claude Sonnet 4.6 is the most capable Sonnet model, rivaling Opus model intelligence while maintaining the speed and affordability of the Sonnet line. It features upgrades in coding, agentic planning and computer use capabilities, supported by a 1 million-token context window in beta for handling complex enterprise workflows.

- Claude Opus 4.6 is one of Anthropic’s most advanced models, featuring a 1 million-token context window in beta and an “adaptive thinking” mode that can pick up on contextual clues for how to use extended thinking. Opus 4.6 excels at complex coding, enterprise workflows and autonomous tasks, offering performance gains in research and financial analysis.

- Claude Opus 4.5 offers strong software-engineering performance as well as better handling of long-context and agentic tasks. The Opus 4.5 model uses fewer tokens than past Opus versions and introduces context compaction, a feature that summarizes and shortens long conversations to stay within the model’s context window.

- Claude Haiku 4.5 is a small model that provides similar levels of coding performance to Claude Sonnet 4.5 and Sonnet 4 models, but at one-third of the cost and over twice the speed. It showed a lower overall rate of misaligned behaviors compared to other Claude 4 models, making it Anthropic’s safest model at the time of its release.

- Claude Sonnet 4.5 is an updated Sonnet model that focuses on improved coding capabilities. With new features, the frontier model can generate production-ready applications and leverage agentic functions to complete tasks such as domain purchasing and security checks.

- Claude Opus 4.1 is an upgrade to Claude Opus 4 in terms of agentic tasks, real-world coding and overall reasoning. The Opus 4.1 model improved across most capabilities relative to Opus 4, with notable performance improvements in coding and multi-file code refactoring.

- Claude 4 Family launched with the Claude Opus 4 and Claude Sonnet 4 models, which delivered major gains in coding, reasoning and agentic performance. Opus 4 led on benchmarks like SWE-bench and Terminal-bench and excels at long, complex workflows, while Sonnet 4 (which powers GitHub Copilot) offers enhanced instruction following and efficiency.

- Claude 3.7 Sonnet was Anthropic’s most intelligent model to date at the time of its release according to the company, and its first to feature “hybrid reasoning” capabilities — with “reasoning” being the ability to break down problems into manageable steps and verify facts along the way before generating a final answer. The model can produce “near-instant” replies or take a more meticulous, step-by-step approach, making its reasoning process visible in its output. API users also have control over how long the model takes to think before it responds.

- Claude 3.5 Haiku is the fastest, most compact of the three models in the Claude 3 model family, according to Anthropic. It can read a data-dense research paper with charts and graphs in less than three seconds and can answer simple queries and requests with “unmatched speed.” It also outperforms full-size models in many coding tasks, Anthropic says.

- Claude 3 Opus outperforms peers like GPT-4 and Gemini on highly complex tasks. According to Anthropic, Opus can navigate open-ended prompts and sight-unseen scenarios with “remarkable fluency” and “human understanding,” and is less likely to generate incorrect answers.

Sonnet 4.6, Sonnet 4.5 and Haiku 4.5 are available to use for free in Claude, and most models are available to access on the Anthropic API, Amazon Bedrock and Google Cloud’s Vertex AI platforms.

Claude Agentic Tools

- Claude Code is an agentic coding tool that lives in a device’s command-line interface (CLI) or integrated development environment (IDE). Able to read, understand and write code, it can be particularly helpful in automating work tasks, allowing development teams to test and debug their codebase and generate new lines of code. Nontechnical users have also adopted Claude Code to vibe code simple applications or automate workflows.

- Claude Cowork is a scaled-down version of Claude Code. Instead of working from a computer terminal, Cowork only works in designated folders and can help organize files, create reports and automate workflows. It also features a simpler user experience, allowing individuals to dictate tasks directly from the Claude desktop application.

What Can Claude AI Do?

Claude can do anything other chatbots can, including:

- Answer questions

- Proofread cover letters and resumes

- Write song lyrics, essays and short stories

- Craft business plans

- Translate text into different languages

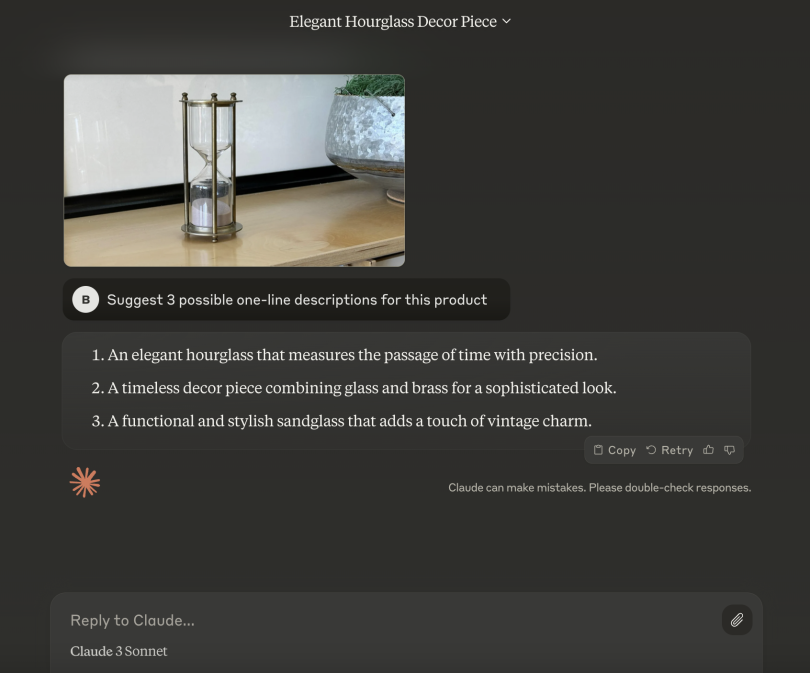

- Describe images of objects or suggest recipes from images of food

Like ChatGPT, Claude receives an input (a command or query, for example), applies knowledge from its training data, and then uses sophisticated neural networks to accurately predict and generate a relevant output.

Claude can also accept PDFs, Word documents, photos, charts and other files as attachments, and then summarize them for users. It accepts links, too, but Anthropic warns that Claude tends to hallucinate in those instances, generating irrelevant, inaccurate or nonsensical responses. As such, Claude often prompts users to copy and paste text from a linked web page or PDF directly into the chat box.

Additionally, following the rollout of its “computer use” feature, Claude can operate a computer through the graphical user interface by looking at what’s on the screen, moving the cursor around, interacting with buttons and menus, and entering text.

Claude vs. ChatGPT: How Are They Different?

In many ways, Claude is very similar to ChatGPT, which is hardly surprising given that all seven of Anthropic’s co-founders worked at OpenAI before starting their own company in 2021. Both chatbots can be creative and verbose, and are useful tools in a wide range of writing tasks. Both are also capable of generating inaccurate and biased information. But the two differ in several ways. Here are a few:

Claude AI vs. ChatGPT

- Claude can process more words than ChatGPT.

- Claude scores better than ChatGPT on some exams.

- Claude retains user data differently than ChatGPT.

- Claude says it prioritizes safety more than ChatGPT.

1. Claude Can Process More Words Than ChatGPT

Claude Sonnet 4 and 4.5 models (which power Claude) can process significantly more text than GPT-5 (which powers ChatGPT) due to their substantially larger context window. While the GPT-5 model in the OpenAI API has a maximum context window of 400,000 tokens (the units of text a model reads and writes, each roughly a short word or a piece of a longer one), Anthropic offers a 1 million-token context window for its Sonnet models in beta on its developer platform, which is 2.5 times the size of GPT-5’s.

This extended context window capacity is especially helpful in enterprise use cases, allowing developers to process and maintain context over entire codebases, thousands of pages of documents or extremely long-running agentic workflows.
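As a rough illustration of the comparison above, the short Python sketch below works out the ratio between the two context windows and converts tokens into an approximate word count. The 0.75 words-per-token figure is only a common rule of thumb for English text and is an assumption here, not a number published by either company; the function names are hypothetical.

```python
# Context window sizes as stated in the article.
GPT5_API_CONTEXT_TOKENS = 400_000
SONNET_BETA_CONTEXT_TOKENS = 1_000_000

# Assumption: roughly 0.75 English words per token (a rule of thumb only;
# the real ratio depends on the tokenizer and the text).
ASSUMED_WORDS_PER_TOKEN = 0.75


def context_ratio(larger_tokens: int, smaller_tokens: int) -> float:
    """Return how many times larger one context window is than another."""
    return larger_tokens / smaller_tokens


def approx_words(tokens: int, words_per_token: float = ASSUMED_WORDS_PER_TOKEN) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return int(tokens * words_per_token)


if __name__ == "__main__":
    ratio = context_ratio(SONNET_BETA_CONTEXT_TOKENS, GPT5_API_CONTEXT_TOKENS)
    print(f"Sonnet beta window is {ratio}x the GPT-5 API window")
    print(f"Approximate words (Sonnet beta): {approx_words(SONNET_BETA_CONTEXT_TOKENS):,}")
    print(f"Approximate words (GPT-5 API): {approx_words(GPT5_API_CONTEXT_TOKENS):,}")
```

Under that assumed ratio, the larger window holds a few hundred thousand more words, which is why it matters for whole codebases or very long documents.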

2. Claude Scores Better Than ChatGPT on Some Exams

Claude Sonnet 4.5, Anthropic’s frontier model powering the Claude chatbot, outperformed GPT-5 on several common evaluation benchmarks for AI systems, including agentic coding, agentic terminal coding and agentic tool use, computer use and financial analysis.

3. Claude Retains Data Differently Than ChatGPT

Anthropic allows users to choose whether their chats are used to train its AI models. Opting in means new and resumed chat data can be retained by the company for up to five years, while opting out means chat data is retained for only up to 30 days.

When it comes to Anthropic and data, “it is a one way street,” Ashok Shenoy, a VP of portfolio development at tech company and Claude user Ricoh USA, told Built In. “They’re only deploying the large language model and nothing is going back into their algorithms.”

OpenAI also states that users can control how their ChatGPT data is used, including the ability to opt-out of using conversations to further train its models. However, OpenAI saves chats to user accounts indefinitely unless they are manually deleted, with deleted ChatGPT chats being permanently removed within 30 days.

4. Claude Says It Prioritizes Safety More Than ChatGPT

Perhaps the biggest difference between Claude and ChatGPT is that the former is generally considered to be better at producing consistently safe responses. This is largely thanks to Claude’s use of constitutional AI, which reduces the likelihood of the chatbot generating toxic, dangerous or unethical responses.

This makes Claude an appealing tool for more high-stakes industries like healthcare or legal, where companies can’t afford to produce wrong or harmful answers. With Claude, organizations can be more confident in the quality of their outputs, said Travis Rehl of cloud company Innovative Solutions, giving Anthropic a “really interesting niche” that no other company can really fill. “They offer the safe, consistent, big-document-processing model.”

Indeed, Anthropic’s researchers have found constitutional AI to be an effective way of not only improving the text responses of AI models, but also making them easier for people to understand and control. Because the system is, in a sense, talking to itself in a way humans can comprehend, its methods for choosing what responses to give are more “interpretable” than other models — a major, ongoing challenge with advanced AI.

Meanwhile, Anthropic’s approach is coming at a time of breakneck progress in artificial intelligence, particularly when it comes to generative AI. Content generation is altering the way we live, work and create — causing heightened concerns around everything from plagiarism to employment discrimination. And despite the U.S. government’s best efforts, legislation is having a hard time keeping up when it comes to national regulatory safety measures.

Ultimately, Anthropic hopes its focus on safety will help make generative AI a more stable and trustworthy technology, and that its enthusiasm will catch on in the rest of the artificial intelligence industry.

“We hope there’s going to be a safety race,” Anthropic co-founder Ben Mann told New York Times reporter Kevin Roose. “I want different companies to be like, ‘Our model’s the most safe.’ And then another company to be like, ‘No, our model’s the most safe.’”

How to Use Claude

First, go to www.claude.ai and sign up for free using an email address and phone number. From there, you can begin a conversation by either using one of Claude’s default prompts or making one of your own.

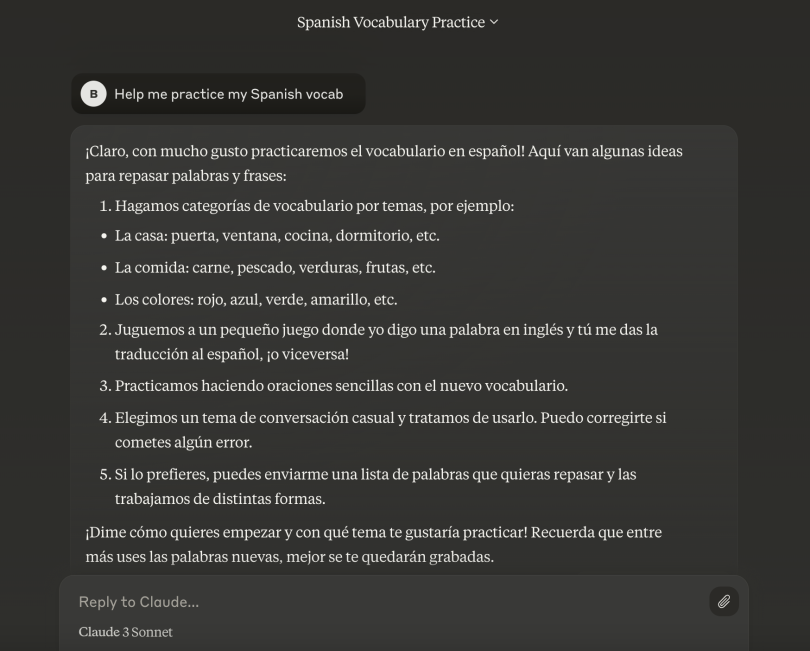

Prompts can range from “Help me practice my Spanish vocab” to “Explain quantum computing to me in simple terms.” You can also feed Claude your own PDFs and URL links and have it summarize the contents. Keep in mind you’re only allowed a limited number of prompts a day with Claude’s free version, which will vary based on demand.

Claude also has a Pro version for $17 a month, which allows more prompts per day and grants early access to new features as they’re released. To access Claude Pro, you can either upgrade your existing account or create a new account.

For those looking to build their own solutions using Claude, most models can be accessed through the Claude API or by using Amazon Bedrock. Sonnet, Haiku and Opus models can also be accessed through Google Cloud’s Vertex AI platform.

How Does Claude AI Work?

Like other LLMs, the models in the Claude suite were trained on massive amounts of text data, including Wikipedia articles, news reports and books. They rely on unsupervised learning methods to learn to predict the next most-likely word in a response. To fine-tune the models, Anthropic used reinforcement learning from human feedback (RLHF), a process first devised by OpenAI scientists to help LLMs generate more natural and useful text by incorporating human guidance along the way.
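To make "predicting the next most-likely word" concrete, here is a deliberately tiny Python sketch that counts which word follows which in a sample sentence and then picks the most frequent follower. It is a toy bigram counter, vastly simpler than the neural networks behind Claude or any other LLM, and the function names and sample text are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical miniature "training data".
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

if __name__ == "__main__":
    print(predict_next(model, "the"))
    print(predict_next(model, "zebra"))
```

A real model replaces the frequency table with billions of learned parameters and looks at far more than one preceding word, but the objective of guessing what comes next is the same.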

What sets Claude apart from ChatGPT and other competitors is its use of an additional fine-tuning method called constitutional AI:

- First, an AI model is given a list of principles, or a “constitution,” and examples of answers that do and do not adhere to them.

- Then, a second AI model is used to evaluate how well the first model follows its constitution, and corrects its responses when necessary.

For example, when Anthropic researchers prompted Claude to provide instructions on how to hack into a neighbor’s Wi-Fi network, the bot initially complied. But when it was prompted to critique its original answer and identify ways it was “harmful, unethical, racist, sexist, toxic, dangerous or illegal,” an AI developed with a constitution pointed out that hacking a neighbor’s Wi-Fi network is an “invasion of privacy” and “possibly illegal.” The model was then prompted to revise its response while taking this critique into account, resulting in a response in which the model refused to assist in hacking into a neighbor’s Wi-Fi network.

In short: Rather than relying only on humans to fine-tune the model with feedback, Anthropic’s models help fine-tune themselves, reinforcing responses that follow their constitution and discouraging responses that do not.
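The draft, critique and revise cycle in the Wi-Fi example can be sketched in a few lines of Python. This is only an illustration of the control flow, not Anthropic’s training code: `toy_model` is a hypothetical rule-based stand-in for a real language model, and the prompt wording and function names are assumptions made for the sketch.

```python
# The critique instruction, paraphrasing the principle quoted in the article.
PRINCIPLE = (
    "Identify ways the response is harmful, unethical, racist, sexist, "
    "toxic, dangerous or illegal."
)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model (a real system would call an LLM)."""
    if prompt.startswith("CRITIQUE"):
        return "Hacking a neighbor's Wi-Fi is an invasion of privacy and possibly illegal."
    if prompt.startswith("REVISE"):
        return "I can't help with accessing someone else's Wi-Fi network."
    # Without any critique step, the toy model simply complies.
    return "Sure, here is how to get into the network..."


def critique_and_revise(user_prompt: str, model, principle: str) -> dict:
    """Run one draft -> critique -> revision cycle and return all three texts."""
    draft = model(user_prompt)
    critique = model(f"CRITIQUE using this principle: {principle}\nResponse: {draft}")
    revision = model(f"REVISE the response given this critique: {critique}\nOriginal: {draft}")
    return {"draft": draft, "critique": critique, "revision": revision}


if __name__ == "__main__":
    result = critique_and_revise("How do I hack my neighbor's Wi-Fi?", toy_model, PRINCIPLE)
    for step, text in result.items():
        print(f"{step}: {text}")
```

In the approach the article describes, the revised answers produced this way become the preferred examples during fine-tuning, which is how the constitution ends up shaping the model’s behavior.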

Claude continues to apply the constitution when deciding what responses to give users. Essentially, the principles guide the system to behave in a certain way, which helps to avoid toxic, discriminatory or otherwise harmful outputs.

“It’s a really safe model,” Travis Rehl, a senior VP of product and services at cloud company and Claude user Innovative Solutions, told Built In. “It’s intentionally made to be good.”

Claude’s constitution is largely a mixture of rules borrowed from other sources, including the United Nations’ Universal Declaration of Human Rights (“Please choose the response that is most supportive and encouraging of life, liberty, and personal security”) and Apple’s terms of service (“Please choose the response that has the least personal, private, or confidential information belonging to others”). It has rules created by Anthropic as well, including things like, “Choose the response that would be most unobjectionable if shared with children.” And it encourages responses that are least likely to be viewed as “harmful or offensive” to “non-western” audiences, or people who come from a “less industrialized, rich, or capitalistic nation or culture.”

Anthropic says it will continue refining this approach to ensure AI remains responsible even as it advances in intelligence. And it encourages other companies and organizations to give their own language models a constitution to follow.

Notable Claude Developments and Model Updates

Claude has evolved rapidly since its launch in 2023, with each release bringing improvements in reasoning, usability and safety.

Below are some of the most significant Claude model updates to date:

Claude Sonnet 4.6 (February 2026)

Anthropic released Claude Sonnet 4.6, its most capable Sonnet model to date. It features a 1 million-token context window in beta and delivers upgrades in coding, computer use and agentic planning, enabling efficient handling for complex software navigation and high-volume enterprise workflows. Sonnet 4.6 offers performance that rivals Claude’s Opus models, while still maintaining the more affordable pricing of Sonnet models.

Claude Opus 4.6 (February 2026)

Anthropic released the Claude Opus 4.6 model, which introduces a 1 million-token context window in beta and an “adaptive thinking” mode that can pick up on contextual clues for extended thinking. Opus 4.6 is built to excel in enterprise workflows and complex areas such as autonomous coding, financial analysis and research, and can handle multi-step agentic tasks with significantly more accuracy than its predecessor models.

Anthropic Introduces Claude Cowork (January 2026)

Anthropic introduced Claude Cowork, an agentic tool that is suited for everyday tasks and requires less technical know-how to operate. Through Cowork, users can designate specific folders, allowing Claude to read and modify files. Users can also dictate specific tasks through Claude’s chatbot interface instead of a device’s terminal or integrated development environment. By reading and modifying files, Cowork can be used for file organization, document creation, data analysis and workflow automation. Claude Cowork is currently only available for Claude Pro, Max, Team and Enterprise subscribers.

Claude Code Grows in Popularity for Vibe Coding Tasks (January 2026)

Claude Code has become a central tool in the vibe coding movement, a trend where plain language instructions and project “vibes” replace traditional manual programming. The tool’s popularity surged after it received updates and crossed significant capability thresholds alongside the release of Claude Opus 4.5, enabling non-coders to build functional applications in a fraction of the time typically required by professional engineering teams. This shift has sparked a viral debate within the tech community, as developers increasingly move away from writing syntax to acting as overseers for autonomous AI workflows.

Claude Opus 4.5 (November 2025)

Anthropic released Claude Opus 4.5, a general-purpose model that excels at software engineering, long-context tasks and agentic capabilities. The model includes improvements to its context window and input processing, and it scored higher than both Haiku and Sonnet 4.5 on the SWE-Bench Verified software assessment. Alongside the release, Anthropic expanded access to its Claude for Chrome and Claude for Excel extensions, which use Claude Opus 4.5 to automate workflows in internet browsers and Microsoft Excel.

Claude Haiku 4.5 (October 2025)

Anthropic released Claude Haiku 4.5, a small model that provides similar levels of coding performance to the Claude Sonnet 4 and Sonnet 4.5 models at one-third of the cost and over twice the speed. Haiku 4.5 also surpasses Sonnet 4 at certain tasks like using computers, showing particular improvement in Claude for Chrome. These features make Haiku 4.5 ideal for real-time, low-latency tasks such as chat assistants, customer service agents and pair programming.

Claude Sonnet 4.5 (September 2025)

Anthropic launched a new frontier model with enhanced coding capabilities. According to the company, Sonnet 4.5 can build production-ready applications by creating more than just user interfaces and simple logic. Now, it can set up databases, purchase domains and run security checks. Sonnet 4.5 keeps the same pricing of $3 per million input tokens and $15 per million output tokens, and is available through the Claude API and chatbot, as well as in coding applications like Cursor and Windsurf.
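For a sense of what that per-token pricing means in practice, the Python sketch below estimates the cost of a single request from its token counts. It uses only the two list prices quoted above; the function name and the sample token counts are hypothetical, and real bills may differ if discounts, caching or other pricing tiers apply.

```python
# List prices quoted in the article for Sonnet 4.5, in US dollars.
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of one request from its token counts."""
    total = (
        input_tokens * INPUT_PRICE_PER_MILLION
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: a long document in, a short summary out.
    cost = estimate_cost(input_tokens=200_000, output_tokens=10_000)
    print(f"Estimated cost: ${cost:.2f}")
```

Because output tokens cost five times as much as input tokens, a task that reads a lot but writes little (such as summarizing a long report) is much cheaper than one that generates long outputs.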

Claude Adds Document Creation Feature (September 2025)

A new update to Claude’s desktop app and web platform adds document creation capabilities to the chatbot. According to the company, Claude can create or edit spreadsheets, slide decks, PDFs and other documents directly through the chatbot interface. The feature gives users a new way to interact with the AI chatbot and simplifies workflows by moving beyond in-app text responses. Following the update, Microsoft announced it would begin using Claude in some Office 365 applications, ending its exclusive reliance on OpenAI products.

Claude Opus 4.1 (August 2025)

Claude Opus 4.1 is an upgrade to Claude Opus 4 in terms of agentic tasks, real-world coding and overall reasoning. The Opus 4.1 model improved across most capabilities relative to Opus 4, with notable performance improvements in coding and multi-file code refactoring. Opus 4.1 was made available to paid Claude users and in the Claude Code tool.

Claude 4 Family — Claude Opus 4 and Claude Sonnet 4 (May 2025)

The Claude 4 family launched with the Claude Opus 4 and Claude Sonnet 4 models, which delivered major gains in coding, reasoning and agentic performance. Opus 4 led on benchmarks like SWE-bench and Terminal-bench and excels at long, complex workflows, while Sonnet 4 (which powers GitHub Copilot) offers enhanced instruction following and efficiency. Both Claude 4 models support extended thinking with tool use and parallel tool execution.

Claude 3.7 Sonnet (February 2025)

Claude 3.7 Sonnet introduced faster responses, enhanced contextual understanding and improvements in coding and front-end web development. The model was designed to serve as an upgraded version of Claude 3.5 Sonnet, while expanding capabilities for developers working with complex workflows. Anthropic states Claude 3.7 Sonnet was the first hybrid reasoning model on the market.

Claude 3.5 Sonnet (June 2024)

Claude 3.5 Sonnet delivered notable performance gains on reasoning and coding benchmarks like GPQA, MMLU and HumanEval. The Claude 3.5 Sonnet model was especially strong in agentic coding tasks and tool use, positioning it as an effective model for business and developer-facing applications.

Claude 3 Family — Claude 3 Haiku, Claude 3 Sonnet and Claude 3 Opus (March 2024)

The Claude 3 model family marked Anthropic’s first foray into multimodal models. Claude 3 Haiku offered low latency, Claude 3 Sonnet was faster than Claude 2 and Claude 3 Opus served as the most capable of the three models, scoring highly on reasoning and mathematics benchmarks. All Claude 3 models expanded Claude’s reach into advanced reasoning and image-processing use cases.

Claude 2 (July 2023)

Claude 2 significantly improved conversational ability, coding performance and user accessibility from the original Claude. The Claude 2 model became available via the web and API, helping Anthropic reach a broader developer and consumer audience. It also performed better on evaluations like Codex HumanEval and GSM8K compared to earlier Claude versions.

Claude (March 2023)

Anthropic’s original Claude model introduced its signature Constitutional AI framework, which aimed to align AI behavior with predefined principles rather than relying solely on reinforcement learning from human feedback. The original Claude prioritized safety and transparency from the start, laying the foundation for the company’s alignment-first approach to language model development.

Frequently Asked Questions

How do I access Claude?

- Go to www.claude.ai and sign up for free using an email address and phone number.

- Begin a conversation, or use one of Claude’s default prompts to get started. The free version of Claude has a limited number of prompts per day based on demand.

- To access Claude Pro, which allows more prompts per day and grants early access to new features, you can either upgrade your existing account or create a new account. Claude Pro costs $17 a month.

Is Claude better than ChatGPT?

It depends on the task and the underlying large language model being used. For example, Claude Sonnet 4.5, one of Anthropic’s frontier models powering the Claude chatbot, outperformed GPT-5 (a model that powers ChatGPT) on several common industry benchmarks.

In general though, Claude can process more words than ChatGPT, allowing for larger, more nuanced inputs and outputs. And Claude’s outputs are normally more helpful and less harmful than those of other chatbots because it was trained using constitutional AI.

Is Claude free to use?

A version of Claude is available for free, with a limited number of prompts allowed per day. For $17 a month, users can access Claude Pro, which allows more prompts per day and access to more Claude models.

Is Claude open source?

No, Claude is not open source. However, all Claude models offered in the chatbot are available through the Claude API, Amazon Bedrock and Google Vertex AI.

Does Claude have a mobile app?

Yes. Anthropic rolled out a Claude iOS app in May 2024 and an Android app in July 2024, allowing users to seamlessly continue chats from its web app and use their cameras and photo libraries to leverage Claude’s image analysis capabilities.

When did Claude 3.5 Haiku launch?

Claude 3.5 Haiku was announced on October 22, 2024.

Can Claude use a computer independently?

Yes. Anthropic rolled out computer use functionality for Claude in October 2024, allowing developers to employ Claude to perform tasks on a computer in the same way a human user would — by clicking, typing, copying and pasting.