Elasticsearch is an optimized NoSQL search engine built on Apache Lucene. It stores and indexes structured or unstructured data in near real time, enabling fast full-text search, analytics and data retrieval across large data sets. Elasticsearch is also distributed, which means that it can scale horizontally across many different machines.

In this article, you will learn how to set up an Elasticsearch cluster locally and see an example of how to use its Python client. So, let’s get started.

What Is Python Elasticsearch?

Elasticsearch is a distributed NoSQL search engine built on Apache Lucene. Python Elasticsearch is the official Python client for interacting with Elasticsearch, which allows developers to connect to clusters, create indexes and manage documents using Python code.

What Is Elasticsearch Used for?

Elasticsearch can be used for handling different kinds of data, such as textual and numerical data. Unstructured data is stored in a serialized JSON format, making Elasticsearch a NoSQL database. Unlike most NoSQL databases, however, Elasticsearch is optimized for its search engine capabilities.

Elasticsearch is open-source, fast, easy-to-scale and distributed. These factors make it attractive for various applications, like websites, web applications and other applications with lots of dynamic content, as well as logging analytics.

How Elasticsearch Works

Internally, when you first set Elasticsearch up, it ingests your data into an index. Here, an index means an optimized collection of documents. Furthermore, documents are collections of fields, which are the key-value pairs that contain your data. By default, Elasticsearch indexes all data and different kinds of data have their own optimized data structure.

Elasticsearch is so fast because of the index that it creates during data ingestion, which uses optimal data structures for different fields. By querying the index instead of the original data, you can retrieve data quickly.

For example, it stores text fields in something called an inverted index (it stores numeric fields in BKD trees). In short, the inverted index is a data structure that supports fast, full-text searches. An inverted index lists every unique word that appears in any document and identifies all of the documents each word occurs in. It’s an important data structure since it enables lightning-fast full-text searches, which is one of the main benefits of Elasticsearch.
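To make the idea concrete, here is a minimal sketch of an inverted index in plain Python. It is a toy illustration, not how Elasticsearch or Lucene implement it internally; the function name build_inverted_index and the sample documents are made up for this example.

```python
from collections import defaultdict


def build_inverted_index(documents):
    """Map each unique lowercased word to the sorted IDs of the documents containing it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return {word: sorted(ids) for word, ids in index.items()}


# Hypothetical sample documents, keyed by document ID.
documents = {
    1: "a quiet town hides a secret",
    2: "a secret agent returns home",
    3: "the town festival",
}

inverted_index = build_inverted_index(documents)
print(inverted_index["secret"])  # [1, 2]
print(inverted_index["town"])    # [1, 3]
```

Looking up a word is a single dictionary access, so the cost of a search does not depend on scanning every document's text.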

You can use Elasticsearch in a schema-less way, meaning that it can index documents automatically without explicit specifications of how to handle different kinds of data. This principle makes it easy to start using Elasticsearch. Sometimes, however, you might want to explicitly define mappings to take full control of how your data is indexed for performance or precision purposes. Elasticsearch allows this approach as well.

In Elasticsearch, a mapping specifies the different data types of different fields in your documents, and an analyzer processes text by breaking it into terms (tokenization) and possibly transforming them (e.g., lowercasing or stemming).
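The following sketch shows roughly what an analyzer does to a piece of text before it is indexed. It is a simplified stand-in written for this article, not one of Elasticsearch's built-in analyzers: the function simple_analyze and the tiny stop-word list are assumptions, and real analyzers can apply further steps such as stemming.

```python
import re

# Hypothetical, tiny stop-word list for illustration only.
STOP_WORDS = {"a", "an", "the", "of", "and"}


def simple_analyze(text):
    """Lowercase the text, split it into alphanumeric tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [token for token in tokens if token not in STOP_WORDS]


terms = simple_analyze("The Return of the Secret Agent!")
print(terms)  # ['return', 'secret', 'agent']
```

The resulting terms, rather than the raw text, are what end up as entries in the inverted index.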

How to Set Up and Use Elasticsearch

1. Install Docker and Download Data Set

You will need a couple of things to follow this example on your computer. Before beginning, you should:

- Install Docker

- Download the data set I use in this example

- Create a virtual environment and install some packages to that environment

2. Create a Virtual Environment With Python

Here’s how to create a virtual environment from the command line using Python:

$ python -m venv .venv

$ source .venv/bin/activate

$ python -m pip install pandas notebook elasticsearch==8.5.2

If you’re using Windows, instead of the second command, run:

$ .venv\Scripts\activate.bat

Here, pip is of course the package installer for Python. It installs packages from the Python package index and other indexes as well.

3. Run an Elasticsearch Cluster With Docker

Using Docker is a straightforward way to run Elasticsearch locally, which is why it needs to be installed and running. To start a single-node Elasticsearch cluster that can be used for developing locally, you can run the following line (that might be split into multiple lines on your screen) in the terminal:

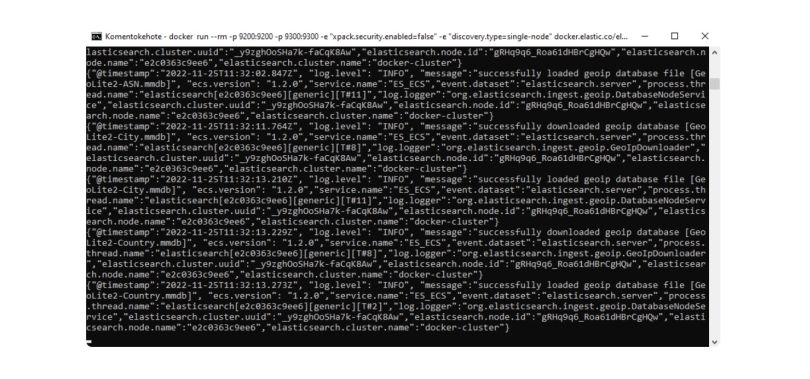

$ docker run --rm -p 9200:9200 -p 9300:9300 -e "xpack.security.enabled=false" -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:8.5.2When you run this command, you should see a lot of text, something like this:

The command started an Elasticsearch cluster on your local computer. Here’s a rundown of what the different parts of the command do:

The docker run command is used to run an image inside a container, in this case, Elasticsearch with version 8.5.2.

The --rm parameter tells Docker to clean up and remove the file system of the container that is being run when the container exits.

The -p 9200:9200 -p 9300:9300 parameter defines the ports used. Port 9200 is used for RESTful API requests, while 9300 handles internal cluster communication between Elasticsearch nodes.

The -e "xpack.security.enabled=false" parameter disables security features. This is useful for local development but should never be used in production.

The -e "discovery.type=single-node" parameter tells Elasticsearch to form a cluster with a single node instead of trying to discover other nodes.

4. Test Elasticsearch Cluster With Python

Now we can test connecting to the Elasticsearch cluster by creating and running the following Python script:

from elasticsearch import Elasticsearch

es = Elasticsearch("https://localhost:9200")

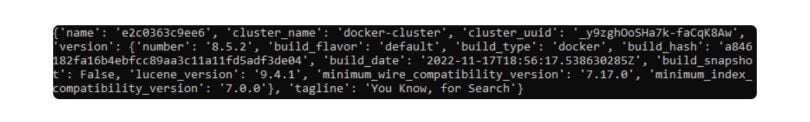

print(es.info().body)Running it in your virtual environment should return an output that looks something like this:

The output is a JSON object containing cluster metadata, such as version number and node information.

Now we are ready to create an Elasticsearch index and add some data to it!

5. Read in Data Set With Pandas

Let’s first use Pandas to read the data set we downloaded in the beginning. This can be done with the following two lines of Python code:

import pandas as pd

dataframe = pd.read_csv("netflix_titles.csv", keep_default_na=False)

The keep_default_na=False parameter stores the empty values in the data set as empty strings instead of NaN, which is not a valid JSON value and which Elasticsearch therefore doesn’t accept.
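The reason NaN causes trouble is most likely that it has no representation in standard JSON, which is the format documents are sent in. The sketch below uses only Python's json module to show the difference between a NaN value and an empty string; the sample records and the helper to_strict_json are made up for illustration and do not involve Elasticsearch itself.

```python
import json

# A record as it might look if a missing value were loaded as NaN.
record_with_nan = {"title": "Example Title", "director": float("nan")}
# The same record with the missing value kept as an empty string.
record_with_empty = {"title": "Example Title", "director": ""}


def to_strict_json(record):
    """Serialize a record, refusing values that the JSON standard does not define."""
    return json.dumps(record, allow_nan=False)


print(to_strict_json(record_with_empty))

try:
    to_strict_json(record_with_nan)
    nan_rejected = False
except ValueError:
    # json.dumps raises ValueError for NaN when allow_nan=False
    nan_rejected = True

print(nan_rejected)  # True
```

An empty string serializes cleanly, which is why reading the CSV with keep_default_na=False avoids the problem at the source.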

6. Create an Elasticsearch Mapping and Index

Now the data is stored in the dataframe variable. Let’s next create an index in the Elasticsearch cluster we set up earlier by creating a mapping that tells the index how to store the documents. You can create one dynamically (i.e., automatically) or explicitly. Let’s do it explicitly here to see how it’s done.

Here’s the code:

mappings = {
    "properties": {
        "type": {"type": "text", "analyzer": "english"},
        "title": {"type": "text", "analyzer": "english"},
        "director": {"type": "text", "analyzer": "standard"},
        "cast": {"type": "text", "analyzer": "standard"},
        "country": {"type": "text", "analyzer": "standard"},
        "date_added": {"type": "text", "analyzer": "standard"},
        "release_year": {"type": "integer"},
        "rating": {"type": "text", "analyzer": "standard"},
        "duration": {"type": "text", "analyzer": "standard"},
        "listed_in": {"type": "keyword"},
        "description": {"type": "text", "analyzer": "english"}
    }
}

es.indices.create(index="netflix_shows", mappings=mappings)

This will create a new index called netflix_shows in the Elasticsearch cluster that we set up moments ago.

7. Add Data to Elasticsearch Index

Now we can start adding data to the index. Two commands can do this: es.index() to add data one item at a time and bulk() to add multiple items at a time.

Adding Data With es.index()

Here’s how you can add data using es.index():

for i, row in dataframe.iterrows():
    data = {
        "type": row["type"],
        "title": row["title"],
        "director": row["director"],
        "cast": row["cast"],
        "country": row["country"],
        "date_added": row["date_added"],
        "release_year": row["release_year"],
        "rating": row["rating"],
        "duration": row["duration"],
        "listed_in": row["listed_in"],
        "description": row["description"]
    }
    es.index(index="netflix_shows", id=i, document=data)

This code will add the data in the variable data to the Elasticsearch index netflix_shows (multiple indexes can be stored in a single cluster) with the identifier i. Since all of this is happening within a for loop that iterates through all the rows in our data set that we loaded earlier, all of the data in the set will be added one row at a time.

Adding Data With bulk()

Here’s how you can add data using bulk():

from elasticsearch.helpers import bulk

bulk_data = []

for i, row in dataframe.iterrows():
    bulk_data.append(
        {
            "_index": "netflix_shows",
            "_id": i,
            "_source": {
                "type": row["type"],
                "title": row["title"],
                "director": row["director"],
                "cast": row["cast"],
                "country": row["country"],
                "date_added": row["date_added"],
                "release_year": row["release_year"],
                "rating": row["rating"],
                "duration": row["duration"],
                "listed_in": row["listed_in"],
                "description": row["description"]
            }
        }
    )

bulk(es, bulk_data)

This code does the exact same thing as the code for es.index() above and requires all the same information (i.e., the index’s name, an identifier, and the document). The difference is that, instead of adding documents row by row, we create a list of dictionaries with all of the documents in the data set. Then, by passing the client object and this list of dictionaries as arguments to bulk(), we add all of the documents to the index in one command. Using the bulk() command to add multiple documents at a time is quite a bit faster than adding multiple documents one by one.
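As a variation, the actions can be produced lazily with a generator instead of being collected in a list first. This is a sketch under assumptions: generate_actions and the sample rows are hypothetical names and data introduced here, and the example only builds the action dictionaries without contacting a cluster.

```python
def generate_actions(rows, index_name):
    """Yield one bulk action per (identifier, document) pair."""
    for doc_id, document in rows:
        yield {"_index": index_name, "_id": doc_id, "_source": document}


# Hypothetical sample rows standing in for the data set.
sample_rows = [
    (0, {"title": "Example Title A", "release_year": 2016}),
    (1, {"title": "Example Title B", "release_year": 2019}),
]

actions = list(generate_actions(sample_rows, "netflix_shows"))
print(len(actions))  # 2
print(actions[0]["_id"], actions[0]["_source"]["title"])  # 0 Example Title A
```

Because bulk() accepts any iterable of actions, a generator like this could be passed to it directly in place of the bulk_data list, so the full list never has to be held in memory. For a data set of this size the difference is small, but it can matter for larger ones.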

8. Check Setup By Counting Index Items

After adding the data, we can make sure everything worked by counting the number of items in the netflix_shows index with the following code:

es.indices.refresh(index="netflix_shows")

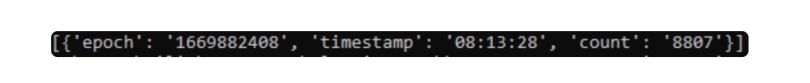

print(es.cat.count(index="netflix_shows", format="json"))You should then see something like this:

This tells us that there are a total of 8,807 documents stored in the index.

9. Use Elasticsearch With Query DSL

Finally, we can actually start using Elasticsearch for its search functionality by using its Query DSL (Domain Specific Language). The Query DSL section of the Elasticsearch documentation is a good place to start building and understanding queries in Elasticsearch.

Below is an example query for finding American Netflix shows released after 2015 in the netflix_shows index (the must_not clause excludes everything released in 2015 or earlier):

query = {

"bool": {

"filter": {

"match_phrase": {

"country": "United States"

}

},

"must_not": {

"range": {

"release_year": {"lte": 2015}

}

}

}

}

result = es.search(index="netflix_shows", query=query)

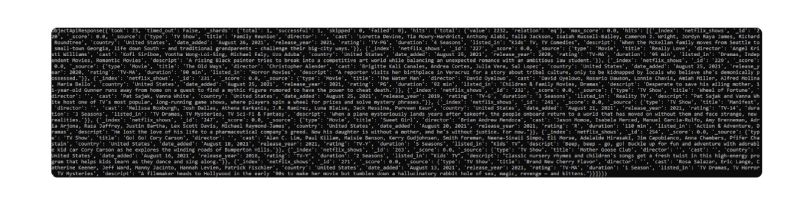

print(result)You should see something like this:

On the first row, you can see that, in total, the query found 2,232 matching documents. By default, Elasticsearch returns the 10 best matching documents when performing a query. In our example, you can see these documents printed in the terminal above.
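The printed response is a nested structure, so it can help to pull out just the parts you need. The sketch below does this for a hand-written dictionary that mimics the shape of a search response; summarize_hits and the sample data are hypothetical and only illustrate where the total count and the documents live.

```python
def summarize_hits(response):
    """Return the total match count and the titles of the returned documents."""
    total = response["hits"]["total"]["value"]
    titles = [hit["_source"]["title"] for hit in response["hits"]["hits"]]
    return total, titles


# Hypothetical, hand-written response that mimics the shape of a search result.
sample_response = {
    "hits": {
        "total": {"value": 2, "relation": "eq"},
        "hits": [
            {"_id": "0", "_score": 0.0, "_source": {"title": "Example Title A"}},
            {"_id": "1", "_score": 0.0, "_source": {"title": "Example Title B"}},
        ],
    }
}

total, titles = summarize_hits(sample_response)
print(total, titles)  # 2 ['Example Title A', 'Example Title B']
```

The same function should work on the result object from es.search(), since it supports the same key-based access. To get more than the default 10 documents back, es.search() also accepts a size argument, for example size=50.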

How to Delete Elasticsearch Index Data

Finally, let’s see how you could delete data from your index or even delete the whole index. The following line of code deletes the document with the id 1337 from the index netflix_shows:

es.delete(index="netflix_shows", id="1337")

And the following line of code deletes the whole netflix_shows index and all of its documents if you need to do that for whatever reason:

es.indices.delete(index="netflix_shows")

Further Elasticsearch Reading

Now you know the basics of Elasticsearch and its Python client. If you wish to delve deeper, I suggest you check out the Elasticsearch documentation and the Elasticsearch Python Client documentation.

Frequently Asked Questions

What is Elasticsearch used for?

Elasticsearch is a NoSQL search engine used for full-text search, real-time data retrieval and analytics on structured and unstructured data. It supports fast querying by indexing data efficiently.

Is Elasticsearch a NoSQL database?

Yes. Elasticsearch is a NoSQL database that stores unstructured data in a serialized JSON format and supports schema-less document indexing.

How does Elasticsearch achieve fast search performance?

Elasticsearch creates indexes during data ingestion, using data structures like inverted indexes for text and BKD trees for numeric data to enable rapid retrieval.