Think back to the last time you had a terrible consumer experience. Maybe it was bad enough that you even took the time to fill out a customer satisfaction survey. Perhaps you expected all those low marks to translate to some outreach — maybe a coupon or new perk or some other actionable olive branch. But instead you got ... crickets?

The radio silence might simply be the result of poor customer service, but there’s a good chance it’s because your scores aren’t great either. Many companies now calculate what’s called customer lifetime value (CLV), a metric that aims to quantify how much net profit a customer is likely to generate for a company over the course of the relationship. How much you’re “worth” partly dictates how much effort a company is willing to expend to keep you happy.

What Is Customer Lifetime Value?

A customer’s CLV score affects how, and how much, that customer is marketed to: a high-CLV customer is worth more expense than a low-CLV customer. But it can also affect things like customer service response time and whether a given customer receives a special offer. CLV scoring essentially slaps a big fat asterisk on “the customer is always right.” And, according to Peter Fader, a marketing professor at The Wharton School of the University of Pennsylvania and one of the foundational architects of CLV, it should.

As he sees it, companies that bend over backwards to satisfy every unhappy customer are doing it wrong. They need to prioritize. Companies often have a bad habit of becoming obsessed with net promoter scores, trying to determine “what ails the detractors, then fix it for them,” he said. “[But] those customers suck.”

“We’re spending all this money trying to turn ugly ducklings into beautiful swans, and that just doesn’t work,” he added. “Instead, if we have visibility on what we think a customer is going to be worth, and then use that to drive decisions — about who to give the free stuff to, or whose call to answer first — we make better decisions and make more money.”

One basic CLV formula for subscription-based businesses divides a customer’s average monthly revenue by the company’s monthly churn rate. So a customer who pays $9 per month for a streaming service that sees 3 percent monthly churn would have a CLV of $300 ($9 ÷ 0.03).
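
As a rough illustration, the subscription formula takes only a few lines of Python. This sketch assumes churn is a constant monthly rate (the period is an assumption needed to reproduce the $300 figure), and the function name is hypothetical.

```python
def subscription_clv(avg_monthly_revenue: float, monthly_churn_rate: float) -> float:
    """Basic subscription CLV: average monthly revenue divided by monthly churn.

    With a constant churn rate c, a customer's expected lifetime is 1 / c months,
    so revenue / c approximates what they will pay over that lifetime.
    """
    if not 0 < monthly_churn_rate <= 1:
        raise ValueError("monthly_churn_rate must be in (0, 1]")
    return avg_monthly_revenue / monthly_churn_rate


# The streaming example: $9 per month with 3 percent monthly churn.
print(round(subscription_clv(9.0, 0.03), 2))  # 300.0
```

This version measures revenue rather than profit and ignores discounting, both of which the formula below takes into account.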

The most common simple CLV formula is:

$$\text{CLV} = \frac{\text{Margin} \times \text{Retention Rate}}{1 + \text{Discount Rate} - \text{Retention Rate}}$$

Margin: The profit a customer generates per period after subtracting the variable costs of delivering the product or service.

Retention Rate: The percentage of customers who don’t churn within a given time period.

Discount Rate: The rate used to convert future profit into its present-day value, typically based on current borrowing rates. Some advice says to default to 10 percent until a financial expert can provide more clarity, while others consider that figure optimistic. Your mileage may vary.
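
To see where the classic formula comes from, and what it quietly assumes, it can be rewritten as a sum of discounted margins: in period t, a customer is still around with probability r^t (r being the retention rate), and that period’s margin is discounted by (1 + d)^t (d being the discount rate). The Python sketch below computes both forms. The function names and the $100 / 80 percent / 10 percent inputs are hypothetical, and it assumes the first margin arrives at the end of the first period, after one round of retention, which is the timing this version of the formula implies.

```python
def simple_clv(margin: float, retention_rate: float, discount_rate: float) -> float:
    """Classic closed form: CLV = margin * r / (1 + d - r).

    margin: profit per customer per period, after variable costs
    retention_rate (r): share of customers who stay each period, 0 <= r < 1
    discount_rate (d): per-period rate that converts future profit to present value
    """
    if not 0 <= retention_rate < 1:
        raise ValueError("retention_rate must be in [0, 1)")
    if discount_rate < 0:
        raise ValueError("discount_rate must be non-negative")
    return margin * retention_rate / (1 + discount_rate - retention_rate)


def series_clv(margin: float, retention_rate: float, discount_rate: float,
               periods: int = 500) -> float:
    """The same quantity as an explicit (truncated) sum over periods 1..periods:
    survival probability r**t times the margin, discounted by (1 + d)**t."""
    return sum(
        margin * retention_rate**t / (1 + discount_rate) ** t
        for t in range(1, periods + 1)
    )


# Hypothetical inputs: $100 annual margin, 80 percent retention, 10 percent discount rate.
print(round(simple_clv(100, 0.8, 0.10), 2))  # 266.67
print(round(series_clv(100, 0.8, 0.10), 2))  # 266.67
```

Because the retention rate is the same in every period, the sum is a geometric series that collapses to the closed form. That constant-retention assumption is exactly what limits the classic formula.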

The classic formula above can be helpful for basic, early-stage assessments, but it starts to look pretty primitive once you consider complicating factors. For one, retention rates are never really constant. And for non-contractual businesses, it’s tough to determine whether a customer has truly churned or is just between purchases. The formula also fails to incorporate the smorgasbord of behavioral signals produced whenever a customer interacts with a product or channel.

Needless to say, more advanced options ought to be considered. But before we do that, let’s take a closer look at some of the fundamental underpinnings.

A Brief History of Customer Lifetime Value

Customer lifetime value calculations have become dizzyingly sophisticated, often powered by machine learning and sometimes deep learning. But the metric comes from less glamorous stock. An early influence was the old-school direct marketers of the 1970s and 1980s. Think direct mail campaigns and, later, infomercials.

“Even though they’d sell schlocky stuff on late-night TV, the perspectives they had were revolutionary,” Fader said. Those marketers spent a lot of time poring over purchase patterns, a sort of training-wheels version of today’s data mining. That led to the RFM method: recency, frequency and monetary value. The method holds that, taken together, when a customer last made a purchase, how many purchases they have made in total and their average purchase amount are strong indicators of future purchases.
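
Computing the raw RFM inputs from a transaction log is straightforward. This Python sketch assumes a simple list of (customer ID, purchase date, amount) records and follows the definition above, treating monetary value as the average purchase amount; the customer names, dates and amounts are hypothetical. In practice, marketers often go a step further and bin each dimension into scores (for example, 1 through 5) before combining them.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, List, Tuple


@dataclass
class RFM:
    recency_days: int  # days since the customer's most recent purchase
    frequency: int     # total number of purchases
    monetary: float    # average purchase amount


def rfm_table(transactions: Iterable[Tuple[str, date, float]], as_of: date) -> Dict[str, RFM]:
    """Summarize (customer_id, purchase_date, amount) rows into RFM values per customer."""
    purchases: Dict[str, List[Tuple[date, float]]] = {}
    for customer_id, purchase_date, amount in transactions:
        purchases.setdefault(customer_id, []).append((purchase_date, amount))

    table: Dict[str, RFM] = {}
    for customer_id, rows in purchases.items():
        last_purchase = max(d for d, _ in rows)
        total_spend = sum(a for _, a in rows)
        table[customer_id] = RFM(
            recency_days=(as_of - last_purchase).days,
            frequency=len(rows),
            monetary=total_spend / len(rows),
        )
    return table


txns = [
    ("alice", date(2024, 1, 5), 40.0),
    ("alice", date(2024, 3, 1), 60.0),
    ("bob", date(2023, 11, 20), 25.0),
]
for customer, rfm in rfm_table(txns, as_of=date(2024, 3, 31)).items():
    print(customer, rfm)
# alice RFM(recency_days=30, frequency=2, monetary=50.0)
# bob RFM(recency_days=132, frequency=1, monetary=25.0)
```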

Then, in the mid-’80s, Dave Schmittlein (now dean of the MIT Sloan School of Management) and two colleagues derived a mathematical method to turn “backwards looking” RFM data into “forward looking” estimates of future CLV. This was the birth of the seminal Pareto/NBD model.

A simple way to explain the Pareto/NBD framework without getting lost in the probability weeds is to imagine that each customer has two coins to flip. One coin determines whether the customer keeps considering purchases or walks away for good; the second determines whether they buy or not while they are still considering. The framework also accounts for differences in buying frequency across customers: some will keep making purchases over time, while others will be one and done.
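
The coin-flip picture is a discrete simplification. The Pareto/NBD model itself works in continuous time: while a customer is “alive,” purchases arrive as a Poisson process, the customer drops out at an exponentially distributed moment, and both the purchase rate and the dropout rate vary across customers according to gamma distributions. The Python sketch below simulates that generative story. It is illustrative only and does not fit the model to data (that requires a likelihood-based estimation step, which packages such as Lifetimes provide); the function name and parameter values are hypothetical.

```python
import random
from typing import List


def simulate_customer(r: float, alpha: float, s: float, beta: float,
                      horizon: float, rng: random.Random) -> List[float]:
    """Simulate one customer's purchase times in [0, horizon) under the Pareto/NBD story.

    - While "alive", purchases arrive as a Poisson process with rate lam.
    - The customer leaves for good at an exponentially distributed time with rate mu.
    - Rates differ across customers: lam ~ Gamma(shape r, rate alpha),
      mu ~ Gamma(shape s, rate beta).
    """
    lam = rng.gammavariate(r, 1.0 / alpha)  # gammavariate takes shape and *scale*
    mu = rng.gammavariate(s, 1.0 / beta)
    dropout_time = rng.expovariate(mu)      # never directly observed in real data
    active_until = min(dropout_time, horizon)

    times = []
    t = rng.expovariate(lam)                # waiting time to the first purchase
    while t < active_until:
        times.append(t)
        t += rng.expovariate(lam)           # Poisson process: exponential gaps
    return times


# Hypothetical parameters, with time measured in weeks over one year.
rng = random.Random(7)
customers = [simulate_customer(1.5, 10.0, 1.0, 20.0, 52.0, rng) for _ in range(1000)]
never_bought = sum(1 for c in customers if not c)
print(f"{never_bought} of 1000 simulated customers made no purchase in 52 weeks")
```

In real data the dropout time is hidden, which is why a customer with no recent purchases is ambiguous: they may have churned, or they may simply be between purchases.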

The method didn’t make much real-world impact at first — it was greeted as “pure academic ivory tower” stuff, Fader said — until some 15 years later, when Fader and his colleagues introduced a more widely applicable, Excel-friendly version and evangelized the value of the model across the corporate landscape.

That work became “the fundamental research upon which [all future customer-lifetime value advances] was done,” said Emad Hasan, of Retina, an analytics software company and CLV platform provider.

CLV, Meet Machine Learning

After the advent of Big Data, CLV got a booster shot. Data-drenched e-commerce companies turned to machine learning methods as their data sets grew too unwieldy for parametric models. “It is particularly difficult to incorporate automatically learned or highly sparse features,” wrote data scientists at ASOS in 2017.

There are plenty of different approaches out there to be explored. (The Lifetimes Python package, developed by Cameron Davidson-Pilon, former director of data science at Shopify, is a popular one.) But companies tend to be mum about how exactly their in-house versions of models function, lest they risk losing competitive advantage.

That said, some companies do offer instructive peeks under the hood.

In 2016, Groupon published an outline of how it applies machine learning to determine CLV. Data scientists at the e-commerce site developed and implemented an approach that uses separate, two-stage random forest models for each of the six different groups into which it subsets customers, ranging from “unactivated” to “power users.” It applies those models and groups across three different time periods — short (90 days), medium (180 days) and long (365 days).

The model also weighs more than 40 features for each score. The most important is user engagement, such as when a user opens the app or clicks on the site. But user experience factors, such as number of returns or customer service tickets, and — no surprise — recency of purchase, are also heavily weighted. The feature set updates daily for every user. (A Groupon spokesperson declined to comment on whether the company’s CLV approach had changed since this overview was published.)
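
The overview is summarized only briefly here, so the following is a hedged sketch of the general two-stage idea, not Groupon’s implementation. It assumes stage one estimates the probability that a customer purchases within the horizon, stage two estimates spend given a purchase, and the two are multiplied into an expected value (one common way to combine them; Groupon’s system may do this differently). Simple hand-written functions stand in for the trained random forests, and every segment name, feature name and coefficient is hypothetical.

```python
from typing import Callable, Dict, Mapping, Tuple

Features = Mapping[str, float]
# Stage 1: P(customer purchases within the horizon). Stage 2: expected spend if they do.
ModelPair = Tuple[Callable[[Features], float], Callable[[Features], float]]


def two_stage_clv(features: Features, segment: str, horizon_days: int,
                  models: Dict[Tuple[str, int], ModelPair]) -> float:
    """Expected value over the horizon = P(purchase) * expected spend given a purchase."""
    purchase_model, spend_model = models[(segment, horizon_days)]  # one pair per segment/horizon
    p_purchase = purchase_model(features)
    if not 0.0 <= p_purchase <= 1.0:
        raise ValueError("stage-one output must be a probability")
    return p_purchase * spend_model(features)


# Hypothetical stand-ins for trained models (a real system would plug in, e.g., random forests).
models: Dict[Tuple[str, int], ModelPair] = {
    ("power_user", 90): (
        lambda f: min(1.0, 0.2 + 0.1 * f["app_opens_30d"]),
        lambda f: 40.0 + 5.0 * f["orders_365d"],
    ),
    ("unactivated", 90): (
        lambda f: 0.05,
        lambda f: 25.0,
    ),
}

user = {"app_opens_30d": 5, "orders_365d": 4}
print(two_stage_clv(user, "power_user", 90, models))
```

Keying the models by segment and horizon mirrors the structure described above: six segments across three horizons would mean 18 model pairs, each trained on its own slice of customers.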

Addhyan Pandey, who helped build the Groupon model, is now director of data science at Cars.com. There, he uses a different decision tree-based model, Light Gradient Boosting Machine (LightGBM), which performs faster than random forests and, according to Pandey, proved easier to tune and optimize. (His team also uses rolling, as opposed to fixed, timeframes.)

But both ML models have a standout advantage that Pandey said should be a priority for CLV frameworks: explainability. “You cannot just give a black box to the end user,” he said.

Should You Jump Into the Neural Nets?

Neural networks are generally harder to explain than decision trees, but they can also be more accurate.

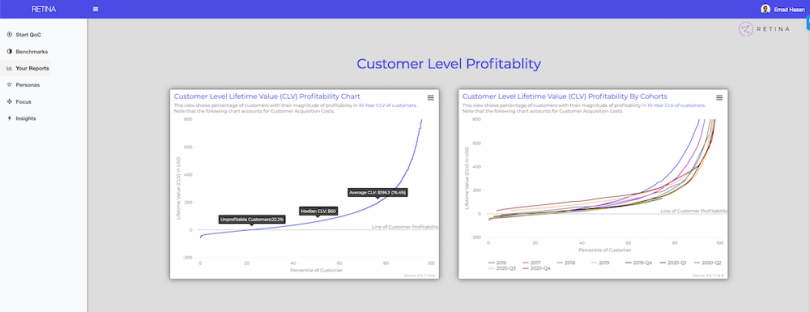

Emad Hasan (left) is co-founder and CEO of Retina, which sells a CLV platform that incorporates deep learning to estimate customer value, even for customers who have little or no transaction history. The company’s algorithm combines a Generalized Low Rank Model (GLRM) with a neural network.

The system analyzes two data sets: customer order history, and customer attributes, such as age, location and pages viewed. (It does not track gender or race.) A set of common behaviors emerges from the purchase history, which allows Retina to build different customer profiles.

“And then for customers that walk in the door for whom we don’t have any transaction history, by looking at the understanding of their attributes, we can determine what archetype they might belong to — or a combination of archetypes they might belong to — and estimate their lifetime value early on,” Hasan said. (Machine learning approaches to the so-called cold-start problem, in which companies must build profiles of consumers about whom they have little to no data, are becoming ever more sophisticated.)

The Retina system eschews third-party cookies in favor of first-party data that a merchant might already be directly collecting. One of Retina’s clients, Madison Reed, for instance, has a short customer quiz with questions about things like hair type and intent (covering roots or adding highlights?).

“The moment a customer decides, ‘Hey, I don’t want this data to be used for any kind of behavioral tracking or measurement,’ they can file a GDPR-style request, and they’ll be removed from that database,” Hasan said.

Is It Better to Go Off-the-Shelf or DIY?

The approach put forth by Retina aims to offer a window into both short- and long-term projections. Complex deep learning methods, like those being developed by data-rich video game companies, have become staggeringly sophisticated at short-term predictions, while more traditional “buy till you die” (BTYD) models such as Pareto/NBD can provide reliable projections years out. Retina’s GLRM/neural network hybrid looks to provide a bit of both.

But companies also have to consider a more basic question: Does it make more sense to go with an off-the-shelf option or invest in a data science department that can tweak a model precisely to the business context?

There are a couple of factors companies should consider, Hasan said. The first is complexity: some businesses are so complex, with such unique modeling factors, that “you can’t just plug and play a model.” He pointed to the ride-hailing industry, where driver CLV and rider CLV might each influence the other. The second is essentially a time-and-cost analysis: Is the company mature enough to take on a data science team (typically four or five hires) and commit to the 18-month process of data preparation and model building?

Startups might also consider focusing on proxy metrics until they have a greater pool of data to analyze or are prepared to tackle heavier-lift CLV modeling, Pandey said.

Regardless of what path a company chooses, it first has to answer a broader question about how it categorizes its business. As Fader sees it, the corporate landscape is littered with artificial dichotomies: B2B vs. B2C, product vs. service, complex new product vs. traditional. You’re better off thinking in terms of five “buckets,” Fader said.

- Pareto/NBD: Good for non-contractual contexts, like an online storefront without subscription options. It answers a simple question: How often will people buy until they stop?

- Multi-level non-contractual: Two separate Pareto/NBD models are layered together. Gaming could be an example: one model might gauge frequency of play, while another gauges how often a player makes a purchase.

- Contractual: The simplest “bucket,” commonly applied to software-as-a-service companies. Here, you’re projecting churn rates within a subscription model.

- Multi-service contractual: Clients open or close multiple accounts — such as professional and personal — within a service.

- Hybrid: These companies operate in both contractual and non-contractual contexts. Think Amazon Prime, where the customer choosing to remain subscribed or not is one model consideration and the customer choosing whether to buy X product within that subscription is another.
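
For the contractual bucket, retention doesn’t have to be held constant as it is in the classic formula. One option from Fader’s own research with Bruce Hardie is the shifted-beta-geometric (sBG) model, in which each subscriber has a fixed churn probability, but those probabilities vary across the customer base according to a beta distribution with parameters a and b. Because the most churn-prone customers leave first, aggregate retention rises over time. The Python sketch below assumes a and b have already been estimated; the parameter values and function names are hypothetical.

```python
from typing import List


def sbg_survival(a: float, b: float, periods: int) -> List[float]:
    """Survival S(t) for t = 0..periods under the shifted-beta-geometric model.

    Retention from period t-1 to t is (b + t - 1) / (a + b + t - 1), which rises
    with t because high-churn customers drop out early.
    """
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    survival = [1.0]
    for t in range(1, periods + 1):
        survival.append(survival[-1] * (b + t - 1) / (a + b + t - 1))
    return survival


def contractual_clv(margin: float, a: float, b: float,
                    discount_rate: float, periods: int) -> float:
    """Discounted expected margin over `periods`, collected at the end of each
    period the customer is still subscribed (same timing as the classic formula)."""
    survival = sbg_survival(a, b, periods)
    return sum(margin * survival[t] / (1 + discount_rate) ** t
               for t in range(1, periods + 1))


# Hypothetical parameters: a = 1, b = 1, no discounting, three periods.
print([round(x, 4) for x in sbg_survival(1.0, 1.0, 3)])  # [1.0, 0.5, 0.3333, 0.25]
print(round(contractual_clv(10.0, 1.0, 1.0, 0.0, 3), 4))  # 10.8333
```

With these parameters, retention climbs from 50 percent in the first period to about 67 and then 75 percent, even though no individual customer’s churn probability changes. A single retention rate can’t capture that effect.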

Should Customers Know Their CLV Scores?

The more vigilant companies become about asking the right questions and refining the interpretability and accuracy of their models, the more internal confidence they’ll inspire across the board. Would they ever be confident enough to actually tell customers their individual CLV rank if requested?

It’s difficult to imagine, but it’s a vision Fader would like to see realized. There could be ethical issues that need to be addressed. For instance, if a retailer generally ranks customers who, say, open a store credit card more highly than those who do not, would that lead to consumers signing up for high-interest cards they’d probably be better off without?

But at the very least, it would signal a more universal trust in the process. “If we believe in the models — if they’re really working well, and they validate, and we’re making decisions based on them — then we shouldn’t be afraid to look a customer in the eye and say, ‘You’re not getting that special, premium service she is, and here’s why,’” Fader said.

In other words, companies should be able to explain why a particular customer’s cries of dissatisfaction didn’t have quite the tree-shaking effect that was expected.

“But right now, companies just aren’t accustomed to doing that, nor are they comfortable enough with the models to actually look the customer in the eye and believe what they’re saying,” he said.

“But I hope we get there.”