The most remarkable thing about shopping in a smart store is how unremarkable it feels — once you get over the whole no-checkout thing, of course.

At the same time, there’s a whole mess of complex computer vision modeling going on all around you to facilitate the experience. Those models have to be sophisticated enough to handle all manner of image challenges: object recognition, activity recognition, pose estimation. Asking a system to differentiate between two similar bags of chips, grabbed by people in similar coats and gloves, with no margin for error, is a big ask.

Getting it right requires data. Lots of it. But it’s not just a size consideration; scope is also key. Think about the countless variables possible even in controlled environments: light sharply reflecting off clothing, misplaced items, customers kneeling awkwardly to grab products. Even if enough real-world footage existed to cover that much variance, could it easily be assembled into a data set? After all, people aren’t exactly thrilled about being carefully tracked.

One way around the problem? Fake it, so to speak.

Ofir Chakon is the CEO and founder of Datagen, a company that creates what’s known as synthetic data. Datagen has generated synthetic data used to train models for smart-store projects, among other similar challenges. It synthesizes “very large-scale data sets [for domains] that have zero tolerance for errors, where we need to solve all possible edge cases, in the best way possible,” Chakon said.

Put simply, creating synthetic data means using a variety of techniques, often involving machine learning and sometimes neural networks, to generate large artificial data sets from small sets of real data, which are then used to train models.

As synthetic techniques have quickly matured in recent years, the approach has become increasingly high-profile, not just for image-data challenges like smart stores but also for text, tabular and other structured data. (Chakon declined to name clients, but said his “prestigious” customer pool includes some of “the largest tech companies in the world.”)

It’s All About Scale and Annotation

Synthetic data’s great promise — generating data sets where existing ones are too small or sensitive to be leveraged — has drawn attention from industries with strict privacy regulations, like healthcare and finance. But another selling point is that it can be a huge time saver.

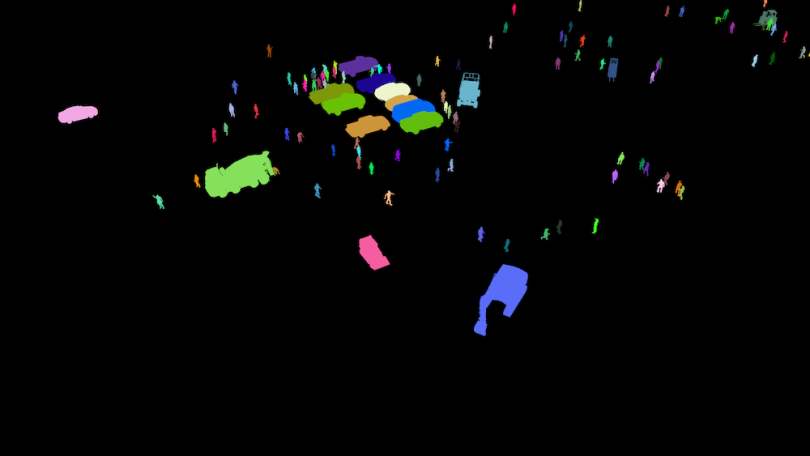

For computer vision, image data needs to be annotated with labels. Those include segmentation masks, depth maps and bounding boxes, the squares or rectangles drawn around objects like cars or pedestrians in the camera views of self-driving cars. That annotation work, which can take several months, is often contracted to third parties. With synthetic data, annotations are generated automatically as the data is made.
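Why do the annotations come for free? Because the generator decides where every object goes, it can write down the bounding box and the segmentation mask at the same moment it draws the pixels. The sketch below is a toy illustration under loose assumptions: a flat 2D canvas of rectangles stands in for a real renderer, and `render_scene` and the "chips" labels are hypothetical names, not anything Datagen or AI.Reverie actually uses.

```python
import random

def render_scene(width, height, labels, seed=0):
    """Paint one rectangle per label onto a blank canvas and record annotations.

    Returns (image, mask, boxes):
      image: pixel intensities (0 = empty background)
      mask:  per-pixel object id (0 = background, i = i-th label), visible pixels only
      boxes: (label, x_min, y_min, x_max, y_max), max coordinates exclusive,
             covering each object's full extent even if a later object overlaps it
    """
    rng = random.Random(seed)
    image = [[0] * width for _ in range(height)]
    mask = [[0] * width for _ in range(height)]
    boxes = []
    for obj_id, label in enumerate(labels, start=1):
        # The generator picks size and position itself, so the box is known exactly.
        w = rng.randint(2, width // 2)
        h = rng.randint(2, height // 2)
        x0 = rng.randint(0, width - w)
        y0 = rng.randint(0, height - h)
        shade = rng.randint(50, 255)
        for y in range(y0, y0 + h):
            for x in range(x0, x0 + w):
                image[y][x] = shade
                mask[y][x] = obj_id  # later objects overwrite (occlude) earlier ones
        boxes.append((label, x0, y0, x0 + w, y0 + h))
    return image, mask, boxes

image, mask, boxes = render_scene(32, 24, ["chips_a", "chips_b"])
for box in boxes:
    print(box)
```

No human ever traces a box here; the labels are a by-product of generation, which is the time savings Walborsky and Chakon describe.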

“When you want to annotate, say, 100 sequential frames, it takes a lot of time for people to do that, and there’s a lot of inaccuracy,” said Paul Walborsky, co-founder and chief operating officer of AI.Reverie, which also creates synthetic image data. AI.Reverie is in the smart-store game, too: it is working with 7-Eleven on cashierless, computer vision-enabled stores.


At Datagen, Chakon pointed out the flexibility afforded by the approach. “Instead of capturing and annotating data for three months, every cycle, now you can do it in hours or minutes,” Chakon said. “You can run 10 different experiments a day, instead of three experiments a year.”

Of course, even if it were possible to “auto-annotate” real-world data as it’s collected, the challenges being tackled by companies like Datagen and AI.Reverie have data-volume demands that are essentially prohibitive without synthetic assistance.

Neither Chakon nor Walborsky put a number on the amount of data required by the challenges they work on. (Datagen focuses on interior-environment use cases, like smart stores, in-home robotics and augmented reality; AI.Reverie’s focus has included wide-scope scenes like smart cities, rare plane identification and agriculture, along with smart-store retail.)

But Chakon noted that state-of-the-art models are trained on anywhere from a few million annotated images up to tens of millions. And data access is a greater challenge for image models than for, say, NLP models. OpenAI’s GPT-3 text generation model, for instance, was trained on some 45 terabytes of text data, but more than half of that corpus came from publicly available Common Crawl and Wikipedia text.

Walborsky added that, while relatively few images are needed to reach a moderate level of accuracy, the number needed to gain even a few more percentage points once accuracy is already high rises sharply. Call it the inverse of the Hemingway rule that otherwise governs so much of AI: here, change happens suddenly, then gradually.

“Because the task is so difficult, that means more and more diversity,” Walborsky said.
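One common way to picture that curve is a power-law learning curve, an empirical model often used to describe how a model's error shrinks with training-set size: error(n) = c · n^(−α). The constants in the sketch below are made up purely for illustration; real values depend on the task, the model and the data.

```python
def images_needed(target_error, c, alpha):
    """Invert error(n) = c * n**(-alpha) to find the data set size n for a target error."""
    return (c / target_error) ** (1 / alpha)

# Hypothetical constants, chosen only for illustration.
C, ALPHA = 5.0, 0.3

for accuracy in (0.80, 0.90, 0.95, 0.98):
    n = images_needed(1 - accuracy, C, ALPHA)
    print(f"{accuracy:.0%} accuracy -> about {n:,.0f} images")
```

Under this assumed model, every halving of the error multiplies the required data by 2^(1/α), roughly tenfold when α = 0.3. That is why the step from 90 to 95 percent accuracy costs about ten times as many additional images as the step from 80 to 90 percent.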

Here’s How It Works

There are four components that synthetic image data needs in order to be effective, according to Chakon: photorealism, variance, annotations and benchmarking. Benchmarking means training state-of-the-art neural networks on the synthetic data and testing them against real data provided by the client.
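The shape of such a benchmark can be shown at miniature scale: train the same model once on real data and once on synthetic data, then score both on held-out real data. In the sketch below, the nearest-centroid classifier, the Gaussian "real" and "synthetic" point clouds, and the slightly-off synthetic means are all hypothetical stand-ins for a client's images and a state-of-the-art network.

```python
import random

def sample(rng, n, means, spread):
    """Draw n labeled 2D points per class from Gaussians centered on `means`."""
    data = []
    for label, (mx, my) in enumerate(means):
        for _ in range(n):
            data.append(((rng.gauss(mx, spread), rng.gauss(my, spread)), label))
    return data

def fit_centroids(data):
    """Nearest-centroid 'model': the mean feature vector of each class."""
    sums, counts = {}, {}
    for (x, y), label in data:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label]) for label, (sx, sy) in sums.items()}

def accuracy(centroids, data):
    """Fraction of points whose nearest centroid matches their true label."""
    def predict(p):
        return min(centroids, key=lambda k: (p[0] - centroids[k][0]) ** 2 + (p[1] - centroids[k][1]) ** 2)
    return sum(predict(p) == label for p, label in data) / len(data)

rng = random.Random(42)
REAL_MEANS = [(0.0, 0.0), (3.0, 3.0)]
SYNTH_MEANS = [(0.3, -0.2), (3.2, 2.7)]  # hypothetical generator that is slightly "off"

real_train = sample(rng, 50, REAL_MEANS, 1.0)      # scarce real training data
real_test = sample(rng, 500, REAL_MEANS, 1.0)      # held-out real data from the "client"
synthetic = sample(rng, 5000, SYNTH_MEANS, 1.2)    # plentiful synthetic data

print("trained on real:     ", accuracy(fit_centroids(real_train), real_test))
print("trained on synthetic:", accuracy(fit_centroids(synthetic), real_test))
```

If the synthetic-trained score falls well short of the real-trained one, that gap is the signal to go back and adjust the generator, which is the iterative loop Chakon describes.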

The fragmented nature of computer vision calls for case-specific evaluation. “It’s an iterative process,” Chakon said. “Whoever says they can create the perfect synthetic data for every computer vision task most likely lies.”


He walked us through his company’s process.

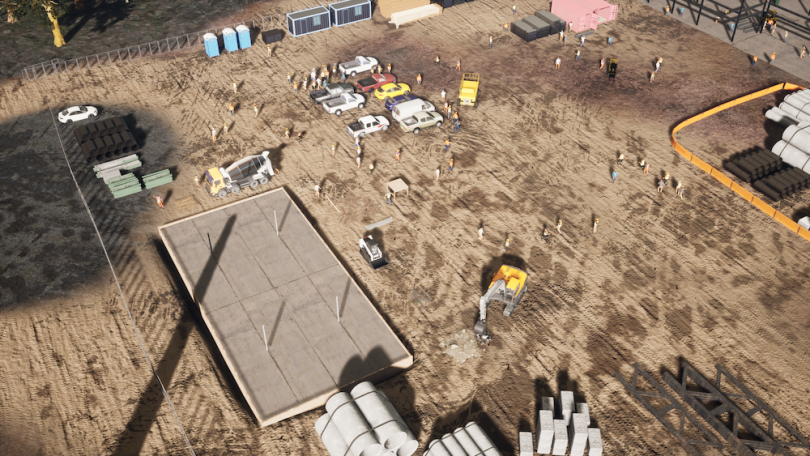

Datagen starts with a combination of 3D scans of actual people and objects, plus 3D assets modeled by artists. The team then “algorithmically extends” that collection even further, using deep learning methods like generative and latent space models.

“Algorithmically, we’re able to turn, say, 1,000 identities that we captured from the real world all the way to a million new identities that don’t really exist in the real world,” Chakon said.
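One generic way a latent-space model can multiply identities is to encode each captured scan as a vector of numbers and then sample new vectors between existing ones. The sketch below assumes that idea only: the latent codes are random stand-ins, no real encoder or decoder is involved, and `new_identities` is a hypothetical helper. Mixing random pairs plus a little noise is one simple option, not Datagen's actual method.

```python
import random

def new_identities(captured, count, noise=0.05, seed=0):
    """Create `count` new latent codes by mixing random pairs of captured codes.

    Each new code lies on the line between two captured identities, nudged by
    a little Gaussian noise so it matches neither exactly. In a real pipeline,
    a decoder (not shown) would turn each code back into a 3D person or object.
    """
    rng = random.Random(seed)
    dim = len(captured[0])
    codes = []
    for _ in range(count):
        a, b = rng.sample(captured, 2)  # two distinct captured identities
        t = rng.random()                # how far to move from a toward b
        codes.append([(1 - t) * a[i] + t * b[i] + rng.gauss(0.0, noise) for i in range(dim)])
    return codes

rng = random.Random(1)
# Stand-ins for 1,000 scanned identities, each encoded as a 16-number latent code.
captured = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(1000)]
generated = new_identities(captured, 10_000)
print(len(generated), "new latent codes of size", len(generated[0]))
```

Raising `count` scales the same loop toward the million identities in Chakon's example; the hard part in practice is a decoder good enough that every sampled code still looks like a plausible person.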

Then they use an in-house composition algorithm to logically position the multitude of assets in a virtual environment, using an architecture similar to Unreal Engine or Blender. (At AI.Reverie, the environment simulator is built on Unreal, with multiple in-house modifications.) Those 3D scenes can be rendered into 2D images, which are automatically annotated when exported.
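Automatic annotation on export falls out of the geometry: the renderer already knows each object's 3D position, so it can project the object's corners through the virtual camera and read off a 2D bounding box. The sketch below assumes an idealized pinhole camera at the origin looking down the +z axis, with a hypothetical focal length and image size; production engines such as Unreal Engine or Blender handle projection inside their own renderers, and this code uses neither.

```python
from itertools import product

def project(point, focal, cx, cy):
    """Pinhole projection of a camera-space point (x, y, z), with z > 0, to pixel coordinates."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)

def box_annotation(center, size, focal=800.0, width=1280, height=720):
    """Project the 8 corners of an axis-aligned 3D box and return its 2D bounding box.

    Assumes every corner is in front of the camera (z > 0).
    """
    cx, cy = width / 2, height / 2
    corners = [
        tuple(c + s * sign / 2 for c, s, sign in zip(center, size, signs))
        for signs in product((-1, 1), repeat=3)
    ]
    pixels = [project(p, focal, cx, cy) for p in corners]
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    # Clip to the image so partially visible objects still get a valid box.
    return (max(0.0, min(us)), max(0.0, min(vs)), min(float(width), max(us)), min(float(height), max(vs)))

# A hypothetical bag of chips: 0.2 m wide, 0.3 m tall, 0.1 m deep, 2 m in front of the camera.
print(box_annotation(center=(0.0, 0.0, 2.0), size=(0.2, 0.3, 0.1)))
```

The same bookkeeping extends to the other labels mentioned earlier: the renderer knows which object covers each pixel (a segmentation mask) and how far away it is (a depth map).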

At that point, potentially millions of annotated synthetic images have been produced, but some may not be entirely photorealistic. This is where GANs come in, which means it’s also “where we need real data embedded into the process,” Chakon said.

A generative adversarial network (GAN) is a deep learning framework made up of two competing neural networks: a generator and a discriminator. The generator creates data, while the discriminator tries to distinguish real data from generated data. The generator, in turn, tries to fool the discriminator. As the two networks push against each other, both the process and its results get better.
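That tug-of-war can be shown at toy scale. The sketch below is a one-dimensional GAN in plain Python, an assumption-heavy illustration rather than anything Datagen or AI.Reverie runs: the "real" data is drawn from a normal distribution centered at 4, the generator can only shift standard normal noise by a learned offset `b`, and the discriminator is a single logistic unit. The two sides are updated in alternating gradient steps using the standard GAN losses.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_gan(steps=6000, batch=32, lr=0.05, real_mean=4.0, seed=0):
    """Toy 1D GAN trained with alternating gradient steps.

    Real data ~ N(real_mean, 1). Generator G(z) = z + b shifts standard normal
    noise; discriminator D(x) = sigmoid(w*x + c) scores how "real" x looks.
    Returns the generator's learned offset b.
    """
    rng = random.Random(seed)
    b = 0.0          # generator parameter
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch
        # Generator step (non-saturating loss): minimize -log D(G(z)).
        gb = 0.0
        for _ in range(batch):
            d = sigmoid(w * (rng.gauss(0.0, 1.0) + b) + c)
            gb -= (1.0 - d) * w
        b -= lr * gb / batch
    return b

print(f"learned generator offset: {train_gan():.2f}")
```

If training behaves, the offset should settle near 4, meaning generated samples have become hard to tell apart from real ones. A one-neuron discriminator can only compare averages, which is why the generator here learns nothing but a shift; image GANs use deep networks on both sides and are notoriously harder to train.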

There’s More Than One Way to Get at the Problem

The GAN may be the sexiest step in the process — this is the technique, after all, that can turn you into anime or create Pac-Man nearly out of whole cloth — but it’s just one step of many. And, in fact, sometimes it’s not used at all.

AI.Reverie’s machine learning team might use GANs to add nuance and boost performance if benchmarking shows lower-than-desired accuracy.

“But that doesn’t mean you always need GANs,” Walborsky said. “Sometimes just synthetic images alone can provide the performance necessary.”

A similar dynamic plays out with tabular, structured data. Khaled El Emam is co-author of Practical Synthetic Data Generation and co-founder and director of Replica Analytics, which generates synthetic structured data for hospitals and healthcare firms.

Health data sets are sensitive, and often small. Think clinical trials for rare diseases. But deep learning methods are better suited to very large data sets, whether they are GANs or variational autoencoders (VAEs), the other deep learning architecture commonly associated with synthetic data.

Replica Analytics’ platform more commonly uses what El Emam describes in his book as sequential machine learning synthesis. Variables are synthesized one at a time, each generated by a model fitted on the variables that came before it, so you might think of it as an extension of ordinary regression or classification.
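A stripped-down version of the idea: synthesize the first column from its observed values, then synthesize each later column from a model fitted on the columns before it. The sketch below uses a made-up three-column health table (age, systolic blood pressure, diabetes flag), a least-squares line for the numeric column and per-cell rates as a crude classifier. These model choices are assumptions for illustration; Replica Analytics' product and El Emam's book use more capable per-variable models.

```python
import random
import statistics

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept, plus the residual standard deviation."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    resid_sd = statistics.pstdev([y - (slope * x + intercept) for x, y in zip(xs, ys)])
    return slope, intercept, resid_sd

def cell(age, bp):
    """Coarse bucket used by the toy classifier: age band and high-blood-pressure flag."""
    band = 0 if age < 40 else 1 if age < 60 else 2
    return band, bp >= 140

def sequential_synthesis(records, n, seed=0):
    """Synthesize n (age, systolic_bp, diabetic) rows one variable at a time."""
    rng = random.Random(seed)
    ages = [age for age, _, _ in records]
    bps = [bp for _, bp, _ in records]
    # Model for variable 2: linear regression of blood pressure on age.
    slope, intercept, resid_sd = fit_line(ages, bps)
    # Model for variable 3: observed diabetes rate within each (age, bp) cell.
    counts = {}
    for age, bp, diabetic in records:
        hits, total = counts.get(cell(age, bp), (0, 0))
        counts[cell(age, bp)] = (hits + diabetic, total + 1)
    overall = sum(d for _, _, d in records) / len(records)
    rows = []
    for _ in range(n):
        age = rng.choice(ages)                                   # 1) sample from observed ages
        bp = slope * age + intercept + rng.gauss(0.0, resid_sd)  # 2) predict from age, add noise
        hits, total = counts.get(cell(age, bp), (0, 0))
        rate = hits / total if total else overall                # fall back for unseen cells
        rows.append((age, round(bp), int(rng.random() < rate)))  # 3) classify from age and bp
    return rows

# Made-up "real" table of 500 patients.
rng = random.Random(7)
real = []
for _ in range(500):
    age = rng.randint(20, 85)
    bp = round(100 + 0.6 * age + rng.gauss(0, 10))
    real.append((age, bp, int(rng.random() < (0.05 if age < 50 else 0.2))))

synthetic = sequential_synthesis(real, 2000)
print(synthetic[:3])
```

Drawing ages straight from the real column is a simplification; a privacy-focused tool would model or perturb that first variable too, rather than copying real values.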

He demoed the application by dragging in a CSV file, then clicked a few of the utility-assessment options on the dashboard, which produce automated comparisons between the real and synthetic data. Within seconds, the program had generated a synthetic data set. The whole process took significantly less time than a popular AI service needed to transcribe our conversation.

Granted, the original data set was small — some 5,000 records. But the sequential synthesis method would also work well, and quickly, for large data sets, El Emam said. “It’s not GAN for the sake of GAN,” he said. “You have to be smart and use the most suitable generative modeling techniques.”
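The kind of automated real-versus-synthetic comparison shown in the demo can be approximated with a few summary statistics: how far each column's mean and spread drift, and whether relationships between columns survive. The sketch below is a hypothetical `utility_report` helper run on made-up rows; it is not the set of metrics Replica Analytics' dashboard actually computes.

```python
import statistics

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def utility_report(real, synthetic, names):
    """Differences (synthetic minus real) in column means, spreads and pairwise correlations."""
    real_cols, synth_cols = list(zip(*real)), list(zip(*synthetic))
    report = {}
    for name, r, s in zip(names, real_cols, synth_cols):
        report[f"mean {name}"] = statistics.fmean(s) - statistics.fmean(r)
        report[f"std {name}"] = statistics.pstdev(s) - statistics.pstdev(r)
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            key = f"corr {names[i]}/{names[j]}"
            report[key] = pearson(synth_cols[i], synth_cols[j]) - pearson(real_cols[i], real_cols[j])
    return report

# Made-up (age, systolic_bp) rows standing in for a real table and its synthetic copy.
real = [(34, 118), (51, 131), (67, 142), (45, 125), (72, 150)]
synthetic = [(36, 120), (49, 128), (70, 146), (44, 127), (66, 139)]

for metric, diff in utility_report(real, synthetic, ["age", "bp"]).items():
    print(f"{metric:>12}: {diff:+.3f}")
```

Differences near zero suggest the synthetic table preserves what an analyst would measure; real utility assessments go further, for example by comparing models trained on each table.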

Sometimes the most suitable technique is in fact deep learning. “We just built a synthetic data set for a health system with a lot of different domains — claims, emergency hospital admissions, drugs, labs, demographics,” he said.

That kind of complexity did require deep learning firepower.

“You need to have a toolbox of techniques,” he said.

Avoiding Bias

In any discussion of model training, the issue of algorithmic bias is never far off. Biased models are often rooted in biased data sets, so the need for diverse data, real and synthetic alike, is paramount.

As noted, Chakon considers variance a bedrock component of Datagen’s process. Walborsky similarly pointed to synthetic image data’s capacity to fill diversity holes in data sets. But, as a recent Slate article explored, synthetic data that fails to adequately compensate for latent biases within data sets will simply perpetuate bias, even if the synthetic data correctly reflects the real data.

Slate pointed to valuable research by Mostly AI, which focuses on the insurance and finance sectors and was one of the first companies to trade in synthetic structured data. The research looked at two data sets that reflected reality but that, if used to build prediction or decision models, would perpetuate bias: 1994 Census data in which men who earned $50,000 or more outnumbered women in that compensation range by 20 percent; and the COMPAS data set, used in a criminal justice context, in which Black people were deemed 24 percent more likely than white people to have high recidivism “scores.”

Mostly AI then developed a statistical parity technique that reduced those percentages to 2 percent and 1 percent, respectively, when generating synthetic data based on each set.
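Statistical parity asks whether a favorable outcome occurs at the same rate in every group; the gap between those rates is zero when the outcome does not depend on group membership. The snippet below only measures that gap on made-up records. It is not Mostly AI's technique; it just shows the kind of quantity a parity-constrained generator tries to push toward zero while keeping the rest of the data realistic.

```python
def parity_gap(rows, group_key, outcome_key, group_a, group_b):
    """Statistical parity difference: P(outcome = 1 | group_a) - P(outcome = 1 | group_b)."""
    def rate(group):
        outcomes = [row[outcome_key] for row in rows if row[group_key] == group]
        return sum(outcomes) / len(outcomes)
    return rate(group_a) - rate(group_b)

# Made-up counts, not the census figures: 1 = earns $50,000 or more.
rows = (
    [{"sex": "male", "high_income": 1}] * 25
    + [{"sex": "male", "high_income": 0}] * 75
    + [{"sex": "female", "high_income": 1}] * 10
    + [{"sex": "female", "high_income": 0}] * 90
)
gap = parity_gap(rows, "sex", "high_income", "male", "female")
print(f"statistical parity gap: {gap:.2f}")
```

A synthetic data set that copies this table faithfully would reproduce the same gap, which is the Slate article's point: fidelity alone does not remove bias.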

The research is still new, so the fairness constraint has not yet been built into the company’s data synthesis product, and whether the market for synthetic structured data will prioritize fairness remains to be seen, Mostly AI chief executive officer Tobias Hann told Built In. The topic only becomes more complicated when you consider tradeoffs between fairness and accuracy, and the ways in which our notions of fairness are evolving.

Fairness issues in synthetic data aren’t just confined to structured data. To circle back to smart stores, Amazon Go does not use facial recognition, but some smart stores do, though often in limited capacities. Should synthetic image data companies pressure clients to use their data with strict limits on facial recognition modeling, or disallow it altogether?

Back in the world of structured data, Hann said Mostly AI proactively addresses fairness when speaking with potential clients and urged the synthetic-data universe at large to do the same.

“It’s definitely not a trivial topic,” he said. “It’s something we take seriously, and we think other companies should as well.”