Indexing is a data structure or database technique that lets you retrieve records from a database quickly, without scanning every record. Search engines often use indexing to map search queries to documents, so users can quickly find information based on a search query. A search engine built this way is known as an index-based search engine.

What Is an Index-Based Search Engine in Python?

An index-based search engine in Python is a search engine written in Python that relies on data stored in an inverted index to find answers. When a user enters a search query, the engine looks up the query terms in the index to surface the best-matching results, rather than searching one by one through every document.

In a database, an index can be thought of as a small table with two columns. The first column holds a copy of the table's primary or candidate key. The second column holds pointers to the address of the disk block where the row with that key value is stored.
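To make the two-column idea concrete, here is a minimal in-memory sketch. It assumes the key column is kept sorted so it can be binary-searched. The keys and block addresses are made-up values, and real database engines usually use B-tree structures rather than a flat list, so this only illustrates the key-to-pointer mapping.

```python
import bisect

# Hypothetical two-column index: sorted primary keys paired with the
# (made-up) address of the disk block that stores each row.
index_keys = [101, 204, 350, 478, 512]
block_addresses = [0x1A00, 0x1A40, 0x2B00, 0x2B80, 0x3C00]


def lookup(key):
    """Return the block address for `key`, or None if the key is absent.

    Binary search over the sorted key column needs O(log n) comparisons
    instead of scanning every row.
    """
    pos = bisect.bisect_left(index_keys, key)
    if pos < len(index_keys) and index_keys[pos] == key:
        return block_addresses[pos]
    return None


print(hex(lookup(350)))  # 0x2b00
print(lookup(999))       # None
```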

A forward index maps from files to content, such as words and keywords, while an inverted index maps from content to files. Search engine applications typically use an inverted index to find the files that contain the terms in a search query.
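The relationship between the two can be shown by building an inverted index directly from a forward index. The document IDs and words below are hypothetical. The sketch simply flips each (document, word) pair around.

```python
from collections import defaultdict

# Hypothetical forward index: document ID -> words it contains.
forward_index = {
    "doc1": ["python", "search", "engine"],
    "doc2": ["python", "scrapy"],
    "doc3": ["search", "laravel"],
}


def invert(forward):
    """Build an inverted index: word -> sorted list of document IDs."""
    inverted = defaultdict(set)
    for doc_id, words in forward.items():
        for word in words:
            inverted[word].add(doc_id)
    return {word: sorted(docs) for word, docs in inverted.items()}


inverted_index = invert(forward_index)
print(inverted_index["python"])  # ['doc1', 'doc2']
print(inverted_index["search"])  # ['doc1', 'doc3']
```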

In this article, we will go over how to create an index-based search engine with Python, the Scrapy framework and the Laravel framework.

What Is an Inverted Index?

An inverted index is an index data structure or database index built to make search queries fast. It stores a mapping from content, such as words or numbers, to the locations of that content in a collection of documents. Each word points directly to the documents that contain it, so searching is faster and more efficient than reading through every document line by line.
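The difference in search strategy can be sketched in a few lines. The documents and index below are hypothetical. The indexed search uses AND semantics (a document must contain every word, found by intersecting the posting sets). This differs from the score-summing approach used later in this article.

```python
# Hypothetical inverted index: word -> set of document IDs containing it.
inverted_index = {
    "python": {"doc1", "doc2"},
    "search": {"doc1", "doc3"},
    "scrapy": {"doc2"},
}

documents = {
    "doc1": "python search engine",
    "doc2": "python scrapy",
    "doc3": "search laravel",
}


def linear_search(word):
    """Scan every document's text: the cost grows with the whole collection."""
    return {doc_id for doc_id, text in documents.items() if word in text.split()}


def index_search(*words):
    """Look up each word once and intersect the posting sets (AND semantics)."""
    postings = [inverted_index.get(w, set()) for w in words]
    return set.intersection(*postings) if postings else set()


print(linear_search("python"))           # {'doc1', 'doc2'} (set order may vary)
print(index_search("python", "search"))  # {'doc1'}
```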

What Is a Query?

A query, or search query, is the word or phrase a user enters into a search engine to satisfy an information need.

It's a request for information made through a search engine. Every time a user types a string of characters into a search engine and presses “Enter,” a search engine query is made, and the web application displays the results.

Requirements to Build an Index-Based Search Engine in Python

You should have:

- Python 3.x.x

- The Python package manager pip

- A code editor (this tutorial uses Visual Studio Code, but any editor works)

- The pickle module (part of Python's standard library)

The Pickle Module in Python

Pickle is part of Python's standard library, so it ships with both Python 2 and Python 3 and does not need to be installed with pip. There is no need to run `pip install pickle-mixin`; that is a separate third-party package and is not required here.

We'll use the pickle module to save our index file and to load it when a query is run. Pickle serializes Python objects to a file, so we can save the index dictionary once and load it quickly at query time instead of rebuilding it.
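A minimal save-and-load round trip looks like this. The index contents and the temporary file name `demo_index.pickle` are placeholders for illustration.

```python
import os
import pickle
import tempfile

# A tiny hypothetical index: term -> list of scored documents.
index = {"python": [{"url": "https://example.com/book-1", "score": 1.3}]}

# Write the dictionary to a binary file ("wb" is required for pickle).
path = os.path.join(tempfile.gettempdir(), "demo_index.pickle")
with open(path, "wb") as f:
    pickle.dump(index, f)

# Later (for example, in the query script), load it back with "rb".
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded == index)  # True
```

Only load pickle files you created or trust. Unpickling data from an untrusted source can execute arbitrary code.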

How to Create an Index-Based Search Engine in Python

1. Create an Inverted Index

To build the inverted index that an index-based search engine requires, we'll use a Python dictionary. Each key is a term. Each value is a list of the documents that contain that term, along with each document's score. This way we store both the document data and its score for every word.
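For reference, here is the shape of that dictionary as the indexing script later in this article builds it, trimmed to a few fields. The URLs, titles, and scores are made-up values. The real script also stores each document's `image` and `price`.

```python
# Hypothetical index in the shape the indexing script builds: each term maps
# to a list of documents that contain it (scores here are made-up numbers).
tf_idf = {
    "python": [
        {"url": "https://example.com/book-1", "title": "Learning Python", "score": 1.0},
        {"url": "https://example.com/book-2", "title": "Python Cookbook", "score": 1.0},
    ],
    "cookbook": [
        {"url": "https://example.com/book-2", "title": "Python Cookbook", "score": 1.3},
    ],
}

# Looking up a term is a single dictionary access; unknown terms give [].
for doc in tf_idf.get("cookbook", []):
    print(doc["title"], doc["score"])  # Python Cookbook 1.3
print(tf_idf.get("javascript", []))    # []
```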

To calculate each document's score, we'll use the term frequency-inverse document frequency (TF-IDF) technique.

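TF-IDF is a weighting technique that measures how important a word is to a document within a collection. It addresses a shortcoming of the bag of words technique, which represents text as raw word counts and therefore gives common words such as "the" as much weight as distinctive ones. The bag of words technique is still useful for text classification and for turning words into numbers a machine can work with.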
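Here is a bag of words built from two hypothetical titles. Common words like "the" and "python" get exactly the same raw count as the distinctive words, which is the weakness TF-IDF is meant to fix.

```python
from collections import Counter

# Two hypothetical book titles, already lowercased.
titles = [
    "the art of python programming",
    "the python cookbook",
]

# Vocabulary: every distinct word across the titles, in a fixed order.
vocabulary = sorted({word for title in titles for word in title.split()})

# Bag of words: each title becomes a vector of raw word counts.
vectors = []
for title in titles:
    counts = Counter(title.split())
    vectors.append([counts[word] for word in vocabulary])

print(vocabulary)
# ['art', 'cookbook', 'of', 'programming', 'python', 'the']
print(vectors)
# [[1, 0, 1, 1, 1, 1], [0, 1, 0, 0, 1, 1]]
```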

There are three terms you should know:

- Term frequency (TF): The number of times a term appears in a specific document.

- Document frequency (DF): The number of documents in the collection that contain the term.

- Inverse document frequency (IDF): A measure of how rare a term is across the collection, calculated from the DF with the formula:

$$\mathrm{IDF}(t) = \log\left(\frac{N}{\mathrm{DF}(t)}\right)$$

where N is the total number of documents and DF(t) is the number of documents containing the term t.
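Here is a quick worked example. Assume a hypothetical collection of N = 4 book titles in which "python" appears in all four titles and "cookbook" appears in only one. It uses a base-10 logarithm, the base the indexing script uses:

$$
\mathrm{IDF}(\text{python}) = \log_{10}\frac{4}{4} = 0,
\qquad
\mathrm{IDF}(\text{cookbook}) = \log_{10}\frac{4}{1} \approx 0.602
$$

A word found in every document gets an IDF of 0 and carries no weight. A word found in only one document gets the largest value.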

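With TF-IDF, we get a weight for each word in each document, and this weight is what we'll use as the score value. The weight is calculated as:

$$\mathrm{weight}(t, d) = \mathrm{TF}(t, d) \times \mathrm{IDF}(t)$$

The indexing script below uses a smoothed variant of IDF, 1 + log10(N / DF). This way, a word that appears in every document still gets a non-zero weight.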
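The three definitions and the weight formula can be checked on a toy collection. The documents are hypothetical, and this sketch uses the plain log10(N / DF) form rather than the script's smoothed 1 + log10(N / DF) variant.

```python
import math

# Hypothetical collection: document ID -> list of (already cleaned) words.
docs = {
    "d1": ["python", "cookbook"],
    "d2": ["python", "programming"],
    "d3": ["python", "data", "python"],
    "d4": ["learning", "python"],
}
N = len(docs)


def tf(word, doc_id):
    """Term frequency: how many times `word` occurs in one document."""
    return docs[doc_id].count(word)


def df(word):
    """Document frequency: how many documents contain `word` at least once."""
    return sum(1 for words in docs.values() if word in words)


def idf(word):
    """Inverse document frequency: log10(N / DF)."""
    return math.log10(N / df(word))


def weight(word, doc_id):
    """TF-IDF weight of `word` in `doc_id`: TF * IDF."""
    return tf(word, doc_id) * idf(word)


print(weight("python", "d3"))              # 0.0, "python" is in every document
print(round(weight("cookbook", "d1"), 3))  # 0.602
```

Even though "python" occurs twice in `d3`, its weight is 0 because the word does not help distinguish one document from another.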

Script Indexing With TF-IDF

```python
import json
import math
import pickle
import re
import sys

# Argument check
if len(sys.argv) != 3:
    print("\nUsage:\n\tpython3 tf-idf.py [data.json] [output]\n")
    sys.exit(1)

# Data arguments
input_data = sys.argv[1]
output_data = sys.argv[2]

with open(input_data, encoding="utf-8") as f:
    list_data = f.read().split("\n")

# Load stopwords (one per line); a set makes membership checks fast
with open("stopword.txt", encoding="utf-8") as f:
    sw = set(f.read().split())

# Parse one JSON object per line, skipping blank or malformed lines
content = []
for x in list_data:
    try:
        content.append(json.loads(x))
    except json.JSONDecodeError:
        continue


# Clean string function: strip non-ASCII characters, HTML entities and
# punctuation, then lowercase
def clean_str(text):
    text = text.encode("ascii", "ignore").decode("utf-8")
    text = re.sub(r"&.*?;", "", text)
    text = re.sub(r">", "", text)
    text = re.sub(r'[\]|\[@,$%*&\\()":]', "", text)
    text = re.sub(r"-", " ", text)
    text = re.sub(r"\.+", "", text)
    text = re.sub(r"^\s+", "", text)
    text = text.lower()
    return text


df_data = {}
tf_data = {}
idf_data = {}

for data in content:
    tf = {}
    # Clean the title and split it into words (split() drops empty strings)
    clean_title = clean_str(data["book_title"])
    list_word = clean_title.split()
    for word in list_word:
        if word in sw:
            continue
        # TF: count occurrences of the word in this document
        if word in tf:
            tf[word] += 1
        else:
            tf[word] = 1
            # DF: count each document only once per word
            df_data[word] = df_data.get(word, 0) + 1
    tf_data[data["url"]] = tf

# Calculate IDF (smoothed: 1 + log10(N / DF))
for x in df_data:
    idf_data[x] = 1 + math.log10(len(tf_data) / df_data[x])

tf_idf = {}
for word in df_data:
    list_doc = []
    for data in content:
        tf_value = tf_data[data["url"]].get(word, 0)
        weight = tf_value * idf_data[word]
        doc = {
            "url": data["url"],
            "title": data["book_title"],
            "image": data["image-url"],
            "price": data["price"],
            "score": weight,
        }
        # Keep only documents that contain the word, without duplicates
        if doc["score"] != 0 and doc not in list_doc:
            list_doc.append(doc)
    tf_idf[word] = list_doc

# Write and save the dictionary to a file using the pickle module
with open(output_data, "wb") as file:
    pickle.dump(tf_idf, file)
```

Reading the Command-Line Arguments

```python
# Argument check
if len(sys.argv) != 3:
    print("\nUsage:\n\tpython3 tf-idf.py [data.json] [output]\n")
    sys.exit(1)

input_data = sys.argv[1]
output_data = sys.argv[2]

with open(input_data, encoding="utf-8") as f:
    list_data = f.read().split("\n")

# Load stopwords
with open("stopword.txt", encoding="utf-8") as f:
    sw = set(f.read().split())

content = []
for x in list_data:
    try:
        content.append(json.loads(x))
    except json.JSONDecodeError:
        continue
```

In the code above, I load the data returned by the scraping process (one JSON object per line) and a list of stopwords, which are words we don't index. To run the script, type the following command:

```shell
python3 tf-idf.py <data_json> <index_name>
```

Here, `<data_json>` is the data set produced by the scraping process, and `<index_name>` is the name of the index file this script writes.
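If you don't have scraped data yet and just want to try the indexing script, the sketch below writes a tiny input file in the format the script expects. That format is one JSON object per line with the `book_title`, `url`, `image-url`, and `price` fields it reads. The sketch also writes a `stopword.txt` file. The book records and stopwords are made-up placeholders, not output from a real scraper.

```python
import json

# Hypothetical scraped records using the field names the indexing script reads.
books = [
    {"book_title": "Learning Python", "url": "https://example.com/book-1",
     "image-url": "https://example.com/book-1.jpg", "price": "10.00"},
    {"book_title": "The Python Cookbook", "url": "https://example.com/book-2",
     "image-url": "https://example.com/book-2.jpg", "price": "12.50"},
]

# The script parses one JSON object per line (JSON Lines), so write them that way.
with open("data.json", "w", encoding="utf-8") as f:
    for book in books:
        f.write(json.dumps(book) + "\n")

# One stopword per line; these words are skipped during indexing.
with open("stopword.txt", "w", encoding="utf-8") as f:
    f.write("the\nof\nand\n")
```

With these two files in the working directory, `python3 tf-idf.py data.json index.pickle` should produce an index file. The name `index.pickle` is only an example.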

Cleaning the String Data Sets

```python
# Clean string function
def clean_str(text):
    text = text.encode("ascii", "ignore").decode("utf-8")
    text = text.lower()
    text = re.sub(r"&.*?;", "", text)
    text = re.sub(r">", "", text)
    text = re.sub(r'[\]|\[@,$%*&\\()":]', "", text)
    text = re.sub(r"-", " ", text)
    text = re.sub(r"\.+", "", text)
    text = re.sub(r"^\s+", "", text)
    return text
```

As the name implies, this function cleans the book_title values in the data set. It removes non-ASCII characters, HTML entities, and punctuation that are useless for indexing.
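Here is what the cleaning steps do to a messy, made-up title. Removing "&" leaves a double space behind. For that reason, splitting with `split()` (which ignores runs of whitespace) is safer than `split(" ")`, which would produce empty-string "words".

```python
import re


# Same cleaning steps as clean_str above, repeated so this example runs on its own.
def clean_str(text):
    text = text.encode("ascii", "ignore").decode("utf-8")
    text = text.lower()
    text = re.sub(r"&.*?;", "", text)                # HTML entities such as &amp;
    text = re.sub(r">", "", text)
    text = re.sub(r'[\]|\[@,$%*&\\()":]', "", text)  # punctuation
    text = re.sub(r"-", " ", text)                   # hyphens become word breaks
    text = re.sub(r"\.+", "", text)                  # runs of dots
    text = re.sub(r"^\s+", "", text)                 # leading whitespace
    return text


title = "  Python: Tips & Tricks (2nd Ed.) - Hands-On"
cleaned = clean_str(title)
print(cleaned.split())
# ['python', 'tips', 'tricks', '2nd', 'ed', 'hands', 'on']
```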

2. Create a Search Query Script

The query script must be able to read the dictionary stored in the index file created earlier. We saved it with the pickle module, so we'll use pickle to read it as well.

```python
import json
import pickle
import sys

# Argument check
if len(sys.argv) != 4:
    print("\nUsage:\n\tpython3 query.py [index] [n] [query]\n")
    sys.exit(1)

# Index terms are lowercase, so lowercase the query before splitting it
query = sys.argv[3].lower().split()
n = int(sys.argv[2])

# Load and read the index file with the pickle module
with open(sys.argv[1], "rb") as indexdb:
    indexFile = pickle.load(indexdb)

# Query: add up each document's score over all query words
list_doc = {}
for q in query:
    for doc in indexFile.get(q, []):  # words not in the index are skipped
        if doc["url"] in list_doc:
            list_doc[doc["url"]]["score"] += doc["score"]
        else:
            list_doc[doc["url"]] = dict(doc)  # copy so the index itself is not modified

# Convert to list
list_data = list(list_doc.values())

# Sort descending by score and print the top n results as JSON
for data in sorted(list_data, key=lambda k: k["score"], reverse=True)[:n]:
    print(json.dumps(data))
```

How to Run the Query Script

To run this script, type this command in your terminal:

```shell
python3 query.py <index_file> <n-query> <string-query>
```

- `<index_file>` is the index file we created earlier with the pickle module.

- `<n-query>` is the number of results to display.

- `<string-query>` is the keyword or phrase the user is searching for. Wrap a multi-word query in quotes so the shell passes it as a single argument.

3. Calculate the Score of a Document Toward a Search Query

The scores stored in the index can't be used directly for ranking, because the index contains only single words (unigrams). A query phrase made up of more than one word has no single entry in the index to look up.

To solve this problem, we'll split the query into words, look up each word in the index, and add up each document's scores across all of the query words.

```python
for q in query:
    for doc in indexFile.get(q, []):  # words not in the index are skipped
        if doc["url"] in list_doc:
            list_doc[doc["url"]]["score"] += doc["score"]
        else:
            list_doc[doc["url"]] = dict(doc)
```
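The same aggregation can be tested in isolation on a small hypothetical index. This simplified sketch returns only a URL-to-score mapping rather than full document dictionaries. A document that matches several query words accumulates the scores of all of them.

```python
# Hypothetical index in the same shape as the one built by tf-idf.py
# (only url and score kept for brevity).
index = {
    "python": [{"url": "book-1", "score": 1.0}, {"url": "book-2", "score": 1.0}],
    "cookbook": [{"url": "book-2", "score": 1.6}],
}


def score_query(index, query):
    """Sum each document's per-word scores across all words in the query."""
    totals = {}
    for word in query.lower().split():
        for doc in index.get(word, []):  # words missing from the index add nothing
            totals[doc["url"]] = totals.get(doc["url"], 0.0) + doc["score"]
    return totals


print(score_query(index, "Python Cookbook"))
# {'book-1': 1.0, 'book-2': 2.6}
```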

4. Rank Search Query Results

To rank the results, we only need to sort them by score, from highest to lowest.

```python
for data in sorted(list_data, key=lambda k: k["score"], reverse=True)[:n]:
    print(json.dumps(data))
```
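As an alternative to sorting every match, Python's standard `heapq.nlargest` returns only the top n items, already in descending order. This can help when a query matches many documents. The result list below is hypothetical. Both approaches return the same items here.

```python
import heapq

# Hypothetical aggregated results: one dict per document with a summed score.
results = [
    {"url": "book-1", "score": 1.0},
    {"url": "book-2", "score": 2.6},
    {"url": "book-3", "score": 0.4},
]

n = 2
# nlargest keeps only the n best items instead of sorting the whole list.
top = heapq.nlargest(n, results, key=lambda doc: doc["score"])
print([doc["url"] for doc in top])  # ['book-2', 'book-1']

# Equivalent result using a full sort, as the query script does:
same = sorted(results, key=lambda doc: doc["score"], reverse=True)[:n]
print(top == same)  # True
```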

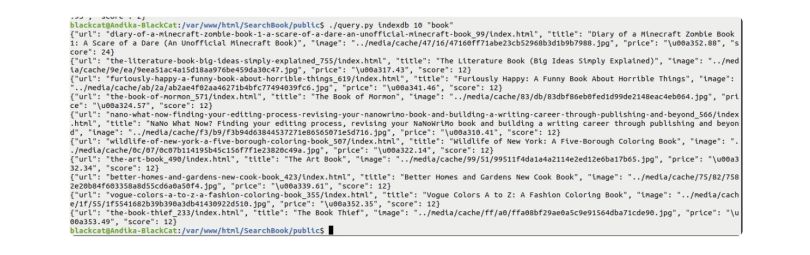

Query Script Example for Index-Based Search Engine in Python

As the output shows, the query script prints each matching document as a JSON object, ordered from the highest score to the lowest. Printing results as JSON makes them easy to read from the search engine interface we will create.

Frequently Asked Questions

Can I create my own search engine?

Yes, you can create your own search engine using search engine creator tools from providers, or by programming a search engine from scratch.

Can I create a search engine using Python?

Yes, you can create your own search engine in Python through programming and by using the Scrapy web crawling framework.