Buying a Picasso is like buying a mansion.

There aren’t that many of them, so it can be hard to know what a fair price should be. In real estate, if a house last sold in 2008, right before the lending crisis devastated the housing market, basing today’s price on that last sale doesn’t make sense.

Paintings are also affected by market conditions and a lack of data. Kyle Waters, a data scientist at Artnome, explained to us how his Boston-area firm is addressing this dilemma — and, in doing so, aims to do for the art world what Zillow did for real estate.

“If only 3 percent of houses are on the market at a time, we only see the prices for those 3 percent. But what about the rest of the market?” Waters said. “It’s similar for art too. We want to price the entire market and give transparency.”

Artnome is building the world’s largest database of paintings by blue-chip artists like Georgia O’Keeffe, including her most famous works as well as lesser-known pieces, both privately held and publicly displayed. Waters is using the data to build a machine learning model that predicts how much people will pay for these works at auction. Because the model draws on an artist’s entire body of work, not just the pieces that have sold publicly before, Artnome claims it will be more accurate than the auction industry’s practice of simply basing current prices on previous sales.

The company’s goal is to bring transparency to the auction house industry. But Artnome’s new model faces the old problem: Its machine learning system performs poorly on the works that typically sell for the most — the ones that people are the most interested in — since it’s hard to predict the price of a one-of-a-kind masterpiece.

“With a limited data set, it’s just harder to generalize,” Waters said.

We talked to Waters about how he compiled, cleaned and created Artnome’s machine learning model for predicting auction prices, which launched in late January.

Types of data included in the model

Most of the information about artists in Artnome’s model comes from the dusty basement libraries of auction houses, where they store their catalogues raisonnés, books that serve as complete records of an artist’s work. Artnome is compiling and digitizing these records, which Waters said marks the first time these books have ever been brought online.

Artnome’s model currently includes information on about 5,000 artists whose works have sold over the last 15 years. Prices in the data set range from $100 at the low end to Leonardo da Vinci’s record-breaking “Salvator Mundi,” which sold for $450.3 million in 2017, making it the most expensive work of art ever sold.

How hard was it to predict what da Vinci’s 500-year-old “Salvator Mundi” would sell for? Before the sale, Christie’s estimated the portrait of Jesus Christ was worth around $100 million, less than a quarter of the final price.

“It was unbelievable,” Alex Rotter, chairman of Christie’s postwar and contemporary art department, told The Art Newspaper after the sale. Rotter reported the winning phone bid.

“I tried to look casual up there, but it was very nerve-wracking. All I can say is, the buyer really wanted the painting and it was very adrenaline-driven.”

A piece like “Salvator Mundi” could come to market in 2017 and then not go up for auction again for 50 years. And because a machine learning model is only as good as the quality and quantity of the data it is trained on, market conditions, a painting’s physical condition and changes in availability all make it hard to predict its future price.

These variables are categorized into two types of data: structured and unstructured. And cleaning all of it represents a major challenge.

What is structured data?

Structured data includes information like what artist painted which painting on what medium, and in which year.

Waters intentionally limited the types of structured information in the model to keep it from becoming too unruly to work with. But defining paintings solely as two-dimensional works on certain mediums proved difficult, since there are so many different types of paintings (Salvador Dalí famously painted on a cigar box, after all). Artnome’s problem is one of “high cardinality,” Waters said: a categorical variable such as medium can take a huge number of distinct values, and the machine learning system has to learn something about each of them.

“You want the model to be narrow enough so that you can figure out the nuances between really specific mediums, but you also don’t want it to be so narrow that you’re going to overfit,” Waters said, adding that large models also become more unruly to work with.
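One common way to tame a high-cardinality variable is to pool rare values into a shared “other” bucket, so the model only learns separate effects for mediums that appear often enough to generalize from. The article doesn’t say how Artnome handles this; the sketch below is a generic illustration, and the `min_count` threshold and the medium labels are hypothetical.

```python
from collections import Counter

def collapse_rare_categories(values, min_count=2, other_label="other"):
    """Replace any category seen fewer than `min_count` times with `other_label`."""
    counts = Counter(values)
    return [v if counts[v] >= min_count else other_label for v in values]

# Hypothetical medium labels; real catalog data would be far messier.
mediums = [
    "oil on canvas", "oil on canvas", "oil on canvas",
    "watercolor on paper", "watercolor on paper",
    "oil on cigar box",
    "gouache on board",
]
print(collapse_rare_categories(mediums))
# ['oil on canvas', 'oil on canvas', 'oil on canvas', 'watercolor on paper',
#  'watercolor on paper', 'other', 'other']
```

Raising `min_count` makes the model coarser but less prone to overfitting; lowering it preserves the nuances Waters mentions at the cost of sparser data per category.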

Other structured data focuses on the artist, such as when the artist was born and whether they were alive at the time of the auction. Waters also built a natural language processing system that analyzes the type and frequency of the words an artist used in their paintings’ titles, picking up trends like Georgia O’Keeffe’s use of the word “white” in many of her famous works.
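The simplest version of that title analysis is a word count across an artist’s titles. The sketch below assumes a basic tokenizer and a small, hypothetical stopword list; the titles are made up for illustration, and Artnome’s actual NLP system is not described in enough detail to reproduce.

```python
import re
from collections import Counter

# A small, hypothetical stopword list; a real pipeline would use a fuller one.
STOPWORDS = frozenset({"a", "an", "and", "the", "of", "in", "with", "no"})

def title_word_counts(titles):
    """Count how often each non-stopword appears across a list of titles."""
    counts = Counter()
    for title in titles:
        for word in re.findall(r"[a-z']+", title.lower()):
            if word not in STOPWORDS:
                counts[word] += 1
    return counts

# Made-up titles for illustration, not real catalog entries.
titles = ["White Flower", "White Shell with Red", "Red Hills", "Black Iris"]
counts = title_word_counts(titles)
print(counts["white"], counts["red"], counts["iris"])  # 2 2 1
```

Counts like these could then become per-painting features, for example a flag for whether a title contains one of the artist’s most frequent words.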

Including information on market conditions, such as current stock prices or real estate data, was an important part of the structured data too.

“How popular is an artist, are they exhibiting right now? How many people are interested in this artist? What’s the state of the market?” Waters said. “Really getting those trends and quantifying those could be just as important as more data.”
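Attaching market data to a sale usually means an “as-of” lookup: take the most recent index level on or before the sale date, so the model never sees information from after the hammer fell. This is a minimal sketch under that assumption; the index levels below are made up, and the article does not say which market series or join logic Artnome uses.

```python
import bisect
from datetime import date

def index_level_as_of(sale_date, index_dates, index_levels):
    """Most recent index level on or before `sale_date`, or None if there is none.

    `index_dates` must be sorted ascending and aligned with `index_levels`.
    """
    i = bisect.bisect_right(index_dates, sale_date)
    return index_levels[i - 1] if i > 0 else None

# Made-up closing levels on three trading days.
index_dates = [date(2017, 11, 10), date(2017, 11, 13), date(2017, 11, 14)]
index_levels = [100.0, 101.5, 99.8]

print(index_level_as_of(date(2017, 11, 12), index_dates, index_levels))  # 100.0 (Sunday -> Friday's close)
print(index_level_as_of(date(2017, 11, 14), index_dates, index_levels))  # 99.8
print(index_level_as_of(date(2017, 11, 1), index_dates, index_levels))   # None
```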

Using standard practices to quantify subjective points

Another type of data in the model is unstructured data, which, as the name suggests, is less concrete than the structured items. This data is mined from the painting itself and includes information like the artwork’s dominant color, its number of corner points and whether faces are pictured.
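To make “dominant color” concrete, here is one simple way to compute it: quantize each RGB channel into a few bins and return the most populated bin. This histogram approach is swapped in for illustration (clustering methods such as k-means are another common choice), and the `levels` parameter and synthetic image are assumptions, not Artnome’s method.

```python
import numpy as np

def dominant_color(image, levels=4):
    """Most common color after quantizing each RGB channel into `levels` bins.

    `image` is an (H, W, 3) uint8 array. Returns the center of the winning bin.
    """
    step = 256 // levels
    binned = image.reshape(-1, 3).astype(np.int64) // step  # per-channel bin, 0..levels-1
    codes = (binned[:, 0] * levels + binned[:, 1]) * levels + binned[:, 2]
    counts = np.bincount(codes, minlength=levels ** 3)
    best = int(np.argmax(counts))
    r, g, b = best // (levels * levels), (best // levels) % levels, best % levels
    return tuple(int(c * step + step // 2) for c in (r, g, b))

# Synthetic 10x10 image: 70 greenish pixels and 30 bluish ones.
img = np.empty((10, 10, 3), dtype=np.uint8)
img[:, :] = (30, 160, 40)
img[:3] = (20, 40, 200)
print(dominant_color(img))  # (32, 160, 32)
```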

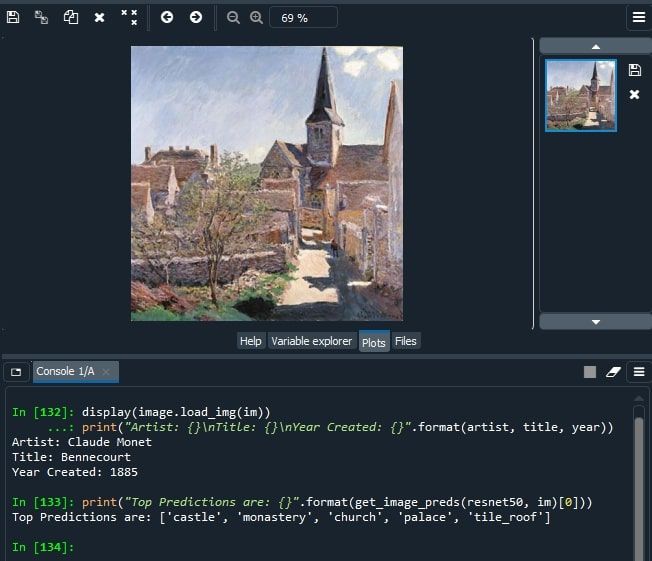

Waters used a pre-trained convolutional neural network to look for these variables, modeling the project after ResNet-50, a member of the ResNet family of architectures that won the ImageNet Large Scale Visual Recognition Challenge in 2015. ImageNet contains roughly 14 million labeled images.

Including unstructured data helps quantify the complexity of an image, Waters said, giving it what he called an “edge score.”
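The article doesn’t define how the edge score is calculated. One plausible stand-in, assumed here purely for illustration, is the fraction of pixels where image brightness changes sharply: busy, detailed paintings score high and flat color fields score low. The `threshold` value is a hypothetical choice.

```python
import numpy as np

def edge_score(gray, threshold=0.1):
    """Fraction of pixels whose intensity-gradient magnitude exceeds `threshold`.

    `gray` is a 2-D array scaled to [0, 1]. Higher scores mean busier images.
    """
    gy, gx = np.gradient(gray.astype(float))  # derivatives along rows, then columns
    magnitude = np.hypot(gx, gy)
    return float((magnitude > threshold).mean())

flat = np.full((8, 8), 0.5)             # a single flat color field
step = np.zeros((8, 8))
step[:, 4:] = 1.0                       # one sharp vertical boundary
print(edge_score(flat), edge_score(step))  # 0.0 0.25
```

In the step image only the two columns straddling the boundary register a gradient, so a quarter of the pixels count as edges.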

An edge score helps the machine learning system quantify subjective qualities of a painting that seem intuitive to humans, Waters said. Take Vincent van Gogh’s series of paintings of a red-haired man posing in front of a blue background: when you look at them, it’s not hard to see that they are self-portraits of van Gogh, by van Gogh.

Including unstructured data in Artnome’s system helps the machine spot visual cues that suggest images are part of a series, which has an impact on their value, Waters said.

“Knowing that that’s a self-portrait would be important for that artist,” Waters said. “When you start interacting with different variables, then you can start getting into more granular details that, for some paintings by different artists, might be more important than others.”

What data’s missing from the model?

Artnome’s convolutional neural network is good at analyzing paintings for data that tells a deeper story about the work. But sometimes, there are holes in the story being told.

In its current iteration, Artnome’s model includes paintings both with and without frames, and it doesn’t specify which work falls into which category. Not identifying the frame could throw off the dominant color the system detects, Waters said, introducing errors into its results.

“That could maybe skew your results and say, like, the dominant color was yellow when really the painting was a landscape and it was green,” Waters said.
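Waters’ yellow-versus-green example is easy to reproduce on a synthetic image. The sketch below assumes the crudest possible fix, trimming a fixed fraction from every edge before measuring color; real frames vary in width, so this `crop_border` helper is hypothetical and only shows why a frame can dominate the measurement.

```python
import numpy as np

def most_common_color(image):
    """Exact RGB triple that occurs most often in an (H, W, 3) image."""
    colors, counts = np.unique(image.reshape(-1, 3), axis=0, return_counts=True)
    return tuple(int(c) for c in colors[np.argmax(counts)])

def crop_border(image, fraction=0.2):
    """Drop `fraction` of the rows and columns from every edge (assumes a frame there)."""
    h, w = image.shape[:2]
    dy, dx = int(h * fraction), int(w * fraction)
    return image[dy:h - dy, dx:w - dx]

# Synthetic 10x10 "landscape": a green painting inside a thick yellow frame.
img = np.zeros((10, 10, 3), dtype=np.uint8)
img[:, :] = (220, 200, 40)       # yellow frame
img[3:7, 3:7] = (40, 140, 60)    # green painting

print(most_common_color(img))                      # (220, 200, 40): the frame wins
print(most_common_color(crop_border(img, 0.3)))    # (40, 140, 60): the painting wins
```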

The model also lacks information on the condition of the painting, which, again, could impact the artwork’s price. If the model can’t detect a crease in the painting, it might overestimate its value. Also missing is data on an artwork’s provenance, or its ownership history. Some evidence suggests that paintings that have been displayed by prominent institutions sell for more. There’s also the issue of popularity. Waters hasn’t found a concrete way to tell the system that people like the work of O’Keeffe more than the paintings by artist and actor James Franco.

“I’m trying to think of a way to come up with a popularity score for these very popular artists,” Waters said.

Clean data is good data

An auctioneer brings down the hammer to signal that a sale has been made. But the final bid, known as the hammer price, isn’t what the buyer actually pays.

Buyers must also pay the auction house a commission, known as the buyer’s premium, which varies between auction houses and has changed over time. Waters has had to dig up the rates each house charged over the years and add them to the listed sale prices. He has also had to convert prices listed in other currencies into dollars. Standardizing each sale this way makes the model’s predictions more accurate, Waters said.
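The standardization step boils down to a little arithmetic per sale: gross up the hammer price by the premium, then convert to dollars. This sketch assumes a single flat premium rate per sale and an exchange rate from the sale date; real buyer’s premiums are often tiered by price bracket, and every rate below is made up.

```python
from dataclasses import dataclass

@dataclass
class Sale:
    hammer_price: float   # final bid, in the sale's local currency
    currency: str         # e.g. "USD", "GBP"
    premium_rate: float   # hypothetical flat buyer's premium for that house and year

def total_price_usd(sale, usd_per_unit):
    """Hammer price plus buyer's premium, converted to US dollars.

    `usd_per_unit` maps a currency code to its USD rate on the sale date.
    """
    gross = sale.hammer_price * (1 + sale.premium_rate)
    return gross * usd_per_unit[sale.currency]

# Made-up rates for illustration only.
rates = {"USD": 1.0, "GBP": 1.30}
sales = [Sale(1_000_000, "USD", 0.25), Sale(1_000_000, "GBP", 0.25)]
for s in sales:
    print(round(total_price_usd(s, rates), 2))
```

Applying the same function to every record is what keeps premium-inclusive and premium-exclusive prices from being compared directly, the bias Waters describes.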

“You’d introduce a lot of bias into the model if some things didn’t have the commission, but some things did,” Waters said. “It would be clearly wrong to start comparing the two.”

Once Artnome’s data has been gleaned and cleaned, it is fed into the machine learning system, which Waters built as a random forest model: an algorithm that trains many decision trees on random samples of the data and averages their predictions. Waters said the random forest helps keep the system from overfitting, and also offers a level of “explainability” through permutation importance, a metric that ranks which features of a painting matter most to the predictions.

Waters doesn’t weight the data he puts into the model. Instead, he lets the machine learning system tell him what’s important, and the model ends up weighting factors like today’s S&P prices more heavily than the dominant color of a work.

“That’s kind of one way to get the feature importance, for kind of a black box estimator,” Waters said.
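Permutation importance works by shuffling one feature at a time on held-out data and measuring how much the model’s score drops. The sketch below assumes scikit-learn (the article doesn’t name Artnome’s library) and uses synthetic data in which the price depends on a market-index feature and not on hue; that ranking is built into the data, not a finding about real auctions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 500
# Hypothetical features: a market index level and a dominant-hue angle.
market_index = rng.uniform(2000, 3000, n)
dominant_hue = rng.uniform(0, 360, n)
X = np.column_stack([market_index, dominant_hue])
# Synthetic log-price that depends on the market index only, plus noise.
y = 0.002 * market_index + rng.normal(0, 0.1, n)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

# Shuffle each column of the test set 10 times and record the drop in R^2.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)
for name, mean in zip(["market_index", "dominant_hue"], result.importances_mean):
    print(f"{name}: {mean:.3f}")
```

Because the method only needs predictions and a score, it treats the forest as the kind of black-box estimator Waters describes.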

From upstart to standard

Although Artnome has been approached by private collectors, gallery owners and startups in the art tech world interested in its machine learning system, Waters said it’s important this data set and model remain open to the public.

His aim is for Artnome’s machine learning model to eventually function like Zillow’s “Zestimate,” which estimates real estate prices for homes on and off the market, and act as a general starting point for those interested in finding out the price of an artwork.

“We might not catch a specific genre, or era, or point in the art history movement,” Waters said. “I don’t think it’ll ever be perfect. But when it gets to the point where people see it as a respectable starting point, then that’s when I’ll be really satisfied.”