Database normalization refers to organizing data into tables to make a database easier to navigate and to ensure the data remains accurate and consistent. Through this process, database administrators, data engineers and data architects can model and design a framework for storing an application’s data in such a way that the database layer of the application functions at maximum efficiency.

In this context, the data model refers to the set of relationships and conceptual entities specific to the business use case that the data represents. For example, in the case of retail banking data, this may include financial transactions and customer savings account information.

Since most websites and software applications rely on the storage of data to operate efficiently, database normalization remains a crucial step in data management.

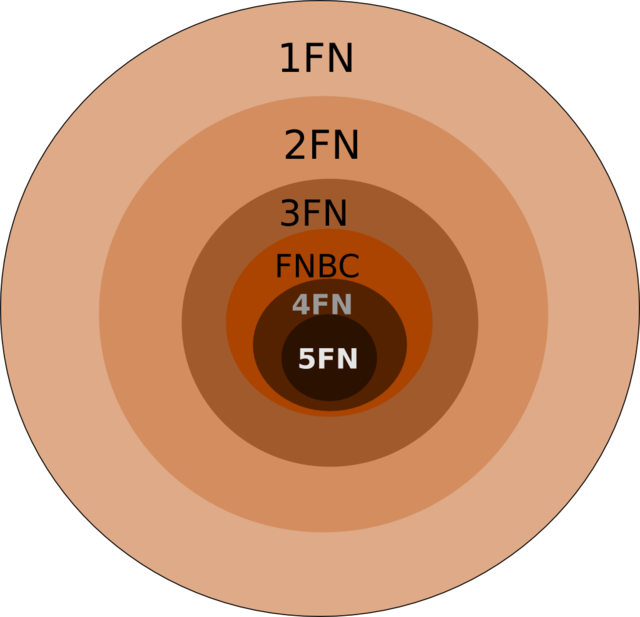

Database Normal Forms

- Zero Normal Form (0NF): Data that is not yet normalized.

- First Normal Form (1NF): Data that is de-duplicated and with key attributes identified.

- Second Normal Form (2NF): Achieves full functional dependencies on the key attributes.

- Third Normal Form (3NF): Removes transitive dependencies.

- Boyce-Codd Normal Form: Eliminates all functional dependencies on non-key attributes that can be used as candidate key(s).

- Fourth Normal Form (4NF) and Fifth Normal Form (5NF): Removes multi-value dependencies and join dependencies.

It’s worth noting there is also a sixth normal form (6NF). That said, 6NF is not yet standardized so I haven’t included it in this definition.

Why Do We Need Database Normalization?

The database normalization process is a critical step in database management for many reasons. Database normalization helps us do the following:

1. Avoid Database Anomalies

Database anomalies are errors that occur when modifying data, such as inserting, updating or deleting specific data values or attributes. These changes can lead to incorrect or missing data. Normalizing the data can prevent the database from suffering from these problems during daily operations.

2. Manage Functional Dependencies of Data

Functional dependencies are relationships between database attributes, which are the conceptual and physical entities the data represents. A customer’s attribute could be their phone number or home address.

Here’s how it works: One attribute (A) is functionally dependent on another attribute (B) when, by knowing the value of B, you can uniquely determine the value of A. This process does not work in reverse. In other words, functional dependencies represent relationships between data. These are best organized or managed through normalization, a process that I’ll demonstrate below.

3. Achieve a Loosely Coupled Database Model

In database design, we want to avoid having many parts of the database (and its data) depend on several other parts of the database. In other words, we want to avoid a tightly coupled system.

In a tightly coupled system, one part of the system can break or stop functioning, thereby leading to many other parts breaking and negatively affecting database performance.

Normalization helps database administrators achieve a loosely coupled system that’s more modular and less interdependent. This loose coupling helps with operational diagnostics and root-cause analysis of problems when they inevitably occur.

4. Avoid Redundancies

In database management, redundancies mostly refer to having duplicate data in the system, which we can avoid through normalization. Avoiding duplicate data is important since it can save us the additional money and storage resources required to maintain it, in addition to the risk of having to maintain data consistency in multiple places instead of simplifying the data model.

5. Avoid Bugs

A positive side effect of all the above is the avoidance of bugs, system issues and ultimately even potential downtime of the software application. System downtime can happen due to failures at the database layer if the data is not properly normalized. This aspect directly connects the proper management and storage of application data in the back-end systems with the customer experience on the front end — and ultimately the market success of the company that owns the application.

6. Achieve Operational Efficiency and Performance

A normalized database also helps achieve efficiency gains. For example, your system may need to execute a smaller set of operations to model, serve and process the data for customers. A smaller set of operations due to well-normalized data also requires less computing power, thereby leading to cost savings on the software infrastructure (or stack) on which the database application runs. For this reason, database normalization is also directly tied to savings in operational costs.

Database Normalization With Examples

Now that we understand the benefits of normalization, let’s go through the steps for normalizing our databases. We refer to these as the normal forms.

Zero Normal Form (0NF)

At this step, our data is not yet normalized, so it’s full of redundancies and still in its raw form without any structure or modeling logic applied to it. For this reason, 0NF technically is not a normal form, but an informal term to describe unstructured data.

First Normal Form (1NF)

Moving from 0NF to 1NF involves:

- Eliminating duplicate columns of repetitive data.

- Making sure each attribute of the data contains a single value instead of multiple values.

- Identifying key attributes that we can use as the data’s unique identifiers (or primary keys).

How to Go From 0NF to 1NF

Let’s look at an example of what it takes to move data from 0NF to 1NF using books as our sample data set.

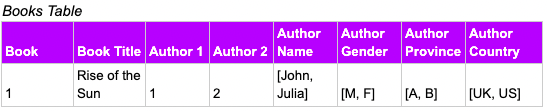

Below you can see that the table represents data in zero normal form because each book stores multiple author information across different attributes (Author 1 and Author 2), which are repeating columns of the same data. In addition, there are columns such as Author Name that store multiple values, and this needs to be corrected.

Book Data in 0NF

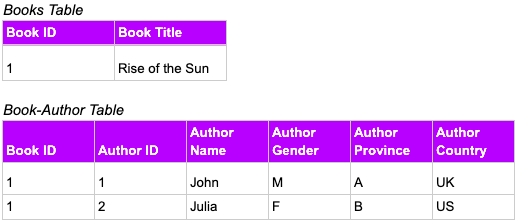

Book Data in 1NF

We eliminate redundancies by splitting the data into a Books Table (and creating a Book ID identifier) and a Book-Author table. The Books Table now has a Book ID identifier, while the Book-Author Table stores all author ID information in one single column (the Author ID), plus additional author attributes (author’s name, gender and geographical location).

We only have one book in this example but the structure holds as we increase the number of books we store.

Second Normal Form (2NF)

Moving from 1NF to 2NF involves:

- Making sure the data starts in the first normal form.

- Making sure all data is fully functionally dependent on the key identifiers.

How to Go From 1NF to 2NF

This data is not fully functionally dependent since, per the functional dependency definition we discussed above, we can’t uniquely determine the author’s name based on certain other attributes, such as Book ID. This is because Book ID has two separate authors, which means we need to correctly take the data to its second normal form.

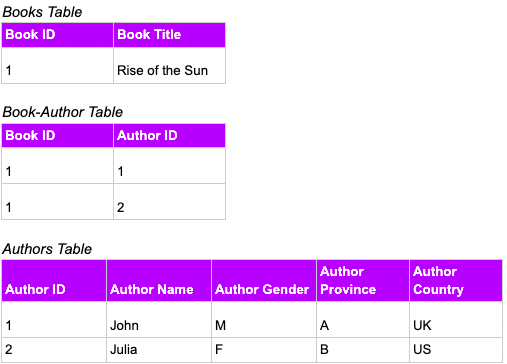

Book Data in 2NF

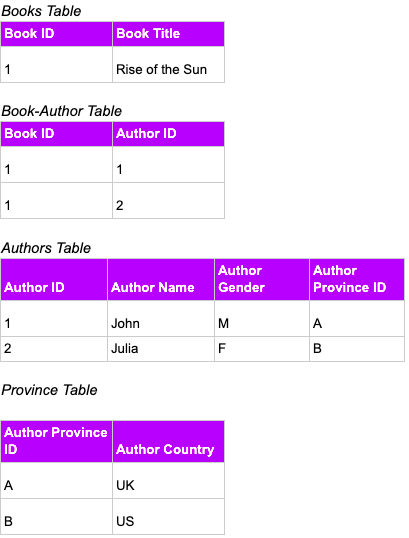

To uniquely determine the value of one attribute from the value of its key, we split the data further and create the Authors table. With the data in this form, we are now able to:

- Uniquely identify an author in the Authors table by knowing its Author ID.

- Uniquely identify a book in the Books table by knowing its Book ID.

- Uniquely identify a Book-Author value by knowing its Book-Author ID from the Book-Author Table.

Third Normal Form (3NF)

Moving from 2NF to 3NF involves:

- Making sure the data starts in the second normal form.

- Making sure there are no transitive dependencies in the data. This means we need to ensure no other attribute (beyond the key identifiers or ID columns) can uniquely determine an attribute’s output.

How to Go From 2NF to 3NF

Once we re-evaluate the data under the third normal form rules, we notice there’s a non-ID field (Province) that uniquely determines the value of the Country attribute. Therefore, the Country is functionally dependent on Province, a non-ID column.

Book Data in 3NF

By eliminating this dependency, we can get the data in the third normal form. To do this, we create a Province table with the Province values set as the Province ID and keep the Province ID in the Authors table. Finally, we link the data and avoid the transitive dependency we saw in the second normal form.

Boyce-Codd Normal Form

Moving from 3NF to Boyce-Codd normal form involves:

- Making sure the data is in the third normal form.

- Eliminating all functional dependencies on attributes that are not the primary key (but we could still potentially use as one).

The difference between this form and the third normal form is subtle. As in the third normal form, we’re primarily concerned with removing functional dependencies on non-key attributes, whereas here we are concerned with removing functional dependencies on potential key attributes.

At this point, our data is already extremely normalized so going beyond this point gets us into the realm of over-normalization, meaning normalizing to the point of introducing performance issues on the database since the data is overly separated and siloed.

Fourth Normal Form (4NF) and Fifth Normal Form (5NF)

In practice, these last two forms are less commonly used. In theory, they are set to reduce the number of database anomalies even further. If you want to dig deeper into the theoretical details, here are a couple of helpful resources:

In common practice, the first normal form through the Boyce-Codd normal form defines the set of steps most commonly used to achieve normalization benefits. The fourth and fifth forms are more rarely found in practice.

Frequently Asked Questions

What is database normalization?

Database normalization is the process of organizing data into tables to help maintain data accuracy and consistency. The goal is to make a database simpler to navigate, allowing it to operate at peak efficiency.

Why is database normalization important?

Database normalization makes it easier to catch bugs, duplicate data and other anomalies. In addition, the process can make it easier to manage data and enhance the performance of a database.

What are the different normal forms in database normalization?

The normal forms in database normalization are zero normal form (0NF), first normal form (1NF), second normal form (2NF), third normal form (3NF), Boyce-Codd normal form, fourth normal form (4NF) and fifth normal form (5NF).