The computational demands of sectors such as drug discovery, materials science, and AI are skyrocketing. Graphics processing units (GPUs) have been at the forefront of this journey, serving as the backbone for tasks demanding high parallel processing capabilities. Their integration into data centers has marked a significant advancement in computational technology.

As we push the boundaries of what's computationally possible, however, the limitations of GPUs become apparent, especially when facing problems that classical computing struggles to solve efficiently. Enter the quantum processing unit (QPU), a technology that promises not just to complement but potentially transcend the capabilities of GPUs, heralding a new era in computational science.

What Is a Quantum Processing Unit (QPU)?

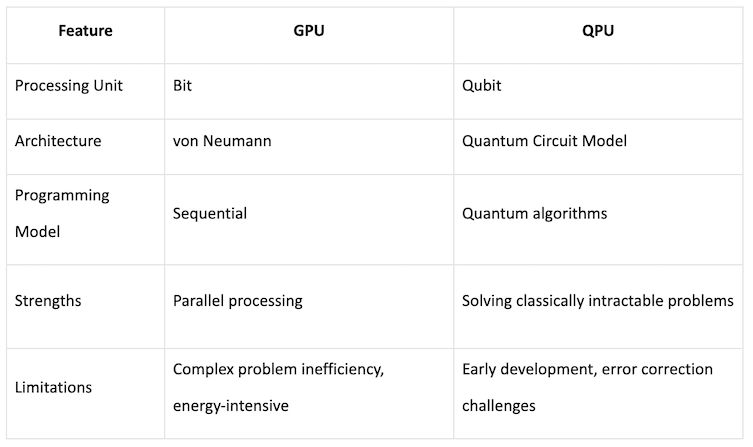

A quantum processing unit, or QPU, uses qubits and a quantum circuit model architecture to solve problems that are too computationally intensive for classical computing. Its potential is analogous to the transformational impact the GPU had on computing in the 2000s.

The Rise of the GPU

The binary system is at the core of classical computing, with bits that exist in one of two states: zero or one. Through logic gates within the von Neumann architecture (an architecture that includes a CPU, memory, I/O, and data bus), this binary processing has propelled technological progress for decades. GPUs, enhancing this system, offer parallel processing by managing thousands of threads simultaneously, significantly outpacing traditional CPUs for specific tasks.

Despite their prowess, GPUs are still bound by the limits of classical algorithms and binary bits, which makes some complex problems inefficient and energy-intensive to solve. A key reason is that each classical thread evaluates only one candidate solution at a time, so even with thousands of threads running in parallel, the work for some problems grows exponentially with problem size.

The Evolution of the GPU in the Data Center

The integration of GPUs into data centers began in the late 1990s and early 2000s, initially focused on graphics rendering. NVIDIA’s GeForce 256, released in 1999 and billed as the “world’s first GPU,” moved transform and lighting work from the CPU onto the graphics chip. Programmable shaders followed in the early 2000s, marking a significant shift towards GPUs as programmable units rather than merely graphics accelerators. Their general-purpose computing potential was realized in the mid-2000s with NVIDIA’s introduction of CUDA in 2006, enabling GPUs to handle computational tasks beyond graphics, such as simulations and financial modeling.

The democratization of GPU computing spurred its adoption for scientific computing and AI, particularly benefiting from GPUs’ parallel processing capabilities. This led to wider use in research and high-performance computing, driving significant advancements in GPU architecture.

By the early 2010s, the demand for big data processing and AI applications accelerated GPU adoption in cloud services. This period also saw the rise of specialized AI data centers optimized for GPU clusters, enhancing the training of complex neural networks.

The 2020s have seen continued growth in GPU demand, driven by deep learning applications in natural language processing, computer vision, and speech recognition. Modern deep learning frameworks, GPUs with dedicated matrix hardware such as NVIDIA’s Tensor Core GPUs, and specialized AI accelerators such as Google’s TPU underscore the critical role of GPUs in AI development and the evolving landscape of computational hardware in data centers.

Despite these developments, GPUs did not displace traditional CPUs. Rather, they ran side by side. We saw the rise of heterogeneous computing: the increasingly popular integration of GPUs with CPUs and other specialized hardware within a single system. This allows different processors to handle tasks best suited to their strengths, leading to improved overall efficiency and performance.

Quantum Computing and the QPU

Quantum computing introduces a transformative approach to computing with the concept of qubits. Unlike classical bits, qubits can exist in a superposition of zero and one. This characteristic, along with quantum entanglement, enables quantum computers to process certain kinds of information in ways that classical machines can’t match efficiently. Quantum gates manipulate these qubits, and because the state of n qubits is described by 2^n amplitudes, a single gate operation acts on an exponentially large state space.
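As a rough illustration of why superposition is hard to reproduce classically, the following Python sketch stores an n-qubit register the way a simple classical simulator would, as a list of 2^n complex amplitudes. It is not tied to any quantum SDK, and the function names `uniform_superposition` and `measurement_probabilities` are hypothetical, chosen only for this example.

```python
import math

def uniform_superposition(num_qubits):
    """Return a state vector with equal amplitude on every basis state."""
    dim = 2 ** num_qubits            # a classical simulator must store 2^n amplitudes
    amplitude = 1.0 / math.sqrt(dim)
    return [complex(amplitude, 0.0)] * dim

def measurement_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

# The number of amplitudes doubles with every added qubit.
for n in (1, 2, 10, 20):
    print(n, "qubits ->", 2 ** n, "amplitudes")

state = uniform_superposition(3)
probs = measurement_probabilities(state)
print(len(state), "outcomes, each with probability close to", round(probs[0], 3))
```

Note that a measurement returns only one of these outcomes, not all of them at once. Quantum algorithms have to arrange the amplitudes so that useful answers become likely, which is why superposition alone does not amount to unlimited parallel processing.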

What Are Quantum Gates?

Quantum gates are the fundamental building blocks of quantum circuits, analogous to logic gates in classical computing, but designed for operations on qubits instead of classical bits. Quantum gates manipulate the state of qubits according to the principles of quantum mechanics, enabling the execution of quantum algorithms. Some quantum gates operate only on a single qubit, whereas others operate on two or more qubits. Multi-qubit gates are critical to exploiting the entanglement and superposition properties of quantum computing.
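To show how single-qubit and multi-qubit gates combine, here is a minimal Python sketch that treats gates as matrices acting on a state vector. It applies a Hadamard gate to the first of two qubits and then a CNOT gate, which produces an entangled Bell state. The helper names `apply_gate` and `kron` are assumptions made for this example, and the basis ordering (first qubit as the most significant bit) is a convention choice, not a requirement.

```python
import math

def apply_gate(gate, state):
    """Multiply a gate matrix (a list of rows) by a state vector."""
    return [sum(g * a for g, a in zip(row, state)) for row in gate]

def kron(a, b):
    """Kronecker product, used to lift a one-qubit gate to a two-qubit register."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]

S = 1.0 / math.sqrt(2)
H = [[S, S], [S, -S]]                  # Hadamard: creates superposition
I2 = [[1.0, 0.0], [0.0, 1.0]]          # identity: leaves a qubit unchanged
CNOT = [[1.0, 0.0, 0.0, 0.0],          # flips the second qubit when the first is 1
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
        [0.0, 0.0, 1.0, 0.0]]

state = [1.0, 0.0, 0.0, 0.0]               # both qubits start in |00>
state = apply_gate(kron(H, I2), state)     # single-qubit gate on the first qubit
state = apply_gate(CNOT, state)            # two-qubit gate entangles the pair

probs = [abs(a) ** 2 for a in state]       # order: 00, 01, 10, 11
print([round(p, 3) for p in probs])
```

After these two gates, the outcomes 00 and 11 each carry probability one half, while 01 and 10 carry none: measuring one qubit fixes the result of the other, which is the correlation that entanglement refers to.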

The quantum computing field is still grappling with challenges such as qubit stability and effective quantum error correction, both of which are crucial for achieving scalable quantum computing. Qubits are inherently fragile and can be disturbed by a variety of environmental conditions. Maintaining a stable qubit state is therefore challenging, and researchers are still developing techniques to detect and correct unwanted changes in the qubit state.
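The idea of detecting and correcting unwanted changes can be sketched with a classical analogy: a three-bit repetition code with majority voting, written here in Python. This is only an analogy and an assumption of this example, not a description of any vendor's error correction. Real quantum codes cannot simply copy a qubit and must check for errors without directly measuring the protected data. The function names and the 5 percent flip probability are hypothetical.

```python
import random

def encode(bit):
    """Protect one logical bit by storing three copies."""
    return [bit, bit, bit]

def apply_noise(codeword, flip_probability, rng):
    """Flip each stored bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in codeword]

def decode(codeword):
    """Majority vote: recovers the logical bit if at most one copy flipped."""
    return 1 if sum(codeword) >= 2 else 0

rng = random.Random(0)   # fixed seed so repeated runs give the same numbers
trials = 10_000
p = 0.05                 # assumed per-bit error rate, for illustration only

raw_errors = sum(rng.random() < p for _ in range(trials))
coded_errors = sum(
    decode(apply_noise(encode(0), p, rng)) != 0 for _ in range(trials)
)
print("unprotected error rate:", raw_errors / trials)
print("protected error rate:  ", coded_errors / trials)
```

The protected error rate comes out well below the unprotected one because two of the three copies have to fail at once. The cost is redundancy, which is also why error-corrected quantum computers are expected to need many physical qubits for each logical qubit.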

GPUs Vs. QPUs: What’s the Difference?

The Potential of QPUs

QPU technology is poised to revolutionize areas where classical computing reaches its limits. In drug discovery, for instance, QPUs could simulate molecular interactions at scales never before possible, expediting the creation of new therapeutics. Materials science could benefit from the design of novel materials with tailored properties. In finance, QPUs could enhance complex model optimizations and risk analysis. In AI, they could lead to algorithms that learn more efficiently from less data. QPUs could thus tackle problems that are impractical for CPUs and GPUs, opening new frontiers of discovery and innovation.

QPUs Solve the Challenges GPUs Can’t

Although GPUs have revolutionized data center operations, they also bring formidable challenges. The voracious GPU appetite for power generates significant heat, which demands sophisticated and often expensive cooling systems to maintain optimal performance levels. This not only increases the operational costs but also raises environmental concerns due to the high energy consumption required for both running the units and cooling them.

In addition to these physical constraints, the technological landscape in which GPUs operate is rapidly evolving. The constant need for updates and upgrades to accommodate new software demands and improve processing capabilities presents substantial logistical and financial hurdles. This strains resources and complicates long-term planning for data center infrastructure.

QPUs promise to address many of these challenges. QPUs perform computations in ways fundamentally different from classical systems. Specifically, the intrinsic ability of qubits to exist in multiple states simultaneously allows QPUs to tackle complex problems more effectively, reducing the need for constant hardware upgrades. This promises not only a leap in computational power but also a move towards more sustainable and cost-effective computing solutions, directly addressing the critical limitations faced by GPUs in today’s data centers.

The Path to QPU Integration

However, the journey toward QPU adoption in computational infrastructures is laden with hurdles. Achieving stable, large-scale quantum systems and ensuring reliable computations through quantum error correction are paramount challenges. Some types of quantum computers also require special cooling and environmental conditions that are uncommon in data centers, so facilities will need to be adapted.

Additionally, the quantum software development field is in its infancy, necessitating the creation of new programming tools and languages. To make use of the quantum properties of QPUs, simply translating classical algorithms is not enough. Instead, we will need to invent new types of algorithms. Just as GPUs allow us to leverage parallel processing, QPUs allow us to execute code differently. Despite these obstacles, ongoing research and development are gradually paving the way for QPUs to play a central role in future computational tasks.

Today, the integration of QPUs into broader computational infrastructures and their practical application in industry and research are still in the nascent stages. The development and commercial availability of quantum computers are growing, with several companies and research institutions reporting demonstrations of quantum advantage and offering cloud-based quantum computing services.

How close are QPUs to taking a prime position next to GPUs? In other words, if we were to compare the development of QPUs with the historical development of GPUs, what year would we be in now?

Drawing a parallel with the GPU timeline, the current stage of QPU integration most closely mirrors the GPU industry around 2006-2007, when GPUs were becoming general-purpose computing machines and were being adopted for niche applications. That was a time of pivotal change, when the foundational technologies and programming models that would enable widespread adoption were just being established.

For QPUs, the work on quantum algorithms, error correction techniques, and qubit coherence is akin to the early challenges GPUs faced in transitioning to general-purpose computing.

Save Room in the Data Center for the QPU

In summary, although GPUs continue to play a critical role in advancing computational capacities, the integration of QPUs into data centers holds the promise of overcoming the operational and environmental challenges posed by current technologies. With their potential for lower power consumption, reduced heat output, and diminished need for frequent upgrades, QPUs represent a hopeful horizon in the quest for more efficient, sustainable, and powerful computing solutions. QPUs won’t replace GPUs, just as GPUs did not eliminate classical CPUs. Instead, the data center of the future will include all three types of processors.