It was Monday at 3 a.m. The team spent the entire sleepless weekend digging into a major production failure. In just a few hours, the work week would begin, and we all knew the world needed this application to work. It would be a disaster if we didn’t fix this issue — and fast.

But why did it fail? It worked last week. Why didn’t it work now? Was it a recent code change? Nope. That was absolutely unrelated. We tested the system rigorously. We set up the best test automation tools in addition to some manual tests. We even scanned and reviewed our microservices with effective tools. Why then did it fail?

We cursed our fate…and our tools.

Management started yelling at us. “You said Kubernetes is a system built for resilience. It enables applications that can never fail! This is why we shouldn’t use open source!” Blah blah blah…

After hours of struggling, we finally discovered the problem with the YML configuration. Some genius developer didn’t specify the version of the embedded open source database they used. Instead, they only specified “latest,” hoping to get the best features as they were released. A new version was available and that broke some existing functionality.

Our jobs were saved. We managed to resolve the crash — and then we crashed as the world started using our once-again functioning application.

Common Sources of Kubernetes Configuration Errors

- Default Namespace

- Deprecated APIs

- Naked Pods

- Namespace Sharing

- Not Respecting the Abstraction

- Incorrect Image Tags

- Believing in Defaults

The Root Cause Analysis

Does this sound familiar? I’m sure it does. We’ve all seen disasters caused by misconfiguration in Kubernetes. The problem rests with the power of the tool. As the old saying goes, with great power comes great responsibility.

There is so much to configure in Kubernetes, and few developers understand these configurations well enough. Most build new configurations from existing ones, copying and pasting while modifying the parts that make sense to them. The adventurous ones pick YAML files straight from online tutorials, which were good enough to prove a point but not mature enough for production.

When we use infrastructure as code, we tend to forget that it’s also code and should go through the same validation as any other application code. It’s tempting to use shortcuts that work today. We deploy these shortcuts into production and assume they’ll work forever. Unfortunately, that’s just not the case with Kubernetes configurations.

The world is still new to Kubernetes patterns. Developers are still struggling their way through them, and so are the admins. Because so much configuration goes into the system, it’s easy for errors to creep in. Worse still, most of these errors won’t show their impact for days…or even months. The system works perfectly until it collapses without any warning. This makes finding the problem even more difficult.

Common Kubernetes Configuration Problems

There’s no end to our ability to introduce new defects into the system — we developers are creative like that. But there are some common issues that show up. Let’s look at them one by one. As you’re troubleshooting, these are good places to start.

Default Namespace

This is a common mistake new developers make when they pick up configurations from tutorials and blogs. Most of those configurations are meant to explain a specific concept or piece of syntax. To keep things simple, they skip the complexities and nuances of namespaces.

Every Kubernetes deployment should use a meaningful namespace that fits the architecture. If we leave it out, our objects end up in the default namespace, which can result in name clashes in the future.
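To illustrate, here is a minimal sketch of two teams that each own a ConfigMap called app-config. The namespace names (payments, reporting) and the keys are made up for this example. Because each object states its namespace explicitly, the two never collide.

```yaml
# Hypothetical namespaces and values, for illustration only.
apiVersion: v1
kind: Namespace
metadata:
  name: payments
---
apiVersion: v1
kind: Namespace
metadata:
  name: reporting
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: payments      # explicit namespace instead of relying on "default"
data:
  LOG_LEVEL: info
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config         # same name, no clash: it lives in another namespace
  namespace: reporting
data:
  LOG_LEVEL: debug
```

If both ConfigMaps omitted the namespace field and were applied to the same default namespace, the second apply would overwrite the first.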

Deprecated APIs

Kubernetes is under active development. Many developers across the globe are working hard to improve the system and make it more resilient. An unfortunate consequence is that some APIs are deprecated, and eventually removed, in newer releases. We need to identify and replace these API versions in our manifests before they begin failing in production.
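As one concrete case, CronJob moved from batch/v1beta1 to batch/v1, and the beta version is no longer served as of Kubernetes 1.25. The sketch below shows what the migration looks like in a manifest. The object name, namespace, schedule and image are placeholders invented for this example.

```yaml
# Before: this version is no longer served for CronJob as of Kubernetes 1.25.
# apiVersion: batch/v1beta1
# kind: CronJob

# After: the same object on the stable API (available since Kubernetes 1.21).
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-report        # hypothetical name
  namespace: reporting        # hypothetical namespace
spec:
  schedule: "0 2 * * *"       # every day at 02:00
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: report
              image: registry.example.com/report:2.3.1   # placeholder image
```

A manifest like the "before" version keeps working for many releases, which is exactly why it tends to be forgotten until a cluster upgrade rejects it.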

Naked Pods

Kubernetes provides Deployments as a way to manage pods and everything they need. But some developers in a hurry skip this and deploy just the pod into Kubernetes.

Well, this may work today. But a pod that isn’t managed by a controller is not recreated when it, or the node it runs on, fails. It’s a bad practice and will lead to a weekend-ruining disaster some day.
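A rough sketch of the difference: the commented lines show what a naked pod looks like, and the Deployment below wraps the same container so that Kubernetes keeps the requested number of replicas alive. The names, port and image are hypothetical.

```yaml
# A naked pod would be declared directly, with nothing watching over it:
# apiVersion: v1
# kind: Pod
# metadata:
#   name: orders-api

# The same container managed by a Deployment (hypothetical names and image).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
  namespace: payments
spec:
  replicas: 2                  # failed or evicted pods are replaced to keep this count
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api        # must match spec.selector.matchLabels
    spec:
      containers:
        - name: orders-api
          image: registry.example.com/orders-api:1.4.2   # placeholder image
          ports:
            - containerPort: 8080
```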

Namespace Sharing

When entities at different levels share a namespace, the lower entity can access neighbors of the higher entity in order to probe the network.

For example, when a container is allowed to share the host’s network namespace, it can access local network listeners and leverage them to probe the host’s local network.

This is a security risk. Even if it’s our own code, the principle of least privilege recommends we shouldn’t allow this. When it comes to system security, we should never trust anyone — not even ourselves.
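In a manifest, this sharing is controlled by a few fields on the pod spec. The fragment below is a sketch of a pod template (the spec.template.spec part of a Deployment) that keeps the pod out of the host’s namespaces. All three fields already default to false, so the real check is that nobody has switched them to true. The container name and image are placeholders.

```yaml
# Fragment of a pod template, not a complete manifest.
spec:
  hostNetwork: false   # do not join the node's network namespace
  hostPID: false       # do not see or signal processes running on the node
  hostIPC: false       # do not share the node's IPC namespace
  containers:
    - name: orders-api                                   # hypothetical name
      image: registry.example.com/orders-api:1.4.2       # placeholder image
```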

Not Respecting the Abstraction

All communications between the containers should go through the layers of abstraction provided by Kubernetes. Communication between services has to go through the abstractions of ingress and service defined in the deployments.

Never run containers with root privilege. Never expose node ports from services, or access host files directly in code (using UID). Ingress should forward traffic to the service, not directly to individual pods.

Hardcoding IP addresses or directly accessing the Docker socket can lead to problems down the line. Again, these won’t break the system on the very first day. But they will show up someday, probably when you can least afford it.
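Here is a minimal sketch of traffic flowing through those abstractions: an Ingress forwards to a ClusterIP Service by name, and the Service selects pods by label, so no pod IP or node port appears anywhere. The host name, object names and ports are assumptions made for this example.

```yaml
# Hypothetical names, host and ports, for illustration only.
apiVersion: v1
kind: Service
metadata:
  name: orders-api
  namespace: payments
spec:
  type: ClusterIP            # internal only, no node port exposed
  selector:
    app: orders-api          # pods are found by label, never by IP address
  ports:
    - port: 80
      targetPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: orders-api
  namespace: payments
spec:
  rules:
    - host: orders.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: orders-api   # the Service, not an individual pod
                port:
                  number: 80
```

Other workloads in the cluster would reach the same pods through the Service’s DNS name rather than a hardcoded address.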

Incorrect Image Tags

This is a common problem. Developers are often tempted to use “latest” as the version number. They hope this will lead to a continuously improving system, as it will always pick the latest and best versions. Such a configuration can live in our system for many months but it can (and will) lead to a nasty surprise.

When an image tag is not descriptive (e.g., lacking a version tag like 1.19.8), each pull of that image may fetch a different version that could break our code. A non-descriptive image tag also makes it hard to roll back (or forward) to a specific image version. It’s better to use concrete and meaningful tags, such as version strings, or an image SHA.
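As a sketch, the fragment below shows the three options side by side in the containers section of a pod template. The nginx image is only an assumption chosen to match the 1.19.8 version string mentioned above, and the digest is left as a placeholder rather than a real value.

```yaml
# Fragment of a pod template, not a complete manifest.
containers:
  - name: web
    # image: nginx:latest            # floating tag: what it points to changes over time
    image: nginx:1.19.8              # pinned version: the same release on every pull
    # image: nginx@sha256:<digest>   # strictest option; <digest> is a placeholder
```

With a pinned tag, rolling back is just a matter of changing the version string to the previous known-good release.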

Believing in Defaults

This is another problem that shows up often — when we are not very confident of a configuration, we tend to believe in the defaults. The memory/CPU allocation, min/max replica counts for auto scaling…the list goes on. These are just some of the properties that we miss too often.
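The sketch below spells out the properties mentioned above instead of leaving them to defaults: CPU and memory requests and limits on the container, and minimum and maximum replica counts on an autoscaler. Every number here is an arbitrary placeholder, since the right values depend on the workload, and the names and image are hypothetical.

```yaml
# Hypothetical names and arbitrary numbers, for illustration only.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
  namespace: payments
spec:
  # replicas is omitted on purpose: the autoscaler below owns the count
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api
    spec:
      containers:
        - name: orders-api
          image: registry.example.com/orders-api:1.4.2   # placeholder image
          resources:
            requests:          # what the scheduler reserves for the container
              cpu: 250m
              memory: 256Mi
            limits:            # hard ceiling enforced at runtime
              cpu: 500m
              memory: 512Mi
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: orders-api
  namespace: payments
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: orders-api
  minReplicas: 2
  maxReplicas: 6
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # scale out when average CPU passes 70% of requests
```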

Datree: Kubernetes Configuration Solution

As I mentioned earlier, too many configurations go into a Kubernetes cluster. Helm tries to reduce this complexity but makes things worse when we try to configure Helm itself. It’s impossible for a human to ensure the quality we need in our production deployments. We need quality tools that can automate this process.

After some discussion on tech platforms, and a lot of Google / StackOverflow / YouTube, we found a few interesting tools. After comparing them, we chose Datree.

Datree is simple to set up and use. You can integrate it easily with most CI/CD tools. This SaaS tool helps us identify such issues before they can cause a problem. It has a generous free tier and doesn’t lock us in: all that we need in an ideal tool.

Let’s check it out.

Installation

Installing Datree is fast and easy. Run the command below. It needs sudo permissions.

```bash
curl https://get.datree.io | /bin/bash
```

We’re ready to go in just a few seconds! Most of us are uncomfortable running such a command and we should be. Running an unknown third party script with sudo is like inviting a hacker to ruin your system. If you prefer, you can just check out the actual code executed by the script.

```bash
#!/bin/bash
osName=$(uname -s)
DOWNLOAD_URL=$(curl --silent "https://api.github.com/repos/datreeio/datree/releases/latest" | grep -o "browser_download_url.*\_${osName}_x86_64.zip")
DOWNLOAD_URL=${DOWNLOAD_URL//\"}
DOWNLOAD_URL=${DOWNLOAD_URL/browser_download_url: /}
OUTPUT_BASENAME=datree-latest
OUTPUT_BASENAME_WITH_POSTFIX=$OUTPUT_BASENAME.zip
echo "Installing Datree..."
echo
curl -sL $DOWNLOAD_URL -o $OUTPUT_BASENAME_WITH_POSTFIX
echo -e "\033[32m[V] Downloaded Datree"
unzip -qq $OUTPUT_BASENAME_WITH_POSTFIX -d $OUTPUT_BASENAME
mkdir -p ~/.datree
rm -f /usr/local/bin/datree || sudo rm -f /usr/local/bin/datree
cp $OUTPUT_BASENAME/datree /usr/local/bin || sudo cp $OUTPUT_BASENAME/datree /usr/local/bin
rm $OUTPUT_BASENAME_WITH_POSTFIX
rm -rf $OUTPUT_BASENAME
curl -s https://get.datree.io/k8s-demo.yaml > ~/.datree/k8s-demo.yaml
echo -e "[V] Finished Installation"
echo
echo -e "\033[35m Usage: $ datree test ~/.datree/k8s-demo.yaml"
echo -e " Using Helm? => https://hub.datree.io/helm-plugin"
tput init
echo
```

The script downloads a zip file and expands it into the specified locations. It places the binary into the /usr/local/bin folder, which is why it needs sudo. It doesn’t mess with any system configuration, so it’s simple to remove.

A Simple Demo

The Datree installation provides a sample YAML file for a POC.

```
datree test ~/.datree/k8s-demo.yaml

>> File: ../../.datree/k8s-demo.yaml

❌ Ensure each container has a configured memory limit [1 occurrences]
? Missing property object `limits.memory` - value should be within the accepted boundaries recommended by the organization

❌ Ensure each container has a configured liveness probe [1 occurrences]
? Missing property object `livenessProbe` - add a properly configured livenessProbe to catch possible deadlocks

❌ Ensure workload has valid label values [1 occurrences]
? Incorrect value for key(s) under `labels` - the vales syntax is not valid so it will not be accepted by the Kuberenetes engine

❌ Ensure each container image has a pinned (tag) version [1 occurrences]
? Incorrect value for key `image` - specify an image version to avoid unpleasant "version surprises" in the future

+-----------------------------------+----------------------------------------------------------+
| Enabled rules in policy "default" | 21                                                       |
| Configs tested against policy     | 1                                                        |
| Total rules evaluated             | 21                                                       |
| Total rules failed                | 4                                                        |
| Total rules passed                | 17                                                       |
| See all rules in policy           | https://app.datree.io/login?cliId=GStykNg6GkUAS8LfaEt2B8 |
+-----------------------------------+----------------------------------------------------------+
```

Interesting? Well, that was just a glimpse.

Online View

Note the URL it generates at the end of the table.

https://app.datree.io/login?cliId=GStykNg6GkUAS8LfaEt2B8

This is a unique ID assigned to your system. It’s stored in a config file in your home folder.

```
# cat .datree/config.yaml
token: t4e73q9ZxkXhKhcg4vYHDF
```

Open the link in a browser. It prompts us to log in with Google or GitHub. Once we log in, this unique ID is connected with the new Datree account. We can now tailor the tool from the web UI and see the reports from all the tests.

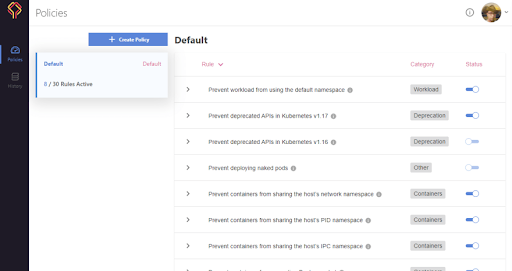

Filters and Policies

Here we can see a detailed setup for the tests, which we can alter and use when we evaluate the YAML files. We can choose what we feel is important and skip what we feel can be ignored. If we want, we can be a rebel and allow a class of errors to go through. Datree gives us that flexibility as well.

Apart from the default policy available to us, we can define more custom policies that can be triggered as needed.

Datree provides filters for all the above mentioned potential issues, and many more.

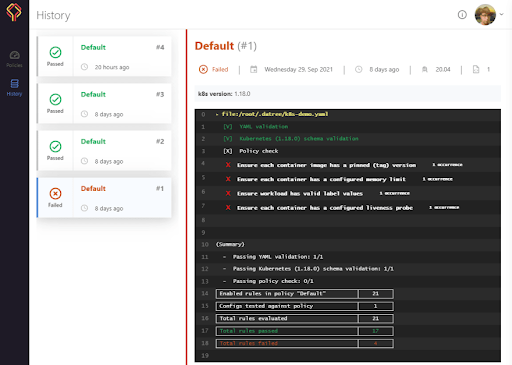

History

On the left panel, we can see a link for History. Click on it and we’ll see the history of all the validations it’s performed so far. We can just view the status of policy checks right here without having to open the dreaded black screen.

Every time we invoke the Datree command, it connects to the cloud with this ID, pulls the required configuration and then uploads the report for the run.

Compatible With Tools

As we saw above, we can trigger Datree with a single command. Datree is also compatible with most configuration management tools and with managed Kubernetes services like AKS, EKS and GKE. Of course, we can’t forget Helm when we’re working with Kubernetes. Datree provides a simple Helm plugin.

Datree has elaborate documentation and tutorials on their website. You can refer to them for quickly setting up any feature you want.

Don’t Wait for a Disaster

Most of the applications across the globe have similar misconfigurations that will surely (eventually) ruin someone’s day. We knew our application had this problem but we underestimated the extent of damage it could cause. We were just procrastinating and sitting on a time bomb.

Don’t do what we did. Don’t wait for the disaster. Automate the configuration checks and enjoy your weekend.