For those of you who are looking to dig deep into the technical details of XR (extended reality), here’s an overview of the technology’s different manifestations.

4 Main Areas of VR and Metaverse Tech

- Head-mounted displays (HMDs)

- Handheld devices

- Projection systems

- Large screens

1. Head-Mounted Displays (HMDs)

This is the most common category of device and the image that generally springs to mind whenever anyone thinks of virtual reality or augmented reality. Also colloquially known as headsets, goggles or glasses, this category encompasses any form of technology worn on the head that displays digital objects or environments for the user to see.

Applications for Head-Mounted Displays (HMDs)

- Virtual Reality

- Augmented Reality

- Assisted Reality

Virtual Reality

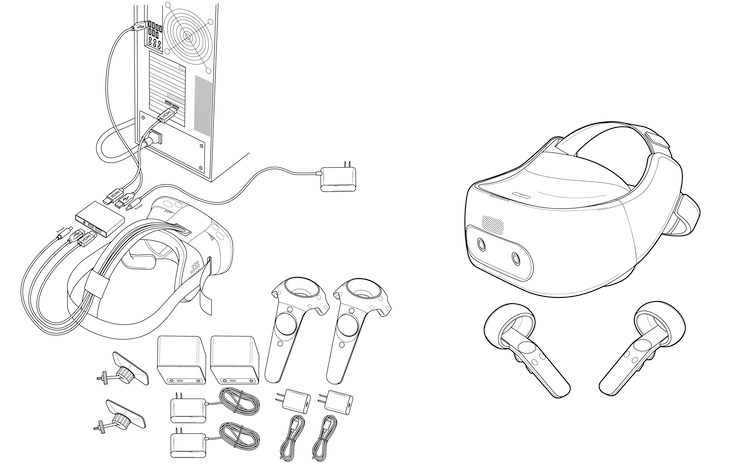

All VR headsets are composed of a tracking system, a display, a processing system and a power source. As users move and look around a virtual environment, the tracking system keeps tabs on these movements and sends them to the processing system. The processing system works out where users should be and what they should be seeing, then updates the display accordingly. On modern VR headsets, these updates to the display occur up to 120 times every second, creating the illusion that you are actually navigating the virtual world.
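The refresh rate puts a hard time limit on that track, process and display loop. All of it has to finish within one frame. A minimal Python sketch of the arithmetic follows. The refresh rates listed are illustrative values, not the specification of any particular headset.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available to track, render and display a single frame."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Illustrative refresh rates only; actual values vary by headset.
for hz in (60, 72, 90, 120):
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):.2f} ms per frame")
```

At 120 Hz, the whole loop has roughly 8.33 ms per frame. At 90 Hz, it has about 11.11 ms.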

When the processing system is external to the headset (for example, a desktop computer), a cable links the two together and the VR headset is referred to as a tethered headset. Being tied down in this way is inconvenient at best (consider users wrapping themselves up in cables as they turn around in virtual environments) and hazardous at worst (tripping over an unseen cable isn’t pleasant!). This system defined VR headsets until companies like Google and Samsung considered alternative processing sources, creating headsets that were powered by mobile phones. What followed was the birth of the Google Cardboard and Samsung Gear VR in 2014, with Google claiming to have shipped over 15 million units of the former by November 2019.

Ecosystems grew around these efforts. Google created Daydream, a VR platform that ran on select high-end phones, to further support consumer interest in VR. In October 2019, Google discontinued the Daydream project, stating “we noticed some clear limitations constraining smartphone VR from being a viable long-term solution. Most notably, asking people to put their phone in a headset and lose access to the apps they use throughout the day causes immense friction.”

By this time, a new breed of headset had entered the market that promised to give users a more streamlined experience: A portable device that didn’t require a mobile phone, computer or any other external system. Thus, standalone headsets were born.

Standalone headsets could initially track only orientation, not position. In other words, you could look around but not move around the virtual space. These are referred to as three DoF (degrees of freedom) headsets, as you can look:

- Up and down

- Left and right

- Clockwise and anti-clockwise

Soon enough, advances in technology meant it became possible for even standalone headsets to track a user’s change in position. This enabled not only portability but full six DoF functionality. Users could now physically look and move around a virtual environment without having to get caught up in cables.
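The difference between the two kinds of headset can be made concrete as data. The Python sketch below is illustrative only. The `HeadPose` type, its field names and the axis convention (right-handed, -Z forward) are assumptions, not any headset SDK's API. A three DoF headset fills in only the three rotation fields, while a six DoF headset also updates position.

```python
import math
from dataclasses import dataclass


@dataclass
class HeadPose:
    # Rotational degrees of freedom (radians): tracked by 3DoF and 6DoF headsets.
    yaw: float = 0.0    # left and right
    pitch: float = 0.0  # up and down
    roll: float = 0.0   # clockwise and anti-clockwise tilt
    # Translational degrees of freedom (metres): only a 6DoF headset updates these.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def view_ray(pose: HeadPose):
    """Return (eye position, unit gaze direction).

    Assumed convention: right-handed, -Z is forward, yaw about +Y, pitch about +X.
    Roll tilts the image but does not change where the gaze points.
    """
    direction = (
        -math.sin(pose.yaw) * math.cos(pose.pitch),
        math.sin(pose.pitch),
        -math.cos(pose.yaw) * math.cos(pose.pitch),
    )
    return (pose.x, pose.y, pose.z), direction


def as_3dof(pose: HeadPose) -> HeadPose:
    """What a 3DoF headset can represent: orientation kept, position pinned at the origin."""
    return HeadPose(pose.yaw, pose.pitch, pose.roll)
```

Suppose a user leans 0.3 m forward. On a six DoF headset, `z` changes and the viewpoint moves with them. `as_3dof` discards that change, which is why the virtual world on a three DoF headset appears to move with the user's head.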

VR Form Factors

Thanks to advances in VR optics, some VR headsets look like a supersized pair of goggles rather than a hefty, front-heavy headset. They are worn like a pair of glasses with arms on either side that rest over the ears. Generally, they are powered by an external processor such as a smartphone, creating a tethered but portable experience.

Most recognizable, though, is the larger box-type VR headset that can either be tethered or standalone.

Augmented Reality

You can trace augmented reality technology back to 1968 when Ivan Sutherland, an American computer scientist working at the University of Utah, with the help of his student Bob Sproull, created the Sword of Damocles. This headset could overlay outlines of basic 3D objects like a cube on the physical world. A user could move around the room and see the virtual object from different angles — the first six DoF headset.

The Sword of Damocles was named after the ancient tale of the same name, made famous by the Roman philosopher Cicero. As the story goes, Damocles, a courtier of King Dionysius II of Syracuse, was offered the opportunity to experience the life of a king, which he readily accepted. While Damocles made himself comfortable on the king’s throne, Dionysius arranged for a sword to hang above his head, held up only by a single strand of horsetail hair. Damocles, upon noticing this, quickly relinquished his place, having understood the lesson that with great power also comes great peril.

The ancient sword and Sutherland’s pioneering AR hardware have one thing in common: both were hung from the ceiling above the user’s head, though the latter was thankfully secured with a safer material than horsehair.

As with VR, some augmented reality headsets are powered externally by mobile phones. They work by projecting content from the phone onto a transparent visor. These are generally less expensive than other options, and some are even made of cardboard: an AR equivalent of the Google Cardboard. At the higher end of the AR headset market are standalone models that require no phone or other device, as all the processing power and computer vision hardware is self-contained.

Assisted Reality

Assisted reality is augmented reality at its most basic level: a screen in your field of view that gives you hands-free access to digital information. The screen can be either opaque or transparent and is usually monocular. That is, information is presented to only one eye through a small single display on one side of the head. Assisted reality differs from augmented reality in that these devices have no onboard computer vision capabilities. As a result, the digital elements displayed are not anchored to the physical world. They are simply presented in the user’s field of view for easy and convenient access. Assisted reality devices may not garner the same level of attention as more fully fledged AR hardware, but they are often far cheaper while still offering users valuable hands-free access.
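The anchoring distinction can be shown by asking where a piece of content ends up relative to the user's head as the head turns. The sketch below makes several simplifying assumptions: a top-down 2D view, head rotation about the vertical axis only, -z as forward and positive yaw as a turn to the left. The panel position and function names are hypothetical.

```python
import math

HUD_LOCAL = (0.0, -1.0)  # hypothetical panel: 1 m straight ahead of the eyes


def world_to_head(point_xz, head_xz, head_yaw):
    """Express a world-space (x, z) point in the head's own frame (yaw only)."""
    dx = point_xz[0] - head_xz[0]
    dz = point_xz[1] - head_xz[1]
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    # Undo the head's rotation about the vertical axis.
    return (c * dx - s * dz, s * dx + c * dz)


def panel_in_view(kind, head_xz, head_yaw, anchor_xz=(0.0, -1.0)):
    """Where the content appears relative to the user's head.

    "assisted": head-locked; the same spot in view, whatever the head does.
    "ar":       world-anchored; a fixed world point that shifts in view as the head moves.
    """
    if kind == "assisted":
        return HUD_LOCAL
    return world_to_head(anchor_xz, head_xz, head_yaw)
```

Suppose the user turns their head 90° to the left. The assisted reality panel stays straight ahead at `(0.0, -1.0)`. The world-anchored AR object now sits to the user's right, at approximately `(1.0, 0.0)`.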

AR Form Factors

AR headsets come in many shapes and sizes. The form factor most of us are familiar with is smart glasses, which look like regular, if slightly bulkier, eyeglasses. You can usually find at least the battery and projection system squeezed into the arms of the glasses on either side.

This form factor bridges the consumer and corporate worlds thanks to its comfortably familiar design. In order of increasing size, next come smart glasses that are clearly built for industry. They are significantly bulkier than regular glasses and sometimes even have an attachment on one side: an arm that holds a display in your field of view. This display is either an opaque screen or a transparent rectangular pane or prism onto which the digital elements are projected. The third form factor is the headset, the largest of them all. It is usually confined to industry and is strapped or tightened to the user’s head.

Tracking Technology

Tracking in VR

Tracking three degrees of freedom (rotation) on VR headsets is relatively simple. It can be done with the kind of inertial sensors found in most smartphones: an accelerometer and a gyroscope, often supplemented by a magnetometer. Tracking six degrees of freedom is a more complicated problem that originally required external hardware to monitor a headset’s position from the outside (outside-in tracking).
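One common way to fuse such sensors into an orientation estimate is a complementary filter. It integrates the gyroscope for fast response and continually nudges the result toward the accelerometer's gravity-based estimate to cancel drift. The sketch below tracks pitch only. The axis convention, the blend factor `alpha` and the simulated readings are assumptions, and commercial headsets use more sophisticated fusion.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by gravity alone.

    Assumed axes: x forward, z up. A device at rest, pitched nose-up by theta,
    reads roughly (g*sin(theta), 0, g*cos(theta)) on its accelerometer.
    """
    return math.atan2(ax, math.hypot(ay, az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """One fusion step.

    pitch: previous estimate (rad); gyro_rate: pitch rate from the gyroscope
    (rad/s); accel: (ax, ay, az) in m/s^2; dt: seconds since the last sample.
    alpha is an assumed tuning value weighting the gyroscope term.
    """
    gyro_estimate = pitch + gyro_rate * dt   # responsive, but drifts over time
    accel_estimate = accel_pitch(*accel)     # noisy, but anchored to gravity
    return alpha * gyro_estimate + (1 - alpha) * accel_estimate


if __name__ == "__main__":
    # Simulated headset held still at 10 degrees of pitch, with a gyroscope
    # that wrongly reports a constant 0.01 rad/s of rotation (bias).
    g, theta = 9.81, math.radians(10)
    reading = (g * math.sin(theta), 0.0, g * math.cos(theta))
    estimate = 0.0
    for _ in range(2000):  # 20 s at 100 Hz
        estimate = complementary_filter(estimate, 0.01, reading, 0.01)
    print(f"estimated pitch: {math.degrees(estimate):.2f} degrees")
```

With these numbers, the estimate settles about 0.28° above the true 10°. Integrating the biased gyroscope alone would drift by 0.2 rad (roughly 11.5°) over the same 20 seconds.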

Thanks to advances in SLAM (Simultaneous Localization and Mapping) technology, modern six DoF VR headsets have cameras that note recognizable points in your physical environment (such as the corners of your dining table) and, combined with the three DoF sensor data, form an understanding of where you are in that environment and how you’re moving within it. When tracking technology like this is embedded within the headset, it is referred to as inside-out tracking.
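Real SLAM builds the map and estimates the headset's pose jointly, usually with nonlinear optimization over many frames. That is well beyond a short example. The sketch below isolates only the localization idea, under strong assumptions:

- the landmark map is already known;
- orientation has already been supplied by the three DoF sensors;
- the cameras measure each landmark's 3D offset directly.

The landmark names are hypothetical.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def estimate_position(map_points: Dict[str, Vec3], observed: Dict[str, Vec3]) -> Vec3:
    """Estimate the headset position from landmarks it recognizes.

    map_points: landmark positions in the room (world frame), e.g. table corners.
    observed:   the same landmarks as measured from the headset, already rotated
                into the world frame using the 3DoF orientation estimate.
    Each landmark yields one guess (world - observed); averaging reduces noise.
    """
    shared = [name for name in observed if name in map_points]
    if not shared:
        raise ValueError("no known landmarks in view")
    guesses = [
        tuple(m - o for m, o in zip(map_points[name], observed[name])) for name in shared
    ]
    return tuple(sum(axis) / len(guesses) for axis in zip(*guesses))
```

In this example, the headset sees two table corners 1.5 m ahead that the map places 2 m ahead. The estimate therefore puts the headset 0.5 m in front of the map origin: `estimate_position({"corner_a": (1, 0, -2), "corner_b": (-1, 0, -2)}, {"corner_a": (1, 0, -1.5), "corner_b": (-1, 0, -1.5)})` gives `(0.0, 0.0, -0.5)`.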

Tracking in AR

If you’re looking to anchor digital objects in the physical world convincingly, you can do this in three main ways:

- Marker — Marker-based tracking involves using a static pattern or image which acts as a visual cue for a device to place the digital object.

- Markerless — Markerless tracking uses an AR device’s sensors to map the physical environment, allowing a user to anchor a digital object to a certain point within an environment without the need for a visual marker.

- Location — This primarily uses an AR device’s GPS system to identify its location. Based on that, the device can place digital information in the right place in the user’s view. This is mainly used for macro-level AR experiences. For example, a user can point their phone around a crowded music festival to see where the toilets are. They can point it at a historical building, structure or landmark in the distance to get more information about it, or even at the night sky to identify different planets and stars.
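For location-based AR, the core calculation is the direction and distance from the device's GPS fix to a point of interest, compared against the compass heading to decide where (or whether) to draw it. The sketch below makes three assumptions: a spherical Earth (haversine formula), a simple pinhole camera model and a hypothetical 60° horizontal field of view. Real GPS and compass errors of several metres and degrees will dominate in practice.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; a spherical-Earth approximation


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (metres, haversine formula) and initial bearing
    (degrees clockwise from true north) from point 1 to point 2."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))
    y = math.sin(dlam) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlam)
    bearing = (math.degrees(math.atan2(y, x)) + 360) % 360
    return distance, bearing


def screen_x(device_heading, target_bearing, horizontal_fov=60.0):
    """Where a point of interest falls across the camera view (pinhole model):
    -1 is the left edge, 0 the centre, +1 the right edge; None if out of view.
    device_heading would come from the compass; the field of view is assumed."""
    delta = (target_bearing - device_heading + 180) % 360 - 180  # signed angle
    half = horizontal_fov / 2
    if abs(delta) > half:
        return None
    return math.tan(math.radians(delta)) / math.tan(math.radians(half))
```

An app would call `distance_and_bearing` with the phone's fix and each point of interest, then use `screen_x` to position a label (or hide it) as the user turns.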

Application Technology

XR experiences can be launched on devices through a dedicated app or via a web browser. These are referred to as native and web apps, respectively. AR/VR/XR web apps are sometimes specifically called WebAR/WebVR/WebXR apps. Both methods have pros and cons. Native apps generally support a greater level of functionality and run more smoothly when there is a lot of information to process, including high-quality graphics. WebXR apps are easier to distribute and offer a more seamless user experience, as nothing needs to be formally downloaded from a store and installed on a user’s device. Combined with the wide availability of smartphones, this makes WebAR in particular a popular way of distributing AR experiences. For the end user, it requires little more than a web link to access.

Input Technology

There are many ways of controlling an XR headset and its applications:

- Hand controllers

- Headset buttons

- Gaze

- Voice

- Hand tracking

Different headsets and software will support different methods.

Hand controllers are the most popular and familiar method for VR headsets. Almost every VR headset will ship with at least one (in the case of three DoF headsets) or two (for six DoF headsets). They usually come with a combination of buttons, thumbsticks, triggers and trackpads. Some of them can detect the position and pressure of your individual fingers allowing you to express an extensive set of gestures in the virtual world through the controller. This can be helpful when using tools or operating machines during training scenarios, or for enhancing non-verbal communication and building rapport during virtual meetings.

Voice is already an accepted method of interfacing with digital devices outside of XR, through smart speakers such as the Amazon Echo (with its Alexa assistant) and Google Home. It can also be used in some instances to control XR headset and application functionality.

Hand tracking is used on high-end AR headsets and is fast becoming a popular input method for VR as well. It was originally made possible by an attached system of cameras and infrared LEDs that could track the 3D position of your hands and fingers. The system recreated them digitally in real time and made them available in VR applications. That functionality is now built into some VR headsets.
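Once the hand has been reconstructed as a set of 3D joint positions, an application still has to turn those positions into commands. A common pattern is pinch-to-select. The sketch below assumes that some hand-tracking runtime provides fingertip positions in metres; the names and thresholds are hypothetical. It adds hysteresis (separate press and release thresholds) so that small tracking jitter does not make the pinch flicker on and off.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]

# Assumed thresholds; real systems tune these per device.
PINCH_ON_M = 0.02   # start a pinch when fingertips come within 2 cm
PINCH_OFF_M = 0.04  # release only once they are more than 4 cm apart


def update_pinch(is_pinching: bool, thumb_tip: Point3, index_tip: Point3) -> bool:
    """Turn tracked thumb and index fingertip positions into a click-like state."""
    gap = math.dist(thumb_tip, index_tip)
    if is_pinching:
        return gap < PINCH_OFF_M
    return gap < PINCH_ON_M
```

A gap of 3 cm keeps an existing pinch held but does not start a new one. This asymmetry is what filters out jitter near the threshold.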

2. Handheld Devices

Virtual Reality

Smartphones have been used with other accessories to create VR experiences. On their own, though, they are generally too small to effectively immerse users in virtual worlds, so these devices are primarily used for AR scenarios.

Some minimalist solutions exist to convert a smartphone into a basic VR viewing device through a pocket-sized set of foldable lenses that clip onto the phone. When an application is displayed in the right format, where the images are side-by-side on the phone, each eye receives one of the images through each lens, creating a stereoscopic effect in the same way that more substantial VR headsets achieve this. When high-end headsets are unavailable or unattainable, and the application only requires a brief glimpse, the pocket lens solution can be useful to provide stakeholders with a quick preview of products and environments in a more immersive manner.
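The side-by-side format amounts to two viewports on one screen, each rendered from a camera shifted slightly left or right. A minimal sketch follows, with three assumptions: the screen resolution in the example is hypothetical, 63 mm is used as a typical interpupillary distance (IPD) rather than a standard value, and the lens distortion correction that real viewers also apply is omitted.

```python
from dataclasses import dataclass


@dataclass
class EyeView:
    viewport: tuple      # (x, y, width, height) in pixels on the phone screen
    eye_offset_m: float  # horizontal camera offset from the centre of the head


def side_by_side_views(screen_w: int, screen_h: int, ipd_m: float = 0.063):
    """Split a landscape phone screen into left- and right-eye halves.

    Each half is rendered from a camera shifted by half the IPD, so the two
    images differ slightly and the brain fuses them into a 3D impression.
    """
    half_w = screen_w // 2
    left = EyeView((0, 0, half_w, screen_h), -ipd_m / 2)
    right = EyeView((half_w, 0, screen_w - half_w, screen_h), +ipd_m / 2)
    return left, right
```

For a hypothetical 2400 x 1080 screen, each eye gets a 1200 x 1080 viewport, and the two cameras are 63 mm apart.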

Augmented Reality

Using augmented reality on smartphones made sense once cameras were integrated. By using the live feed from the phone’s camera, you can overlay digital imagery and objects to create a basic augmented reality experience.

With the advent of Google’s ARCore and Apple’s ARKit — software development kits that enable augmented reality applications to be built — more advanced forms of augmented reality were made possible on smartphones. Using the same SLAM technology VR headsets use for inside-out tracking, an advanced AR-enabled smartphone can see your environment in three dimensions through its regular camera, recognize surfaces, and therefore place digital objects in context with the physical environment (for example, placing digital furniture in an office to assess how it looks before buying it) or provide information about the environment itself (for example, being able to measure the width of a recessed section of wall).
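Once a framework such as ARCore or ARKit has turned screen taps into 3D points on recognized surfaces (through hit testing), the wall-measuring example reduces to simple geometry. The sketch below assumes those world-space points, in metres, are already available and does not call either SDK. The `fits` helper and its clearance value are hypothetical.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]


def measure(p1: Point3, p2: Point3) -> float:
    """Straight-line distance in metres between two points the user tapped.
    p1 and p2 are assumed to be 3D hit-test results in world coordinates."""
    return math.dist(p1, p2)


def fits(gap_width_m: float, item_width_m: float, clearance_m: float = 0.01) -> bool:
    """Hypothetical helper: does an item fit the measured gap, leaving some
    clearance on each side?"""
    return item_width_m + 2 * clearance_m <= gap_width_m
```

If the two taps land on either side of a recessed section of wall 1.2 m apart, a 1.0 m cabinet fits. A 1.19 m one does not, once 1 cm of clearance is allowed on each side.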

3. Projection Systems

Cave Environments

Before headsets became the stereotypical representation of VR, projection systems were used in scientific and industrial applications to immerse users in a three-dimensional digital environment. These systems comprised a series of projectors that cast images onto the interior walls, floor and ceiling of a room. In conjunction with a set of positionally tracked 3D glasses, user movements in the room would cause the perspective of the digital environment to change accordingly.

A six DoF controller provided interaction capabilities. These systems were called CAVEs, a recursive acronym that stands for CAVE Automatic Virtual Environment. The name is also a nod to Plato’s “Allegory of the Cave” thought experiment, which discusses themes such as human perception, illusion and reality. The first CAVE was deployed at the University of Illinois at Chicago in 1992. Nowadays, the word CAVE is used colloquially as a generic term for an interior projection-based immersive environment.
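In a projection system of this kind, the perspective changes as users move. This is usually achieved by recomputing an off-axis (asymmetric) projection for each wall every frame, based on the tracked position of the user's glasses. The sketch below is simplified to a single flat wall aligned with the axes, and the wall dimensions are hypothetical. The four extents are the kind of values an asymmetric frustum, such as OpenGL's `glFrustum`, expects. Stereo rendering (one frustum per eye) is left out.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float


def wall_frustum(eye, wall_x=(-1.5, 1.5), wall_y=(0.0, 3.0), near=0.1):
    """Off-axis frustum for a flat wall lying in the plane z = 0.

    eye: tracked (x, y, z) head position in metres, where z > 0 means the
    viewer stands in front of the wall. The wall edges, seen from the eye,
    are scaled onto the near plane to give the frustum extents.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the wall")
    scale = near / ez
    return Frustum(
        left=(wall_x[0] - ex) * scale,
        right=(wall_x[1] - ex) * scale,
        bottom=(wall_y[0] - ey) * scale,
        top=(wall_y[1] - ey) * scale,
        near=near,
    )
```

A user standing centred 1.5 m from the wall gets a symmetric frustum. If they step 1 m to the right, the frustum becomes lopsided: the right extent shrinks and the left extent grows. That shift produces the parallax that makes the projected scene appear solid.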

CAVE systems have the advantage of being able to support multiple users without isolating them from the physical environment, as VR headsets do. Users are able to see their own physical bodies as well as those of others while immersed in a digital environment. With the right expertise and software, projectors can even be used in differently sized and oddly shaped rooms.

Dome interiors have become a favorite setting for projection systems because of the smooth 360-degree digital environment they create, which multiple users can inhabit. These systems comprise a number of high-quality projectors, a powerful computer system and accompanying software. They require a dedicated room, and once installed, they cannot easily be moved. Despite the complexity of CAVEs, most of the equipment can be hidden out of sight. Even the projectors can be rear-projection units that cast an image from behind the (translucent) walls.

Projection Mapping

A niche technology, projection mapping is the act of projecting digital imagery onto the surface of objects. It is sometimes used to create an artistic visual effect, but it can also present information in the right place. This technique is also known as spatial augmented reality, as it is a form of AR that enhances a physical environment with digital information.
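To make imagery land in the right place on a flat surface, projection-mapping tools commonly warp the source image with a planar homography. This is a 3x3 transform fitted from four corner correspondences, in this case between the image and the surface as it appears in projector pixels. The sketch below shows that standard technique as an illustration, not a description of any particular product. The corner coordinates in the example are hypothetical.

```python
def _solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corners (e.g. three of them collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src, dst):
    """Fit H (with its last entry fixed to 1) mapping 4 source corners onto 4 target corners."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    return _solve(a, b) + [1.0]


def warp(h, x, y):
    """Map a point from image coordinates into projector coordinates."""
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)
```

To use it, fit `homography` from the unit square of the source image to the four projector pixels where the surface's corners appear. Then pass every image point through `warp`, so the picture lands squarely on the surface even when the projector is off to one side.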

As with other projection techniques, projection mapping removes the need for individuals to wear a headset or take out their mobile phone, as the technology is contained within the environment. However, that is also its disadvantage. The effect is only available in a room in which it has been installed, and portability can be an issue. As a result, projection mapping is often seen in product advertising, art installations, events and museums, where the solution, once implemented, is not expected to be moved.

Projection mapping can be static or interactive. Users can tap on parts of a projection to see more information about an exhibit, or picking up a shoe in a retail store can activate a new projection offering customization options, for example.

4. Large Screens

Digital worlds have traditionally been displayed on screens. In the consumer world, this includes movies and video games that we watch and play on a television. In the business world, we might be examining 3D models and digital twins (digital recreations of real equipment and environments) on our laptops. This way of viewing has obvious disadvantages. Immersion is weaker than when users are absorbed in an environment at the right scale within a VR headset. There is also limited ability to look around the world naturally; instead, a keyboard and mouse are used to navigate the environment.

Large screens have been used for some time in academia and industry to achieve immersion. Many provide a good enough level of presence to qualify as a virtual reality experience, albeit a more limited one. This is part of the reason why many people are drawn to IMAX theaters, whose screens are many times larger than regular theater screens.
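Screen size matters for immersion largely because of how much of the visual field the image covers. A simple way to compare screens is the horizontal angle each one subtends at the viewer's eye. The sketch below assumes the viewer sits on the screen's centre line. The dimensions in the note after it are hypothetical, not measurements of any particular theater.

```python
import math


def horizontal_fov_deg(screen_width_m: float, viewing_distance_m: float) -> float:
    """Horizontal angle (degrees) a flat screen fills for a viewer on its centre line."""
    return math.degrees(2 * math.atan(screen_width_m / (2 * viewing_distance_m)))
```

A 0.3 m-wide laptop screen viewed from 0.6 m fills only about 28°. A 20 m-wide screen viewed from 15 m fills about 67°.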

As well as providing a powerful visual experience with sharp and vivid images, IMAX theaters also offer a high-quality audio system that contributes equally to the immersive experience. But given the size and complexity of such a setup, this immersion comes at a cost, both financially and in terms of portability, which is understandably limited compared to a VR headset.

XR Devices in a Snap

- There are four different types of XR device: headsets, handheld devices, projection systems and large screens. Each has its own advantages and disadvantages.

- XR headsets can be portable and provide deep immersion, but that comes at the cost of isolating the user from the physical world, making it uncomfortable for some.

- Many handheld devices are capable of running advanced AR applications; however, they occupy the user’s hand, making them inefficient for some applications and impractical for lengthy use.

- VR projection systems create a good level of immersion and can support multiple users without isolating them from the real world but can be complex, costly and immovable.

- AR projection systems require no preparation or hardware setup from the user, making them very convenient to access, but only when the user is in the same location as the system. As a result, their applications are more limited than those of handheld devices or headsets, and they cannot easily be moved to other locations.

- Screens are a technology we are used to and so are very comfortable with. However, they do not provide a strong sense of immersion unless they are physically large, and at that size they suffer from limited portability and high costs.

* * *

This is an excerpt from REALITY CHECK by Jeremy Dalton, ©2021, reproduced and adapted with permission from Kogan Page Ltd.