The origins of deep learning and neural networks date back to the 1950s, when British mathematician and computer scientist Alan Turing predicted the future existence of a supercomputer with human-like intelligence and scientists began building rudimentary simulations of the human brain. Here’s an excellent summary of how that process worked, courtesy of the very smart MIT Technology Review:

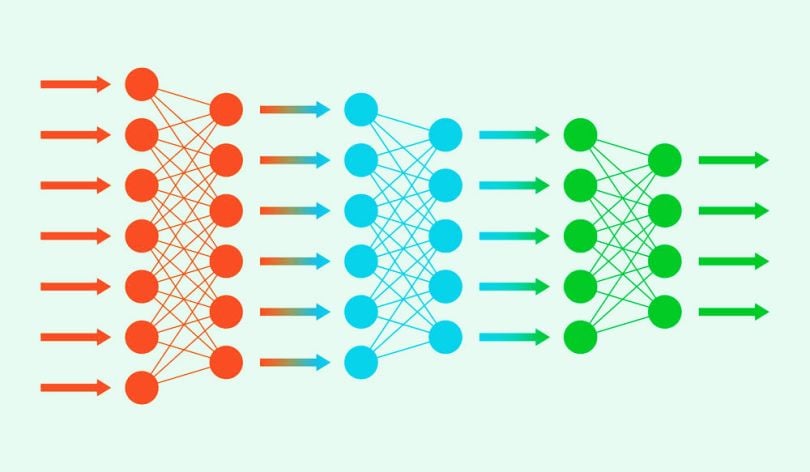

A program maps out a set of virtual neurons and then assigns random numerical values, or “weights,” to connections between them. These weights determine how each simulated neuron responds—with a mathematical output between 0 and 1—to a digitized feature such as an edge or a shade of blue in an image, or a particular energy level at one frequency in a phoneme, the individual unit of sound in spoken syllables. Programmers would train a neural network to detect an object or phoneme by blitzing the network with digitized versions of images containing those objects or sound waves containing those phonemes.

If the network didn’t accurately recognize a particular pattern, an algorithm would adjust the weights. The eventual goal of this training was to get the network to consistently recognize the patterns in speech or sets of images that we humans know as, say, the phoneme “d” or the image of a dog. This is much the same way a child learns what a dog is by noticing the details of head shape, behavior, and the like in furry, barking animals that other people call dogs.
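To make that description a little more concrete, here is a minimal Python sketch of a single simulated neuron. Everything in it is an illustrative assumption rather than anything from the MIT Technology Review piece: the toy features (`has_fur`, `barks`, `has_wings`), the function names, the learning rate, and the simple error-driven update rule (the standard gradient step for one logistic unit). It starts with random weights, produces an output between 0 and 1, and nudges the weights whenever the output misses the label.

```python
import math
import random


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, features):
    """Weighted sum of the features, passed through the sigmoid."""
    total = bias + sum(w * f for w, f in zip(weights, features))
    return sigmoid(total)


def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Start from random weights, then adjust them after every example."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    n_features = len(examples[0][0])
    weights = [rng.uniform(-1, 1) for _ in range(n_features)]
    bias = rng.uniform(-1, 1)

    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            # Move each weight in proportion to the error and its input.
            for i, f in enumerate(features):
                weights[i] += learning_rate * error * f
            bias += learning_rate * error
    return weights, bias


# Hypothetical toy data: [has_fur, barks, has_wings] -> 1 if "dog", else 0.
EXAMPLES = [
    ([1, 1, 0], 1),  # furry and barks: dog
    ([1, 0, 0], 0),  # furry, silent: not a dog
    ([0, 1, 0], 0),  # barks, no fur: not a dog
    ([0, 0, 1], 0),  # has wings: not a dog
]

weights, bias = train(EXAMPLES)
for features, label in EXAMPLES:
    print(features, label, round(predict(weights, bias, features), 3))
```

After training, the furry, barking example should score well above 0.5 and the others well below it. Real networks stack many such units into layers and learn from far larger data sets, but the loop of guess, compare, and adjust is the same idea.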

Here's a quick look at the history of deep learning and some of the formative moments that have shaped the technology into what it is today.

Early Days

The first serious deep learning breakthrough came in the mid-1960s, when Soviet mathematician Alexey Ivakhnenko (helped by his associate V.G. Lapa) created small but functional neural networks.

When Was Machine Learning Invented?

In the early 1980s, John Hopfield’s recurrent neural networks made a splash, followed by Terry Sejnowski’s program NETtalk, which could pronounce English words.

Rise of Neural Networks & Backpropagation

In 1986, Geoffrey Hinton, then a Carnegie Mellon professor and computer scientist, later a Google researcher and long known as the “Godfather of Deep Learning,” was among several researchers who helped make neural networks cool again, scientifically speaking, by demonstrating that multilayer neural networks could be trained using backpropagation for improved shape recognition and word prediction. Hinton went on to help popularize the term “deep learning” in 2006.

Yann LeCun’s invention of a machine that could read handwritten digits came next, trailed by a slew of other discoveries that mostly fell beneath the wider world’s radar. Hinton and LeCun were later among three AI pioneers to win the 2018 Turing Award, announced in 2019.

Breakthroughs & Widespread Adoption

As they do now, the media played up developments viewers could better relate to — such as computers that learned to play backgammon, the vanquishing of world chess champion Garry Kasparov by IBM’s Deep Blue and the dominance of IBM’s Watson on Jeopardy!

By 2012, deep learning had already been used to help people turn left at Albuquerque (Google Street View) and inquire about the estimated average airspeed velocity of an unladen swallow (Apple’s Siri). In June of that year, Google linked 16,000 computer processors, gave them Internet access and watched as the machines taught themselves (by watching millions of randomly selected YouTube videos) how to identify...cats. What may seem laughably simplistic, though, was actually quite earth-shattering as scientific progress goes.

Despite the heady achievement — proof that deep learning programs were growing faster and more accurate — Google’s researchers knew it was only a start, a sliver of the iceberg’s tip.

“It is worth noting that our network is still tiny compared to the human visual cortex, which is a million times larger in terms of the number of neurons and synapses,” they wrote.

About four months later, Hinton and a team of grad students won first prize in a contest sponsored by the pharmaceutical giant Merck. The software that garnered them top honors used deep learning to find the most effective drug agent from a surprisingly small data set “describing the chemical structure of thousands of different molecules.” Folks were duly impressed by this important discovery in pattern recognition, which also had applications in other areas like marketing and law enforcement.

“The point about this approach is that it scales beautifully,” Hinton told The New York Times. “Basically you just need to keep making it bigger and faster, and it will get better. There’s no looking back now.”