In This Article

- What is Deep Learning?

- How Deep Learning Works

- Scope & Impact of Deep Learning

- Challenges in Deep Learning

- Future of Deep Learning

It's a busy day in 2039 and you’re watching a movie while being shuttled around by one of the countless autonomous vehicles that prowl the world’s roadways. It brakes and accelerates when necessary, avoids smashing into things (other cars, cyclists, stray cats), obeys all traffic signals and always stays within the lane markers.

Not long ago such a scenario would have seemed ludicrous. Now it’s ever more firmly in the realm of possible. In fact, autonomous vehicles may one day become so highly aware of their surroundings that accidents will be virtually nonexistent. Getting to that point, however, requires clearing a host of hurdles using a variety of complex processes — including deep learning. But how far can the technology take us?

"Deep learning is solving a problem and is a useful tool for us," says Xiang Ma, a machine learning expert and veteran research manager at HERE Technologies in Chicago who works on developing sophisticated navigation systems for autonomous vehicles. "And we know it's working. But it could just be a stop-gap technology. We don’t know what’s next."

What is Deep Learning?

A newly re-invigorated form of machine learning, which is itself a subset of artificial intelligence, deep learning employs powerful computers, massive data sets, “supervised” (trained) neural networks and an algorithm called back-propagation (backprop for short) to recognize objects and translate speech in real time by mimicking the layers of neurons in a human brain’s neocortex.

Deep Learning: A Quick Explanation

Deep learning has been around since the 1950s, but its elevation to star player in the artificial intelligence field is relatively recent. In 1986, pioneering computer scientist Geoffrey Hinton — now a Google researcher and long known as the "Godfather of Deep Learning" — was among several researchers who helped make neural networks cool again, scientifically speaking, by demonstrating that more than just a few of them could be trained using backpropagation for improved shape recognition and word prediction. By 2012, deep learning was being used in everything from consumer applications like Apple's Siri to pharmaceutical research.

“The point about this approach is that it scales beautifully,” Hinton told the New York Times. “Basically you just need to keep making it bigger and faster, and it will get better. There’s no looking back now."

How Deep Learning Works

Making the Next Generation Self-Driving Car

Ma's team at HERE creates high-definition maps that greatly enhance a vehicle’s onboard perception capabilities and build the navigation system for future rides. Deep learning is crucial to that process.

“Some people say we can live without HD maps, that we can just put a camera on the vehicle,” Ma says after proudly leading a tour of his office space and showing off the company’s futuristic coffee machine (try the latte!). “But no matter how good your camera is, it will always have a failure case. No matter how good your algorithm is, you always miss something. So in the case that your sensors are broken, the map is your last resort.”

Through their development of automated algorithms and a scalable data pipeline (a system — in this case tens of thousands of cloud-based computers running parallel algorithms — that can handle an increasing amount of input data while still performing as expected sans crashing or slowing down), he and his team build high-definition maps using various data sources culled by sensors on the cars of HERE’s principal owners BMW, Mercedes and Audi.

Because it’s important to start with precise training data, Ma explains, human labeling is a crucial first step in the process. Street-view imagery and Lidar (a radar-like detection system that uses laser light to collect accurate 3D shapes of the world) combined with lane marking information that’s at first coded manually is fed into a deep learning engine that’s repeatedly (“iteratively,” goes the jargon) improved and retrained. The deep learning model is then “deployed to” (used in — more jargon) in a production pipeline to automatically detect lane markings down to the centimeter. Humans again enter the equation to verify that all measurements are correct.

Ideally, Ma says, many more vehicle manufacturers will consent to sharing their sensor data (but no personally identifiable information that would raise privacy concerns) so autonomous vehicles can learn from each other and benefit from live updates. For instance, say there’s a six-car pile-up five miles ahead of your current location. The first vehicle to spot that pile-up would relay the information back to a central source, HERE, which would in turn relay it to every vehicle approaching the accident site. It’s still a work in progress, but Ma is confident of its efficacy.

The Scope & Impact of Deep Learning

Revolutionary or Just a Useful Tool?

In a 2012 New Yorker piece, NYU professor and machine learning researcher Gary Marcus expressed a reluctance to hail deep learning as some kind of revolution in artificial intelligence. While it was “important work, with immediate practical applications,” it nonetheless represented “only a small step toward the creation of truly intelligent machines.”

“Realistically, deep learning is only part of the larger challenge of building intelligent machines,” Marcus explained. “Such techniques lack ways of representing causal relationships (such as between diseases and their symptoms), and are likely to face challenges in acquiring abstract ideas like 'sibling' or 'identical to.' They have no obvious ways of performing logical inferences, and they are also still a long way from integrating abstract knowledge, such as information about what objects are, what they are for, and how they are typically used.”

It was like Thor’s golden hammer. Where it applies, it’s just so much more effective. In some cases, certain applications can turn up the dial multiple notches to get super-human performance.

Now, six years later, deep learning is more sophisticated thanks largely to increased compute power (graphics processing, in particular) and algorithmic advances (particularly in the field of neural networks). It’s also more widely applied — in medical diagnostics, voice search, automatic text generation and even weather prediction, to name a handful of areas.

“The potency of it was really when people discovered that you could apply deep learning to a lot of problems,” says Guild AI founder Garrett Smith, who also runs the machine learning organization Chicago ML. “It was like Thor’s golden hammer. Where it applies, it’s just so much more effective. In some cases, certain applications can turn up the dial multiple notches to get super-human performance.”

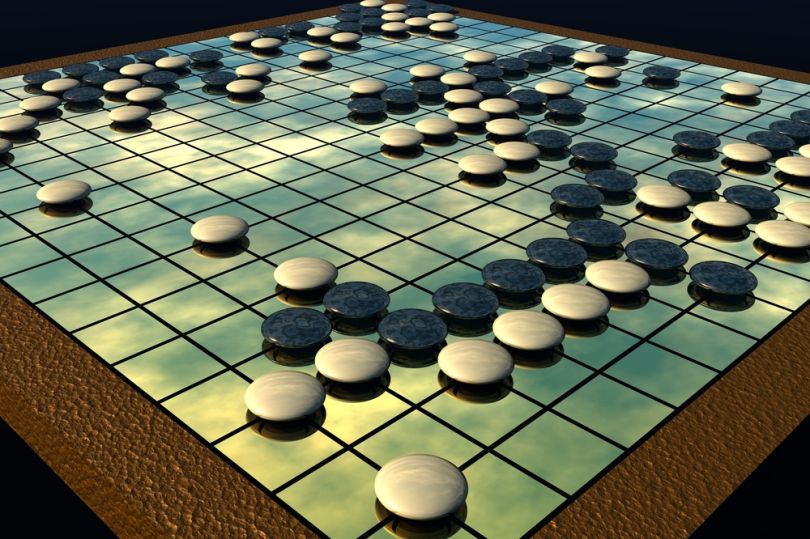

Google’s nine-year old DeepMind, which it acquired in 2014, is a leader in that realm, and its goal is nothing less than to “solve intelligence” by melding machine learning and neuroscience. The British-based company’s studies in “deep reinforcement learning” — a combination of neural network-based deep learning and trial-and-error-based reinforcement learning — led to the development of software called AlphaGo and its more advanced sibling AlphaGo Zero, both of which handily pummeled human world champions in the ancient Chinese game of Go in 2016.

Recently, DeepMind and the nonprofit AI research organization OpenAI joined forces to publish a paper titled, “Reward learning from human preferences and demonstrations in Atari.” Their thesis in short: human communication of objectives works better than a system of rewards when it comes to solving complex real-world problems. In that vein, the companies employed a combination of human feedback and a process called “reinforcement learning optimization” to achieve superhuman performance in two of the games and baseline-exceeding results in the other seven. Meaning, essentially, that machines learned to play video games by watching people play video games and, in two cases, vastly outperformed their human counterparts. And we can’t forget Facebook, whose social media and AI juggernaut devised the technology behind DeepFace, which can identify faces in photos with human-like accuracy.

Science, too, has continued to benefit from machine (deep) learning.

“There is now a more organic connection between machine learning and science,” says University of Chicago computer science professor Risi Kondor. “It's not just an annoying thing you have to do on the side because there’s too much data on your hard drive; it’s really becoming an integral part of the scientific discovery process.”

Molecular dynamics — which involves simulating the trajectories of atoms and molecules to learn how a solid or a biological system behaves under stress, or how drug molecules bind to their receptors — is one example. Before long, experts predict, molecular design will be fully automated, which will speed up drug development.

The Promise of Neural Networks

The biggest recent shift in deep learning is the depth of neural networks, which have progressed from a few layers to many hundreds of them. More depth means a greater capacity to recognize patterns, which augments object recognition and natural language processing. The former has more far-reaching ramifications than the latter.

“[Language] translation is a big deal, and there have been amazing applications,” Smith says. “You’ve got more flexibility in terms of networks being able to make predictions on languages they've never seen before. But there’s a limit to the general applicability of translation. We've been doing it for a while, and it's not a huge game changer. The vision stuff is what’s really driving a lot of remarkable innovation. Putting a detector on a car so the car can accurately judge its environment — that changes everything.”

Challenges in Deep Learning

How Do We Solve the Data Problem?

The “glaring elephant in the room” when it comes to deep learning, Smith says, is data — not so much quality as quantity, as machine/deep learning models are “remarkably resilient to lousy data.”

“You’re basically representing knowledge, the ability to do complex processing,” he says. “To do that, you need more neurons and more capacity. You need data that isn’t generally available.”

Which means pre-trained models (created by someone else and readily available online) and public datasets (ditto) won’t cut it. That’s where the multi-billion-dollar machine learning behemoths have a distinct advantage.

One-Shot Learning & NAS: A Powerful Pairing

A couple of potentially transformative developments, however, might serve to make deep learning quicker and more egalitarian. A crucial one is the ability for models to learn using far less data — aka “one-shot learning,” which is still in its nascency.

That’s huge, Smith says.

There’s also “neural architecture search” (NAS), whereby an algorithm finds the best neural network architecture. Its combination with one-shot learning makes for a powerful pairing.

GANs: The Cat & Mouse Approach

In the quest to outperform deep learning, there’s been considerable hubbub around Generative Adversarial Networks, too. Typically referred to as GANs, they consist of two neural nets (a “generator” net that creates new data and a “discriminator” one that decides if that data is authentic) that are pitted against each other to affect something akin to artificial imagination. As one explanation goes:

“You can think of a GAN as the combination of a counterfeiter and a cop in a game of cat and mouse, where the counterfeiter is learning to pass false notes, and the cop is learning to detect them. Both are dynamic; i.e. the cop is in training, too (maybe the central bank is flagging bills that slipped through), and each side comes to learn the other’s methods in a constant escalation.”

AutoML: A New Way of Doing Deep Learning?

Then there’s the application of machine learning to machine learning. Called AutoML, it’s rooted in a “learn-to-learn” instruction that prompts computers, through a learning process, to devise breakthrough architectures (rules and methods) on their own.

“Many people are calling AutoML the new way of doing deep learning, a change in the entire system,” a recent essay on towarddatascience.com explains. “Instead of designing complex deep networks, we’ll just run a preset NAS algorithm. This idea of AutoML is to simply abstract away all of the complex parts of deep learning. All you need is data. Just let AutoML do the hard part of network design! Deep learning then becomes quite literally a plugin tool like any other. Grab some data and automatically create a decision function powered by a complex neural network.”

The Future of Deep Learning

Evolution or Extinction?

But while Kondor, Smith, Ma and others appreciate the progress deep learning has made, they’re also realistic — in Ma’s case, matter-of-factly fatalistic — about its limits.

“These tasks where deep learning has really been spectacularly powerful are exactly the types of tasks that computer scientists have been working on for a long time because they're well-defined and there's a lot of commercial interest behind them,” Kondor says. “So it’s true that object recognition is completely different than what it was 12 years ago, but it’s still just object recognition; it’s not exactly high-level, deep cognitive tasks. To what extent we’re actually talking about intelligence is a matter of speculation.”

In a Medium essay published last December and titled, “The deepest problem with deep learning,” Gary Marcus offered an updated take on his 2012 New Yorker examination of the subject. In it, he referenced an interview with University of Montreal computer science professor Yoshua Bengio, who suggested the “need to consider the hard challenges of AI and not be satisfied with short-term, incremental advances. I’m not saying I want to forget deep learning. On the contrary, I want to build on it. But we need to be able to extend it to do things like reasoning, learning causality and exploring the world in order to learn and acquire information.”

Marcus “agreed with virtually every word" and when he published the scientific paper “Deep Learning: A Critical Appraisal” in January 2018, his thoughts on why problems with deep learning are impeding the development of artificial general intelligence (AGI) caused an online backlash. In a string of tweets, Thomas G. Dietterich, Oregon State University distinguished professor emeritus and former President of the Association for the Advancement of Artificial Intelligence, defended deep learning and noted that it “works better than anything GOFAI [Good Old-Fashioned Artificial Intelligence] ever produced.”

Still, even the "Godfather of Deep Learning" has changed his tune, telling Axios that he has become “deeply suspicious” of back-propagation, which underlies deep learning.

“I don’t think it’s how the brain works,” Hinton said. “We [humans] clearly don’t need all the labeled data.”

His solution: “throw it all away and start again.”

Are such drastic measures really necessary, though? It may just be, as Facebook AI Research Director Yann LeCunn told VentureBeat, that deep learning should ditch the popular but sometimes problematic coding language Python in favor of one that’s simpler and more malleable. Then again, LeCun added, perhaps new hardware is needed. Whatever the case, one thing is clear: deep learning must evolve or risk dying out. Although achieving the former, insiders say, doesn’t preclude the latter.

The Human Brain is Complex. Deep Learning is Not.

“Current deep learning is just a data-driven tool,” HERE’s Ma says. “But it’s definitely not self-learning yet.”

Not only that, but no one yet knows how many neurons are necessary to make it self-learning. Also, from a biological standpoint, relatively little is known about the human brain — certainly not enough to create a system that comes anywhere close to mimicking it. At this point, Ma says, even his three-year-old daughter has deep learning beat.

“If I show her a [single] picture of an elephant and then later she sees a different picture of an elephant, she can immediately recognize it’s an elephant because she already knew the shape and can imagine it in her brain. But deep learning would fail at this problem, because it lacks the capacity to learn from a few samples. We’re still relying on massive amounts of training data.”

For now, Ma says, deep learning is merely a useful method that can be deployed to solve various problems. As such, he thinks, its extinction isn’t imminent. It is, however, probable.

“As soon as we know what’s next, we'll switch to that. Because this is not the ultimate solution.”