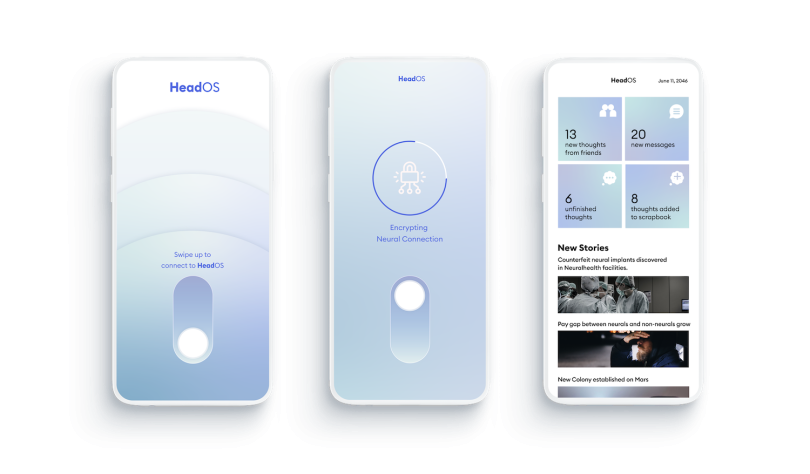

Picture this: It’s June 11, 2046 and a young designer, Vance, wakes up and puts on an earpiece called Eva. The device, a brain-computer interface (BCI) of the future, decodes neural signals in his brain. Using only his thoughts, he asks the device to report his daily notifications and 13 new “thought messages” appear on his phone.

Later, at work, a barrage of notifications is announced through the earpiece until he asks that all except phone calls and messages be silenced until 11:30 a.m. Using his mind to operate an imagined desktop application called Neural Sculptor, he designs a three-dimensional animated figure, mentally narrating the creation of the eyes, ears, hair, mouth and beard.

Back at home, with Eva’s voice as a guide, he cooks ratatouille and makes plans with a friend. Before signing off for the evening, he reads part of a book and silently asks Eva to save inspirational passages. She tells him, “Based on your serotonin levels, the most impactful quote was, ‘You can’t wait for inspiration, you have to go after it with a club.’”

This scenario is pure fantasy, of course — a speculative YouTube video about the future of BCIs created by the product design studio Card79. But Afshin Mehin, design director at the company, which has worked on everything from the industrial design of implantable brain-computer interfaces for Elon Musk’s Neuralink to futuristic wearable tech for companies like Lululemon, said it’s a future that’s starting to come into focus.

“I think the implications are super broad and expansive at this point because we have a lot of imagination about what BCIs could do,” Mehin said. “And I think this is a fun time to imagine what’s possible.”

If Mehin’s predictions come true, as the uses of brain-computer interfaces progress from highly regulated clinical and experimental research trials for people with neuromuscular conditions — such as spinal cord injuries, amyotrophic lateral sclerosis (ALS), cerebral palsy and brain stem stroke — to public health and consumer applications, UX and UI designers will play an important role in imagining their potential uses and ethical implications.

And indeed, the results of recent research demonstrations are impressive. Last month, a team at the University of California, San Francisco (UCSF), led by neurosurgeon Edward Chang, used a high-density electrode array to decode words from brain activity. As reported in a study published in the New England Journal of Medicine, the trial’s first participant was a 36-year-old man who experienced a stroke that left him unable to form intelligible words. With an electrode array surgically implanted in his sensorimotor cortex, an area of the brain involved in tactile perception and the planning and execution of physical movement, the man was able to form words on a computer screen at a rate of roughly 15 words per minute.

While BCIs take many forms and target different brain regions, the subdural implant Chang’s team used maps groups of neurons linked to muscle movements in the vocal tract. The researchers asked the participant, dubbed Bravo-1, to imagine saying 50 common words, each almost 10,000 times, according to a report in MIT Technology Review.

By training a deep learning model to detect and classify words by neural signals, the researchers could correctly identify Bravo-1’s words with 47 percent accuracy. When they fed his sentences through a natural language model that predicts the probability of word sequences from their syntax and usage — think auto-correct and auto-complete — the accuracy rate jumped to 75 percent.
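To make the effect of the language model concrete, here is a toy sketch in Python. It is not the UCSF team's code: the four-word vocabulary, the classifier probabilities, the word-pair (bigram) table and the `FLOOR` value are all invented for illustration. It contrasts picking the classifier's top word at each position with a Viterbi search that also weighs how likely each word is to follow the previous one.

```python
import math

# Hypothetical classifier output: for each attempted word, a probability
# for every entry in a tiny made-up vocabulary.
classifier_probs = [
    {"i": 0.60, "am": 0.20, "thirsty": 0.10, "family": 0.10},
    {"i": 0.10, "am": 0.35, "thirsty": 0.15, "family": 0.40},
    {"i": 0.10, "am": 0.10, "thirsty": 0.45, "family": 0.35},
]

# Hypothetical bigram model: P(next word | previous word).
BIGRAM = {("i", "am"): 0.8, ("am", "thirsty"): 0.6}
FLOOR = 0.05  # assumed probability for any word pair not listed above


def greedy_decode(probs):
    """Pick the classifier's most likely word at each position."""
    return [max(step, key=step.get) for step in probs]


def viterbi_decode(probs, bigram, floor=FLOOR):
    """Find the word sequence that maximizes classifier x bigram probability."""
    # best[word] = (log-probability of the best path ending in word, path)
    best = {w: (math.log(p), [w]) for w, p in probs[0].items()}
    for step in probs[1:]:
        new_best = {}
        for word, p in step.items():
            candidates = [
                (score + math.log(bigram.get((prev, word), floor)) + math.log(p),
                 path + [word])
                for prev, (score, path) in best.items()
            ]
            new_best[word] = max(candidates, key=lambda c: c[0])
        best = new_best
    return max(best.values(), key=lambda c: c[0])[1]


print("classifier only:", " ".join(greedy_decode(classifier_probs)))
print("with language model:", " ".join(viterbi_decode(classifier_probs, BIGRAM)))
```

In this made-up example the classifier alone outputs "i family thirsty," while the combined search recovers "i am thirsty," because the word-pair probabilities outweigh the classifier's slight preference for "family."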

Still, for all the promise BCIs hold for enhancing communication in people with severe paralysis, there are plenty of kinks to be worked out.

In the UCSF study, the system’s performance is restricted to a highly limited vocabulary, nowhere near the more than 170,000 words in the English language. Plus, the system took 22 hours of brain recordings across 48 sessions to produce.

“I don’t want to downplay it by calling it a baby step,” Dean Krusienski, a professor of biomedical engineering at Virginia Commonwealth University who has spent decades studying BCIs, said. “It’s a very major advance, but there’s still a long way to go to achieve more natural verbal communication.”

How Well Can We Interpret Brain Signals Outside the Skull?

For Facebook, which helped fund the project led by Chang, the study apparently failed to provide compelling evidence to move forward with a separate but related project: an AR/VR headset that would let users send text messages by thought dictation. In a blog post where the company expressed its goal in funding the research — “to determine whether a silent interface capable of typing 100 words per minute is possible, and if so, what neural signals are required” — it announced it was discontinuing prototype development of the headset in favor of a wrist-based product with a more conceivable path to market.

The bigger setback for Facebook, though, may be that an optical headset of the type the company envisioned for consumer use is far different from a prosthetic surgically inserted inside the brain.

“It’s still like listening to the stadium a block away,” Cynthia Chestek, an associate professor who researches brain-machine interfaces at the University of Michigan, said of the former. “You can tell something’s happening, but you wouldn’t attempt to overhear a conversation.”

When neurons fire, she explained, they create “a puff of voltage.” The closer a device is to the neurons, the better the data. Yet, “the signal falls off [in a ratio of] one over the distance, which is a super, super steep fall off,” Chestek said. “The reason you can record anything at all from outside of the head is because lots of things are happening at the same time.”
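As a rough illustration of the falloff Chestek describes, the Python sketch below takes her "one over the distance" description at face value. The distances (0.1 mm for an electrode sitting next to a neuron, 20 mm for a sensor outside the head) are hypothetical round numbers, and treating synchronized neurons as identical sources whose signals simply add up is a simplifying assumption, not a claim about real tissue.

```python
def relative_amplitude(distance_mm, reference_mm=0.1):
    """Signal amplitude at distance_mm relative to reference_mm,
    under a simple 1/distance model (an assumption for illustration)."""
    if distance_mm <= 0 or reference_mm <= 0:
        raise ValueError("distances must be positive")
    return reference_mm / distance_mm


def synchronized_sources_needed(distance_mm, reference_mm=0.1):
    """How many identical neurons firing together would be needed at
    distance_mm to match one neuron measured at reference_mm, assuming
    their signals add linearly."""
    return round(distance_mm / reference_mm)


# Hypothetical distances: next to the neuron, 1 mm away, outside the head.
for d in (0.1, 1.0, 20.0):
    print(f"{d:>5} mm: {relative_amplitude(d):.4f} of the reference signal, "
          f"about {synchronized_sources_needed(d)} synchronized neuron(s) to match")
```

Under these assumptions, a single neuron's signal is a tiny fraction of its original strength by the time it reaches the scalp, which is why only large groups of neurons acting in concert register externally.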

So what can you measure externally?

“For example, from outside the scalp you can determine what side of the brain a seizure is on or tell what sleep cycle somebody is in,” Chestek said.

These deductions can be instructive, but they’re a far cry from deciphering signals from individual neurons to help people type telepathically at 100 words per minute.

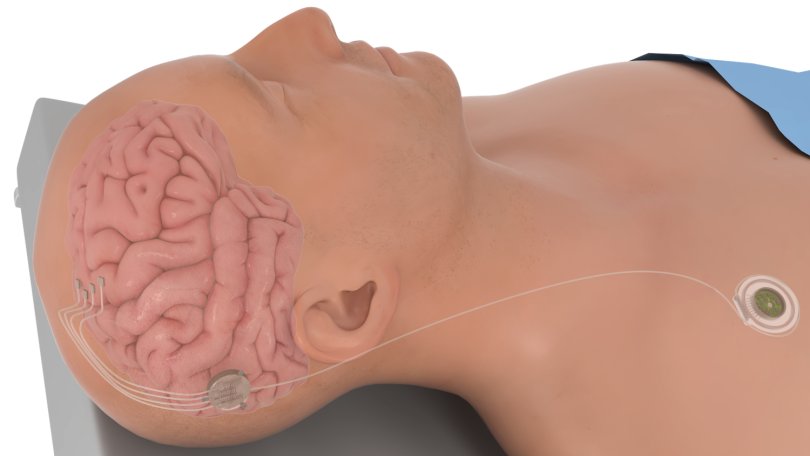

On the other hand, brain implants are unlikely to take hold as a consumer option in the near future due to U.S. Food and Drug Administration (FDA) regulations and the surgical risks involved. Invasive procedures like electrocorticography, as reported in the McGill Daily, “usually involve the implantation of electrodes epidurally (under the skin), subdurally (under the scalp) or intracortically (recording within the cerebral cortex).” These risks, the report notes, include infections, hemorrhage, tissue damage, personality changes and immune reactions that diminish the device’s effectiveness.

Still, for neuroscientists developing BCIs and testing their potential to restore communication and mobility in people who have lost bodily movement due to paralysis or neurodegenerative disease, the field is full of promise.

“A lot of really exciting stuff is happening,” Chestek said. “People are finding ways of recording or stimulating thousands of individual neurons. People are also figuring out how to create electrodes that are smaller than neurons so they can go into the nerves and not do damage. So everybody’s excited.”

As an illustration of what’s possible, Krusienski pointed me to a 2012 video of Jan Scheuermann, a woman with quadriplegia, who used her mind, assisted by an implanted BCI, to control a robotic arm to raise a chocolate bar to her mouth and take a bite.

“One small nibble for a woman. One giant bite for BCIs,” Scheuermann said.

And after that breakthrough and similar demonstrations at universities across the country, the field has continued to advance — with roughly 30 people implanted to date. Just last year, Chestek and her colleagues trained electrode-implanted monkeys to telepathically operate distinct digits of their hands — a potential pathway to use BCIs to animate individuated finger movements in people with artificial hands.

A ‘Home Assistant That Lives in the Brain’

Developments such as these are encouraging to Mehin. At San Francisco Design Week, he premiered Card79’s “Day in the Mind” video during a panel discussion about the future UX of BCIs. Speaking to me by phone after the event, he said one possibility is that a BCI could serve as a “home assistant that lives in the brain,” giving people the capacity to do things they already do, like ordering food or composing a memo, faster and with a greater degree of privacy.

“The other obvious superpower is that you’re able to access data that you don’t, literally, have to hold inside your head. And I think that’s the one people get excited about,” he said. “Assuming the bandwidth is high enough, it’s easy to start to imagine queries like, ‘What’s the capital of Angola? What was our revenue last quarter?’ Things you can easily get back in a qualitative way.”

Mehin admits these scenarios are somewhat far off, especially if they are to be achieved non-invasively, as Facebook had imagined. But advances in machine learning applications with narrow intelligence could accelerate development. The scene in the “Day in the Mind” video in which Vance mentally constructs a cartoon head, for example, was inspired by an existing Figma plug-in built on GPT-3, OpenAI’s language model, that can create layout templates for scrollable windows, buttons and profile pictures by making inferences about a user’s intentions from common patterns associated with their text commands.

“Machine learning will start to pick up on your specific neural fingerprint and then be able to accommodate it,” Mehin said. “It’s got to get faster, deciphering the nuances of how a version of a word sounds in your brain versus someone else’s.”

The first consumer products will likely be much less sophisticated, something resembling the flagship product of the Montreal-based company eno: noise-canceling headphones designed to improve concentration by using electroencephalography (EEG) to track electrical brain activity. In 2016, Card79 worked on the desktop UX of the company’s website, and from 2016 to 2018, they collaborated with a London-based company, Kokoon, on the design of headphones purported to enable better sleep.

The products work in much the same way: data from EEGs is fed into algorithms that infer users’ mental states and generate complementary soundscapes — either to sustain focus, in eno’s case, or to help people sleep more restfully, as Kokoon maintains its product can do.

But Chestek and Krusienski remain skeptical of the capabilities of such devices.

“EEGs may be fun for video games, but even for that, it may or may not work,” Chestek said. “You have to do something like use the Force. Train hard enough, right? It’s not nothing, but you’re never going to use [EEGs] to drive a car.”

Yet even if the data these devices generate is relatively crude, it could present an immediate, low-risk opportunity for software companies to pursue BCI development. That UX is even part of the conversation surrounding BCIs suggests they are moving closer to reality.

“Like any user experience,” Mehin said, “you’re going to try and create value up front and say, ‘Okay, right out of the gate, we can get you to, say, press that cursor without having to move your arm or mentally turn on a light,’ something that gets [people] to understand how the system works and appreciate its value, even if it might not be that powerful.”

High-Bandwidth BCIs: From 100 Electrodes to 1,000

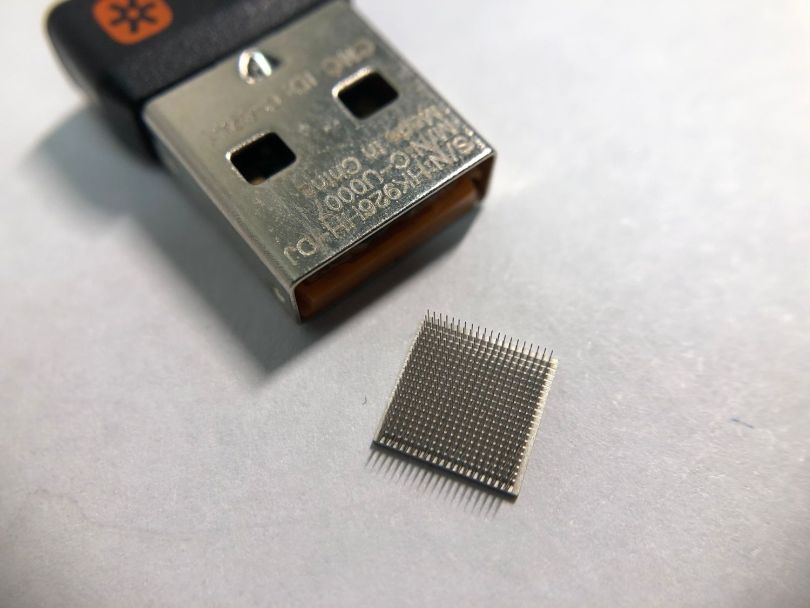

Meanwhile, the design of BCIs continues to improve. Early brain implants, such as the Utah intracortical electrode array built by Salt Lake City-based Blackrock Neurotech, Chestek told me, were limited in the strength of the neural signals they could detect. The 20 mm-wide, razor-thin chips contained about 100 metal electrode filaments sprouting from a silicon base. BrainGate, a multi-institutional U.S. research collaborative, used them in the first clinical tests of BCIs in people with paralysis and neurodegenerative diseases.

While the Utah array continues to perform well, Chestek said, newer implants being developed by neurotechnology companies like Neuralink contain thousands of electrodes, meaning they can sample from larger collections of the brain’s roughly 85 billion neurons.

“Each neuron individually doesn’t tell us much,” Krusienski explained. “We need to know what’s happening in groups of neurons and interconnected networks of neurons to really decode high-level function in the brain.”

“The performance has gone up,” Chestek said. “[Even with] the original 100-channel sensor, we are much better at interpreting the signals. If somebody ever hands us 1,000 channels like Neuralink is trying to do, it’s going to get better by leaps and bounds.”

Shrinking the size of implants is another promising area of experimental research, which could make electrocorticography surgery less invasive by minimizing the risk of damage to vascular tissue.

“There’s a movement within neurotech of building smaller and smaller electric devices,” Matt Angle, CEO of Paradromics, an Austin-based neurotech company, said. “The brain is a network of blood vessels, almost like a loosely woven blanket.” If the device is small enough — under 50 microns or about the size of a cross-section of a human hair, he told me — it can be inserted in the gaps between these blood vessels with less risk of damage to surrounding brain tissue.

Moreover, recent findings in medical journals, such as those reviewed in a paper Krusienski and his colleagues published in Frontiers in Human Neuroscience, have shown the potential of inserting electrodes into deeper brain structures through stereotactic guidance: in essence, inserting electrodes through a small hole drilled in the skull. This technique appears to yield less trauma and better outcomes than electrocorticogram implants, and also has the potential to reach new brain regions.

Angle is one of the people keenly interested in the technology’s development. His company is developing an ultra-thin, wireless BCI implant featuring four 400-electrode modules for clinical use in patients with severe paralysis. The device, scheduled for completion by the end of the year, will be tested on sheep in early 2022, he told me, with the hope of securing FDA approval for testing in humans thereafter. In the meantime, the company unveiled what it’s calling “the largest ever electrical recording of cortical activity,” from “over 30,000 electrode channels in sheep cortex.”

If high-bandwidth, bi-directional devices like the ones being developed by Neuralink and Paradromics become commercially accessible, Angle said, they could provide new avenues to treat medical conditions that purely biological approaches cannot yet address. In effect, these BCIs become modems for the brain, connecting cortical structures to computers that circumvent traditional signal pathways to trigger sensory and motor responses.

“We’re really, really far from being able to regrow a retina or reattach an eye to the brain,” Angle said. “But we’re at a point now where we can put visual data into the visual cortex [to recreate image sensation]. We’re really far away from being able to repair a spinal cord and allow a person who’s quadriplegic to walk. But what we can do, right now, is put devices in the motor cortex of a patient who’s paralyzed and allow them to use the signals in their motor cortex to control a mouse on a screen, to type, even, we think, to produce speech.”

A Los Angeles company called Second Sight might offer a glimpse of what lies ahead. It gained approval from the FDA for the treatment of the degenerative eye disease retinitis pigmentosa with a retinal implant called Argus II. The 60-electrode device, Angle said, is being used to reconstruct a low-pixel visual display for people who experience decreased vision in low light or have limited peripheral vision. The device has been implanted in more than 350 people, according to the company’s website.

Will UX Designers Become ‘De Facto Ethicists’?

All this raises some important ethical questions. Assuming BCIs can be installed safely, who will have access to these devices? Could having neural “superpowers” divide society into different classes? And crucially, how will users’ privacy be protected once their brains become quasi-data streams?

Ario Jafarzadeh, head of player experience design at Roblox, was a guest on a panel discussion about BCIs at San Francisco Design Week. Speaking to me afterward, he said that while the conversation touched on many topics, it centered on the role UX designers could have in shaping BCI development.

“What resonated with me, if [brain-computer interfaces] are inevitable, and we know about Black Mirror scenarios — at least three episodes of the show involved BCIs — it behooves the design community to get ahead of it and have this be a force of good in the world and not a dystopia,” he said.

If commercial BCIs take hold, Mehin sees UX designers becoming “de facto ethicists” who sit between the interests of product marketing teams who wish to collect user data and consumers who wish to keep their thoughts to themselves.

In much the same way regulations in the European Union governing cookies under the General Data Protection Regulation and ePrivacy Directive required designers to surface messages letting users know how their data was being tracked, government mandates will likely dictate the protections designers are required to build into their systems.

High-visibility displays of a BCI’s “on” or “off” status are one way designers might begin to build consumer trust. The speculative BCI operating system in the “Day in the Mind” video, for instance, would require the user to swipe up on an auxiliary phone screen display to connect to the earpiece through an encrypted neural connection. Giving the system access to one’s thoughts, in other words, would necessitate a high degree of intentionality.

“It shouldn’t be like Chrome for your brain,” Mehin said. “There should be this entire level of protection that’s giving you the sense that things are actually not that easy to access.”

Easily accessible permissions settings could offer another layer of protection.

“In the video, there’s a point where the main character says, ‘Eva, turn off all notifications until 11:30 a.m.’ And that level of control has to be built into the experience so someone, as soon as they feel overwhelmed, can either shut off the device or tune it down,” Mehin said.

But to have a real impact, BCIs will need to be psychologically safe for users who don’t change the default settings at all.

In the book Nudge: Improving Decisions About Health, Wealth, and Happiness, authors Richard Thaler and Cass Sunstein suggest default settings often dictate what people choose. Facebook Portal, a video calling device that keeps the microphone and camera turned off by default, acknowledges users’ desire for privacy, and BCIs, presumably, could be similarly gated to prevent involuntary oversharing. But consenting to show your face on camera or share your views verbally is obviously much different from letting a device mine your thoughts.

What’s more, the impact on those who don’t wish to use BCIs would need to be considered. Just imagine how it might work in the context of online dating if one person has a BCI and the other doesn’t.

“How would you initially meet someone?” Mehin asked. “How would you flirt with them? At what point would you invite them into your thoughts? What would dating look like if you’re not having a good date? Would you just kind of start chatting with friends in your head?”

And that’s only the tip of the iceberg.

“Elon Musk is talking about other things like psychotherapies or psychiatric disorders,” Krusienski said. “Pretty much, any neurological disorder, he’s claiming there’s a possibility to do this with [Neuralink’s] technology or brain-computer interface technology, in general.”

Even if theoretically feasible, that’s a long way off. Before broaching the ethical dilemmas inherent in using BCIs to manage social interactions or lay the foundation for mind-altering psychiatric treatments, Mehin believes UX and UI designers may be called on to address a more fundamental question: Whose needs do BCIs best serve?

To that question, Angle has a clear answer.

“Especially with respect to implants, I think we should be thinking about what we can deliver to people with severe disabilities. And, I think, people in the UI/UX domain could have a huge impact, moving into assistive communication,” Angle said. “We want to build things that are seamless, or natural, for patients to use. And that’s a skill set you don’t necessarily find in the person who’s doing the signal processing or the person who did their Ph.D. in the motor cortex. It’s a skill set of people that don’t normally think about neurotechnology, but should.”