After years of wrangling high-definition video content into virtual reality experiences, Voodle’s engineering team was confident it could tackle any video challenge that came its way.

But when the company pivoted from VR to short-form video sharing for the workplace, video quality was no longer the problem.

Customer expectations were.

“There’s this immediacy we all expect when we’re using any app nowadays,” Voodle senior architect Mike Swanson told Built In. “When you record a video or take a photo on your device, you want that thing to be available or shared almost instantaneously.”

Until that point, “instantaneous” had not been in the immersive video team’s vocabulary. And the move from video sizes that Swanson described as “ridiculous by anybody’s standards” to much smaller files didn’t solve the problem by itself.

In addition to its minute-long videos, Voodle offers on-the-spot transcriptions, as well as a feature that searches through videos for keywords and then splices each keyword mention into a sort of highlight reel — Swanson calls it “juicy bits.” Between calling Google for the transcriptions and AWS Lambda for video processing and juicy bits, that’s a lot of processing, and it slowed things down considerably.

Out of the gate, each user video was taking about 45 seconds to process — so much for immediacy.

A Memory Balancing Act

Swanson and his team members decided to tackle the problem by splitting it in two: the client app and the back end.

They asked: What could the Voodle app on iPhone, Android or web do to relieve some of the load on the back end? One solution was video sizes. The video quality on smartphones outpaced what Voodle needed, and the team found that videos could be easily downsized on the client side.

Processing time got better, but not good enough.

“We were at a point where we’d done the easy things,” Swanson said. “We’d made the client be smart. We’d made the back end be a little bit smarter. But still, it was taking 20 to 30 seconds, and that just didn’t seem fast enough for us to do what we needed to do.”

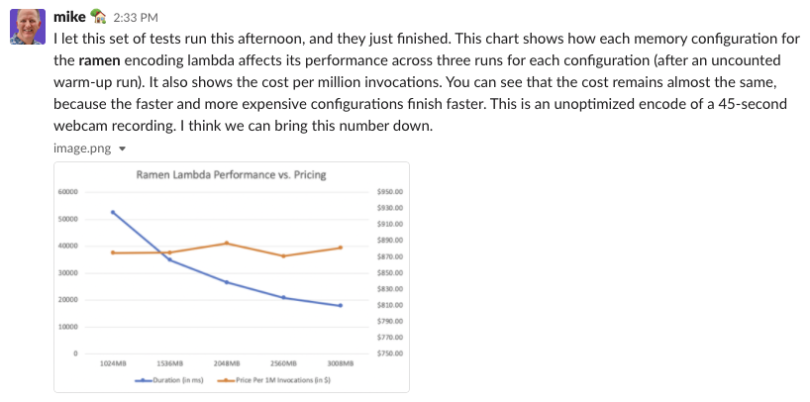

The team decided to look closer at its AWS Lambda functions, specifically at the slider-like setting that lets Lambda customers control how much memory their functions are allocated. The more memory allocated, the more CPU power the function gets and the higher the price for each unit of compute time. In other words, RAM is directly connected to CPU, and to cost.

“Then the red flags got raised in our internal Slack. Like, ‘Wait a minute, how much is this going to cost?’” Swanson said.

After many internal discussions about how to save money by, in essence, keeping that RAM sliding scale to the left, Swanson had a bit of an epiphany, inspired by a basic programming principle.

“Why don’t we just test it?”

So, he wrote a script. The input was memory level, the outputs were processing time and cost, and the raw material was a 45-second Voodle video. The script ran the video at the lowest memory level all the way up to the largest and most expensive instance. The test took half a day to run.

“Then, it produced this chart,” Swanson said. “And as soon as you looked at the chart, you had the answer.”
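The kind of test Swanson describes can be sketched in a few lines. This is a minimal illustration, not Voodle’s script: `sweep_memory`, `run_once` and `ASSUMED_PRICE_PER_GB_SECOND` are hypothetical names, the price is a placeholder rate, and the cost formula assumes billing proportional to allocated memory times run time. In a real run, `run_once` would set the function’s memory to the given level and process the sample video.

```python
import time
from typing import Callable, Dict, Iterable, List

# Placeholder rate in USD per GB-second (an assumption for illustration);
# look up the current price for your provider and region before relying on it.
ASSUMED_PRICE_PER_GB_SECOND = 0.0000166667


def sweep_memory(
    memory_levels_mb: Iterable[int],
    run_once: Callable[[int], None],
    price_per_gb_second: float = ASSUMED_PRICE_PER_GB_SECOND,
    clock: Callable[[], float] = time.perf_counter,
) -> List[Dict[str, float]]:
    """Time one run of the workload at each memory level and estimate its cost.

    run_once(memory_mb) is supplied by the caller. It is expected to configure
    the function with that much memory and process the sample input.
    """
    results = []
    for memory_mb in memory_levels_mb:
        start = clock()
        run_once(memory_mb)
        seconds = clock() - start
        # Assumed billing model: allocated GB x seconds x price per GB-second.
        cost_usd = (memory_mb / 1024) * seconds * price_per_gb_second
        results.append(
            {"memory_mb": memory_mb, "seconds": seconds, "cost_usd": cost_usd}
        )
    return results
```

Plotting `seconds` and `cost_usd` against `memory_mb` from the returned rows gives a chart of the kind described here. Passing the workload in as `run_once` keeps the timing and cost logic independent of any one cloud SDK.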

‘Let’s Stop Talking About This’

The orange cost line was flat. Every instance cost almost exactly the same.

That’s because, unbeknownst to Swanson and his team, the RAM and CPU connection actually worked in Voodle’s favor. As memory got higher, the instances ran so much faster that the shorter run times almost exactly offset the higher price of the extra memory.
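A rough way to see why the line came out flat, assuming billing is proportional to allocated memory times run time and that this workload’s run time shrinks roughly in proportion to the memory (and CPU) added. The symbols $m$, $t(m)$, $p$ and $k$ are introduced here for illustration and do not come from Swanson’s script:

$$
\text{cost}(m) = p \cdot m \cdot t(m), \qquad t(m) \approx \frac{k}{m} \;\Longrightarrow\; \text{cost}(m) \approx p \cdot k
$$

Here $m$ is the memory setting, $t(m)$ the processing time at that setting, $p$ the price per unit of memory-time and $k$ a constant for the workload. Under those assumptions the memory term cancels, so cost stays flat while time falls. A workload that does not speed up with more CPU would not cancel this way.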

In hindsight, it’s crystal clear. But without the test, the team would have continued trying to optimize memory.

“So the answer was, let’s stop talking about this, we’re just going to crank it all the way up to the top — get the most expensive, fastest instance with the most memory,” Swanson said. “We thought we could never afford that as a startup with all the videos that are being uploaded. But in fact, it ends up costing us the same amount of money.”

Coders to the Rescue

The proliferation of cloud computing and cloud-native applications changed the game for tech companies. Technical problems turned into business problems, as companies began paying for computing power based on performance, time or storage needs. For Swanson, memories of how straightforward it was — at least in terms of cost — to write software for on-premises servers are just that: memories.

“It’s this new slurry that I never had to think about in the past,” he said.

Before, programmers may have gone years without considering the cost of running what they built, and terms like “cost per encode” weren’t uttered. Meanwhile, business types may have looked at development teams like black boxes: requirements go in, software comes out. Now, the lines between the codebase and the balance sheet have blurred significantly.

The good news is: Coders have special skills to bring to this table. As Swanson found, a routine script solved a cost question faster and more definitively than any meeting could. The Voodle engineering Slack channel went from a flurry of competing takes to complete consensus in a matter of seconds.

“There was no more discussion. There was no more argument,” he said.

The Voodle team’s discovery has broader implications. For any problem that involves balancing cost against performance — so, most of them — writing a test is a good first step. Not every cost-versus-performance chart will look like Swanson’s, but any test should give a software team a clearer view of the optimal RAM or CPU for the task at hand.
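Once such a test has produced measurements, choosing a setting can also be scripted. The sketch below is one possible rule, not something the Voodle team describes: it takes the fastest configuration whose cost is within a tolerance of the cheapest one. The name `pick_memory`, the 10 percent default and the sample numbers are all assumptions made for illustration.

```python
from typing import Dict, List


def pick_memory(
    rows: List[Dict[str, float]], cost_tolerance: float = 0.10
) -> Dict[str, float]:
    """Return the fastest row whose cost is within cost_tolerance of the cheapest."""
    if not rows:
        raise ValueError("no measurements to choose from")
    cheapest = min(row["cost_usd"] for row in rows)
    affordable = [
        row for row in rows if row["cost_usd"] <= cheapest * (1 + cost_tolerance)
    ]
    return min(affordable, key=lambda row: row["seconds"])


# Made-up measurements, for illustration only.
sample_rows = [
    {"memory_mb": 128, "seconds": 40.0, "cost_usd": 1.00},
    {"memory_mb": 1024, "seconds": 6.0, "cost_usd": 1.05},
    {"memory_mb": 3008, "seconds": 2.0, "cost_usd": 1.50},
]
best = pick_memory(sample_rows)
print(best["memory_mb"])  # -> 1024
```

When the cost line is flat, as it was in Swanson’s chart, every row falls within the tolerance and this rule simply returns the fastest one.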

“The next function we need to deploy, whether it’s Lambda or Azure or whatever — anywhere I’m paying for the amount of time it takes something to do an operation and there are variables like RAM and CPU — I’m going to write a script a lot earlier,” Swanson said.