Deepgram is the leading platform underpinning the emerging trillion-dollar Voice AI economy, providing real-time APIs for speech-to-text (STT), text-to-speech (TTS), and production-grade voice agents at scale. More than 200,000 developers and 1,300+ organizations build voice offerings that are ‘Powered by Deepgram’, including Twilio, Cloudflare, Sierra, Decagon, Vapi, Daily, Cresta, Granola, and Jack in the Box. Deepgram’s voice-native foundation models are accessed through cloud APIs or as self-hosted and on-premises software, with unmatched accuracy, low latency, and cost efficiency. Backed by a recent Series C led by leading global investors and strategic partners, Deepgram has processed over 50,000 years of audio and transcribed more than 1 trillion words. There is no organization in the world that understands voice better than Deepgram.

Company Operating Rhythm

At Deepgram, we expect an AI-first mindset: AI use and comfort aren’t optional; they’re core to how we operate, innovate, and measure performance.

Every team member at Deepgram is expected to actively use and experiment with advanced AI tools, and even to build their own and bring them into their everyday work. We measure how effectively AI is applied to deliver results, and consistent, creative use of the latest AI capabilities is key to success here. Candidates should be comfortable adopting new models and modes quickly, integrating AI into their workflows, and continuously pushing the boundaries of what these technologies can do.

Additionally, we move at the pace of AI. Change is rapid, and you can expect your day-to-day work to evolve just as quickly. This may not be the right role if you’re not excited to experiment, adapt, think on your feet, and learn constantly, or if you’re seeking something highly prescriptive with a traditional 9-to-5.

The Opportunity

As Model Evaluation QA Lead, you’ll be the technical owner of model quality assurance across Deepgram’s AI pipeline, from pre-training data validation and provenance through post-deployment monitoring. Reporting to the QA Engineering Manager, you will partner directly with our Active Learning and Data Ops teams to build and operate the evaluation infrastructure that ensures every model Deepgram ships meets objective quality bars across languages, domains, and deployment contexts.

This is a hands-on, high-impact role at the intersection of QA engineering and ML operations. You will design automated evaluation frameworks, integrate model quality gates into release pipelines, and drive industry-standard benchmarking—ensuring Deepgram maintains its position as the accuracy and latency leader in voice AI.

What You’ll Do

Model Evaluation Automation: Design, build, and maintain automated model evaluation pipelines that run against every candidate model before release. Implement objective and subjective quality metrics (WER, SER, MOS, latency/throughput) across STT, TTS, and STS product lines.

Release Gate Integration: Embed model quality checkpoints into CI/CD and release pipelines. Define pass/fail criteria, build dashboards for model comparison, and own the go/no-go signal for model promotions to production.

Agent & Model Evaluation Frameworks: Stand up and operate evaluation tooling (Coval, Braintrust, Blue Jay, custom harnesses) for end-to-end voice agent testing, covering accuracy, latency, turn-taking, conversational quality, and custom metrics across real-world scenarios.

Active Learning & Data Ingestion Testing: Partner with the Active Learning team to validate data ingestion infrastructure, annotation pipelines, and retraining automation. Ensure data quality standards are met at every stage of the flywheel.

Industry Benchmark Automation: Automate execution and reporting of industry-standard benchmarks (e.g., LibriSpeech, CommonVoice, internal production-traffic evals). Maintain reproducible benchmark environments and publish results for internal consumption.

Language & Domain Validation: Build and maintain test suites for multi-language and domain-specific model validation. Design coverage matrices that ensure new languages and acoustic domains are systematically evaluated before GA.

Retraining Automation Support: Validate the end-to-end retraining pipeline across all data sources—from data selection and preprocessing through training, evaluation, and promotion—ensuring automation reliability and correctness.

Manual Test Feedback Loop: Design and operate human-in-the-loop evaluation workflows for subjective quality assessment. Build the tooling and processes that translate human feedback into actionable quality signals for the ML team.
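For candidates who want a concrete picture of the evaluation and release-gate work described above, here is a minimal Python sketch. It is an illustration only, not Deepgram's actual tooling: the function names `word_error_rate` and `passes_release_gate`, and the `max_regression` threshold, are hypothetical and chosen purely for this example. It computes word error rate (WER) as the word-level edit distance divided by the number of reference words, then applies a simple pass/fail check against a baseline.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


def passes_release_gate(candidate_wer: float,
                        baseline_wer: float,
                        max_regression: float = 0.005) -> bool:
    """Hypothetical gate: the candidate may not exceed the baseline WER
    by more than max_regression (an assumed, illustrative tolerance)."""
    return candidate_wer <= baseline_wer + max_regression


if __name__ == "__main__":
    wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
    print(f"WER: {wer:.3f}")
    print("gate passed:", passes_release_gate(wer, baseline_wer=0.20))
```

In practice a gate like this would likely run per language and per domain over a full evaluation set rather than on a single utterance, and would sit alongside latency and subjective-quality checks.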

4–7 years of experience in QA engineering, ML evaluation, or a related technical role, with a focus on the quality of predictive and generative models and their data.

Hands-on experience building automated test/evaluation pipelines for ML models and connecting software features.

Strong programming skills in Python; experience with ML evaluation libraries, data processing frameworks (Pandas, NumPy), and scripting for pipeline automation.

Familiarity with speech/audio ML concepts: WER, SER, MOS, acoustic models, language models, or similar evaluation metrics.

Experience with CI/CD integration for ML workflows (e.g., GitHub Actions, Jenkins, Argo, MLflow, or equivalent).

Ability to design and maintain reproducible benchmark environments across multiple model versions and configurations.

Strong communication skills—you can translate model quality metrics into actionable insights for engineering, research, and product stakeholders.

Detail-oriented and systematic, with a bias toward automation over manual process.

Experience with model evaluation platforms (Coval, Braintrust, Weights & Biases, or custom evaluation harnesses).

Background in speech recognition, NLP, or audio processing domains.

Experience with distributed evaluation at scale—running evals across GPU clusters or large dataset partitions.

Familiarity with human-in-the-loop evaluation design and annotation pipeline tooling.

Experience with multi-language model evaluation and localization quality assurance.

Prior work in a company where ML model quality directly impacted revenue or customer SLAs.

Deepgram’s competitive advantage is built on model quality—accuracy, latency, and reliability across languages and domains. As Model Evaluation QA Lead, you’ll be the person who ensures that advantage is measured, maintained, and continuously improved. You’ll build the evaluation infrastructure that gives our Research and Active Learning teams the confidence to ship faster while raising the quality bar with every release. This role directly protects customer trust and accelerates Deepgram’s ability to lead the voice AI market.

Benefits & Perks*

Holistic health

Medical, dental, vision benefits

Annual wellness stipend

Mental health support

Life, STD, LTD Income Insurance Plans

Unlimited PTO

Generous paid parental leave

Flexible schedule

12 Paid US company holidays

Quarterly personal productivity stipend

One-time stipend for home office upgrades

401(k) plan with company match

Tax Savings Programs

Learning / Education stipend

Participation in talks and conferences

Employee Resource Groups

AI enablement workshops / sessions

*For candidates outside of the US, we use an Employer of Record model in many countries, which means benefits are administered locally and governed by country-specific regulations. Because of this, benefits will differ by region — in some cases international employees receive benefits US employees do not, and vice versa. As we scale, we will continue to evaluate where we can create more alignment, but a 1:1 global benefits structure is not always legally or operationally possible.

Backed by prominent investors including Y Combinator, Madrona, Tiger Global, Wing VC and NVIDIA, Deepgram has raised over $215M in total funding. If you're looking to work on cutting-edge technology and make a significant impact in the AI industry, we'd love to hear from you!

Deepgram is an equal opportunity employer. We want all voices and perspectives represented in our workforce. We are a curious bunch focused on collaboration and doing the right thing. We put our customers first, grow together and move quickly. We do not discriminate on the basis of race, religion, color, national origin, gender, sexual orientation, gender identity or expression, age, marital status, veteran status, disability status, pregnancy, parental status, genetic information, political affiliation, or any other status protected by the laws or regulations in the locations where we operate.

We are happy to provide accommodations for applicants who need them.

What We Do

Deepgram is the leading voice AI platform for developers building speech-to-text (STT), text-to-speech (TTS) and full speech-to-speech (STS) offerings. 200,000+ developers build with Deepgram’s voice-native foundational models – accessed through APIs or as self-managed software – due to our unmatched accuracy, latency and pricing. Customers include software companies building voice products, co-sell partners working with large enterprises, and enterprises solving internal voice AI use cases.

The company ended 2024 cash-flow positive with 400+ enterprise customers, 3.3x annual usage growth across the past 4 years, over 50,000 years of audio processed and over 1 trillion words transcribed.

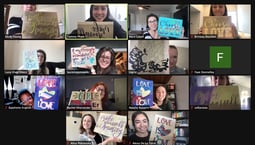

Why Work With Us

Our culture, like our product, is constantly learning and evolving, but the heart of our team is enduring. We are a self-motivated, positive, passionate, and competitive group of people. At Deepgram, we put an emphasis on being ourselves, being curious, growing together, and being human. We are a unique bunch who celebrate our differences.

Remote Workspace

Employees work remotely.