Building stable, accurate and interpretable machine learning models is an important task for companies across many different industries. Machine learning model predictions have to be stable in time as the underlying training data is updated. Drastic changes in data due to unforeseen events can lead to significant deterioration in model performance.

Model hyperparameter tuning can make necessary changes to machine learning models that account for statistical changes in data over time. It is also important to understand the various ways of testing your models depending on how much data you have and, consequently, the stability of your model predictions. Further, the most useful models use inputs or features that are actionable and interpretable.

Given this requirement, data scientists need to have a good understanding of how to select the best features. Of course, domain expertise allows for the best feature selection. Additional methods such as model testing, automated feature selection and model tuning can help build accurate models that can be used to produce actionable insights. Combining domain expertise with deep knowledge of feature selection allows companies to get the most out of machine learning model predictions.

Model Testing, Feature Selection and Hyperparameter Tuning

- Model testing is a key part of model building. When done correctly, testing ensures your model is stable and isn’t overfit. The three most well-known methods of model testing are randomized train-test split, K-fold cross-validation, and leave one out cross-validation.

- Feature selection is another important part of model building as it directly impacts model performance and interpretability. The simplest method of feature selection is manual, which is ideally guided by domain expertise. The data scientist picks different sets of features until satisfied with the performance on the validation set. Typically you have a hold-out test set, separate from the validation set, that you test on once at the end of model development, to avoid overfitting. There are additional methods, such as SelectKBest, that automate the process.

- Hyperparameter tuning is the final important part of model building. Although most machine learning packages come with default parameters that typically give decent performance, additional tuning is typically necessary to build highly accurate models. This process can be done manually by selecting different parameter values and testing the model using in-sample validation on the training data until you are satisfied with the performance.

Randomized train-test split is the simplest method: A random sample is taken from the data to make up a testing data set, while the remaining data is used for training. K-fold cross-validation randomly splits the data up into K parts (called folds) wherein one fold is used for testing and the remaining folds are used for training. The method is to iterate over each fold until all of the data has been used to train and test the model. The performance across the folds is then averaged. Finally, leave one out is similar to K-fold, but it uses a single data point for testing and the remaining data for training. It iterates over each data point until all of the data has been used for testing and training. Depending on the size of your data and how much you’d like to minimize bias, you may prefer one method over another.
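To make the mechanics concrete, here is a minimal sketch of how K-fold splitting partitions row indices, written with only the Python standard library. The helper name k_fold_indices and the toy sizes are assumptions made for illustration; this is not how Scikit-learn implements it internally.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) index lists for K-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so the folds are random
    # Spread samples as evenly as possible: the first n_samples % k folds get one extra
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]                 # this fold is held out
        train_idx = indices[:start] + indices[start + size:]   # all other folds train
        yield train_idx, test_idx
        start += size

# Five folds over ten rows: each row is tested exactly once
splits = list(k_fold_indices(n_samples=10, k=5))
for train_idx, test_idx in splits:
    print(f"train={sorted(train_idx)} test={sorted(test_idx)}")

# Leave one out is the special case where K equals the number of rows
loo_splits = list(k_fold_indices(n_samples=10, k=10))
```

Every row appears in exactly one test fold, which is why averaging the per-fold scores uses all of the data for both training and testing.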

Feature selection is another important part of model building as it directly impacts model performance and interpretability. The simplest method of feature selection is manual, which is ideally guided by domain expertise. The data scientist picks different sets of features until satisfied with the performance on the validation set. Typically you have a hold-out test set, separate from the validation set, that you test on once at the end of model development, to avoid overfitting. There are additional methods, such as SelectKBest, that automate the process. These are particularly useful if your data has many columns where manual feature selection can become a cumbersome task. Filtering the size of the input set can help make model prediction easier to interpret and subsequently make predictions more actionable.

Hyperparameter tuning is the final important part of model building. Although most machine learning packages come with default parameters that typically give decent performance, additional tuning is typically necessary to build highly accurate models. This process can be done manually by selecting different parameter values and testing the model using in-sample validation on the training data until you are satisfied with the performance. Specific Python packages can also allow you to search the hyperparameter space for most algorithms and select the parameters that give the best performance.

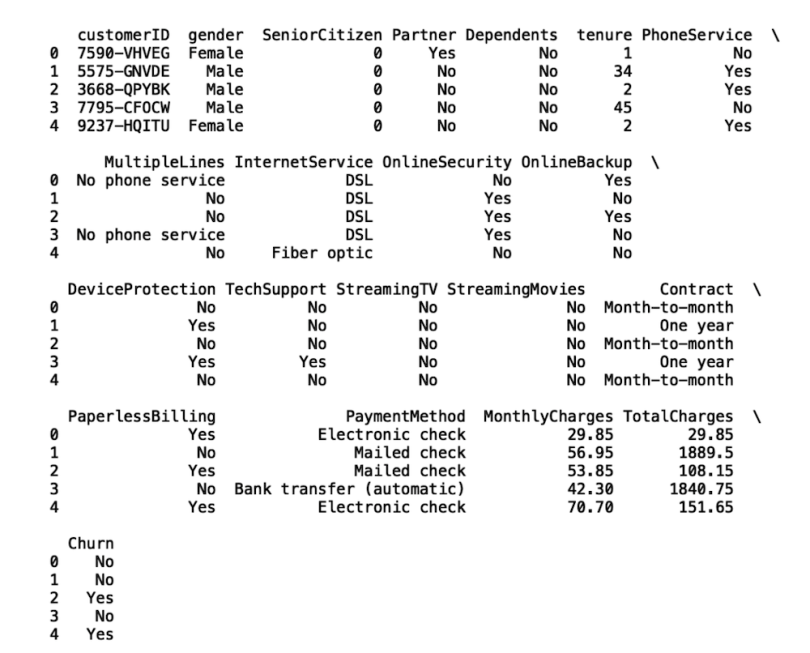

We will discuss how to apply these methods to test, tune and select a machine learning model for the task of classification. Specifically, we will consider the task of predicting customer churn, where churn is defined as the event of a customer leaving a company. We will be using the publicly available fictitious Telco churn data.

Reading and Displaying Telco Data

To start, let’s import the Pandas library and read the Telco data into a Pandas data frame:

import pandas as pd

df = pd.read_csv("telco.csv")

print(df.head())

Methods for Splitting Data for Training and Testing

Randomized Train-Test Split

The first testing method we will discuss is randomized train-test split. Let’s build a simple model that takes customer tenure and monthly charges as inputs and predicts whether or not the customer will churn. Here, our inputs will be tenure and monthly charges and our output will be churn. First, let’s convert the churn values to machine-readable binary integers using the np.where() method from the numpy package:

import numpy as np

df['Churn_binary'] = np.where(df['Churn'] == 'Yes', 1, 0)

Now, let’s import the train_test_split method from the model selection module in Scikit-learn:

from sklearn.model_selection import train_test_split

As explained in the documentation, the train_test_split method splits the data into random training and testing subsets. To perform the split, we first define our input and output in terms of variables called X and y, respectively:

X = df[['tenure', 'MonthlyCharges']]

y = df['Churn_binary']

Next, we pass these variables into the train_test_split method, which returns random subsets for training input and testing input as well as training output and testing output. The four subsets come back together as a single list, which we unpack into our training and testing variables:

X_train, X_test, y_train, y_test = train_test_split(X, y)

We can also specify a parameter called random_state, which seeds the shuffling applied to the data before it is split. If we give it an integer value, we ensure that we reproduce the same split upon each run:

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

It is then straightforward to train and test a model using training and testing subsets. For example, to train a random forest:

from sklearn.ensemble import RandomForestClassifier

model = RandomForestClassifier()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

You can then use y_test and y_pred to evaluate the performance of your model. Remember to consider whether or not you have time-dependent data. If this is the case, instead of a randomized split, split your data along a date. If you use a randomized split, you will include future data in your training set and your predictions will be biased.
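For the time-dependent case, a split along a date might look like the following sketch. The Telco data has no date column, so the data frame, the column names (signup_date, churned) and the cutoff date here are all hypothetical and only illustrate the idea.

```python
import pandas as pd

# Hypothetical time-stamped data: one row per customer, ordered by signup month
df_time = pd.DataFrame({
    "signup_date": pd.date_range("2023-01-01", periods=10, freq="MS"),
    "tenure": [1, 3, 5, 7, 9, 11, 13, 15, 17, 19],
    "churned": [1, 0, 1, 0, 0, 1, 0, 0, 1, 0],
})

cutoff = pd.Timestamp("2023-08-01")  # assumed cutoff, chosen only for this example

# Everything before the cutoff trains the model; everything on or after it tests it
train = df_time[df_time["signup_date"] < cutoff]
test = df_time[df_time["signup_date"] >= cutoff]

X_train, y_train = train[["tenure"]], train["churned"]
X_test, y_test = test[["tenure"]], test["churned"]

print(len(train), "training rows;", len(test), "testing rows")
```

Because every training row predates every testing row, no future information leaks into the model during training.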

In practice, a randomized train-test split is useful for generating a hold-out test set that you use for testing once, after feature selection and model tuning have been completed. This ensures that your model isn’t overfit and reduces the chance of badly biasing your model.

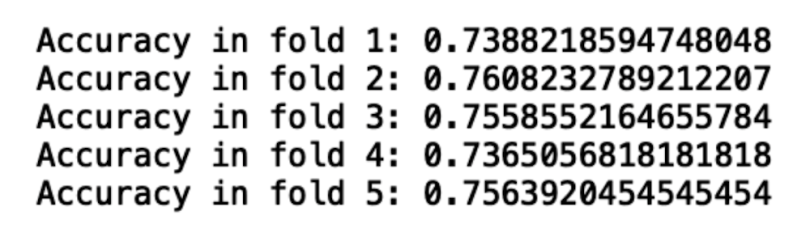

K-fold Cross-Validation

K-fold cross-validation is the process of splitting data into an integer number (K) of parts and using one part for testing and the rest for training. This process is done iteratively until all data has been used for training and testing. The method is described in the cross-validation section of the Scikit-learn documentation.

To implement K folds, we import KFold from the model selection module in Scikit-learn:

from sklearn.model_selection import KFold

from sklearn.metrics import accuracy_score

folds = KFold(n_splits=5)

fold = 0  # counter used to label the output of each fold

for train_index, test_index in folds.split(X):

    # select the rows that make up this fold's training and testing sets
    X_train, X_test = X.iloc[train_index], X.iloc[test_index]

    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

    model = RandomForestClassifier()

    model.fit(X_train, y_train)

    y_pred = model.predict(X_test)

    fold += 1

    print(f"Accuracy in fold {fold}:", accuracy_score(y_test, y_pred))

Within the for loop, you can train and test your model on different folds. Here, we only used five folds, but you can change the n_splits parameter of the KFold object to be whatever number you’d like. The more folds you use, the less bias there will be in your performance estimate, since each model is trained on a larger share of the data. The trade-off is that more folds mean more models to train.

This process allows you to analyze the stability of your model’s performance through metrics such as variance. It’s also typically used for tasks such as model tuning and feature selection, which we will cover shortly.

Ideally, you want the most accurate model with the lowest variance in performance. Low performance variance means the model is more stable and consequently more reliable.
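One way to quantify that stability is to collect the per-fold scores and look at their mean and spread. The sketch below uses Scikit-learn's cross_val_score on synthetic data from make_classification as a stand-in for the churn data; the sample size, forest size and seeds are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score

# Synthetic stand-in for the churn features and labels (an assumption of this sketch)
X_demo, y_demo = make_classification(
    n_samples=300, n_features=4, n_informative=2, n_redundant=0, random_state=42
)

cv = KFold(n_splits=5, shuffle=True, random_state=42)
model = RandomForestClassifier(n_estimators=50, random_state=42)

# One accuracy value per fold
scores = cross_val_score(model, X_demo, y_demo, cv=cv, scoring="accuracy")

print("Fold accuracies:", scores.round(3))
print(f"Mean: {scores.mean():.3f}, standard deviation: {scores.std():.3f}")
```

When comparing two candidate models, a similar mean with a smaller standard deviation suggests the more stable of the two.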

Leave One Out Cross-Validation

Leave one out is similar to K-fold, but instead of using a randomly sampled subset for training and test, a single data point is used for testing while the rest are used for training. This process is also done iteratively until all of the data has been used for training and testing:

from sklearn.model_selection import LeaveOneOut

loo = LeaveOneOut()

for train_index, test_index in loo.split(X):

    X_train, X_test = X.iloc[train_index], X.iloc[test_index]

    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

This method is typically used for smaller data sets. In my experience, it is particularly useful for small, imbalanced data sets. Note that, since you are training your model n times, where n is the size of the data, this approach can be computationally intensive for large data sets.
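As a rough illustration of that cost, the sketch below runs leave one out on a deliberately tiny synthetic data set, so the number of model fits equals the number of rows. The data, the forest size and the use of cross_val_score are assumptions of this example rather than part of the churn workflow.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Tiny synthetic data set: leave one out fits one model per row
X_small, y_small = make_classification(
    n_samples=40, n_features=4, n_informative=2, n_redundant=0, random_state=0
)

loo = LeaveOneOut()
print("Number of model fits:", loo.get_n_splits(X_small))

model = RandomForestClassifier(n_estimators=20, random_state=0)

# Each score is 1.0 or 0.0: the single held-out point is either right or wrong
loo_scores = cross_val_score(model, X_small, y_small, cv=loo)
print(f"Leave-one-out accuracy: {loo_scores.mean():.3f}")
```

With 40 rows this takes 40 fits; the same loop over hundreds of thousands of rows would need as many fits, which is where the computational cost comes from.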

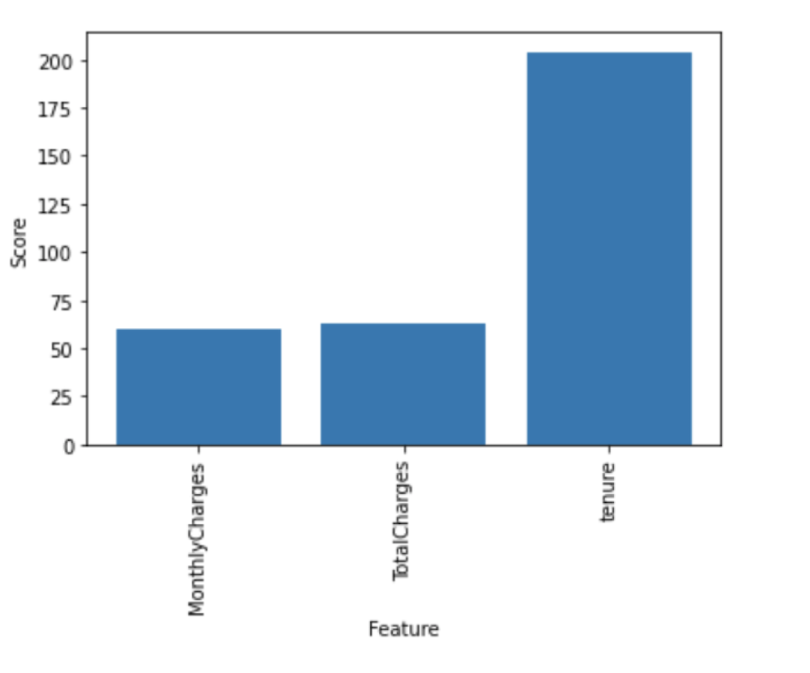

Methods of Feature Selection for Model Building

Other than manual feature selection, which is typically done through exploratory data analysis and using domain expertise, you can use some Python packages for feature selection. Here, we will discuss the SelectKBest method, which is part of the feature selection module in Scikit-learn. First, let’s import the necessary packages:

from sklearn.feature_selection import SelectKBest, f_classif

import matplotlib.pyplot as plt

We will select from monthly charges, tenure and total charges. First, we need to clean the total charges column:

df['TotalCharges'] = df['TotalCharges'].replace(' ', np.nan)

df['TotalCharges'] = df['TotalCharges'].fillna(0)

df['TotalCharges'] = df['TotalCharges'].astype(float)

We should select features on a training set, so that we don’t bias our model. Let’s redefine our input and output:

X = df[['tenure', 'MonthlyCharges', 'TotalCharges']]

y = df['Churn']

Now, let’s perform our randomized train-test split:

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

Next, let’s define our selector object. We will pass in our features and the output:

numerical_predictors = ["MonthlyCharges", "TotalCharges", "tenure"]

numerical_selector = SelectKBest(f_classif, k=3)

numerical_selector.fit(X_train[numerical_predictors], y_train)

Now, we can plot the scores for our features. We generate scores for each feature by taking a negative log of the p values for each feature:

num_scores = -np.log10(numerical_selector.pvalues_)

plt.bar(range(len(numerical_predictors)), num_scores)

plt.xticks(range(len(numerical_predictors)), numerical_predictors, rotation='vertical')

plt.show()

We can see that tenure has the highest score, which makes sense. Intuitively, customers that have been with the company for longer are less likely to churn. I want to stress that, in practice, feature selection should be performed on training data. Further, to improve the reliability of the features selected you can run K-fold cross-validation and take the average score for each feature and use the results for feature selection.
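The K-fold averaging idea could be sketched as follows. This example uses synthetic data and hypothetical feature names in place of the Telco columns, and it averages the raw ANOVA F-scores (the selector's scores_ attribute) rather than the negative log p values plotted earlier, since very small p values can underflow to zero.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import KFold

feature_names = ["feature_a", "feature_b", "feature_c"]  # hypothetical names

# Synthetic stand-in for the training portion of the data
X_demo, y_demo = make_classification(
    n_samples=300, n_features=3, n_informative=2, n_redundant=0, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
fold_scores = []
for train_index, _ in kf.split(X_demo):
    # Score every feature using only this fold's training rows
    selector = SelectKBest(f_classif, k="all")
    selector.fit(X_demo[train_index], y_demo[train_index])
    fold_scores.append(selector.scores_)

# Average each feature's score across the folds and rank the features
mean_scores = np.mean(fold_scores, axis=0)
ranked = sorted(zip(feature_names, mean_scores), key=lambda pair: pair[1], reverse=True)
for name, score in ranked:
    print(f"{name}: mean F-score {score:.2f}")
```

A feature that ranks highly in every fold is a safer choice than one whose score depends heavily on which rows happened to land in the training set.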

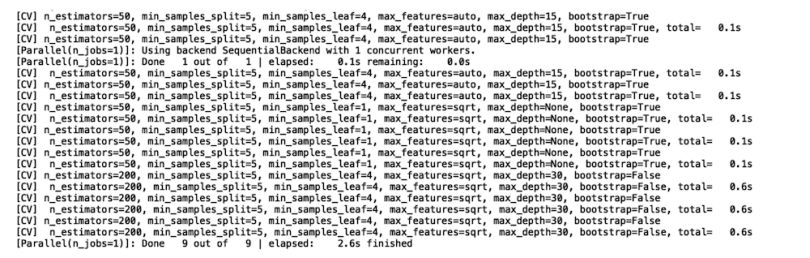

Hyperparameter Tuning

In addition to model testing and feature selection, model hyperparameter tuning is another very important part of model building. The idea is to search for the model parameters that give the best performance.

The RandomizedSearchCV method from Scikit-learn allows you to perform a randomized search over parameters for an estimator; its options are described in the Scikit-learn documentation. Here, we will perform a randomized search for random forest parameters. We start by defining a grid of random forest parameter values. First, let’s specify a list of the number of trees we will use in the random forest:

n_estimators = [50, 100, 200]

Then, we specify the number of features to consider at every split:

max_features = ['sqrt', 'log2', None]

We also specify the maximum number of levels in a tree:

max_depth = [int(x) for x in np.linspace(10, 30, num=5)]

max_depth.append(None)

The minimum number of samples required to split a node:

min_samples_split = [2, 5, 10]

The minimum number of samples required at each leaf node:

min_samples_leaf = [1, 2, 4]

And, finally, whether or not we will use bootstrap sampling:

bootstrap = [True, False]

We can now specify a dictionary which will be our grid of parameters:

random_grid = {'n_estimators': n_estimators,

               'max_features': max_features,

               'max_depth': max_depth,

               'min_samples_split': min_samples_split,

               'min_samples_leaf': min_samples_leaf,

               'bootstrap': bootstrap}

Let’s also define a random forest model object:

model = RandomForestClassifier()

Similar to feature selection, model hyperparameter tuning should be done on the training data. To proceed, let’s import RandomizedSearchCV from Scikit-learn:

from sklearn.model_selection import RandomizedSearchCV

Next, we define a RandomizedSearchCV object. In the object, we pass in our random forest model, the random_grid, and the number of iterations for each random search.

Notice there is a parameter called cv, which is for cross-validation. We use this parameter to define the number of folds to be used for validation, just as we did for K-folds. Again, we’d like to find the set of random forest parameters that give the best model performance and model performance is calculated by RandomizedSearchCV using cross-validation.

The parameter verbose displays output for each iteration. Since we have three folds and three iterations, we should see output for nine test runs:

rf_random = RandomizedSearchCV(estimator=model, param_distributions=random_grid, n_iter=3, cv=3, verbose=2, random_state=42)

After defining the RandomizedSearchCV object, we can fit to our training data:

rf_random.fit(X_train, y_train)

Upon fitting, we can output the parameters that give the best performance:

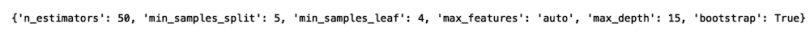

parameters = rf_random.best_params_

print(parameters)
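Once the search has finished, the tuned model can be checked a single time against the hold-out test set. The following self-contained sketch shows one way to do that, assuming synthetic data and a deliberately small grid (small_grid is a hypothetical name) so that it runs quickly.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Synthetic stand-in for the churn data (an assumption of this sketch)
X_demo, y_demo = make_classification(
    n_samples=300, n_features=4, n_informative=2, n_redundant=0, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(X_demo, y_demo, random_state=42)

# A reduced grid keeps the example fast
small_grid = {
    "n_estimators": [20, 50],
    "max_depth": [5, 10, None],
    "min_samples_leaf": [1, 2, 4],
}

search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=42),
    param_distributions=small_grid,
    n_iter=3,
    cv=3,
    random_state=42,
)
search.fit(X_train, y_train)  # tuning only ever sees the training data

print("Best parameters:", search.best_params_)
print(f"Mean cross-validated accuracy: {search.best_score_:.3f}")

# best_estimator_ is refit on the full training set by default (refit=True)
best_model = search.best_estimator_
test_accuracy = accuracy_score(y_test, best_model.predict(X_test))
print(f"Hold-out accuracy: {test_accuracy:.3f}")
```

A hold-out accuracy far below the cross-validated accuracy would be a sign that the tuning has overfit the training data.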

You can increase the number of iterations to search and test more parameters. The larger the number of iterations, the more likely you are to find a better-performing model from the set of hyperparameters. Obviously, the more parameters you search and test the longer the calculation will take.

Keep in mind that on a typical machine or laptop, this process may become intractable for very large data sets and you may need to use distributed computing tools such as Databricks. There are other hyperparameter tuning tools that are useful to know as well. For example, GridSearchCV performs an exhaustive search on the entire grid. This means that every possible combination of parameters gets tested. This method is a good option if you have sufficient computational power.
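To get a feel for what "exhaustive" means, the short sketch below counts the combinations in a grid the same size as the one defined earlier, using only the standard library. The specific values matter less than how many options each parameter has; the three-fold setting mirrors the cv value used above.

```python
from itertools import product

# A grid with the same number of options per parameter as the one defined earlier
param_grid = {
    "n_estimators": [50, 100, 200],
    "max_features": ["sqrt", "log2", None],
    "max_depth": [10, 15, 20, 25, 30, None],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
    "bootstrap": [True, False],
}
cv_folds = 3

# An exhaustive search tries every combination, once per cross-validation fold
n_combinations = len(list(product(*param_grid.values())))
grid_search_fits = n_combinations * cv_folds

# A randomized search with n_iter=3 only samples three combinations
randomized_fits = 3 * cv_folds

print("Combinations in the grid:", n_combinations)
print("Model fits for an exhaustive search:", grid_search_fits)
print("Model fits for the randomized search:", randomized_fits)
```

This grid holds 972 combinations, so an exhaustive three-fold search needs 2,916 model fits compared with nine for the randomized search shown earlier.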

Get Started With Model Building Now

Having a good understanding of which tools are available for building robust machine learning models is a skill every data scientist should have. Being able to prepare data for training and testing, to select features, and to tune model parameters is necessary for building stable models whose predictions are reliable. Further, having these tools in your back pocket can save significant labor hours since these methods automate what would otherwise be done manually. These techniques, when used correctly, can reduce the risk of model deterioration that can cost companies millions of dollars down the road.

Understanding how to appropriately set up model testing is also vital for ensuring that you don’t overfit your models. For example, if you don’t correctly split your data for training and testing, your model tests can give you a false sense of model accuracy, which can be very expensive for a company. In the case of churn, if your model is deceptively accurate, you may incorrectly target customers with ads and discounts who aren’t actually likely to churn. This can result in millions lost in ad dollars.

To reduce the risk of overfitting and overestimating model performance, it is crucial to have a hold-out test set (like the one we generate from the randomized train-test split) on which we perform a single test after model tuning and feature selection have been done on the training data. Further, the cross-validation methods give us a good understanding of how stable our models are both in terms of model tuning and feature selection. Cross-validation allows us to see how performance varies across multiple tests. Ideally, we’d like to select the features and model parameters that give the best performance with the lowest variance in model performance. Low variance in model performance means that model predictions are more reliable and lower risk.

Feature selection is also very important since it can help filter down a potentially large number of inputs. No matter how accurate a model is, it will be very difficult to interpret how the values for 1,000 features, for example, determine whether or not a customer will churn. Feature selection, along with domain expertise, can help data scientists select and interpret the most important features for predicting an outcome.

Hyperparameter tuning is also an essential step required for achieving optimal model performance. While default parameter values provided by Scikit-learn machine learning packages typically provide decent performance, model tuning is required in order to achieve very accurate models. For example, with churn, the more accurately you can target customers likely to leave a company, the less money is lost to incorrectly targeted customers and the more you increase the customer lifetime value of customers at high risk of leaving.

Having a strong familiarity with tools available for setting up model testing, selecting features and performing model tuning is an invaluable skill set for data scientists in any industry. Having this knowledge can help data scientists build robust and reliable models that can add significant value to a company, resulting in savings of money and labor as well as increased company profits. The code from this post is available on GitHub.