Most 3D-printed objects are prototypes or one-off creations, in large part because 3D printing is more finicky than traditional manufacturing.

Because the process works by adding layers of material atop one another, subtle changes in temperature or material quality can result in imperfections and hours of lost work. Inkbit, a Boston-area 3D printing company, is using machine vision and artificial intelligence to help its equipment course-correct.

Javier Ramos, co-founder and director of hardware at Inkbit, said Inkbit’s machine vision technology scans objects as they are being printed, relying on AI to correct any mistakes. He imagines a future where Inkbit’s tech is used on every factory floor, printing millions of products faster and more cheaply than traditional manufacturing processes ever could.

“One of the many gating issues to getting 3D printing to be a production technology is around reliability,” Ramos said. “So we were looking at how adding vision and sensing allows us to make the process more reliable. Once you make something reliable, you can scale it to industrial scales.”

We spoke to Ramos about the challenges of creating a 3D printer that checks its own work.

Born at MIT

Inkbit spun out of the Computer Science and Artificial Intelligence Laboratory at MIT in 2017, and today says it’s the first 3D printing firm to use machine vision and AI to self-correct its printed products.

The firm has about three dozen business customers, most of them in the medical, life science and industrial fields, Ramos said. Inkbit recently entered a public partnership with consumer goods firm Johnson & Johnson.

In early November, Inkbit raised $12 million in an equity financing round led by 3D printer maker Stratasys and DSM Venturing, the venture arm of materials company DSM. Ramos said the funds will be used to develop more functional materials for the firm to print.

“Having a great, repeatable process is half the story,” Ramos said. “The other half is having great materials that will allow you to pursue very high-value applications.”

How it works

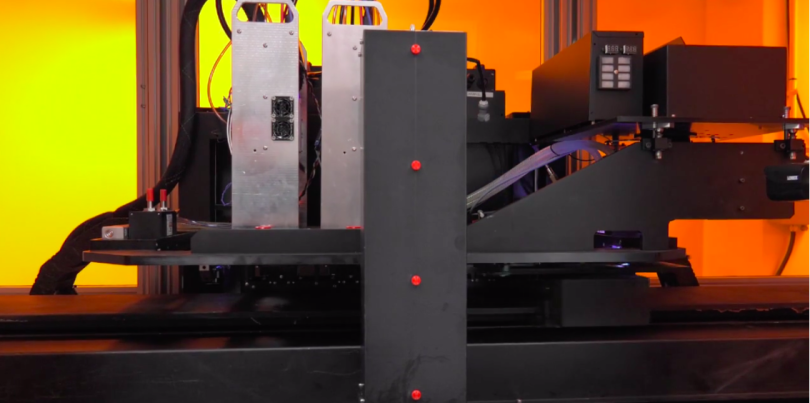

The firm uses a printing process similar to a home inkjet printer, but instead of depositing ink on paper, Inkbit’s printer deposits tiny droplets of liquid photopolymer to build ultrathin layers, one on top of another. Ultraviolet light then hardens, or “cures,” each layer.

A machine vision system then scans each layer and reports the data to an AI system that monitors how that layer was made, adjusting the next layer to compensate for any spots where too little or too much material was deposited. Inkbit prints a new layer of material every four seconds, Ramos said.
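Inkbit has not published its control software, so the following is only a minimal Python sketch of the general idea of layer-by-layer feedback, under assumptions of our own: the scan is reduced to one height value per grid cell, and the printer can vary how much material it deposits in each cell. The function `plan_next_layer` and the constants `NOMINAL_LAYER_UM` and `MAX_LAYER_UM` (and their values) are hypothetical.

```python
# Hypothetical per-layer feedback sketch (not Inkbit's code).
# Assumptions: the scan is reduced to one height per grid cell, and the
# printer can vary how much material it deposits in each cell.

NOMINAL_LAYER_UM = 20.0  # hypothetical nominal layer thickness, micrometres
MAX_LAYER_UM = 40.0      # hypothetical most material one pass can deposit

def plan_next_layer(target_heights, measured_heights,
                    nominal=NOMINAL_LAYER_UM, max_layer=MAX_LAYER_UM):
    """Return the thickness to deposit in each cell on the next pass.

    target_heights: where each cell's top surface should be by now.
    measured_heights: where the scan says it actually is.
    A cell that is too low gets extra material; one that is too high
    gets less, never below zero or above what one pass can deliver.
    """
    plan = []
    for target, measured in zip(target_heights, measured_heights):
        error = target - measured  # > 0: too little material, < 0: too much
        thickness = nominal + error
        plan.append(min(max(thickness, 0.0), max_layer))
    return plan

# Usage: four cells that should all be at 100 micrometres.
print(plan_next_layer([100.0] * 4, [100.0, 95.0, 104.0, 70.0]))
# [20.0, 25.0, 16.0, 40.0]
```

The last cell is 30 micrometres short, but the hypothetical per-pass limit clamps it to 40, so in this sketch it would take more than one layer to catch up.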

He said building the machine vision system took years, and that it was the principal challenge Inkbit faced in building a 3D printing process that could scan quickly and at high resolution while handling materials with many different optical properties.

“Our current solution is very simple, but that wasn’t the case in the beginning,” Ramos said. “We were working with way more complex technologies and converging to something simple is difficult. Getting there came as a systematic elimination of different possibilities.”

Designing a versatile 3D scanning process

One of the biggest challenges in creating a good 3D scanner is accounting for variability in material color, opacity and shine. Today, Inkbit’s scanner can handle materials in every color under the sun. Ramos said its ability to cope with such varied optical properties is the result of constant testing.

“You might have a range of optical properties that ranges from black to red to blue. Also, you might have parts that are translucent or transparent, which traditionally have been very difficult to scan,” Ramos said. “And now doing this in real time, with very high robustness, and very different optical properties, is very challenging.”

Handling all of these colors and optical properties depended on the machine vision, or 3D scanning, system that Inkbit’s engineers built.

Inkbit’s engineers initially planned to build a shape-from-specularity system into the scanner, an approach that exploits the way a part’s surface reflects light to reconstruct its shape. Ramos said this approach works well for shiny objects, but not for dark ones that absorb light.

“If we’re printing a black part, which, a lot of our customers want black components, then that wouldn’t work,” Ramos said.

Engineers then tried basic laser profilometry, which essentially meant shining a laser line onto the part being printed and inferring its shape from how the line shifted as it fell across the surface. This is a popular technique for scanning pieces of metal, Ramos said, but features on the part cast shadows that partially blocked the engineers’ view. It also didn’t scan translucent materials well, he said.

“Imagine something like wax or milk: the reflection actually comes from the surface but also the subsurface. So you might get reflections from the bottom, or the inside, of the part,” Ramos said. “It’s typically used for scanning things that reflect and things that don’t have a lot of subsurface scattering.”
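The geometry behind basic laser profilometry (laser triangulation) fits in a few lines of Python. This is a textbook simplification, not Inkbit’s scanner: it assumes a camera looking straight down and a laser line arriving at a known angle from vertical, so a surface raised by height h shifts the observed line sideways by h * tan(angle). The function name and parameters are hypothetical; `None` marks image columns where no line was detected, which is how a shadowed region shows up.

```python
import math

def heights_from_laser_line(line_positions_px, reference_px,
                            pixel_size_um, laser_angle_deg):
    """Convert a detected laser-line profile into surface heights (um).

    line_positions_px: for each image column, the pixel row where the laser
        line was found, or None if nothing was detected (e.g. in shadow).
    reference_px: row where the line falls on the flat, zero-height bed.
    Assumes a camera looking straight down and a laser at laser_angle_deg
    from vertical, so a height h displaces the line by h * tan(angle).
    """
    tan_a = math.tan(math.radians(laser_angle_deg))
    heights = []
    for pos in line_positions_px:
        if pos is None:
            heights.append(None)  # occluded: no measurement for this column
        else:
            shift_um = (pos - reference_px) * pixel_size_um
            heights.append(shift_um / tan_a)
    return heights

# Usage: 5 um pixels, laser at 45 degrees; the last column is in shadow.
profile = heights_from_laser_line([100, 110, None], 100, 5.0, 45.0)
print([None if h is None else round(h, 3) for h in profile])
# [0.0, 50.0, None]
```

The `None` entries are the practical problem Ramos describes: wherever the part shadows the laser, this kind of scanner simply has no data, and subsurface scattering in translucent materials would blur the detected line position.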

The firm then worked to build optical coherence tomography into the scanner, a technique that uses near-infrared light to image both the surface of the part and the layers beneath it. Ramos said the cost and complexity of this system deter Inkbit from using it for every product it prints, but the firm has made it part of its core scanning technology today because it works well for specific materials.

But for most materials, Inkbit’s engineers have settled on a variation of basic laser profilometry as their primary scanning mechanism. Instead of a laser, Ramos said, Inkbit’s scanner uses light with a wavelength of around 400 nanometers, at the violet-blue end of the visible spectrum. He said Inkbit chose this wavelength because it works particularly well across different colors and optical properties, which allows the scanner to handle parts with a range of textures and colors. The scanner projects a light pattern onto the part and measures the part’s shape from how the pattern deforms.

“We project onto the part an illumination pattern and then we observe with the camera how that illumination pattern changes as we move the part underneath that pattern,” Ramos said. “That allows us to have a very high resolution and detect the 3D shape of the part.”
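Ramos doesn’t describe how Inkbit decodes its projected pattern. One common structured-light technique, swapped in here purely for illustration, is four-step phase shifting: a sinusoidal fringe pattern is captured four times, shifted by a quarter period each time, and the phase recovered at each pixel encodes how far the part’s surface displaced the pattern. The sketch assumes an ideal sinusoidal pattern; `um_per_radian` is a hypothetical calibration constant, and all function names are illustrative.

```python
import math

def wrapped_phase(i0, i1, i2, i3):
    """Recover the fringe phase at one pixel from four captures.

    Assumes each capture follows I_k = A + B*cos(phi + k*pi/2), k = 0..3,
    so I3 - I1 = 2B*sin(phi) and I0 - I2 = 2B*cos(phi). The background A
    and contrast B cancel out; the result lies in (-pi, pi].
    """
    return math.atan2(i3 - i1, i0 - i2)

def height_from_phase(phi_object, phi_reference, um_per_radian):
    """Convert the phase shift caused by the part into a height (um).

    um_per_radian is a hypothetical calibration constant. The difference
    is re-wrapped into (-pi, pi], so only heights within one fringe
    period are resolved (no phase unwrapping is done here).
    """
    diff = phi_object - phi_reference
    delta = math.atan2(math.sin(diff), math.cos(diff))
    return delta * um_per_radian

def simulate_pixel(phi, a=0.5, b=0.4):
    """Synthetic intensities for a pixel whose true phase is phi."""
    return [a + b * math.cos(phi + k * math.pi / 2) for k in range(4)]

# Usage: recover a known phase, then turn a 0.8 rad shift into height.
phi = wrapped_phase(*simulate_pixel(1.0))
print(round(phi, 6))                                 # 1.0
print(round(height_from_phase(phi, 0.2, 25.0), 6))  # 20.0
```

Because the background and contrast terms cancel, this kind of method is fairly tolerant of parts that are darker or brighter overall, though a real system would still need phase unwrapping and careful calibration.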

Processing data at the speed of, um, light

After the scanner collects data on how the layer was printed, that data is processed by Inkbit’s computer system.

Inkbit uses specialized graphics processing units (GPUs) in its computer system to process the scanning data quickly.

“We print, we scan, and process the entire scan, and then decide how the next layer should be printed,” Ramos said. “So all your processing, and all your data generation, has to happen in the scale of hundreds of milliseconds. So fractions of a second. That’s one of the key challenges: Everything needs to happen very quickly.”

Once the data is processed, Inkbit’s AI system compares the scan with a model of what the scan should look like. If the system detects deviations, it automatically adjusts the next layer to correct them.
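As a rough illustration of the comparison step, here is a minimal Python sketch that checks a scanned height map against the expected one and flags cells outside a tolerance. Representing the scan as a 2D grid of heights in micrometres, along with the `find_deviations` function and its `tolerance_um` parameter, are our assumptions rather than details Inkbit has disclosed.

```python
def find_deviations(scan, expected, tolerance_um):
    """Compare a scanned height map with the expected one.

    scan, expected: 2D lists of heights in micrometres, same shape.
    Returns (row, col, deviation) for every cell where
    |scan - expected| exceeds tolerance_um. A positive deviation means
    too much material; a negative one means too little.
    """
    out = []
    for r, (scan_row, exp_row) in enumerate(zip(scan, expected)):
        for c, (s, e) in enumerate(zip(scan_row, exp_row)):
            d = s - e
            if abs(d) > tolerance_um:
                out.append((r, c, d))
    return out

# Usage: a 2x2 patch that should be flat at 100 micrometres.
scan = [[100.0, 103.0],
        [94.0, 100.5]]
expected = [[100.0, 100.0],
            [100.0, 100.0]]
print(find_deviations(scan, expected, tolerance_um=2.0))
# [(0, 1, 3.0), (1, 0, -6.0)]
```

The flagged cells are what a correction step would act on, depositing less material over the high spot and more over the low one on the next pass.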

Simple AI solutions can be better than complex ones

Inkbit tested several AI systems before settling on its current process.

The firm tried deep learning approaches, including convolutional neural networks, but they required a lot of data and computational power before they could accurately tell the machine what the next layer should look like.

“A lot of these data sets can get really, really, really big, which essentially means that you need to run a lot of these training models in a computer cluster or in a server for hours or days to get this model to converge,” Ramos said.

Inkbit ended up building its own system, which Ramos said is much simpler than deep learning models such as convolutional neural networks. He said the model is built on physical models of reality, or an idea of how the process should behave. That constrains the model, which means the AI system has fewer parameters to learn.

“We wanted something that could be quickly trained in a matter of minutes or hours,” Ramos said. “We wanted to be able to do it on a normal computer and not have to rely on more specialized computer clusters or supercomputers.”
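Inkbit hasn’t detailed its model, but the benefit Ramos describes, fewer parameters because physics constrains the model, can be shown with a toy example. Suppose, hypothetically, that the thickness a layer adds is proportional to the volume of material deposited per unit area, t = k * V / A, with a single unknown gain k that absorbs effects such as spreading and shrinkage. Fitting k is then a one-parameter least-squares problem with a closed-form answer. The function names, units and data below are illustrative assumptions.

```python
def fit_deposition_gain(volumes_pl, thicknesses_um, cell_area_um2):
    """Fit the single gain k in t = k * V / A by least squares.

    volumes_pl: material deposited per cell, in picolitres
        (1 pL = 1000 cubic micrometres).
    thicknesses_um: measured layer thickness for each volume.
    cell_area_um2: area of one cell in square micrometres.
    For a line through the origin, k = sum(x*t) / sum(x*x).
    """
    xs = [v * 1000.0 / cell_area_um2 for v in volumes_pl]  # ideal thickness
    num = sum(x * t for x, t in zip(xs, thicknesses_um))
    den = sum(x * x for x in xs)
    return num / den

def volume_for_thickness(target_um, k, cell_area_um2):
    """Invert the fitted model: picolitres needed for a target thickness."""
    return target_um * cell_area_um2 / (k * 1000.0)

# Usage: 20 x 20 um cells; measured layers come out 90% of ideal.
k = fit_deposition_gain([4.0, 8.0, 12.0], [9.0, 18.0, 27.0], 400.0)
print(round(k, 6))                                    # 0.9
print(round(volume_for_thickness(18.0, k, 400.0), 6))  # 8.0
```

A convolutional network might have millions of weights to fit from data; in this toy model the physics supplies the model’s shape and only k has to be learned, which takes a trivial amount of computation on an ordinary computer.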